Uncover hidden connections in unstructured financial data with Amazon Bedrock and Amazon Neptune

In asset management, portfolio managers need to closely monitor companies in their investment universe to identify risks and opportunities, and to guide investment decisions. Tracking direct events like earnings reports or credit downgrades is straightforward: you can set up alerts to notify managers of news containing company names. However, detecting second- and third-order impacts arising from events at suppliers, customers, partners, or other entities in a company’s ecosystem is challenging.

For example, a supply chain disruption at a key vendor would likely have a negative impact on downstream manufacturers. Or the loss of a top customer for a major client poses a demand risk for the supplier. Very often, such events fail to make headlines featuring the impacted company directly, but they are still important to pay attention to. In this post, we demonstrate an automated solution combining knowledge graphs and generative artificial intelligence (AI) to surface such risks by cross-referencing relationship maps with real-time news.

Broadly, this entails two steps: First, building the intricate relationships between companies (customers, suppliers, directors) into a knowledge graph. Second, using this graph database along with generative AI to detect second- and third-order impacts from news events. For instance, this solution can highlight that delays at a parts supplier may disrupt production for downstream auto manufacturers in a portfolio even though none are directly referenced.

With AWS, you can deploy this solution in a serverless, scalable, and fully event-driven architecture. This post demonstrates a proof of concept built on two key AWS services well suited for graph knowledge representation and natural language processing: Amazon Neptune and Amazon Bedrock. Neptune is a fast, reliable, fully managed graph database service that makes it straightforward to build and run applications that work with highly connected datasets. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Overall, this prototype demonstrates the art of the possible with knowledge graphs and generative AI: deriving signals by connecting disparate dots. The takeaway for investment professionals is the ability to stay on top of developments closer to the signal while avoiding noise.

Build the knowledge graph

The first step in this solution is building a knowledge graph, and a valuable yet often overlooked data source for knowledge graphs is company annual reports. Because official corporate publications undergo scrutiny before release, the information they contain is likely to be accurate and reliable. However, annual reports are written in an unstructured format meant for human reading rather than machine consumption. To unlock their potential, you need a way to systematically extract and structure the wealth of facts and relationships they contain.

With generative AI services like Amazon Bedrock, you now have the capability to automate this process. You can take an annual report and trigger a processing pipeline to ingest the report, break it down into smaller chunks, and apply natural language understanding to pull out salient entities and relationships.
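The chunking step of such a pipeline can be sketched in a few lines of Python. This is a minimal illustration rather than the prototype's code: the function name `chunk_words` and the plain whitespace split are assumptions, and the 1,000-word default only mirrors the approximate chunk size mentioned in the workflow steps.

```python
def chunk_words(text: str, max_words: int = 1000) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()  # whitespace split; a real pipeline may prefer sentence boundaries
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


# Hypothetical report text of 2,500 words
report_text = "word " * 2500
chunks = chunk_words(report_text)
print([len(chunk.split()) for chunk in chunks])  # [1000, 1000, 500]
```

Fixed-size word chunks are the simplest choice. Splitting on paragraph or sentence boundaries would be a reasonable refinement if relationships are often cut in half at chunk edges.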

For example, a sentence stating that “[Company A] expanded its European electric delivery fleet with an order for 1,800 electric vans from [Company B]” would allow Amazon Bedrock to identify the following:

- [Company A] as a customer

- [Company B] as a supplier

- A supplier relationship between [Company A] and [Company B]

- Relationship details of “supplier of electric delivery vans”

Extracting such structured data from unstructured documents requires providing carefully crafted prompts to large language models (LLMs) so they can analyze text to pull out entities like companies and people, as well as relationships such as customers, suppliers, and more. The prompts contain clear instructions on what to look out for and the structure to return the data in. By repeating this process across the entire annual report, you can extract the relevant entities and relationships to construct a rich knowledge graph.
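As a rough sketch of what such a prompt and its output handling might look like, the following Python builds an extraction prompt and validates a JSON reply. The prompt wording, the JSON keys, and the function names are all illustrative assumptions, not the prototype's actual prompts. The relationship labels follow the ones listed in the workflow description, and the call to the model itself is deliberately left out.

```python
import json

# Relationship labels taken from the workflow description in this post
ALLOWED_RELATIONSHIPS = {"customer", "supplier", "partner", "competitor", "director"}

# Hypothetical prompt wording; a production prompt would need tuning and testing
PROMPT_TEMPLATE = """You are analyzing an excerpt from the annual report of {main_entity}.
List every company or person mentioned and its relationship to {main_entity}.
Allowed relationship values: {relationships}.
Respond with a JSON array only. Each element must have the keys
"name", "type" ("company" or "person"), "relationship", and "details".

<excerpt>
{chunk}
</excerpt>"""


def build_extraction_prompt(main_entity: str, chunk: str) -> str:
    """Fill the template for one text chunk."""
    return PROMPT_TEMPLATE.format(
        main_entity=main_entity,
        relationships=", ".join(sorted(ALLOWED_RELATIONSHIPS)),
        chunk=chunk,
    )


def parse_extraction(raw: str) -> list[dict]:
    """Keep only well-formed records that use an allowed relationship."""
    try:
        records = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(records, list):
        return []
    valid = []
    for rec in records:
        if not isinstance(rec, dict):
            continue
        name, rel = rec.get("name"), rec.get("relationship")
        if isinstance(name, str) and isinstance(rel, str) and rel in ALLOWED_RELATIONSHIPS:
            valid.append(rec)
    return valid


# A made-up model reply: the second record is dropped by validation
sample_reply = json.dumps([
    {"name": "Company B", "type": "company", "relationship": "supplier",
     "details": "supplier of electric delivery vans"},
    {"name": "clients", "type": "company", "relationship": "unknown", "details": ""},
])
print(parse_extraction(sample_reply))
```

Validating the reply before it reaches the graph matters because a model can return malformed JSON or labels outside the requested set. Discarding those records is one simple policy; retrying the chunk is another.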

However, before committing the extracted information to the knowledge graph, you need to first disambiguate the entities. For instance, there may already be another ‘[Company A]’ entity in the knowledge graph, but it could represent a different organization with the same name. Amazon Bedrock can reason over and compare attributes such as business focus area, industry, and revenue-generating industries, as well as relationships to other entities, to determine whether the two entities are actually distinct. This prevents inaccurately merging unrelated companies into a single entity.
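Before the model can compare attributes, something has to supply the list of existing entities worth comparing against. The sketch below shows one possible way to shortlist candidates by normalized name similarity. It is an assumption made for illustration, not how the prototype does it: the helper names, the suffix list, and the 0.85 threshold are all hypothetical, and the final same-or-different decision would still be left to the model's reasoning over attributes and relationships.

```python
import re
from difflib import SequenceMatcher

# Common legal suffixes to ignore when comparing names (illustrative, not exhaustive)
_SUFFIXES = re.compile(r"\b(inc|corp|corporation|ltd|limited|llc|plc|co)\b\.?", re.IGNORECASE)


def normalize(name: str) -> str:
    """Lowercase, drop legal suffixes, and collapse punctuation to spaces."""
    name = _SUFFIXES.sub("", name.lower())
    return re.sub(r"[^a-z0-9]+", " ", name).strip()


def shortlist(name: str, existing: list[dict], threshold: float = 0.85) -> list[dict]:
    """Return existing entities whose normalized name is similar, best match first."""
    target = normalize(name)
    scored = []
    for entity in existing:
        score = SequenceMatcher(None, target, normalize(entity["name"])).ratio()
        if score >= threshold:
            scored.append((score, entity))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entity for _, entity in scored]


# Hypothetical entities already stored in the knowledge graph
existing_entities = [
    {"id": "n1", "name": "ACME Corporation", "industry": "logistics"},
    {"id": "n2", "name": "Apex Ltd", "industry": "retail"},
]
candidates = shortlist("Acme Corp.", existing_entities)
print([c["id"] for c in candidates])  # ['n1']
```

A name match alone is not enough to merge two entities. The shortlisted candidates, together with their attributes, would be passed to the model so it can decide whether the new entity is the same organization or a different one with the same name.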

After disambiguation is complete, you can reliably add new entities and relationships into your Neptune knowledge graph, enriching it with the facts extracted from annual reports. Over time, the ingestion of reliable data and the integration of more reliable data sources will help build a comprehensive knowledge graph that can reveal insights through graph queries and analytics.

This automation enabled by generative AI makes it feasible to process thousands of annual reports and unlocks a valuable asset for knowledge graph curation that would otherwise go untapped because of the prohibitively high manual effort needed.

The following screenshot shows an example of the visual exploration that’s possible in a Neptune graph database using the Graph Explorer tool.

Process news articles

The next step of the solution is automatically enriching portfolio managers’ news feeds and highlighting articles relevant to their interests and investments. For the news feed, portfolio managers can subscribe to any third-party news provider through AWS Data Exchange or another news API of their choice.

When a news article enters the system, an ingestion pipeline is invoked to process the content. Using techniques similar to the processing of annual reports, Amazon Bedrock extracts entities, attributes, and relationships from the news article. These are then disambiguated against the knowledge graph to identify the corresponding entity in the graph.

The knowledge graph contains connections between companies and people, and by linking article entities to existing nodes, you can identify whether any subjects are within two hops of the companies that the portfolio manager has invested in or is interested in. Finding such a connection indicates the article may be relevant to the portfolio manager, and because the underlying data is represented in a knowledge graph, it can be visualized to help the portfolio manager understand why and how this context is relevant. In addition to identifying connections to the portfolio, you can also use Amazon Bedrock to perform sentiment analysis on the entities referenced.
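The hop-limited search described here can be illustrated with a small breadth-first search over an in-memory graph. This is only a stand-in to show the logic: in the solution itself the lookup would be a query against Neptune, and the company names, `build_graph`, and `find_paths` below are hypothetical.

```python
from collections import deque


def build_graph(edges):
    """Build an undirected adjacency map from (a, b) relationship pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def find_paths(graph, start, interested, max_hops=2):
    """Return one shortest path from start to each interested entity within max_hops."""
    paths = {}
    visited = {start}
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        # The start node itself is not reported as a connection
        if node in interested and node != start:
            paths[node] = path
        if len(path) - 1 == max_hops:
            continue  # hop limit reached, do not expand further
        for neighbor in sorted(graph.get(node, ())):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return paths


# Made-up relationships: PartsCo supplies AutoMaker, and so on
graph = build_graph([
    ("PartsCo", "AutoMaker"),
    ("AutoMaker", "DealerGroup"),
    ("DealerGroup", "FinanceCo"),
])
tracked = {"AutoMaker", "DealerGroup", "FinanceCo"}
print(find_paths(graph, "PartsCo", tracked, max_hops=2))
```

With a limit of two hops, an article about PartsCo is linked to AutoMaker (one hop) and DealerGroup (two hops), while FinanceCo at three hops is ignored. Returning the full path, not just a yes or no, is what lets the connection be shown to the portfolio manager.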

The final output is an enriched news feed surfacing articles likely to impact the portfolio manager’s areas of interest and investments.

Solution overview

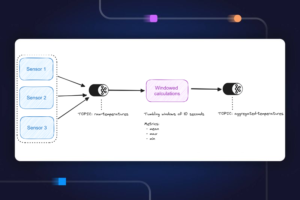

The general structure of the answer seems like the next diagram.

The workflow consists of the following steps:

1. A user uploads official reports (in PDF format) to an Amazon Simple Storage Service (Amazon S3) bucket. The reports should be officially published reports (as opposed to news and tabloids) to minimize the inclusion of inaccurate data in your knowledge graph.

2. The S3 event notification invokes an AWS Lambda function, which sends the S3 bucket and file name to an Amazon Simple Queue Service (Amazon SQS) queue. The First-In-First-Out (FIFO) queue makes sure that the report ingestion process is performed sequentially to reduce the likelihood of introducing duplicate data into your knowledge graph.

3. An Amazon EventBridge time-based event runs every minute to start the run of an AWS Step Functions state machine asynchronously.

4. The Step Functions state machine runs through a series of tasks to process the uploaded document by extracting key information and inserting it into your knowledge graph:

   - Receive the queue message from Amazon SQS.

   - Download the PDF report file from Amazon S3, split it into multiple smaller text chunks (approximately 1,000 words) for processing, and store the text chunks in Amazon DynamoDB.

   - Use Anthropic’s Claude 3 Sonnet on Amazon Bedrock to process the first few text chunks to determine the main entity that the report is referring to, together with relevant attributes (such as industry).

   - Retrieve the text chunks from DynamoDB and, for each text chunk, invoke a Lambda function to extract entities (such as company or person) and their relationships (customer, supplier, partner, competitor, or director) to the main entity using Amazon Bedrock.

   - Consolidate all extracted information.

   - Filter out noise and irrelevant entities (for example, generic terms such as “consumers”) using Amazon Bedrock.

   - Use Amazon Bedrock to perform disambiguation by reasoning over the extracted information against the list of similar entities from the knowledge graph. If the entity doesn’t exist, insert it. Otherwise, use the entity that already exists in the knowledge graph. Insert all relationships extracted.

   - Clean up by deleting the SQS queue message and the S3 file.

5. A user accesses a React-based web application to view the news articles, which are supplemented with entity, sentiment, and connection path information.

6. Using the web application, the user specifies the number of hops (default N=2) on the connection path to monitor.

7. Using the web application, the user specifies the list of entities to track.

8. To generate fictional news, the user chooses Generate Sample News to generate 10 sample financial news articles with random content to be fed into the news ingestion process. Content is generated using Amazon Bedrock and is purely fictional.

9. To download actual news, the user chooses Download Latest News to download the top news happening today (powered by NewsAPI.org).

10. The news file (TXT format) is uploaded to an S3 bucket. Steps 8 and 9 upload news to the S3 bucket automatically, but you can also build integrations to your preferred news provider, such as AWS Data Exchange or any third-party news provider, to drop news articles as files into the S3 bucket. News data file content should be formatted as `<date>{dd mmm yyyy}</date><title>{title}</title><text>{news content}</text>`.

11. The S3 event notification sends the S3 bucket or file name to Amazon SQS (standard), which invokes multiple Lambda functions to process the news data in parallel:

   - Use Amazon Bedrock to extract entities mentioned in the news together with any related information, relationships, and sentiment of the mentioned entity.

   - Check against the knowledge graph and use Amazon Bedrock to perform disambiguation by reasoning over the available information from the news and from within the knowledge graph to identify the corresponding entity.

   - After the entity has been located, search for and return any connection paths to entities marked with `INTERESTED=YES` in the knowledge graph that are within N=2 hops away.

12. The web application auto refreshes every 1 second to pull the latest set of processed news to display.
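If you build your own integration that drops news files into the S3 bucket, it can help to validate the file content before upload. The following is a minimal sketch of a parser for the news file format described in the steps above. It assumes the three tags are `date`, `title`, and `text`, and that `dd mmm yyyy` means a two-digit day, an abbreviated English month name, and a four-digit year (for example, 05 Mar 2024). The function name `parse_news` is hypothetical.

```python
import re
from datetime import date, datetime

# Assumed layout: <date>...</date><title>...</title><text>...</text>
NEWS_PATTERN = re.compile(
    r"<date>(?P<date>.*?)</date>\s*<title>(?P<title>.*?)</title>\s*<text>(?P<text>.*?)</text>",
    re.DOTALL,
)


def parse_news(raw: str) -> dict:
    """Parse one news file into a dict with date, title, and text."""
    match = NEWS_PATTERN.search(raw)
    if match is None:
        raise ValueError("news file does not match the expected format")
    # %b is locale-dependent; this assumes an English or C locale
    published = datetime.strptime(match["date"].strip(), "%d %b %Y").date()
    return {
        "date": published,
        "title": match["title"].strip(),
        "text": match["text"].strip(),
    }


# Made-up article content
sample = "<date>05 Mar 2024</date><title>Supplier delay</title><text>Parts shipments slipped.</text>"
article = parse_news(sample)
print(article["date"].isoformat(), article["title"])  # 2024-03-05 Supplier delay
```

Rejecting malformed files early keeps bad input from consuming model invocations further down the pipeline.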

Deploy the prototype

You can deploy the prototype solution and start experimenting yourself. The prototype is available from GitHub and includes details on the following:

- Deployment prerequisites

- Deployment steps

- Cleanup steps

Summary

This post demonstrated a proof of concept solution to help portfolio managers detect second- and third-order risks from news events, without direct references to companies they track. By combining a knowledge graph of intricate company relationships with real-time news analysis using generative AI, downstream impacts can be highlighted, such as production delays from supplier hiccups.

Although it’s only a prototype, this solution shows the promise of knowledge graphs and language models to connect dots and derive signals from noise. These technologies can aid investment professionals by revealing risks faster through relationship mappings and reasoning. Overall, this is a promising application of graph databases and AI that warrants exploration to augment investment analysis and decision-making.

If this example of generative AI in financial services is of interest to your business, or you have a similar idea, reach out to your AWS account manager, and we will be delighted to explore further with you.

About the Author

Xan Huang is a Senior Solutions Architect with AWS and is based in Singapore. He works with major financial institutions to design and build secure, scalable, and highly available solutions in the cloud. Outside of work, Xan spends most of his free time with his family and getting bossed around by his 3-year-old daughter. You can find Xan on LinkedIn.
