Pushing RL Boundaries: Integrating Foundational Fashions, e.g. LLMs and VLMs, into Reinforcement Studying | by Elahe Aghapour & Salar Rahili | Apr, 2024

In-Depth Exploration of Integrating Foundational Fashions similar to LLMs and VLMs into RL Coaching Loop

Authors: Elahe Aghapour, Salar Rahili

Overview:

With the rise of the transformer structure and high-throughput compute, coaching foundational fashions has became a sizzling matter not too long ago. This has led to promising efforts to both combine or practice foundational fashions to boost the capabilities of reinforcement studying (RL) algorithms, signaling an thrilling route for the sector. Right here, we’re discussing how foundational fashions may give reinforcement studying a serious increase.

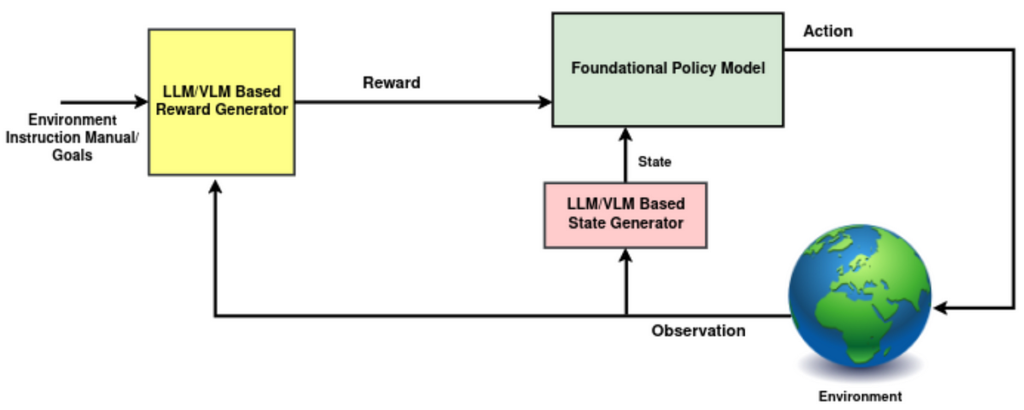

Earlier than diving into the newest analysis on how foundational fashions may give reinforcement studying a serious increase, let’s interact in a brainstorming session. Our purpose is to pinpoint areas the place pre-trained foundational fashions, notably Massive Language Fashions (LLMs) or Imaginative and prescient-Language Fashions (VLMs), may help us, or how we’d practice a foundational mannequin from scratch. A helpful method is to look at every factor of the reinforcement studying coaching loop individually, to establish the place there is likely to be room for enchancment:

1- Setting: Provided that pre-trained foundational fashions perceive the causal relationships between occasions, they are often utilized to forecast environmental modifications ensuing from present actions. Though this idea is intriguing, we’re not but conscious of any particular research that target it. There are two main causes holding us again from exploring this concept additional for now.

- Whereas the reinforcement studying coaching course of calls for extremely correct predictions for the subsequent step observations, pre-trained LLMs/VLMs haven’t been immediately skilled on datasets that allow such exact forecasting and thus fall quick on this side. It’s vital to notice, as we highlighted in our previous post, {that a} high-level planner, notably one utilized in lifelong studying situations, may successfully incorporate a foundational mannequin.

- Latency in surroundings steps is a important issue that may constrain the RL algorithm, particularly when working inside a set funds for coaching steps. The presence of a really giant mannequin that introduces important latency may be fairly restrictive. Observe that whereas it is likely to be difficult, distillation right into a smaller community is usually a resolution right here.

2- State (LLM/VLM Primarily based State Generator): Whereas specialists typically use the phrases remark and state interchangeably, there are distinctions between them. A state is a complete illustration of the surroundings, whereas an remark could solely present partial info. In the usual RL framework, we don’t typically talk about the precise transformations that extract and merge helpful options from observations, previous actions, and any inside data of the surroundings to supply “state”, the coverage enter. Such a change might be considerably enhanced by using LLMs/VLMs, which permit us to infuse the “state” with broader data of the world, physics, and historical past (discuss with Fig. 1, highlighted in pink).

3- Coverage (Foundational Coverage Mannequin): Integrating foundational fashions into the coverage, the central decision-making element in RL, may be extremely helpful. Though using such fashions to generate high-level plans has confirmed profitable, reworking the state into low-level actions has challenges we’ll delve into later. Thankfully, there was some promising analysis on this space not too long ago.

4- Reward (LLM/VLM Primarily based Reward Generator): Leveraging foundational fashions to extra precisely assess chosen actions inside a trajectory has been a main focus amongst researchers. This comes as no shock, provided that rewards have historically served because the communication channel between people and brokers, setting objectives and guiding the agent in direction of what’s desired.

- Pre-trained foundational fashions include a deep data of the world, and injecting this sort of understanding into our decision-making processes could make these selections extra in tune with human needs and extra more likely to succeed. Furthermore, utilizing foundational fashions to guage the agent’s actions can rapidly trim down the search area and equip the agent with a head begin in understanding, versus ranging from scratch.

- Pre-trained foundational fashions have been skilled on internet-scale information generated largely by people, which has enabled them to know worlds equally to people. This makes it doable to make use of foundational fashions as cost-effective annotators. They’ll generate labels or assess trajectories or rollouts on a big scale.

1- Foundational fashions in reward

It’s difficult to make use of foundational fashions to generate low degree management actions as low degree actions are extremely depending on the setting of the agent and are underrepresented in foundational fashions’ coaching dataset. Therefore, the inspiration mannequin utility is usually centered on excessive degree plans fairly than low degree actions. Reward bridges the hole between high-level planner and low degree actions the place basis fashions can be utilized. Researchers have adopted numerous methodologies integrating basis fashions for reward task. Nevertheless, the core precept revolves round using a VLM/LLM to successfully observe the progress in direction of a subgoal or process.

1.a Assigning reward values based mostly on similarity

Take into account the reward worth as a sign that signifies whether or not the agent’s earlier motion was helpful in transferring in direction of the purpose. A smart technique includes evaluating how intently the earlier motion aligns with the present goal. To place this method into follow, as may be seen in Fig. 2, it’s important to:

– Generate significant embeddings of those actions, which may be finished by way of photographs, movies, or textual content descriptions of the latest remark.

– Generate significant representations of the present goal.

– Assess the similarity between these representations.

Let’s discover the precise mechanics behind the main analysis on this space.

Dense and well-shaped reward features improve the steadiness and coaching velocity of the RL agent. Intrinsic rewards deal with this problem by rewarding the agent for novel states’ exploration. Nevertheless, in giant environments the place many of the unseen states are irrelevant to the downstream process, this method turns into much less efficient. ELLM makes use of background data of LLM to form the exploration. It queries LLM to generate a listing of doable objectives/subgoals given a listing of the agent’s accessible actions and a textual content description of the agent present remark, generated by a state captioner. Then, at every time step, the reward is computed by the semantic similarity, cosine similarity, between the LLM generated purpose and the outline of the agent’s transition.

LiFT has an identical framework but in addition leverages CLIP4Clip-style VLMs for reward task. CLIP4Clip is pre-trained to align movies and corresponding language descriptions by way of contrastive studying. In LiFT, the agent is rewarded based mostly on the alignment rating, cosine similarity, between the duty directions and movies of the agent’s corresponding habits, each encoded by CLIP4CLIP.

UAFM has an identical framework the place the principle focus is on robotic manipulation duties, e.g., stacking a set of objects. For reward task, they measure the similarity between the agent state picture and the duty description, each embedded by CLIP. They finetune CLIP on a small quantity of information from the simulated stacking area to be extra aligned on this use case.

1.b Assigning rewards by way of reasoning on auxiliary duties:

In situations the place the foundational mannequin has the right understanding of the surroundings, it turns into possible to immediately move the observations inside a trajectory to the mannequin, LLM/VLM. This analysis may be finished both by way of easy QA periods based mostly on the observations or by verifying the mannequin’s functionality in predicting the purpose solely by trying on the remark trajectory.

Read and Reward integrates the surroundings’s instruction handbook into reward era by way of two key parts, as may be seen in Fig. 3:

- QA extraction module: it creates a abstract of sport goals and options. This LLM-based module, RoBERTa-large, takes within the sport handbook and a query, and extracts the corresponding reply from the textual content. Questions are centered on the sport goal, and agent-object interplay, recognized by their significance utilizing TF-IDF. For every important object, a query as: “What occurs when the participant hits a <object>?” is added to the query set. A abstract is then shaped with the concatenation of all non-empty question-answer pairs.

- Reasoning module: Throughout gameplay, a rule-based algorithm detects “hit” occasions. Following every “hit” occasion, the LLM based mostly reasoning module is queried with the abstract of the surroundings and a query: “Must you hit a <object of interplay> if you wish to win?” the place the doable reply is restricted to {sure, no}. A “sure” response provides a constructive reward, whereas “no” results in a damaging reward.

EAGER introduces a novel technique for creating intrinsic rewards by way of a specifically designed auxiliary process. This method presents a novel idea the place the auxiliary process includes predicting the purpose based mostly on the present remark. If the mannequin predicts precisely, this means a robust alignment with the meant purpose, and thus, a bigger intrinsic reward is given based mostly on the prediction confidence degree. To realize this purpose, To perform this, two modules are employed:

- Query Era (QG): This element works by masking all nouns and adjectives within the detailed goal offered by the person.

- Query Answering (QA): This can be a mannequin skilled in a supervised method, which takes the remark, query masks, and actions, and predicts the masked tokens.

(P.S. Though this work doesn’t make the most of a foundational mannequin, we’ve included it right here because of its intriguing method, which may be simply tailored to any pre-trained LLM)

1.c Producing reward perform code

Up so far, we’ve mentioned producing reward values immediately for the reinforcement studying algorithms. Nevertheless, working a big mannequin at each step of the RL loop can considerably decelerate the velocity of each coaching and inference. To bypass this bottleneck, one technique includes using our foundational mannequin to generate the code for the reward perform. This enables for the direct era of reward values at every step, streamlining the method.

For the code era schema to work successfully, two key parts are required:

1- A code generator, LLM, which receives an in depth immediate containing all the mandatory info to craft the code.

2- A refinement course of that evaluates and enhances the code in collaboration with the code generator.

Let’s take a look at the important thing contributions for producing reward code:

R2R2S generates reward perform code by way of two most important parts:

- LLM based mostly movement descriptor: This module makes use of a pre-defined template to explain robotic actions, and leverages Massive Language Fashions (LLMs) to know the movement. The Movement Descriptor fills within the template, changing placeholders e.g. “Vacation spot Level Coordinate” with particular particulars, to explain the specified robotic movement inside a pre-defined template.

- LLM based mostly reward coder: this element generates the reward perform by processing a immediate containing: a movement description, a listing of features with their description that LLM can use to generate the reward perform code, an instance code of how the response ought to appear like, and constraints and guidelines the reward perform should observe.

Text2Reward develops a way to generate dense reward features as an executable code inside iterative refinement. Given the subgoal of the duty, it has two key parts:

- LLM-based reward coder: generates reward perform code. Its immediate consists of: an summary of remark and accessible actions, a compact pythonic fashion surroundings to signify the configuration of the objects, robotic, and callable features; a background data for reward perform design (e.g. “reward perform for process X usually features a time period for the space between object x and y”), and a few-shot examples. They assume entry to a pool of instruction, and reward perform pairs that high okay related directions are retrieved as few-shot examples.

- LLM-Primarily based Refinement: as soon as the reward code is generated, the code is executed to establish the syntax errors and runtime errors. These feedbacks are built-in into subsequent prompts to generate extra refined reward features. Moreover, human suggestions is requested based mostly on a process execution video by the present coverage.

Auto MC-Reward has an identical algorithm to Text2Reward, to generate the reward perform code, see Fig. 4. The principle distinction is within the refinement stage the place it has two modules, each LLMs:

- LLM-Primarily based Reward Critic: It evaluates the code and gives suggestions on whether or not the code is self-consistent and freed from syntax and semantic errors.

- LLM-Primarily based Trajectory Analyser: It opinions the historic info of the interplay between the skilled agent and the surroundings and makes use of it to information the modifications of the reward perform.

EUREKA generates reward code with out the necessity for task-specific prompting, predefined reward templates, or predefined few-shot examples. To realize this purpose, it has two phases:

- LLM-based code era: The uncooked surroundings code, the duty, generic reward design and formatting ideas are fed to the LLM as context and LLM returns the executable reward code with a listing of its parts.

- Evolutionary search and refinement: At every iteration, EUREKA queries the LLM to generate a number of i.i.d reward features. Coaching an agent with executable reward features gives suggestions on how nicely the agent is performing. For an in depth and centered evaluation of the rewards, the suggestions additionally consists of scalar values for every element of the reward perform. The LLM takes top-performing reward code together with this detailed suggestions to mutate the reward code in-context. In every subsequent iteration, the LLM makes use of the highest reward code as a reference to generate Ok extra i.i.d reward codes. This iterative optimization continues till a specified variety of iterations has been reached.

Inside these two steps, EUREKA is ready to generate reward features that outperform knowledgeable human-engineered rewards with none process particular templates.

1.d. Practice a reward mannequin based mostly on preferences (RLAIF)

Another technique is to make use of a foundational mannequin to generate information for coaching a reward perform mannequin. The numerous successes of Reinforcement Studying with Human Suggestions (RLHF) have not too long ago drawn elevated consideration in direction of using skilled reward features on a bigger scale. The guts of such algorithms is using a desire dataset to coach a reward mannequin which might subsequently be built-in into reinforcement studying algorithms. Given the excessive value related to producing desire information (e.g., motion A is preferable to motion B) by way of human suggestions, there’s rising curiosity in developing this dataset by acquiring suggestions from an AI agent, i.e. VLM/LLM. Coaching a reward perform, utilizing AI-generated information and integrating it inside a reinforcement studying algorithm, is called Reinforcement Studying with AI Suggestions (RLAIF).

MOTIF requires entry to a passive dataset of observations with adequate protection. Initially, LLM is queried with a abstract of desired behaviors inside the surroundings and a textual content description of two randomly sampled observations. It then generates the desire, choosing between 1, 2, or 0 (indicating no desire), as seen in Fig. 5. This course of constructs a dataset of preferences between remark pairs. Subsequently, this dataset is used to coach a reward mannequin using preference based RL techniques.

2- Basis fashions as Coverage

Reaching the aptitude to coach a foundational coverage that not solely excels in duties beforehand encountered but in addition possesses the power to cause about and adapt to new duties utilizing previous studying, is an ambition inside the RL neighborhood. Such a coverage would ideally generalize from previous experiences to sort out novel conditions and, by way of environmental suggestions, obtain objectives beforehand unseen with human-like adaptability.

Nevertheless, a number of challenges stand in the best way of coaching such brokers. Amongst these challenges are:

- The need of managing a really giant mannequin, which introduces important latency into the decision-making course of for low-level management actions.

- The requirement to gather an enormous quantity of interplay information throughout a wide selection of duties to allow efficient studying.

- Moreover, the method of coaching a really giant community from scratch utilizing RL introduces further complexities. It is because backpropagation effectivity inherently is weaker in RL in comparison with supervised coaching strategies .

To date, it’s largely been groups with substantial assets and top-notch setups who’ve actually pushed the envelope on this area.

AdA paved the best way for coaching an RL basis mannequin inside the X.Land 2.0 3D surroundings. This mannequin achieves human time-scale adaptation on held-out take a look at duties with none additional coaching. The mannequin’s success is based on three elements:

- The core of the AdA’s studying mechanism is a Transformer-XL structure from 23 to 265 million parameters, employed alongside the Muesli RL algorithm. Transformer-XL takes in a trajectory of observations, actions, and rewards from time t to T and outputs a sequence of hidden states for every time step. The hidden state is utilized to foretell reward, worth, and motion distribution π. The mixture of each long-term and short-term reminiscence is important for quick adaptation. Lengthy-term reminiscence is achieved by way of sluggish gradient updates, whereas short-term reminiscence may be captured inside the context size of the transformer. This distinctive mixture permits the mannequin to protect data throughout a number of process makes an attempt by retaining reminiscence throughout trials, though the surroundings resets between trials.

- The mannequin advantages from meta-RL coaching throughout 1⁰⁴⁰ completely different partially observable Markov choice processes (POMDPs) duties. Since transformers are meta-learners, no further meta step is required.

- Given the scale and variety of the duty pool, many duties will both be too simple or too exhausting to generate a great coaching sign. To sort out this, they used an automatic curriculum to prioritize duties which can be inside its functionality frontier.

RT-2 introduces a way to co-finetune a VLM on each robotic trajectory information and vision-language duties, leading to a coverage mannequin known as RT-2. To allow vision-language fashions to generate low-level actions, actions are discretized into 256 bins and represented as language tokens.

By representing actions as language tokens, RT-2 can immediately make the most of pre-existing VLM architectures with out requiring substantial modifications. Therefore, VLM enter consists of robotic digicam picture and textual process description formatted equally to Imaginative and prescient Query Answering duties and the output is a collection of language tokens that signify the robotic’s low-level actions; see Fig. 6.

They observed that co-finetuning on each sorts of information with the unique net information results in extra generalizable insurance policies. The co-finetuning course of equips RT-2 with the power to know and execute instructions that weren’t explicitly current in its coaching information, showcasing outstanding adaptability. This method enabled them to leverage internet-scale pretraining of VLM to generalize to novel duties by way of semantic reasoning.

3- Basis Fashions as State Illustration

In RL, a coverage’s understanding of the surroundings at any given second comes from its “state” which is actually the way it perceives its environment. Trying on the RL block diagram, an inexpensive module to inject world data into is the state. If we are able to enrich observations with common data helpful for finishing duties, the coverage can decide up new duties a lot quicker in comparison with RL brokers that start studying from scratch.

PR2L introduces a novel method to inject the background data of VLMs from web scale information into RL.PR2L employs generative VLMs which generate language in response to a picture and a textual content enter. As VLMs are proficient in understanding and responding to visible and textual inputs, they’ll present a wealthy supply of semantic options from observations to be linked to actions.

PR2L, queries a VLM with a task-relevant immediate for every visible remark acquired by the agent, and receives each the generated textual response and the mannequin’s intermediate representations. They discard the textual content and use some or all the fashions intermediate illustration generated for each the visible and textual content enter and the VLM’s generated textual response as “promptable representations”. As a result of variable dimension of those representations, PR2L incorporates an encoder-decoder Transformer layer to embed all the knowledge embedded in promptable representations into a set dimension embedding. This embedding, mixed with any accessible non-visual remark information, is then offered to the coverage community, representing the state of the agent. This progressive integration permits the RL agent to leverage the wealthy semantic understanding and background data of VLMs, facilitating extra speedy and knowledgeable studying of duties.

Additionally Learn Our Earlier Publish: Towards AGI: LLMs and Foundational Models’ Roles in the Lifelong Learning Revolution

References:

[1] ELLM: Du, Yuqing, et al. “Guiding pretraining in reinforcement studying with giant language fashions.” 2023.

[2] Text2Reward: Xie, Tianbao, et al. “Text2reward: Automated dense reward perform era for reinforcement studying.” 2023.

[3] R2R2S: Yu, Wenhao, et al. “Language to rewards for robotic ability synthesis.” 2023.

[4] EUREKA: Ma, Yecheng Jason, et al. “Eureka: Human-level reward design through coding giant language fashions.” 2023.

[5] MOTIF: Klissarov, Martin, et al. “Motif: Intrinsic motivation from synthetic intelligence suggestions.” 2023.

[6] Read and Reward: Wu, Yue, et al. “Learn and reap the rewards: Studying to play atari with the assistance of instruction manuals.” 2024.

[7] Auto MC-Reward: Li, Hao, et al. “Auto MC-reward: Automated dense reward design with giant language fashions for minecraft.” 2023.

[8] EAGER: Carta, Thomas, et al. “Keen: Asking and answering questions for automated reward shaping in language-guided RL.” 2022.

[9] LiFT: Nam, Taewook, et al. “LiFT: Unsupervised Reinforcement Studying with Basis Fashions as Academics.” 2023.

[10] UAFM: Di Palo, Norman, et al. “In the direction of a unified agent with basis fashions.” 2023.

[11] RT-2: Brohan, Anthony, et al. “Rt-2: Imaginative and prescient-language-action fashions switch net data to robotic management.” 2023.

[12] AdA: Staff, Adaptive Agent, et al. “Human-timescale adaptation in an open-ended process area.” 2023.

[13] PR2L: Chen, William, et al. “Imaginative and prescient-Language Fashions Present Promptable Representations for Reinforcement Studying.” 2024.

[14] Clip4Clip: Luo, Huaishao, et al. “Clip4clip: An empirical examine of clip for finish to finish video clip retrieval and captioning.” 2022.

[15] Clip: Radford, Alec, et al. “Studying transferable visible fashions from pure language supervision.” 2021.

[16] RoBERTa: Liu, Yinhan, et al. “Roberta: A robustly optimized bert pretraining method.” 2019.

[17] Preference based RL: SWirth, Christian, et al. “A survey of preference-based reinforcement studying strategies.” 2017.

[18] Muesli: Hessel, Matteo, et al. “Muesli: Combining enhancements in coverage optimization.” 2021.

[19] Melo, Luckeciano C. “Transformers are meta-reinforcement learners.” 2022.

[20] RLHF: Ouyang, Lengthy, et al. “Coaching language fashions to observe directions with human suggestions, 2022.