Achieving scalability and quality in text clustering – Google Research Blog

Clustering is a fundamental, ubiquitous problem in data mining and unsupervised machine learning, where the goal is to group together similar items. The standard forms of clustering are metric clustering and graph clustering. In metric clustering, a given metric space defines distances between data points, which are grouped together based on their separation. In graph clustering, a given graph connects similar data points through edges, and the clustering process groups data points together based on the connections between them. Both clustering forms are particularly useful for large corpora where class labels can't be defined. Examples of such corpora are the ever-growing digital text collections of various internet platforms, with applications including organizing and searching documents, identifying patterns in text, and recommending relevant documents to users (see more examples in the following posts: clustering related queries based on user intent and practical differentially private clustering).

The choice of text clustering method often presents a dilemma. One approach is to use embedding models, such as BERT or RoBERTa, to define a metric clustering problem. Another is to use cross-attention (CA) models, such as PaLM or GPT, to define a graph clustering problem. CA models can provide highly accurate similarity scores, but constructing the input graph may require a prohibitive quadratic number of inference calls to the model. On the other hand, a metric space can be defined efficiently by distances between embeddings produced by embedding models. However, these similarity distances are typically of substantially lower quality than the similarity signals of CA models, and hence the resulting clustering can be of much lower quality.
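To make the scalability gap concrete, the sketch below counts the calls a CA model would need to score every pair of n documents, and contrasts it with an embedding-based similarity that needs only one embedding call per document. The use of cosine similarity and the toy vectors are illustrative assumptions; the post does not specify which distance is computed over the embeddings.

```python
import math


def num_pair_queries(n: int) -> int:
    """Unordered document pairs: a CA model scores one pair per inference call."""
    return n * (n - 1) // 2


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Similarity from precomputed embeddings; no model call per pair is needed."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    for n in (1_000, 100_000):
        # n embedding calls vs. roughly n^2 / 2 CA calls to build the full graph.
        print(f"n={n:,}: {n:,} embedding calls vs. {num_pair_queries(n):,} CA calls")
    print(cosine_similarity([1.0, 0.0], [1.0, 1.0]))
```

For 100,000 documents the all-pairs graph needs 4,999,950,000 CA calls, which is what motivates capping the number of CA queries.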

An overview of the embedding-based and cross-attention–based similarity scoring functions and their scalability vs. quality dilemma.

Motivated by this, in “KwikBucks: Correlation Clustering with Cheap-Weak and Expensive-Strong Signals”, presented at ICLR 2023, we describe a novel clustering algorithm that effectively combines the scalability benefits of embedding models and the quality of CA models. This graph clustering algorithm has query access to both the CA model and the embedding model; however, we impose a budget on the number of queries made to the CA model. The algorithm uses the CA model to answer edge queries, and benefits from unlimited access to similarity scores from the embedding model. We describe how this proposed setting bridges algorithm design and practical considerations, and how it can be applied to other clustering problems with similar available scoring functions, such as clustering problems on images and media. We demonstrate how this algorithm yields high-quality clusters with an almost linear number of query calls to the CA model. We have also open-sourced the data used in our experiments.

The clustering algorithm

The KwikBucks algorithm is an extension of the well-known KwikCluster algorithm (Pivot algorithm). The high-level idea is to first select a set of documents (i.e., centers) with no similarity edge between them, and then form clusters around these centers. To obtain the quality of CA models and the runtime efficiency of embedding models, we introduce the novel combo similarity oracle mechanism. In this approach, we use the embedding model to guide the selection of queries sent to the CA model. Given a set of center documents and a target document, the combo similarity oracle outputs a center from the set that is similar to the target document, if one exists. The combo similarity oracle saves budget by limiting the number of query calls to the CA model when selecting centers and forming clusters. It does this by first ranking the centers by their embedding similarity to the target document, and then querying the CA model for each pair (i.e., the target document and a ranked center), as shown below.
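For reference, here is a minimal sketch of the classic KwikCluster (Pivot) procedure that KwikBucks extends: visit documents in random order, make each still-unclustered document a center (pivot), and put every still-unclustered neighbor into its cluster. Because a document becomes a center only if no earlier center absorbed it, the centers have no similarity edge between them. The `has_edge` callable is a hypothetical stand-in for whatever oracle answers edge queries (in KwikBucks, the CA model), and the toy graph is invented for illustration.

```python
import random
from typing import Callable, Hashable, Sequence


def kwik_cluster(
    nodes: Sequence[Hashable],
    has_edge: Callable[[Hashable, Hashable], bool],  # hypothetical edge oracle
    seed: int = 0,
) -> list[list[Hashable]]:
    """Classic Pivot/KwikCluster: random pivots, each absorbs its unclustered neighbors."""
    order = list(nodes)
    random.Random(seed).shuffle(order)
    unclustered = set(order)
    clusters = []
    for pivot in order:
        if pivot not in unclustered:
            continue  # already absorbed by an earlier pivot, so it is not a center
        unclustered.remove(pivot)
        cluster = [pivot]
        for v in order:
            if v in unclustered and has_edge(pivot, v):
                cluster.append(v)
        unclustered.difference_update(cluster)
        clusters.append(cluster)
    return clusters


if __name__ == "__main__":
    edges = {frozenset(p) for p in [("a", "b"), ("b", "c"), ("a", "c"), ("d", "e")]}
    print(kwik_cluster("abcdef", lambda u, v: frozenset((u, v)) in edges))
```

Each pivot is queried against every remaining document here, which is exactly the cost the combo similarity oracle is designed to cut.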

A combo similarity oracle that, for a set of documents and a target document, returns a similar document from the set, if one exists.
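A minimal sketch of the combo similarity oracle described above: rank the centers by embedding similarity to the target (cheap and unlimited), then spend CA queries (expensive and budgeted) in that order and stop at the first center the CA model confirms. The `embed_sim` and `ca_similar` callables, the shared `Budget` counter, and the per-call cap `max_checks` are hypothetical names chosen for this sketch; the paper's exact ranking and stopping rules may differ.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, Optional, Sequence


@dataclass
class Budget:
    """Hypothetical global cap on CA-model queries."""
    remaining: int


def combo_oracle(
    centers: Sequence[Hashable],
    target: Hashable,
    embed_sim: Callable[[Hashable, Hashable], float],  # cheap: embedding similarity
    ca_similar: Callable[[Hashable, Hashable], bool],  # expensive: CA-model edge query
    budget: Budget,
    max_checks: int = 3,
) -> Optional[Hashable]:
    """Return a center the CA model deems similar to `target`, or None."""
    # Rank centers by embedding similarity so the likeliest matches are checked first.
    ranked = sorted(centers, key=lambda c: embed_sim(c, target), reverse=True)
    for center in ranked[:max_checks]:
        if budget.remaining <= 0:
            break
        budget.remaining -= 1  # each CA call consumes one unit of budget
        if ca_similar(center, target):
            return center
    return None


if __name__ == "__main__":
    emb = {"c1": 0.1, "c2": 0.9, "c3": 0.5, "t": 0.8}

    def toy_sim(a: str, b: str) -> float:
        return -abs(emb[a] - emb[b])  # toy 1-D "embedding" similarity

    budget = Budget(remaining=10)
    match = combo_oracle(["c1", "c2", "c3"], "t", toy_sim, lambda c, t: c == "c2", budget)
    print(match, budget.remaining)  # c2 9
```

Here `c2` is ranked first by embedding similarity, so the CA model is called only once instead of once per center.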

We then perform a post-processing step to merge two clusters if there is a strong connection between them, i.e., when the number of connecting edges is higher than the number of missing edges between the two clusters. Additionally, we apply the following steps for further computational savings on queries made to the CA model, and to improve performance at runtime:

- We leverage query-efficient correlation clustering to form a set of centers from a set of randomly selected documents instead of selecting these centers from all the documents (in the illustration below, the center nodes are red).

- We apply the combo similarity oracle mechanism to perform the cluster assignment step in parallel for all non-center documents, and leave documents with no similar center as singletons. In the illustration below, the assignments are depicted by blue arrows, and initially two (non-center) nodes are left as singletons because they have no assignment.

- In the post-processing step, to ensure scalability, we use the embedding similarity scores to filter down the potential merges (in the illustration below, the green dashed boundaries show these merged clusters).
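The steps above can be combined into a simplified end-to-end sketch. It is not the paper's implementation, and it rests on several assumptions. Centers are chosen greedily in random order from a random sample of `sample_size` documents; this picks the same centers as running Pivot on that sample and stands in for the query-efficient correlation clustering the post cites. Each non-center document is assigned by ranking centers on embedding similarity and confirming at most `max_checks` of them with the CA model. Only the `top_k` cluster pairs whose centers are most similar by embedding are tested with the merge rule (more connecting than missing edges). The parameter names are hypothetical, the assignment loop runs sequentially although the post performs that step in parallel, and singletons are left unmerged for simplicity.

```python
import random
from itertools import combinations, product
from typing import Callable, Hashable, Sequence

Doc = Hashable


def kwikbucks_sketch(
    docs: Sequence[Doc],
    embed_sim: Callable[[Doc, Doc], float],  # cheap: embedding similarity, unlimited
    ca_similar: Callable[[Doc, Doc], bool],  # expensive: CA-model edge query
    sample_size: int,
    max_checks: int = 3,  # hypothetical cap on CA checks per assignment
    top_k: int = 5,  # hypothetical number of merge candidates to test
    seed: int = 0,
) -> list[list[Doc]]:
    """Simplified sketch of the three steps; not the paper's exact procedure."""
    rng = random.Random(seed)
    sample = rng.sample(list(docs), min(sample_size, len(docs)))

    # Step 1: greedy centers over a random sample, visited in random order.
    # A document becomes a center only if it has no CA edge to an earlier center,
    # which yields the same centers as running Pivot on the sample.
    centers: list[Doc] = []
    for d in sample:
        if not any(ca_similar(c, d) for c in centers):
            centers.append(d)

    # Step 2: assign each non-center to an embedding-ranked, CA-confirmed center;
    # documents with no confirmed center stay singletons.
    clusters = {c: [c] for c in centers}
    singletons: list[list[Doc]] = []
    center_set = set(centers)
    for d in docs:
        if d in center_set:
            continue
        ranked = sorted(centers, key=lambda c: embed_sim(c, d), reverse=True)
        match = next((c for c in ranked[:max_checks] if ca_similar(c, d)), None)
        if match is None:
            singletons.append([d])
        else:
            clusters[match].append(d)

    # Step 3: only the top_k center pairs by embedding similarity are merge
    # candidates; merge when connecting edges outnumber missing edges.
    root = {c: c for c in centers}

    def find(c: Doc) -> Doc:
        while root[c] != c:
            c = root[c]
        return c

    candidates = sorted(combinations(centers, 2), key=lambda p: embed_sim(*p), reverse=True)
    for a, b in candidates[:top_k]:
        ra, rb = find(a), find(b)
        if ra == rb:
            continue
        left, right = clusters[ra], clusters[rb]
        connecting = sum(1 for u, v in product(left, right) if ca_similar(u, v))
        if connecting > len(left) * len(right) - connecting:
            clusters[ra] = left + right
            del clusters[rb]
            root[rb] = ra

    return list(clusters.values()) + singletons
```

Note that the merge test with the strict `>` leaves two clusters unmerged on a tie between connecting and missing edges.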

Illustration of the progress of the clustering algorithm on a given graph instance.

Results

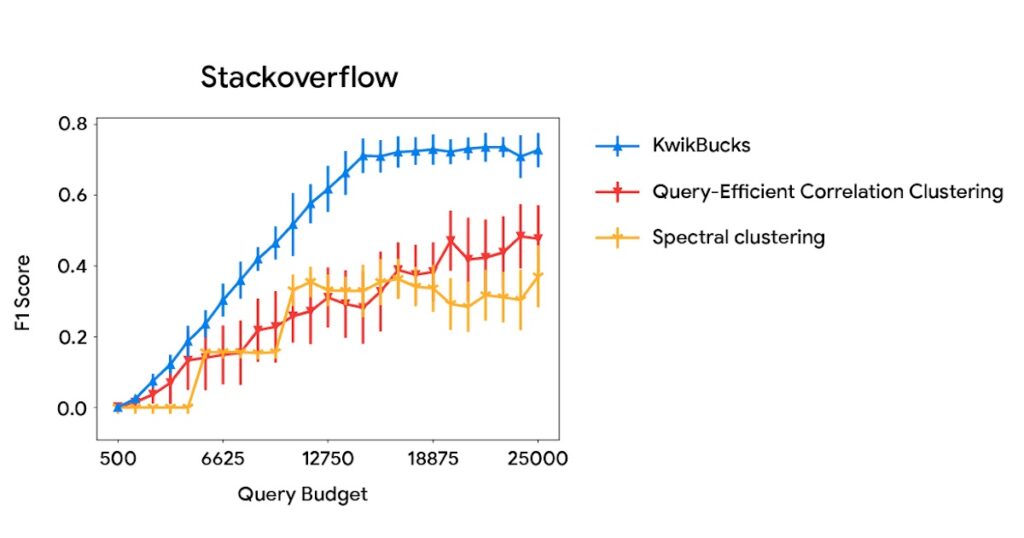

We evaluate the novel clustering algorithm on various datasets with different properties, using different embedding-based and cross-attention–based models. We compare the clustering algorithm's performance with the two best-performing baselines (see the paper for more details).

To evaluate the quality of clustering, we use precision and recall. Precision is the percentage of similar pairs out of all co-clustered pairs, and recall is the percentage of co-clustered similar pairs out of all similar pairs. To measure the quality of the solutions obtained in our experiments, we use the F1-score, the harmonic mean of precision and recall, where 1.0 is the highest possible value, indicating perfect precision and recall, and 0 is the lowest possible value, reached when either precision or recall is zero. The table below reports the F1-score for KwikBucks and various baselines in the case where we allow only a linear number of queries to the CA model. KwikBucks offers a substantial boost in performance, with a 45% relative improvement over the best baseline when averaged across all datasets.
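The pairwise precision, recall, and F1-score defined above can be computed directly from a clustering and a set of ground-truth similar pairs. This is a minimal sketch, assuming the ground truth is given as a list of similar document pairs; the function names are illustrative, and values are returned as fractions rather than percentages.

```python
from itertools import combinations
from typing import Hashable, Iterable


def co_clustered_pairs(clusters: Iterable[Iterable[Hashable]]) -> set[frozenset]:
    """All unordered pairs of items that share a cluster."""
    return {frozenset(p) for c in clusters for p in combinations(c, 2)}


def pairwise_f1(
    clusters: Iterable[Iterable[Hashable]],
    similar_pairs: Iterable[tuple[Hashable, Hashable]],
) -> tuple[float, float, float]:
    """Return (precision, recall, F1) over document pairs."""
    predicted = co_clustered_pairs(clusters)
    truth = {frozenset(p) for p in similar_pairs}
    hits = len(predicted & truth)  # co-clustered pairs that are truly similar
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(truth) if truth else 0.0
    # Harmonic mean; defined as 0 when precision or recall is 0.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    print(pairwise_f1([["a", "b", "c"], ["d"]], [("a", "b"), ("c", "d")]))
```

In this example the clustering produces three co-clustered pairs (a-b, a-c, b-c), of which only a-b is similar, and it misses c-d, so precision is 1/3, recall is 1/2, and F1 = 2·(1/3)(1/2) / (1/3 + 1/2) = 0.4.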

The figure below compares the clustering algorithm's performance with the baselines under different query budgets. We observe that KwikBucks consistently outperforms the other baselines across budgets.

A comparison of KwikBucks with the top-2 baselines when allowed different budgets for querying the cross-attention model.

Conclusion

Text clustering often presents a dilemma in the choice of similarity function: embedding models are scalable but lack quality, while cross-attention models offer quality but substantially hurt scalability. We present a clustering algorithm that offers the best of both worlds: the scalability of embedding models and the quality of cross-attention models. KwikBucks can also be applied to other clustering problems with multiple similarity oracles of varying accuracy levels. This is validated with an exhaustive set of experiments on various datasets with diverse properties. See the paper for more details.

Acknowledgements

This project was initiated during Sandeep Silwal's summer internship at Google in 2022. We would like to express our gratitude to our co-authors, Andrew McCallum, Andrew Nystrom, Deepak Ramachandran, and Sandeep Silwal, for their valuable contributions to this work. We also thank Ravi Kumar and John Guilyard for assistance with this blog post.