Some Kick-Ass Prompt Engineering Techniques to Boost Our LLM Models

Image created with DALL-E 3

Artificial Intelligence has been a complete revolution in the tech world.

Its ability to mimic human intelligence and perform tasks that were once considered exclusively human domains still amazes most of us.

However, no matter how good these recent AI leaps forward have been, there’s always room for improvement.

And this is precisely where prompt engineering kicks in!

Enter this field, which can significantly enhance the productivity of AI models.

Let’s discover it all together!

Prompt engineering is a fast-growing field within AI that focuses on improving the efficiency and effectiveness of language models. It’s all about crafting good prompts that guide AI models to produce our desired outputs.

Think of it as learning how to give better instructions to someone to ensure they understand and execute a task correctly.

Why Prompt Engineering Matters

- Enhanced Productivity: By using high-quality prompts, AI models can generate more accurate and relevant responses. This means less time spent on corrections and more time leveraging AI’s capabilities.

- Cost Efficiency: Training AI models is resource-intensive. Prompt engineering can reduce the need for retraining by optimizing model performance through better prompts.

- Versatility: A well-crafted prompt can make AI models more versatile, allowing them to tackle a broader range of tasks and challenges.

Before diving into the most advanced techniques, let’s recall two of the most useful (and basic) prompt engineering techniques.

Sequential Thinking with “Let’s think step by step”

Today it is well known that an LLM’s accuracy on reasoning tasks improves significantly when the phrase “Let’s think step by step” is added to the prompt.

Why… you might ask?

Well, this is because we are forcing the model to break down any task into multiple steps, thus making sure the model has enough time to process each of them.

For instance, I could challenge GPT-3.5 with the following prompt:

If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

The model will give me an answer directly. However, if I add “Let’s think step by step” at the end, I am forcing the model to generate a thinking process with multiple steps.
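As a minimal sketch (Python, no model is called; the helper name `zero_shot_cot` is hypothetical), adding the trigger phrase is nothing more than string concatenation. The arithmetic underneath spells out the intermediate steps we hope the model will write on its own.

```python
# Zero-shot "Let's think step by step" prompting, sketched as plain string handling.
# No LLM is called here; zero_shot_cot is a hypothetical helper name.

COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Append the step-by-step trigger phrase to a plain question."""
    return f"{question.strip()} {COT_TRIGGER}"


question = (
    "If John has 5 pears, then eats 2, buys 5 more, "
    "then gives 3 to his friend, how many pears does he have?"
)
prompt = zero_shot_cot(question)
print(prompt)

# The intermediate steps we would like the model to write out:
pears = 5
pears -= 2  # eats 2  -> 3
pears += 5  # buys 5  -> 8
pears -= 3  # gives 3 -> 5
print(pears)  # 5
```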

Few-Shot Prompting

While zero-shot prompting refers to asking the model to perform a task without providing any context or prior knowledge, the few-shot prompting technique means that we present the LLM with a few examples of our desired output along with a specific question.

For example, if we want a model that defines any term using a poetic tone, it might be quite hard to explain. Right?

However, we could use the following few-shot prompt to steer the model in the direction we want.

Your task is to answer in a consistent style aligned with the following style.

<user>: Teach me about resilience.

<system>: Resilience is like a tree that bends with the wind but never breaks.

It’s the ability to bounce back from adversity and keep moving forward.

<user>: Your input here.

If you have not tried it out yet, go ahead and challenge GPT.
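If you call a model through a chat-style API, the same few-shot prompt is usually expressed as a list of role/content messages. The sketch below assumes that common message shape; the helper `build_few_shot_messages` and the query "Teach me about patience." are hypothetical placeholders, and nothing is sent to a model. It places the demonstration answer in the `assistant` role, which is where chat APIs typically expect example replies.

```python
# Few-shot prompting as a list of chat messages (role/content dicts).
# build_few_shot_messages is a hypothetical helper; no API call is made.


def build_few_shot_messages(instruction, examples, query):
    """Return chat messages: instruction, example pairs, then the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, reply_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": reply_text})
    messages.append({"role": "user", "content": query})
    return messages


examples = [
    (
        "Teach me about resilience.",
        "Resilience is like a tree that bends with the wind but never breaks. "
        "It's the ability to bounce back from adversity and keep moving forward.",
    )
]
messages = build_few_shot_messages(
    "Your task is to answer in a consistent style aligned with the following style.",
    examples,
    "Teach me about patience.",
)
for m in messages:
    print(f"<{m['role']}>: {m['content']}")
```

Adding more (question, answer) pairs to `examples` gives the model more evidence of the style you want.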

However, as I am pretty sure most of you already know these basic techniques, I will try to challenge you with some advanced ones.

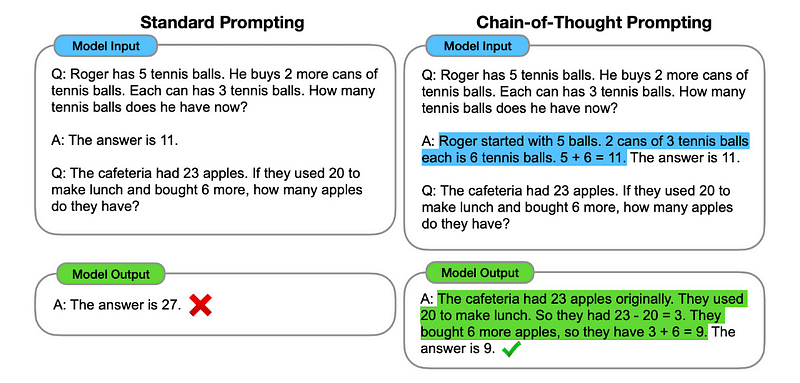

1. Chain of Thought (CoT) Prompting

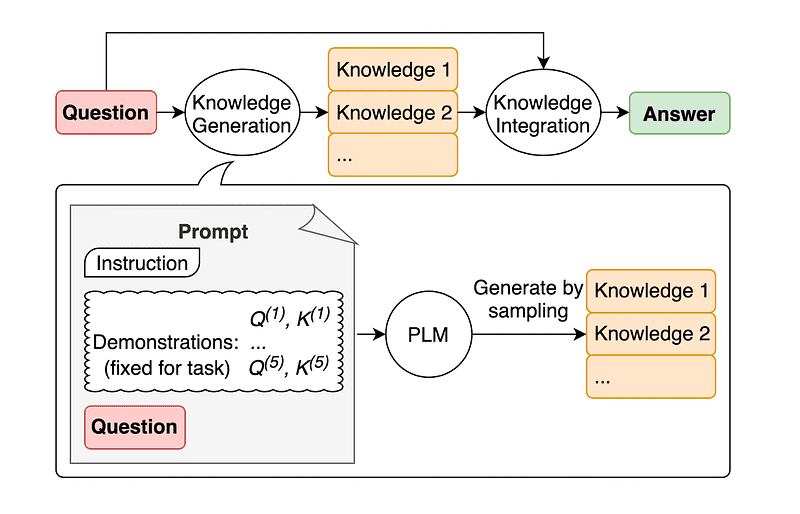

Introduced by Google in 2022, this method involves instructing the model to go through several reasoning stages before delivering the final response.

Sounds familiar, right? If so, you are absolutely right.

It’s like merging Sequential Thinking and Few-Shot Prompting.

How?

Essentially, CoT prompting directs the LLM to process information sequentially. This means we show how to solve a first problem with multi-step reasoning and then send the model our real task, expecting it to emulate a comparable chain of thought when answering the actual query we want it to solve.

Image taken from the paper.

So, following the very first example, we could provide a prompt with the first problem and a sequential explanation of how to solve it. Then, we can send our input and watch the magic happen!

<user> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have? Let’s think step by step.

<agent> Let’s go step by step. John starts with 5 pears. He eats 2 pears, so he has 5 - 2 = 3 pears left. Then, he buys 5 more pears. So, he now has 3 + 5 = 8 pears. Finally, he gives 3 pears to his friend. Therefore, he has 8 - 3 = 5 pears left. So, after all these steps, John has 5 pears.

<user> Your input here.
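Here is a small Python sketch of how such a few-shot CoT prompt can be assembled: each worked demonstration is a (question, reasoning) pair, and the agent turn is left open after the new question. The helper `build_cot_prompt` and the apple question are hypothetical placeholders; the code only builds text and does not call a model.

```python
# Few-shot chain-of-thought prompt: a worked demonstration followed by the new question.
# build_cot_prompt is a hypothetical helper; it only builds text.

DEMO_QUESTION = (
    "If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, "
    "how many pears does he have? Let's think step by step."
)
DEMO_REASONING = (
    "Let's go step by step. John starts with 5 pears. He eats 2 pears, so he has "
    "5 - 2 = 3 pears left. Then, he buys 5 more pears. So, he now has 3 + 5 = 8 pears. "
    "Finally, he gives 3 pears to his friend. Therefore, he has 8 - 3 = 5 pears left."
)


def build_cot_prompt(demos, new_question):
    """Join (question, reasoning) demonstrations, then leave the agent turn open."""
    blocks = [f"<user> {q}\n<agent> {r}" for q, r in demos]
    blocks.append(f"<user> {new_question}\n<agent>")
    return "\n\n".join(blocks)


prompt = build_cot_prompt(
    [(DEMO_QUESTION, DEMO_REASONING)],
    "Anna has 10 apples, gives away 4 and buys 3 more. How many apples does she have?",
)
print(prompt)
```

Leaving the prompt ending on `<agent>` invites the model to continue with its own step-by-step answer in the same format as the demonstration.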

However, most of you must be thinking… Do I have to come up with a sequential way to solve a problem every time I want to ask ChatGPT something?

Well… you are not the first one! And this leads us to…

2. Automatic Chain-of-Thought (Auto-CoT)

In 2022, Zhang and colleagues introduced a method to avoid this manual process. There are two main reasons to avoid any manual task:

- It can be boring.

- It can yield bad results, for instance when our own mental process is wrong.

They suggested using LLMs combined with the “Let’s think step by step” prompt to automatically produce the reasoning chain for each demonstration.

This means asking ChatGPT to solve a problem step by step and then using that very same worked example to show it how to solve any other problem.
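A rough Python sketch of the idea, assuming a generic `llm` function that maps a prompt string to a completion. Here it is replaced by a hypothetical `fake_llm` so the code runs offline, and the helper names are illustrative. The paper also selects diverse demonstration questions by clustering them first; this sketch skips that step and simply uses the questions it is given.

```python
# Auto-CoT sketch: let the model write the demonstration reasoning itself.
# `llm` is any callable str -> str; fake_llm below is a hypothetical stand-in.
from typing import Callable, List, Tuple

TRIGGER = "Let's think step by step."


def auto_cot_demos(questions: List[str], llm: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Generate a zero-shot reasoning chain for each demonstration question."""
    return [(q, llm(f"Q: {q}\nA: {TRIGGER}")) for q in questions]


def build_prompt(demos: List[Tuple[str, str]], new_question: str) -> str:
    """Prepend the auto-generated demonstrations to the real question."""
    blocks = [f"Q: {q}\nA: {TRIGGER} {chain}" for q, chain in demos]
    blocks.append(f"Q: {new_question}\nA: {TRIGGER}")
    return "\n\n".join(blocks)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same reasoning chain.
    return "John starts with 5 pears. 5 - 2 = 3, 3 + 5 = 8, 8 - 3 = 5. The answer is 5."


demos = auto_cot_demos(
    ["If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, "
     "how many pears does he have?"],
    fake_llm,
)
prompt = build_prompt(demos, "Your input here.")
print(prompt)
```

Note that the generated chains are used as-is, so a wrong chain from the model becomes a wrong demonstration, which is exactly the risk the next technique addresses.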

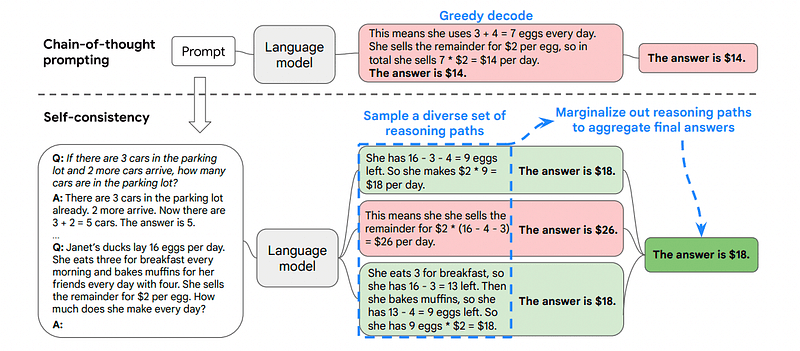

3. Self-Consistency

Self-consistency is another interesting prompting technique that aims to improve chain-of-thought prompting for more complex reasoning problems.

So… what is the main difference?

The main idea behind Self-Consistency is being aware that we might prompt the model with a wrong example. Just imagine I solve the previous problem with a flawed mental process:

<user> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have? Let’s think step by step.

<agent> Start with 5 pears. John eats 2 pears. Then, he gives 3 pears to his friend. These actions can be combined: 2 (eaten) + 3 (given) = 5 pears affected in total. Now, subtract the total pears affected from the initial 5 pears: 5 (initial) - 5 (affected) = 0 pears left.

Then, any other task I send to the model is likely to be solved wrongly as well.

This is why Self-Consistency involves sampling several diverse reasoning paths, each containing its own chain of thought, and then selecting the most consistent final answer, that is, the one most of the paths agree on (a majority vote).

Image taken from the paper.

In this case, and following the very first example again, we can show the model different ways of solving the problem.

<user> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

<agent> Start with 5 pears. John eats 2 pears, leaving him with 5 - 2 = 3 pears. He buys 5 more pears, which brings the total to 3 + 5 = 8 pears. Finally, he gives 3 pears to his friend, so he has 8 - 3 = 5 pears left.

<user> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

<agent> Start with 5 pears. He then buys 5 more pears, so he has 5 + 5 = 10 pears. John eats 2 pears and gives 3 to his friend. These actions can be combined: 2 (eaten) + 3 (given) = 5 pears removed. Subtract the removed pears from the total: 10 (total) - 5 (removed) = 5 pears left.

<user> Your input here.
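In the original paper, the final answer is chosen by a majority vote over the sampled paths. A minimal Python sketch of that voting step follows. It assumes the completions have already been sampled from the model (for example with a non-zero temperature) and naively treats the last integer in each chain as its final answer; the helper names are hypothetical.

```python
# Self-consistency sketch: majority vote over the final answers of several sampled chains.
import re
from collections import Counter
from typing import List, Optional


def extract_answer(completion: str) -> Optional[int]:
    """Naively take the last integer in a reasoning chain as its final answer."""
    numbers = re.findall(r"-?\d+", completion)
    return int(numbers[-1]) if numbers else None


def self_consistent_answer(completions: List[str]) -> Optional[int]:
    """Return the answer that most sampled reasoning paths agree on."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Three reasoning paths for the pears problem (the first one is the flawed chain).
sampled = [
    "2 (eaten) + 3 (given) = 5 pears affected. 5 (initial) - 5 (affected) = 0 pears left.",
    "5 - 2 = 3, then 3 + 5 = 8, and finally 8 - 3 = 5 pears left.",
    "5 + 5 = 10 pears, then 2 + 3 = 5 removed, so 10 - 5 = 5 pears left.",
]
print(self_consistent_answer(sampled))  # 5
```

The flawed chain still gets a vote, but it is outvoted by the two paths that agree on 5. In practice, you would ask the model to end with a clearly marked answer so extraction is more reliable than "last number wins".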

And here comes the last technique.

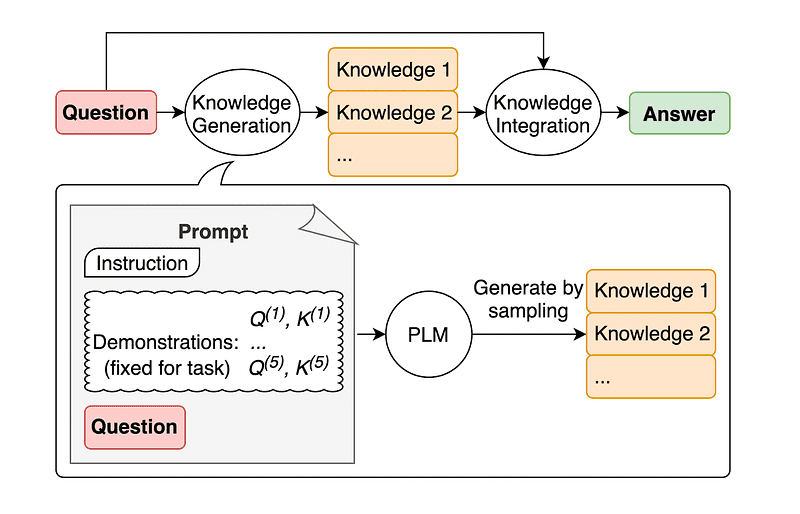

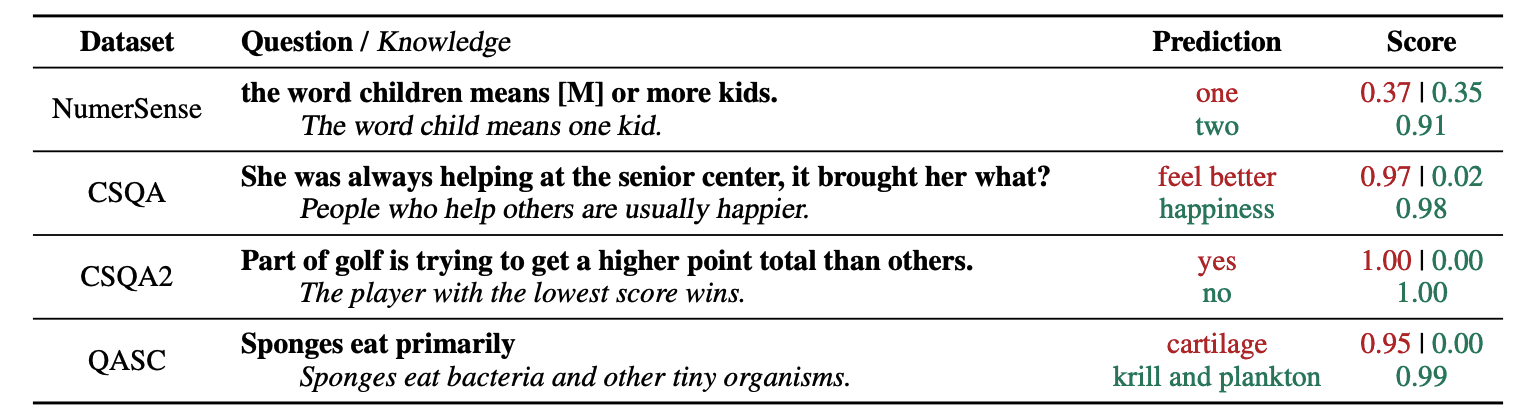

4. Generated Knowledge Prompting

A common practice in prompt engineering is augmenting a query with additional knowledge before sending the final API call to GPT-3 or GPT-4.

According to Jiacheng Liu and colleagues, we can add some knowledge to a request so the LLM better understands the question.

Image taken from the paper.

So, for instance, when asking ChatGPT whether part of golf is trying to get a higher point total than others, it may well agree with us. However, the goal of golf is quite the opposite. This is why we can add some prior knowledge telling it “The player with the lower score wins”.

So… what’s the point if we are telling the model exactly the answer?

In this case, the technique is used to improve the way the LLM interacts with us.

So, rather than pulling supplementary context from an external database, the paper’s authors propose having the LLM produce its own knowledge. This self-generated knowledge is then integrated into the prompt to bolster commonsense reasoning and give better outputs.

So this is how LLMs can be improved without increasing their training dataset!
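Below is a minimal two-stage sketch in Python: first ask the model for a relevant fact, then put that fact in front of the question. The prompts, helper names, and the `fake_llm` stand-in are hypothetical so the code runs offline. The paper’s own integration step is more involved; this version simply lists the generated statements before the question.

```python
# Generated knowledge prompting sketch: (1) ask the model for relevant facts,
# (2) feed those facts back in front of the question.
from typing import Callable, List


def generate_knowledge(question: str, llm: Callable[[str], str], n: int = 1) -> List[str]:
    """Stage 1: let the model write n short knowledge statements about the question."""
    prompt = (
        "Generate a short factual statement relevant to the question.\n"
        f"Question: {question}\nKnowledge:"
    )
    return [llm(prompt).strip() for _ in range(n)]


def answer_with_knowledge(question: str, knowledge: List[str], llm: Callable[[str], str]) -> str:
    """Stage 2: prepend the generated knowledge to the question and ask again."""
    facts = "\n".join(f"- {k}" for k in knowledge)
    prompt = f"Knowledge:\n{facts}\n\nQuestion: {question}\nAnswer (Yes or No):"
    return llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model so the example runs offline.
    if prompt.endswith("Knowledge:"):
        return "In golf, the player with the lowest score wins."
    return "No" if "lowest score wins" in prompt else "Yes"


question = "Is part of golf trying to get a higher point total than others?"
knowledge = generate_knowledge(question, fake_llm)
print(knowledge)
print(answer_with_knowledge(question, knowledge, fake_llm))  # No
```

Calling `answer_with_knowledge(question, [], fake_llm)` with no generated knowledge returns "Yes" from the stand-in, which mimics the failure the golf example describes.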

Prompt engineering has emerged as a pivotal technique for enhancing the capabilities of LLMs. By iterating on and improving prompts, we can communicate more directly with AI models and thus obtain more accurate and contextually relevant outputs, saving both time and resources.

For tech enthusiasts, data scientists, and content creators alike, understanding and mastering prompt engineering can be a valuable asset in harnessing the full potential of AI.

Knowing how to combine carefully designed input prompts with these more advanced techniques will undoubtedly give you an edge in the coming years.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.