The Best Optimization Algorithm for Your Neural Network | by Riccardo Andreoni | Oct, 2023

How to choose it and minimize your neural network training time.

Developing any machine learning model involves a rigorous experimental process that follows the idea-experiment-evaluation cycle.

The above cycle is repeated multiple times until satisfactory performance levels are achieved. The “experiment” phase involves both the coding and the training steps of the machine learning model. As models become more complex and are trained on much larger datasets, training time inevitably grows. As a consequence, training a large deep neural network can be painfully slow.

Fortunately for data science practitioners, there are several techniques to accelerate the training process, including:

- Transfer Learning.

- Weight Initialization, such as Glorot or He initialization.

- Batch Normalization for training data.

- Choosing a reliable activation function.

- Using a faster optimizer.

While all the techniques I pointed out are important, in this post I’ll focus deeply on the last point. I’ll describe several algorithms for optimizing neural network parameters, highlighting both their advantages and limitations.

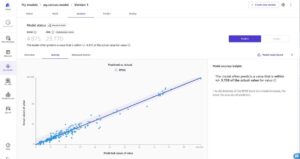

In the last section of this post, I’ll present a visualization comparing the optimization algorithms discussed.

For practical implementation, all the code used in this article can be accessed in this GitHub repository:

Traditionally, Batch Gradient Descent is considered the default choice for the optimizer method in neural networks.
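To make the baseline concrete, here is a minimal sketch of Batch Gradient Descent in NumPy. It is not taken from the article's repository: the function name `batch_gradient_descent`, the linear model with mean squared error loss, the learning rate, and the toy data are all illustrative assumptions. The point it shows is that every update uses the gradient computed over the entire training set.

```python
import numpy as np


def batch_gradient_descent(X, y, lr=0.1, n_epochs=500):
    """Fit linear weights by minimizing MSE with full-batch updates.

    Hypothetical helper for illustration only.
    """
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    for _ in range(n_epochs):
        # Gradient of the MSE loss over the ENTIRE training set
        grad = (2.0 / n_samples) * X.T @ (X @ w - y)
        # Step against the gradient, scaled by the learning rate
        w -= lr * grad
    return w


# Toy, noise-free data (an assumption for this sketch): y = 3*x + 2
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
X = np.column_stack([x, np.ones_like(x)])  # second column acts as the bias term
y = 3.0 * x + 2.0

w = batch_gradient_descent(X, y)
print(w)
```

On this toy problem the learned weights should end up close to the true slope and intercept. Because each step touches all samples, the cost of one update grows with the dataset size, which is the main reason to look at faster optimizers.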