How Can We Effectively Compress Large Language Models with One-Bit Weights? This Artificial Intelligence Research Proposes PB-LLM: Exploring the Potential of Partially-Binarized LLMs

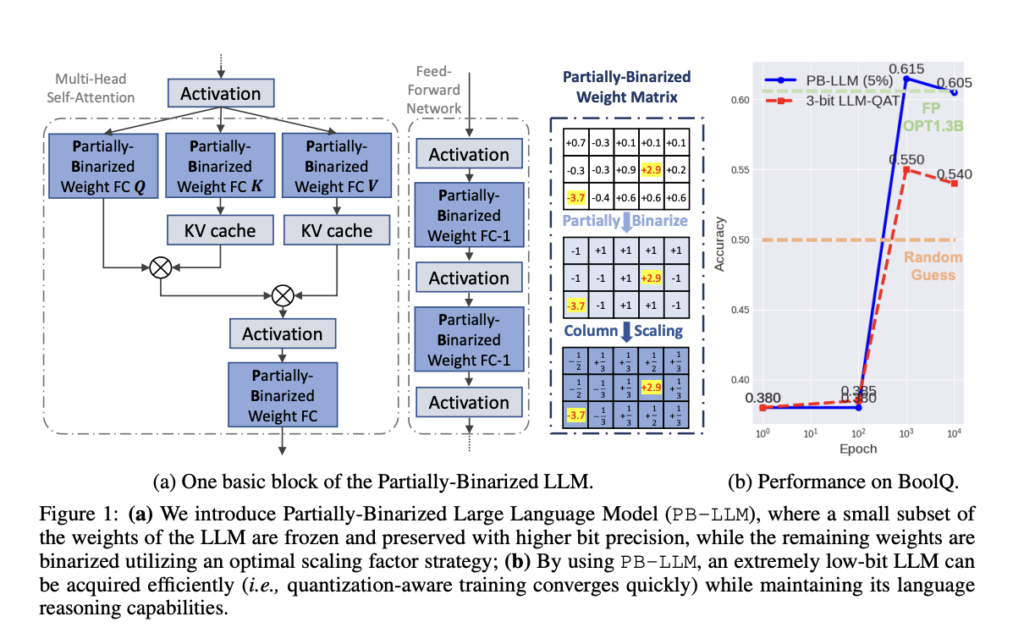

Partially-Binarized LLM (PB-LLM) is a cutting-edge technique for achieving extreme low-bit quantization in Large Language Models (LLMs) without sacrificing language reasoning capabilities. PB-LLM strategically filters out salient weights during binarization and reserves them for higher-bit storage. It also explores post-training quantization (PTQ) and quantization-aware training (QAT) methods to recover the reasoning capacity of quantized LLMs. This approach represents a significant advance in network binarization for LLMs.
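Reserving some weights for higher-bit storage means the model is no longer exactly 1 bit per weight. A rough way to see the trade-off is to compute the average storage cost per weight. The sketch below assumes a hypothetical layout (not taken from the PB-LLM code): binarized weights cost 1 bit, salient weights cost `salient_bits` bits, and a 1-bit-per-weight mask records which weights are salient; per-group scaling factors are ignored.

```python
def average_bits_per_weight(salient_fraction: float,
                            salient_bits: int = 8,
                            mask_bits_per_weight: float = 1.0) -> float:
    """Average bits per weight under a hypothetical partial-binarization layout.

    Assumptions (illustrative only):
      * non-salient weights are stored as 1-bit signs,
      * salient weights are stored with `salient_bits` bits each,
      * a per-weight bitmap marks salient positions (only needed if any exist),
      * scaling factors and other metadata are ignored.
    """
    if not 0.0 <= salient_fraction <= 1.0:
        raise ValueError("salient_fraction must be in [0, 1]")
    binary_part = (1.0 - salient_fraction) * 1.0
    salient_part = salient_fraction * salient_bits
    mask_part = mask_bits_per_weight if salient_fraction > 0 else 0.0
    return binary_part + salient_part + mask_part


for r in (0.0, 0.05, 0.1, 0.3):
    print(f"salient fraction {r:.2f}: {average_bits_per_weight(r):.2f} bits/weight")
```

Under these assumptions, keeping even 10% of the weights at 8 bits more than doubles the average cost relative to pure binarization, which is why the salient fraction has to stay small.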

Researchers from the Illinois Institute of Technology, Houmo AI, and UC Berkeley introduced PB-LLM as an innovative approach to extreme low-bit quantization that preserves language reasoning capacity. Their work addresses the limitations of existing binarization algorithms and emphasizes the significance of salient weights. The study further explores PTQ and QAT techniques to recover reasoning capacity in quantized LLMs. The findings advance LLM network binarization, and the PB-LLM code is available for further exploration and implementation.

The work tackles the challenge of deploying LLMs on memory-constrained devices. It explores network binarization, which reduces the weight bit-width to 1 bit to compress LLMs. The proposed method, PB-LLM, aims to achieve extremely low-bit quantization while preserving language reasoning capacity. The research also investigates the salient-weight property of LLM quantization and employs PTQ and QAT techniques to regain reasoning capacity in quantized LLMs.
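To make "reducing weight bit-width to 1 bit" concrete, here is a minimal sketch of the classic sign-plus-scale binarization (each weight becomes alpha * sign(w), with one shared scale alpha = mean |w|), plus the memory arithmetic for a hypothetical 7B-parameter model. This is a generic binarization scheme for illustration, not necessarily the exact quantizer PB-LLM uses, and `binarize`, `dequantize`, and `footprint_gb` are hypothetical helper names.

```python
def binarize(weights: list[float]) -> tuple[float, list[int]]:
    """Full 1-bit binarization: w is approximated by alpha * sign(w).

    alpha = mean(|w|) is the closed-form scale that minimizes squared error
    for a single shared scale. sign(0) is mapped to +1 here.
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    alpha = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return alpha, signs


def dequantize(alpha: float, signs: list[int]) -> list[float]:
    """Reconstruct approximate weights from the scale and the 1-bit signs."""
    return [alpha * s for s in signs]


def footprint_gb(num_params: int, bits_per_weight: float) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes), ignoring scales and metadata."""
    return num_params * bits_per_weight / 8 / 1e9


alpha, signs = binarize([0.4, -0.2, 0.1, -0.3])
print("scale:", alpha, "signs:", signs)
print("reconstruction:", dequantize(alpha, signs))

n = 7_000_000_000  # hypothetical 7B-parameter model
print("fp16 GB:", footprint_gb(n, 16), "1-bit GB:", footprint_gb(n, 1))
```

The 16x reduction in weight storage is what makes binarization attractive for memory-constrained devices; the cost is that every weight in a group collapses to the same magnitude.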

The approach introduces PB-LLM as an innovative method for achieving extremely low-bit quantization in LLMs while preserving their language reasoning capacity. It addresses the limitations of existing binarization algorithms by emphasizing the importance of salient weights. PB-LLM reserves a small fraction of salient weights for higher-bit storage and binarizes the remaining weights, which is what makes the binarization partial.
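The partial-binarization idea can be illustrated on a toy weight vector with one large outlier. This sketch makes several assumptions that the article does not confirm: salience is measured by magnitude |w|, salient weights are simply kept unchanged (standing in for "higher-bit storage"), and the remaining weights are binarized with a scale fitted only to them. `partially_binarize` and `full_binarize` are hypothetical names.

```python
def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def full_binarize(weights: list[float]) -> list[float]:
    """Binarize every weight as alpha * sign(w) with alpha = mean(|w|)."""
    alpha = sum(abs(w) for w in weights) / len(weights)
    return [alpha if w >= 0 else -alpha for w in weights]


def partially_binarize(weights: list[float], salient_fraction: float = 0.1):
    """Hypothetical PB-LLM-style partial binarization of a flat weight list.

    Assumptions for illustration:
      * salience is measured by magnitude |w|,
      * salient weights are kept unchanged (a stand-in for higher-bit storage),
      * the remaining weights are binarized with a scale fitted only to them.
    Returns the reconstructed weights and the set of salient indices.
    """
    n = len(weights)
    k = round(salient_fraction * n)
    by_magnitude = sorted(range(n), key=lambda i: abs(weights[i]), reverse=True)
    salient = set(by_magnitude[:k])
    rest = [i for i in range(n) if i not in salient]
    alpha = sum(abs(weights[i]) for i in rest) / len(rest) if rest else 0.0
    recon = [
        weights[i] if i in salient else (alpha if weights[i] >= 0 else -alpha)
        for i in range(n)
    ]
    return recon, salient


w = [0.02, -0.03, 0.01, -0.04, 2.5, 0.05, -0.02, 0.03, -0.01, 0.04]
recon, salient = partially_binarize(w, salient_fraction=0.1)
print("full binarization MSE:   ", mse(w, full_binarize(w)))
print("partial binarization MSE:", mse(w, recon))
print("salient indices:", sorted(salient))
```

With full binarization the single outlier inflates the shared scale, so every small weight is reconstructed badly; setting the outlier aside lets the binarized remainder use a scale that actually fits it.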

Rather than binarizing every weight, PB-LLM keeps a fraction of these salient weights in higher-bit storage and binarizes the rest. The paper extends PB-LLM with PTQ and QAT methodologies, restoring the performance of low-bit quantized LLMs. These advances contribute significantly to network binarization for LLMs, and the accompanying code is available for further exploration. The work also examines how viable existing binarization techniques are for quantizing LLMs: current binarization algorithms struggle to quantize LLMs effectively, which suggests the need for new approaches.
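Quantization-aware training has to backpropagate through the non-differentiable sign function. A common trick is the straight-through estimator (STE): compute the loss with binarized weights, then apply the resulting gradient directly to latent full-precision weights. The toy sketch below shows a clipped STE on a tiny linear model; the data, learning rate, and helper names are hypothetical, and whether PB-LLM's QAT uses exactly this STE variant is an assumption, not something the article states.

```python
def binarize(latent: list[float]) -> list[float]:
    """Forward pass: alpha * sign(w) with alpha = mean(|w|); sign(0) -> +1."""
    alpha = sum(abs(v) for v in latent) / len(latent)
    return [alpha if v >= 0 else -alpha for v in latent]


def predict(weights: list[float], xs: list[list[float]]) -> list[float]:
    """Linear model y = w . x for each input row."""
    return [sum(w * x for w, x in zip(weights, row)) for row in xs]


def mse(pred: list[float], target: list[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def qat_step(latent, xs, ys, lr):
    """One QAT update using a clipped straight-through estimator (STE).

    The loss is computed with the binarized weights, but the gradient with
    respect to the binarized weights is applied to the latent full-precision
    weights, as if d(binarize)/d(latent) were 1 for |w| <= 1 and 0 otherwise.
    The dependence of alpha on the latent weights is ignored in this sketch.
    """
    wb = binarize(latent)
    preds = predict(wb, xs)
    n = len(xs)
    grad_wb = [
        sum(2.0 * (p - y) * row[j] for p, y, row in zip(preds, ys, xs)) / n
        for j in range(len(latent))
    ]
    return [w - lr * g if abs(w) <= 1.0 else w for w, g in zip(latent, grad_wb)]


# Toy data: identity inputs and a target exactly representable as alpha * sign.
xs = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
target = [0.5, -0.5, 0.5, -0.5]
ys = predict(target, xs)

latent = [-0.1, 0.1, 0.1, 0.1]  # three of the four signs start out wrong
initial_loss = mse(predict(binarize(latent), xs), ys)
for _ in range(100):
    latent = qat_step(latent, xs, ys, lr=0.5)
final_loss = mse(predict(binarize(latent), xs), ys)
print("initial loss:", initial_loss, "final loss:", final_loss)
print("binarized weights:", binarize(latent))
```

The latent weights carry small updates that would be lost if the model were re-binarized from scratch each step; that accumulated state is what lets training flip signs and grow the scale toward the target.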

The research underscores the role of salient weights in effective binarization and proposes optimal scaling strategies. Used together, PTQ and QAT can restore the capabilities of quantized LLMs. The released PB-LLM code encourages further research and development in LLM network binarization, particularly for resource-constrained environments.
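For intuition about what an "optimal" binarization scale means, the standard least-squares argument for a single scale is written out below. Here $\mathcal{N}$ denotes, by assumption, the set of non-salient weights that are binarized, and $\operatorname{sign}(0)$ is taken as $+1$; the article does not confirm that PB-LLM's scaling strategy reduces to exactly this form.

$$
J(\alpha) = \sum_{i \in \mathcal{N}} \bigl(w_i - \alpha \operatorname{sign}(w_i)\bigr)^2
= \sum_{i \in \mathcal{N}} w_i^2 \;-\; 2\alpha \sum_{i \in \mathcal{N}} |w_i| \;+\; \alpha^2 |\mathcal{N}|
$$

using $w_i \operatorname{sign}(w_i) = |w_i|$ and $\operatorname{sign}(w_i)^2 = 1$. Setting the derivative to zero gives

$$
\frac{dJ}{d\alpha} = -2 \sum_{i \in \mathcal{N}} |w_i| + 2\alpha |\mathcal{N}| = 0
\quad\Longrightarrow\quad
\alpha^{*} = \frac{1}{|\mathcal{N}|} \sum_{i \in \mathcal{N}} |w_i|,
$$

and since $d^2 J / d\alpha^2 = 2|\mathcal{N}| > 0$, this is a minimum. Because the salient weights are excluded from $\mathcal{N}$, large outliers no longer inflate $\alpha^{*}$.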

In conclusion, the paper introduces PB-LLM as an innovative solution for extreme low-bit quantization in LLMs that preserves language reasoning capabilities. It addresses the limitations of existing binarization algorithms and emphasizes the importance of salient weights. PB-LLM allocates a small fraction of salient weights to higher-bit storage and binarizes the rest. The research extends PB-LLM with PTQ and QAT methodologies, revitalizing the performance of low-bit quantized LLMs. These advances contribute significantly to network binarization for LLMs.

Check out the Paper and Github. All credit for this research goes to the researchers on this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.