Re-weighted gradient descent via distributionally robust optimization – Google Research Blog

Deep neural networks (DNNs) have become essential for solving a wide range of tasks, from standard supervised learning (image classification using ViT) to meta-learning. The most commonly used paradigm for learning DNNs is empirical risk minimization (ERM), which aims to identify a network that minimizes the average loss on training data points. Several algorithms, including stochastic gradient descent (SGD), Adam, and Adagrad, have been proposed for solving ERM. However, a drawback of ERM is that it weights all samples equally, often ignoring the rare and more difficult samples and focusing on the easier and more abundant ones. This leads to suboptimal performance on unseen data, especially when the training data is scarce.

To overcome this challenge, recent works have developed data re-weighting techniques for improving ERM performance. However, these approaches focus on specific learning tasks (such as classification) and/or require learning an additional meta model that predicts the weights of each data point. The presence of an additional model significantly increases the complexity of training and makes these approaches unwieldy in practice.

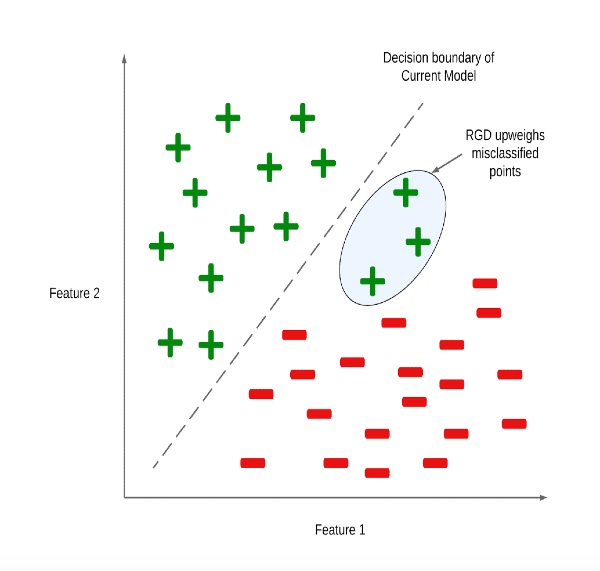

In “Stochastic Re-weighted Gradient Descent via Distributionally Robust Optimization” we introduce a variant of the classical SGD algorithm that re-weights data points during each optimization step based on their difficulty. Stochastic Re-weighted Gradient Descent (RGD) is a lightweight algorithm that comes with a simple closed-form expression, and can be applied to solve any learning task using just two lines of code. At any stage of the learning process, RGD simply reweights a data point as the exponential of its loss. We empirically demonstrate that the RGD reweighting algorithm improves the performance of numerous learning algorithms across various tasks, ranging from supervised learning to meta learning. Notably, we show improvements over state-of-the-art methods on DomainBed and tabular classification. Moreover, the RGD algorithm also boosts performance for BERT on the GLUE benchmarks and ViT on ImageNet-1K.

Distributionally robust optimization

Distributionally robust optimization (DRO) is an approach that assumes a “worst-case” data distribution shift may occur, which can harm a model’s performance. If a model has focused on identifying a few spurious features for prediction, these “worst-case” data distribution shifts could lead to the misclassification of samples and, thus, a performance drop. DRO optimizes the loss for samples in that “worst-case” distribution, making the model robust to perturbations in the data distribution (e.g., removing a small fraction of points from a dataset, minor up/down weighting of data points, etc.). In the context of classification, this forces the model to place less emphasis on noisy features and more emphasis on useful and predictive features. Consequently, models optimized using DRO tend to have better generalization guarantees and stronger performance on unseen samples.

Inspired by these results, we develop the RGD algorithm as a technique for solving the DRO objective. Specifically, we focus on Kullback–Leibler divergence-based DRO, where one adds perturbations to create distributions that are close to the original data distribution in the KL divergence metric, enabling a model to perform well over all possible perturbations.
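One common way to write a KL-regularized DRO objective is sketched below. The notation is an assumption made here for illustration and is not taken from the post: $\ell_i(\theta)$ is the loss of the $i$-th of $n$ training points, $p$ is the uniform distribution over those points, $q$ is a perturbed distribution on the probability simplex $\Delta_n$, and $\gamma > 0$ is a hypothetical parameter controlling how far $q$ may move from $p$.

```latex
% KL-regularized DRO objective (illustrative notation, not the paper's exact form)
\min_{\theta}\; \max_{q \in \Delta_n}\;
  \sum_{i=1}^{n} q_i\, \ell_i(\theta) \;-\; \frac{1}{\gamma}\, \mathrm{KL}(q \,\|\, p),
\qquad p_i = \frac{1}{n}.

% Closed-form solution of the inner maximization over q
q_i^{*} \;=\; \frac{\exp\!\big(\gamma\, \ell_i(\theta)\big)}
                   {\sum_{j=1}^{n} \exp\!\big(\gamma\, \ell_j(\theta)\big)},
\qquad
\max_{q \in \Delta_n}\Big[\sum_{i=1}^{n} q_i\, \ell_i(\theta) - \frac{1}{\gamma}\, \mathrm{KL}(q \,\|\, p)\Big]
  \;=\; \frac{1}{\gamma} \log\!\Big( \frac{1}{n} \sum_{i=1}^{n} \exp\!\big(\gamma\, \ell_i(\theta)\big) \Big).
```

Under this formulation the worst-case weights grow exponentially with the loss, which is consistent with weighting each data point by the exponential of its loss. The paper should be consulted for the exact objective and constants used.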

Figure illustrating DRO. In contrast to ERM, which learns a model that minimizes expected loss over the original data distribution, DRO learns a model that performs well on several perturbed versions of the original data distribution.

Stochastic re-weighted gradient descent

Consider a random subset of samples (called a mini-batch), where each data point has an associated loss L_i. Traditional algorithms like SGD give equal importance to all the samples in the mini-batch, and update the parameters of the model by descending along the averaged gradients of the loss of those samples. With RGD, we reweight each sample in the mini-batch and give more importance to points that the model identifies as more difficult. To be precise, we use the loss as a proxy for the difficulty of a point, and reweight it by the exponential of its loss. Finally, we update the model parameters by descending along the weighted average of the gradients of the samples.
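The update described above can be sketched in NumPy for a toy linear model with squared-error loss. This is a minimal illustration under stated assumptions, not the authors' implementation: the model, the function names (`per_sample_loss_and_grad`, `rgd_weights`, `rgd_step`, `sgd_step`), the learning rate, and the choice to normalize the weights so they sum to one are all hypothetical.

```python
import numpy as np

def per_sample_loss_and_grad(w, X, y):
    """Squared-error loss and its gradient for each sample of a linear model."""
    residual = X @ w - y                 # shape (batch,)
    losses = 0.5 * residual ** 2         # shape (batch,)
    grads = residual[:, None] * X        # shape (batch, dim)
    return losses, grads

def rgd_weights(losses):
    """Weights proportional to exp(loss), normalized to sum to 1 (an assumption)."""
    # Subtracting the max leaves the normalized weights unchanged
    # and avoids overflow in the exponential.
    unnormalized = np.exp(losses - losses.max())
    return unnormalized / unnormalized.sum()

def rgd_step(w, X, y, lr=0.1):
    """One re-weighted step: descend along the weighted average gradient."""
    losses, grads = per_sample_loss_and_grad(w, X, y)
    weights = rgd_weights(losses)        # used as fixed coefficients
    return w - lr * (weights[:, None] * grads).sum(axis=0)

def sgd_step(w, X, y, lr=0.1):
    """Plain mini-batch SGD step: uniform average of per-sample gradients."""
    _, grads = per_sample_loss_and_grad(w, X, y)
    return w - lr * grads.mean(axis=0)
```

When every sample in the mini-batch has the same loss, the weights are uniform and `rgd_step` coincides with `sgd_step`; the two differ only when some samples are harder than others.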

Due to stability considerations, in our experiments we clip and scale the loss before computing its exponential. Specifically, we clip the loss at some threshold T, and multiply it with a scalar that’s inversely proportional to the threshold. An important aspect of RGD is its simplicity, as it doesn’t rely on a meta model to compute the weights of data points. Furthermore, it can be implemented with two lines of code, and combined with any popular optimizer (such as SGD, Adam, and Adagrad).
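A minimal NumPy sketch of the clip-and-scale step follows. The function names and the `scale` parameter are hypothetical, and the exact constants are assumptions: here the loss is capped at the threshold T and multiplied by `scale / T` before exponentiation, so with `scale=1.0` and non-negative losses every weight lies between 1 and e.

```python
import numpy as np

def clipped_rgd_weights(losses, threshold, scale=1.0):
    """Exponential weights computed from clipped, scaled per-sample losses."""
    losses = np.asarray(losses, dtype=float)
    clipped = np.minimum(losses, threshold)      # cap each loss at T
    return np.exp(scale * clipped / threshold)   # scalar inversely proportional to T

def reweighted_loss(losses, threshold):
    """Mini-batch objective: mean of weight * loss over the batch."""
    losses = np.asarray(losses, dtype=float)
    weights = clipped_rgd_weights(losses, threshold)
    return float(np.mean(weights * losses))
```

In an automatic-differentiation framework, the weights would presumably need to be treated as constants so that gradients do not flow through them; that detail is an assumption here and is not shown in this NumPy sketch.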

Results

We present empirical results comparing RGD with state-of-the-art techniques on standard supervised learning and domain adaptation (refer to the paper for results on meta learning). In all our experiments, we tune the clipping level and the learning rate of the optimizer using a held-out validation set.

Supervised learning

We evaluate RGD on several supervised learning tasks, including language, vision, and tabular classification. For the task of language classification, we apply RGD to the BERT model trained on the General Language Understanding Evaluation (GLUE) benchmark and show that RGD outperforms the BERT baseline by +1.94% with a standard deviation of 0.42%. To evaluate RGD’s performance on vision classification, we apply RGD to the ViT-S model trained on the ImageNet-1K dataset, and show that RGD outperforms the ViT-S baseline by +1.01% with a standard deviation of 0.23%. Moreover, we perform hypothesis tests to confirm that these results are statistically significant with a p-value that’s less than 0.05.

For tabular classification, we use MET as our baseline, and consider various binary and multi-class datasets from UC Irvine’s machine learning repository. We show that applying RGD to the MET framework improves its performance by 1.51% and 1.27% on binary and multi-class tabular classification, respectively, achieving state-of-the-art performance in this domain.

Performance of RGD for classification of various tabular datasets.

Domain generalization

To evaluate RGD’s generalization capabilities, we use the standard DomainBed benchmark, which is commonly used to study a model’s out-of-domain performance. We apply RGD to FRR, a recent approach that improved out-of-domain benchmarks, and show that RGD with FRR performs an average of 0.7% better than the FRR baseline. Furthermore, we confirm with hypothesis tests that most benchmark results (except for Office Home) are statistically significant with a p-value less than 0.05.

Performance of RGD on the DomainBed benchmark for distributional shifts.

Class imbalance and fairness

To demonstrate that models learned using RGD perform well despite class imbalance, where certain classes in the dataset are underrepresented, we compare RGD’s performance with ERM on long-tailed CIFAR-10. We report that RGD improves the accuracy of baseline ERM by an average of 2.55% with a standard deviation of 0.23%. Furthermore, we perform hypothesis tests and confirm that these results are statistically significant with a p-value of less than 0.05.

Performance of RGD on the long-tailed CIFAR-10 benchmark for the class imbalance domain.

Limitations

The RGD algorithm was developed using popular research datasets, which were already curated to remove corruptions (e.g., noise and incorrect labels). Therefore, RGD may not provide performance improvements in scenarios where training data has a high volume of corruptions. A potential approach to handle such scenarios is to apply an outlier removal technique to the RGD algorithm. This outlier removal technique should be capable of filtering out outliers from the mini-batch and sending the remaining points to our algorithm.
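As a rough illustration of that filter-then-reweight idea, the sketch below drops the highest-loss samples from a mini-batch before computing clipped exponential weights on the rest. Everything here is hypothetical: the post does not specify an outlier rule, and treating the largest losses as outliers, the `keep_fraction` parameter, and the function name `filter_then_reweight` are assumptions chosen only to make the pipeline concrete.

```python
import numpy as np

def filter_then_reweight(losses, threshold, keep_fraction=0.9):
    """Drop the highest-loss samples, then weight the survivors.

    Returns (kept_indices, weights). Illustrative only; the outlier rule
    (largest losses are outliers) is an assumption, not the paper's method.
    """
    losses = np.asarray(losses, dtype=float)
    # Hypothetical outlier rule: keep the `keep_fraction` lowest-loss samples.
    n_keep = max(1, int(np.floor(keep_fraction * losses.size)))
    kept = np.sort(np.argsort(losses)[:n_keep])
    # Clipped, scaled exponential weights on the surviving samples only.
    weights = np.exp(np.minimum(losses[kept], threshold) / threshold)
    return kept, weights
```

A loss-based filter pulls in the opposite direction from the re-weighting itself, since both act on high-loss points, so in practice a separate signal for detecting corrupted samples may be preferable.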

Conclusion

RGD has been shown to be effective on a variety of tasks, including out-of-domain generalization, tabular representation learning, and class imbalance. It is simple to implement and can be seamlessly integrated into existing algorithms with just two lines of code change. Overall, RGD is a promising technique for boosting the performance of DNNs, and could help push the boundaries in various domains.

Acknowledgements

The paper described in this blog post was written by Ramnath Kumar, Arun Sai Suggala, Dheeraj Nagaraj and Kushal Majmundar. We extend our sincere gratitude to the anonymous reviewers, Prateek Jain, Pradeep Shenoy, Anshul Nasery, Lovish Madaan, and the numerous dedicated members of the machine learning and optimization team at Google Research India for their invaluable feedback and contributions to this work.