Accenture creates a Knowledge Assist solution using generative AI services on AWS

This post is co-written with Ilan Geller and Shuyu Yang from Accenture.

Enterprises today face major challenges when it comes to using their information and knowledge bases for both internal and external business operations. With constantly evolving operations, processes, policies, and compliance requirements, it can be extremely difficult for employees and customers to stay up to date. At the same time, the unstructured nature of much of this content makes it time consuming to find answers using traditional search.

Internally, employees can often spend countless hours hunting down information they need to do their jobs, leading to frustration and reduced productivity. And when they can’t find answers, they have to escalate issues or make decisions without complete context, which can create risk.

Externally, customers can also find it frustrating to locate the information they’re looking for. Although enterprise knowledge bases have, over time, improved the customer experience, they can still be cumbersome and difficult to use. Whether seeking answers to a product-related question or needing information about operating hours and locations, a poor experience can lead to frustration, or worse, a customer defection.

In either case, as knowledge management becomes more complex, generative AI presents a game-changing opportunity for enterprises to connect people to the information they need to perform and innovate. With the right strategy, these intelligent solutions can transform how knowledge is captured, organized, and used across an organization.

To help tackle this challenge, Accenture collaborated with AWS to build an innovative generative AI solution called Knowledge Assist. By using AWS generative AI services, the team has developed a system that can ingest and comprehend massive amounts of unstructured enterprise content.

Rather than traditional keyword searches, users can now ask questions and extract precise answers in a straightforward, conversational interface. Generative AI understands context and relationships within the knowledge base to deliver personalized and accurate responses. As it fields more queries, the system continuously improves its language processing through machine learning (ML) algorithms.

Since launching this AI assistance framework, companies have seen dramatic improvements in employee knowledge retention and productivity. By providing quick and precise access to information and enabling employees to self-serve, this solution reduces training time for new hires by over 50% and cuts escalations by up to 40%.

With the power of generative AI, enterprises can transform how knowledge is captured, organized, and shared across the organization. By unlocking their existing knowledge bases, companies can boost employee productivity and customer satisfaction. As Accenture’s collaboration with AWS demonstrates, the future of enterprise knowledge management lies in AI-driven systems that evolve through interactions between humans and machines.

Accenture is working with AWS to help clients deploy Amazon Bedrock, use the most advanced foundation models such as Amazon Titan, and deploy industry-leading technologies such as Amazon SageMaker JumpStart and Amazon Inferentia alongside other AWS ML services.

This post provides an overview of an end-to-end generative AI solution developed by Accenture for a production use case using Amazon Bedrock and other AWS services.

Solution overview

A large public health sector client serves millions of citizens every day, and they demand easy access to up-to-date information in an ever-changing health landscape. Accenture has integrated this generative AI functionality into an existing FAQ bot, allowing the chatbot to provide answers to a broader array of user questions. Increasing the ability for citizens to access pertinent information in a self-service manner saves the department time and money, lessening the need for call center agent interaction. Key features of the solution include:

- Hybrid intent approach – Uses generative and pre-trained intents

- Multi-lingual support – Converses in English and Spanish

- Conversational analysis – Reports on user needs, sentiment, and concerns

- Natural conversations – Maintains context with human-like natural language processing (NLP)

- Transparent citations – Guides users to the source information

Accenture’s generative AI solution provides the following advantages over existing or traditional chatbot frameworks:

- Generates accurate, relevant, and natural-sounding responses to user queries quickly

- Remembers the context and answers follow-up questions

- Handles queries and generates responses in multiple languages (such as English and Spanish)

- Continuously learns and improves responses based on user feedback

- Is easily integrable with your existing web platform

- Ingests a vast enterprise knowledge base repository

- Responds in a human-like manner

- Makes knowledge updates continuously available with minimal to no effort

- Uses a pay-as-you-use model with no upfront costs

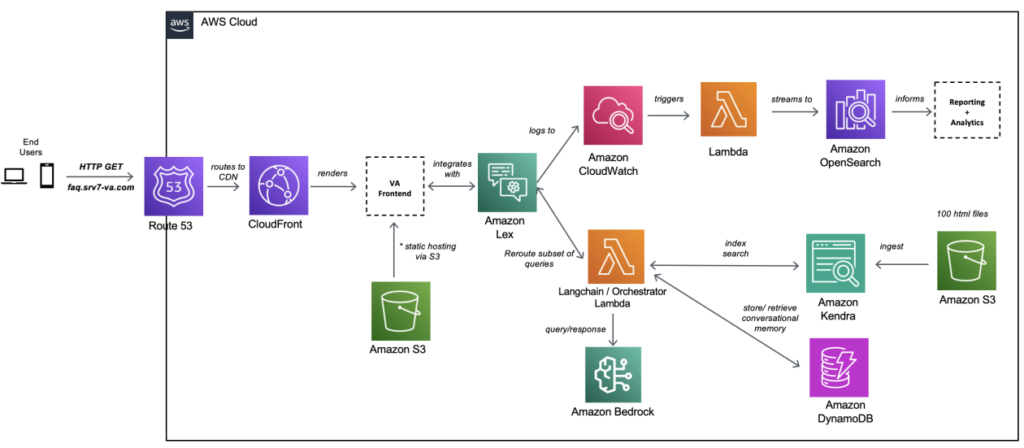

The high-level workflow of this solution involves the following steps:

- Users create a simple integration with existing web platforms.

- Data is ingested into the platform as a bulk upload on day 0, followed by incremental uploads from day 1 onward.

- User queries are processed in real time, with the system scaling as required to meet user demand.

- Conversations are saved in application databases (Amazon DynamoDB) to support multi-round conversations.

- The Anthropic Claude foundation model is invoked via Amazon Bedrock, which is used to generate query responses based on the most relevant content.

- The Anthropic Claude foundation model is used to translate queries as well as responses from English to other desired languages to support multi-language conversations.

- The Amazon Titan foundation model is invoked via Amazon Bedrock to generate vector embeddings.

- Content relevance is determined by the similarity between the raw content embeddings and the user query embedding, using the Pinecone vector database.

- The context along with the user’s question is appended to create a prompt, which is provided as input to the Anthropic Claude model. The generated response is provided back to the user via the web platform.
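
To make the retrieval and prompt-building steps above more concrete, the following is a minimal sketch, not the production implementation. It assumes cosine similarity as the relevance measure and uses a small in-memory list with toy three-dimensional vectors as a stand-in for Amazon Titan embeddings stored in Pinecone. The function names, sample passages, and prompt wording are all hypothetical.

```python
import math
from typing import List, Tuple

# Toy index: (passage text, embedding). In the real solution the embeddings
# would come from a Titan model and live in a vector database; these
# three-dimensional vectors are illustrative only.
SAMPLE_INDEX: List[Tuple[str, List[float]]] = [
    ("Clinics open at 8 AM.", [1.0, 0.0, 0.0]),
    ("Vaccines are free.", [0.0, 1.0, 0.0]),
    ("Parking is behind the building.", [0.0, 0.0, 1.0]),
]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # A zero vector has no direction, so treat it as unrelated.
    return dot / (norm_a * norm_b)


def top_k_passages(query_vec: List[float],
                   index: List[Tuple[str, List[float]]],
                   k: int = 2) -> List[str]:
    """Rank passages by similarity to the query embedding and keep the best k."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question: str, passages: List[str]) -> str:
    """Append the retrieved context and the user's question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

A caller would embed the user query, pass the resulting vector to `top_k_passages`, and send the output of `build_prompt` to the language model.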

The following diagram illustrates the solution architecture.

The architecture flow can be understood in two parts:

In the following sections, we discuss different aspects of the solution and its development in more detail.

Model selection

The process for model selection included regression testing of various models available in Amazon Bedrock, which included AI21 Labs, Cohere, Anthropic, and Amazon foundation models. We checked for supported use cases, model attributes, maximum tokens, cost, accuracy, performance, and languages. Based on this, we selected Claude-2 as best suited for this use case.

Data source

We created an Amazon Kendra index and added a data source using web crawler connectors with a root web URL and directory depth of two levels. Multiple webpages were ingested into the Amazon Kendra index and used as the data source.
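
As an illustration of what such a data source definition might look like, the following sketch builds a web crawler configuration for the Amazon Kendra `create_data_source` API through boto3. The post does not state which connector version or settings were used, so mapping "directory depth of two levels" to `CrawlDepth=2`, the `HOST_ONLY` crawl mode, and the helper names are assumptions.

```python
from typing import Any, Dict, List


def web_crawler_config(seed_urls: List[str], crawl_depth: int = 2) -> Dict[str, Any]:
    """Build the Configuration payload for a Kendra web crawler data source.

    crawl_depth=2 is an assumed mapping of the two-level depth described in
    the post; HOST_ONLY keeps the crawl on the seed URL's host name.
    """
    return {
        "WebCrawlerConfiguration": {
            "Urls": {
                "SeedUrlConfiguration": {
                    "SeedUrls": seed_urls,
                    "WebCrawlerMode": "HOST_ONLY",
                }
            },
            "CrawlDepth": crawl_depth,
        }
    }


def create_crawler_data_source(index_id: str, name: str, role_arn: str,
                               seed_urls: List[str]) -> str:
    """Attach a web crawler data source to an existing Kendra index.

    Requires boto3 and AWS credentials; imported here so that the
    configuration helper above can be used without them.
    """
    import boto3

    kendra = boto3.client("kendra")
    response = kendra.create_data_source(
        IndexId=index_id,
        Name=name,
        Type="WEBCRAWLER",
        RoleArn=role_arn,
        Configuration=web_crawler_config(seed_urls),
    )
    return response["Id"]
```

The index ID, IAM role ARN, and root URL would come from your own environment.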

GenAI chatbot request and response process

Steps in this process consist of an end-to-end interaction with a request from Amazon Lex and a response from a large language model (LLM):

- The user submits the request to the conversational front-end application hosted in an Amazon Simple Storage Service (Amazon S3) bucket through Amazon Route 53 and Amazon CloudFront.

- Amazon Lex understands the intent and directs the request to the orchestrator hosted in an AWS Lambda function.

- The orchestrator Lambda function performs the following steps:

  - The function interacts with the application database, which is hosted in DynamoDB. The database stores the session ID and user ID for conversation history.

  - Another request is sent to the Amazon Kendra index to get the top five relevant search results to build the relevant context. Using this context, the modified prompt required for the LLM is constructed.

  - The connection is established between Amazon Bedrock and the orchestrator. A request is posted to the Amazon Bedrock Claude-2 model to get the response from the selected LLM.

- The data is post-processed from the LLM response and a response is sent to the user.
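
The orchestrator steps above can be sketched roughly as follows. This is an illustrative outline under stated assumptions rather than the actual Lambda code: the helper names, the shape of the history records, the prompt wording, and the inference parameters are hypothetical. The request body follows the Claude-2 text completion format on Amazon Bedrock, which expects alternating `Human:` and `Assistant:` turns.

```python
import json
from typing import Dict, List

MAX_RESULTS = 5  # The post describes using the top five search results.


def build_claude_prompt(question: str, search_results: List[str],
                        history: List[Dict[str, str]]) -> str:
    """Combine prior turns, retrieved context, and the new question."""
    context = "\n\n".join(search_results[:MAX_RESULTS])
    # Replay earlier turns so the model can answer follow-up questions.
    turns = "".join(
        f"\n\nHuman: {turn['user']}\n\nAssistant: {turn['bot']}"
        for turn in history
    )
    return (
        f"{turns}\n\nHuman: Answer the question using only the context.\n\n"
        f"<context>\n{context}\n</context>\n\n"
        f"Question: {question}\n\nAssistant:"
    )


def build_request_body(prompt: str, max_tokens: int = 512) -> str:
    """Serialize the request body for a Claude-2 completion call."""
    return json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.0,  # Assumed setting for repeatable answers.
    })


def invoke_claude(prompt: str) -> str:
    """Call Claude-2 through Amazon Bedrock and return the trimmed completion.

    Requires boto3, AWS credentials, and model access in the account.
    """
    import boto3

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId="anthropic.claude-v2",
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    completion = json.loads(response["body"].read())["completion"]
    return completion.strip()  # Minimal post-processing before replying.
```

Keeping prompt construction separate from the Bedrock call makes the prompt logic easy to test without network access.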

Online reporting

The online reporting process consists of the following steps:

- End-users interact with the chatbot via a CloudFront CDN front-end layer.

- Each request/response interaction is facilitated by the AWS SDK and sends network traffic to Amazon Lex (the NLP component of the bot).

- Metadata about the request/response pairings is logged to Amazon CloudWatch.

- The CloudWatch log group is configured with a subscription filter that sends logs into Amazon OpenSearch Service.

- Once available in OpenSearch Service, logs can be used to generate reports and dashboards using Kibana.
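
As a small illustration of the logging step, the following sketch emits one JSON record per request/response pairing, a format that log pipelines can typically split into searchable fields. The post does not list the metadata that is captured, so every field name here is hypothetical.

```python
import json
from datetime import datetime, timezone


def interaction_log_record(session_id: str, intent: str, language: str,
                           latency_ms: int) -> str:
    """Return a single-line JSON record describing one chatbot interaction.

    All field names are illustrative assumptions, not the solution's schema.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "session_id": session_id,
        "intent": intent,
        "language": language,
        "latency_ms": latency_ms,
    }
    return json.dumps(record)


# Inside a Lambda function, printing the record writes it to the function's
# CloudWatch log group.
print(interaction_log_record("session-123", "GenerativeFallback", "en", 840))
```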

Conclusion

In this post, we showcased how Accenture is using AWS generative AI services to implement an end-to-end approach towards digital transformation. We identified the gaps in traditional question answering platforms and augmented generative intelligence within its framework for faster response times and continuously improving the system while engaging with users across the globe. Reach out to the Accenture Center of Excellence team to dive deeper into the solution and deploy it for your clients.

This Knowledge Assist platform can be applied to different industries, including but not limited to health sciences, financial services, manufacturing, and more. This platform provides natural, human-like responses to questions using knowledge that is secured. This platform enables efficiency, productivity, and more accurate actions for its users.

The joint effort builds on the 15-year strategic relationship between the companies and uses the same proven mechanisms and accelerators built by the Accenture AWS Business Group (AABG).

Connect with the AABG team at accentureaws@amazon.com to drive business outcomes by transforming to an intelligent data enterprise on AWS.

For further information about generative AI on AWS using Amazon Bedrock or Amazon SageMaker, we recommend the following resources:

You can also sign up for the AWS generative AI newsletter, which includes educational resources, blogs, and service updates.

About the Authors

Ilan Geller is a Managing Director at Accenture with a focus on artificial intelligence, helping clients scale artificial intelligence applications, and is the Global GenAI COE Partner Lead for AWS.

Shuyu Yang is Generative AI and Large Language Model Delivery Lead and also leads CoE (Center of Excellence) Accenture AI (AWS DevOps professional) teams.

Shikhar Kwatra is an AI/ML specialist solutions architect at Amazon Web Services, working with a leading Global System Integrator. He has earned the title of one of the Youngest Indian Master Inventors with over 500 patents in the AI/ML and IoT domains. Shikhar aids in architecting, building, and maintaining cost-efficient, scalable cloud environments for the organization, and supports the GSI partner in building strategic industry solutions on AWS.

Jay Pillai is a Principal Solution Architect at Amazon Web Services. In this role, he functions as the Global Generative AI Lead Architect and also the Lead Architect for Supply Chain Solutions with AABG. As an Information Technology Leader, Jay specializes in artificial intelligence, data integration, business intelligence, and user interface domains. He holds 23 years of extensive experience working with several clients across supply chain, legal technologies, real estate, financial services, insurance, payments, and market research business domains.

Karthik Sonti leads a global team of Solutions Architects focused on conceptualizing, building, and launching horizontal, functional, and vertical solutions with Accenture to help our joint customers transform their business in a differentiated manner on AWS.
