Researchers from NYU and Meta AI Research Improving Social Conversational Agents by Learning from Natural Dialogue Between Users and a Deployed Model, Without Extra Annotations

Human feedback is a key lever for improving social dialogue models. Learning from feedback has improved tremendously, including in reinforcement learning with human feedback, where many human annotations are required to ensure a satisfactory reward function. Feedback can take the form of numerical scores, rankings, or natural-language comments from users about a dialogue turn or dialogue episode, as well as binary assessments of a bot turn. Most works deliberately gather these signals from crowdworkers, since organic users may not want to be bothered with providing them, or may supply inaccurate information if they do.

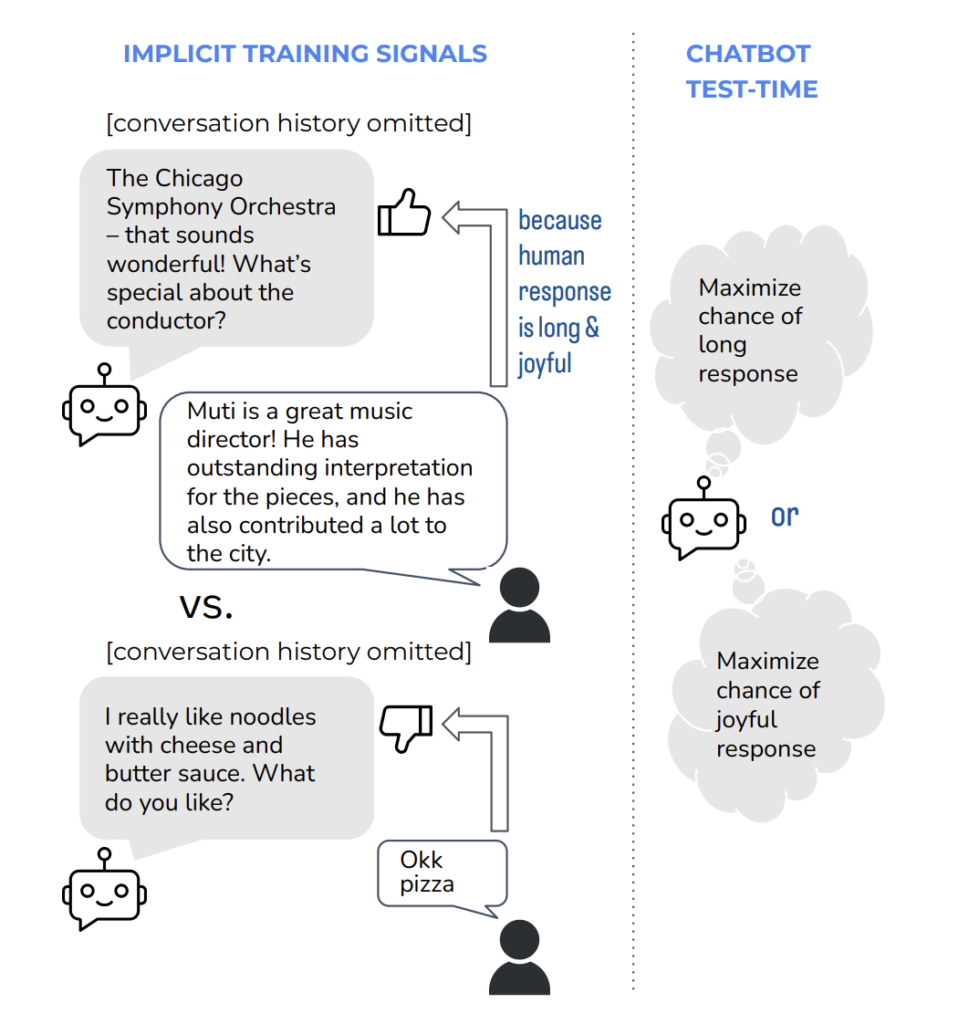

In this study, researchers from New York University and Meta AI consider the setting where they have a large number of deployment-time dialogue episodes that capture real conversations between the model and organic users. They ask whether implicit signals can be extracted from these organic conversations and used to improve the dialogue model. There are two reasons for this. First, although organic users may not contribute explicit annotations, they most closely approximate the data distribution for future deployment. Second, using implicit signals from previous dialogue episodes saves money that would otherwise be spent on crowdsourcing.

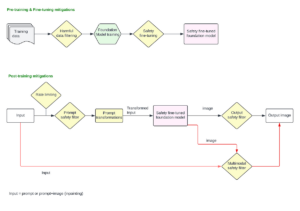

More precisely, they examine whether they can tune the chatbot to optimize implicit feedback signals such as the number, length, sentiment, or responsiveness of future human replies. They use publicly available, de-identified data from the BlenderBot online deployment to investigate this problem. Using this data, they train sample-and-rerank models and compare various implicit feedback signals. The new models are found to outperform the baseline responses in both automatic and human evaluations. They also ask whether optimizing for these measures will lead to undesirable behaviors, given that the implicit feedback signals are only rough proxies for the quality of the generations.

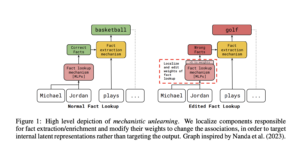

The answer is yes, depending on the signal used. In particular, optimizing for longer conversations can cause the model to produce controversial opinions or respond in a hostile or combative manner. On the other hand, optimizing for a favorable response or sentiment reduces these behaviors relative to the baseline. They conclude that implicit feedback from humans is a useful training signal that can improve overall performance, but the specific signal chosen has significant behavioral consequences.
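The idea of deriving implicit labels from the next human turn and then reranking sampled candidates can be illustrated with a minimal sketch. This is not the authors' implementation: the `Turn` type, the `implicit_labels` and `rerank` functions, the `min_words` threshold, and the `toy_score` stand-in for a trained classifier are all hypothetical names chosen for illustration, and only two of the signals mentioned above (whether a reply exists, and its length) are shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass
class Turn:
    speaker: str  # "bot" or "human" (hypothetical convention)
    text: str


def implicit_labels(episode: Sequence[Turn], min_words: int = 5) -> List[Dict]:
    """Label each bot turn using only the human turn that follows it.

    `min_words` is an assumed threshold for calling a reply "long".
    """
    labels = []
    for i, turn in enumerate(episode):
        if turn.speaker != "bot":
            continue
        has_next = i + 1 < len(episode) and episode[i + 1].speaker == "human"
        reply = episode[i + 1].text if has_next else ""
        labels.append({
            "bot_text": turn.text,
            "has_reply": has_next,                           # did the user keep talking?
            "long_reply": len(reply.split()) >= min_words,   # was the reply long?
        })
    return labels


def rerank(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the sampled candidate that the scorer rates highest."""
    return max(candidates, key=score)


def toy_score(text: str) -> float:
    """Stand-in for a classifier trained on implicit labels (assumption):
    here it simply prefers candidates that end with a question."""
    return 1.0 if text.strip().endswith("?") else 0.0
```

In a real system the scorer would be a model trained to predict one chosen signal for a candidate bot turn, and, as the findings above suggest, swapping that signal (for example, reply length versus sentiment) can change which candidates are selected and therefore how the bot behaves.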

Check out the Paper. All credit for this research goes to the researchers on this project.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.