MIT Researchers Achieve a Breakthrough in Privacy Protection for Machine Learning Models with Probably Approximately Correct (PAC) Privacy

MIT researchers have made significant progress on the problem of protecting sensitive data encoded within machine-learning models. Consider a team of scientists that has developed a machine-learning model that can accurately predict whether a patient has cancer from lung scan images. Sharing that model with hospitals worldwide, however, carries a significant risk that malicious agents could extract the underlying training data from it. To address this issue, the researchers have introduced a new privacy metric called Probably Approximately Correct (PAC) Privacy, along with a framework that determines the minimal amount of noise required to protect sensitive data.

Conventional privacy approaches, such as Differential Privacy, focus on preventing an adversary from telling whether specific data were used, and they achieve this by adding large amounts of noise, which reduces the model's accuracy. PAC Privacy takes a different perspective: it evaluates how difficult it is for an adversary to reconstruct parts of the sensitive data even after the noise has been added. For instance, if the sensitive data are human faces, differential privacy would prevent the adversary from determining whether a specific individual's face was in the dataset. In contrast, PAC Privacy asks whether an adversary could extract an approximate silhouette that could be recognized as a particular individual's face.

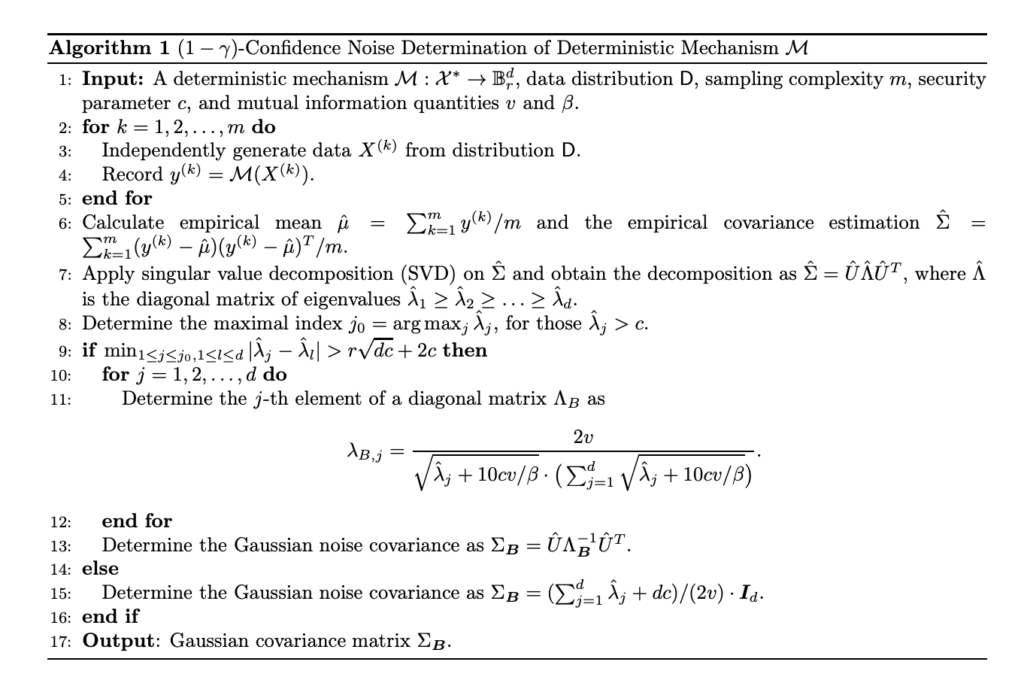

To implement PAC Privacy, the researchers developed an algorithm that determines the optimal amount of noise to add to a model, guaranteeing privacy even against adversaries with infinite computing power. The algorithm relies on the uncertainty, or entropy, of the original data from the adversary's perspective. It subsamples the data, runs the machine-learning training algorithm multiple times, and compares the variance across the different outputs to determine the necessary amount of noise. A smaller variance indicates that less noise is needed.
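The subsampling step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: `train_mean` is a hypothetical stand-in for a training algorithm (it only returns per-feature means), and `estimate_output_variance`, `n_trials`, and `subsample_frac` are illustrative names. The function treats training as a black box, re-running it on random subsamples and measuring how much each output coordinate varies.

```python
import random
import statistics
from typing import Callable, List, Sequence

Dataset = Sequence[Sequence[float]]


def train_mean(data: Dataset) -> List[float]:
    # Hypothetical stand-in for a real training algorithm: the "model"
    # is just the mean of each feature column.
    n_features = len(data[0])
    return [statistics.fmean([row[j] for row in data]) for j in range(n_features)]


def estimate_output_variance(
    train_fn: Callable[[Dataset], List[float]],
    data: Dataset,
    n_trials: int = 200,
    subsample_frac: float = 0.5,
    seed: int = 0,
) -> List[float]:
    """Re-run train_fn on random subsamples and return the variance of each
    output coordinate. train_fn is only called, never inspected (black box)."""
    rng = random.Random(seed)
    rows = list(data)
    k = max(2, int(len(rows) * subsample_frac))
    outputs = [train_fn(rng.sample(rows, k)) for _ in range(n_trials)]
    n_out = len(outputs[0])
    return [statistics.variance([out[j] for out in outputs]) for j in range(n_out)]


# Usage: the second feature is 5x more spread out than the first.
data_rng = random.Random(1)
data = [[data_rng.gauss(0, 1), data_rng.gauss(0, 5)] for _ in range(500)]
variances = estimate_output_variance(train_mean, data)
print(variances)  # the second entry should be much larger than the first
```

In this toy setup the more variable output coordinate would call for more noise, while a coordinate that barely changes across subsamples would call for very little.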

One of the key advantages of the PAC Privacy algorithm is that it does not require knowledge of the model's inner workings or of the training process. Users can specify their desired confidence level regarding the adversary's ability to reconstruct the sensitive data, and the algorithm provides the optimal amount of noise to achieve that goal. Note, however, that the algorithm does not estimate the loss of accuracy that results from adding noise to the model. Moreover, implementing PAC Privacy can be computationally expensive because it requires repeatedly training machine-learning models on numerous subsampled datasets.
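One simple way to turn a user-chosen budget into a noise level, given per-coordinate output variances from a subsampling run, is the Gaussian channel bound: adding independent Gaussian noise with variance τ² to an output whose variance is σ² leaks at most ½·ln(1 + σ²/τ²) nats of mutual information, and with independent noise per coordinate these terms can be summed as an upper bound. The sketch is a simplification under those assumptions, not the paper's calibration: `budget_nats` is a hypothetical stand-in for the user's confidence level, split evenly across coordinates, and `gaussian_noise_std` and `privatize` are illustrative names. As the paragraph notes, nothing here measures the accuracy lost to the noise.

```python
import math
import random
from typing import List, Sequence


def gaussian_noise_std(variances: Sequence[float], budget_nats: float) -> List[float]:
    """Per-coordinate noise std tau_j such that
    sum_j 0.5 * ln(1 + var_j / tau_j**2) == budget_nats (budget split evenly)."""
    if budget_nats <= 0:
        raise ValueError("budget_nats must be positive")
    per_coord = budget_nats / len(variances)
    # Solve 0.5 * ln(1 + v / tau^2) = per_coord  =>  tau^2 = v / (e^(2*per_coord) - 1)
    return [math.sqrt(v / math.expm1(2 * per_coord)) for v in variances]


def privatize(output: Sequence[float], noise_std: Sequence[float], seed: int = 0) -> List[float]:
    # Release the model output with independent Gaussian noise per coordinate.
    rng = random.Random(seed)
    return [x + rng.gauss(0.0, s) for x, s in zip(output, noise_std)]


# Usage: hypothetical variances from a subsampling run; a tighter budget
# (stronger protection) demands more noise.
variances = [0.002, 0.05]
for budget in (0.1, 1.0, 5.0):
    stds = gaussian_noise_std(variances, budget)
    print(budget, [round(s, 4) for s in stds])

noisy = privatize([0.01, -0.2], gaussian_noise_std(variances, 1.0))
```

Only the variances enter the calculation, which mirrors the black-box property: the training routine that produced them never has to be opened up.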

To enhance PAC Privacy, the researchers suggest modifying the machine-learning training process to increase stability, which reduces the variance between subsample outputs. This approach would reduce the algorithm's computational burden and the amount of noise needed. Moreover, more stable models often exhibit lower generalization error, leading to more accurate predictions on new data.
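The link between stability and noise can be illustrated with a toy example. The sketch assumes a one-dimensional ridge regression (a technique chosen here for illustration, not taken from the paper), where a larger regularization strength `lam` makes the fitted slope less sensitive to which points land in a subsample. The names `ridge_slope` and `subsample_variance` and the synthetic data are hypothetical.

```python
import random
import statistics
from typing import List, Tuple

Point = Tuple[float, float]


def ridge_slope(data: List[Point], lam: float) -> float:
    # Closed-form 1-D ridge regression without intercept:
    # w = sum(x*y) / (sum(x^2) + lam). Larger lam shrinks w and damps its
    # sensitivity to individual points.
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + lam)


def subsample_variance(data: List[Point], lam: float, n_trials: int = 300,
                       k: int = 50, seed: int = 0) -> float:
    # Same seed => the same subsamples are reused for every lam.
    rng = random.Random(seed)
    slopes = [ridge_slope(rng.sample(data, k), lam) for _ in range(n_trials)]
    return statistics.variance(slopes)


# Hypothetical data: y = 2x + noise.
data_rng = random.Random(42)
data: List[Point] = []
for _ in range(400):
    x = data_rng.gauss(0, 1)
    data.append((x, 2.0 * x + data_rng.gauss(0, 3)))

results = {lam: subsample_variance(data, lam) for lam in (0.0, 10.0, 100.0)}
for lam, var in results.items():
    print(f"lam={lam:6.1f}  slope variance across subsamples={var:.4f}")
```

Lower variance across subsamples is what allows a variance-based calibration to add less noise. Regularization also introduces bias (the slope is shrunk toward zero), so in practice stability has to be balanced against accuracy.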

While the researchers acknowledge the need for further exploration of the relationship between stability, privacy, and generalization error, their work represents a promising step forward in protecting sensitive data in machine-learning models. By leveraging PAC Privacy, engineers can develop models that safeguard training data while maintaining accuracy in real-world applications. With the potential to significantly reduce the amount of noise required, this approach opens up new possibilities for secure data sharing in healthcare and beyond.

Check out the Paper. All credit for this research goes to the researchers on this project.

Niharika is a Technical Consulting Intern at Marktechpost. She is a third-year undergraduate, currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a highly enthusiastic individual with a keen interest in machine learning, data science, and AI, and an avid reader of the latest developments in these fields.