A Group of Researchers from China Developed WebGLM: A Web-Enhanced Question-Answering System Based on the General Language Model (GLM)

Large language models (LLMs), including GPT-3, PaLM, OPT, BLOOM, and GLM-130B, have greatly pushed the boundaries of what computers can understand and produce in language. Question answering, one of the most fundamental language applications, has improved significantly thanks to recent LLM breakthroughs. According to existing studies, the performance of LLMs’ closed-book QA and in-context learning QA is on par with that of supervised models, which contributes to our understanding of LLMs’ capacity for memorization. But even LLMs have a finite capacity, and they fall short of human expectations when faced with problems that require considerable rare knowledge. Recent efforts have therefore concentrated on building LLMs augmented with external knowledge, including retrieval and online search.

For example, WebGPT is capable of online browsing, long answers to complicated questions, and equally useful references. Despite its popularity, the original WebGPT approach has yet to be widely adopted. First, it relies on many expert-level annotations of browsing trajectories, well-written responses, and answer preference labels, all of which require expensive resources, a lot of time, and extensive training. Second, its behavior cloning approach (i.e., imitation learning) requires the base model, GPT-3, to emulate human experts: the system is instructed to interact with a web browser, issue operation commands (such as “Search,” “Read,” and “Quote”), and then collect relevant material from online sources.

Finally, the multi-turn structure of web browsing requires extensive computational resources and can be too slow for a good user experience; for example, it takes WebGPT-13B about 31 seconds to respond to a 500-token query. In this study, researchers from Tsinghua University, Beihang University, and Zhipu.AI introduce WebGLM, a sound web-enhanced question-answering system built on the 10-billion-parameter General Language Model (GLM-10B). Figure 1 shows an example. It is efficient, affordable, sensitive to human preferences, and, most importantly, of a quality on par with WebGPT. To achieve good performance, the system uses several novel approaches and designs, including an LLM-augmented retriever, a two-stage retriever that combines coarse-grained web search with fine-grained LLM-distilled retrieval.
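To make the two-stage idea concrete, here is a minimal sketch, not WebGLM's actual implementation. It assumes a hypothetical in-memory `PAGES` dictionary in place of a real web search engine, and a toy bag-of-words `embed` function in place of an LLM-distilled dense retriever. All function names and URLs are illustrative.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "web": page URL -> list of passages (illustration only).
PAGES = {
    "https://example.org/glm": [
        "GLM is a general language model pretrained with autoregressive blank infilling.",
        "The cafeteria menu changes every week.",
    ],
    "https://example.org/cooking": [
        "Blanch the vegetables before freezing them.",
    ],
    "https://example.org/retrieval": [
        "A dense retriever embeds the question and each passage into vectors.",
        "A language model can be pretrained on web text.",
    ],
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def coarse_search(question, pages, max_pages=2):
    """Stage 1 (coarse): stand-in for a web search engine.

    Ranks whole pages by how many distinct question words they contain.
    """
    question_words = set(tokenize(question))
    scored = []
    for url, passages in pages.items():
        page_words = set(tokenize(" ".join(passages)))
        overlap = len(question_words & page_words)
        if overlap:
            scored.append((overlap, url))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [url for _, url in scored[:max_pages]]


def embed(text):
    """Toy stand-in for a dense encoder: a bag-of-words count vector."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def fine_rank(question, urls, pages, top_k=2):
    """Stage 2 (fine): score every passage on the surviving pages."""
    question_vec = embed(question)
    candidates = [
        (cosine(question_vec, embed(passage)), url, passage)
        for url in urls
        for passage in pages[url]
    ]
    candidates.sort(key=lambda c: (-c[0], c[1], c[2]))
    return candidates[:top_k]


def retrieve(question, pages=PAGES, max_pages=2, top_k=2):
    """Run the coarse page search, then the fine passage ranking."""
    urls = coarse_search(question, pages, max_pages)
    return fine_rank(question, urls, pages, top_k)


if __name__ == "__main__":
    for score, url, passage in retrieve("What is a general language model?"):
        print(f"{score:.3f}  {url}  {passage}")
```

The split matters for cost: the cheap coarse stage discards most pages, so the more expensive passage-level scoring only runs on a handful of candidates.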

The ability of LLMs like GPT-3 to spontaneously adopt the correct references is the inspiration for this technique, which can be distilled to improve smaller dense retrievers. The second component is a bootstrapped generator: a GLM-10B-based response generator bootstrapped via LLM in-context learning and trained on quoted long-form QA samples. With adequate citation-based filtering, LLMs can provide high-quality data, instead of relying on expensive human experts to write answers as in WebGPT. The third is a scorer trained on user thumbs-up signals from online QA forums, which can learn the preferences of the human majority across different answers.
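One plausible way to turn thumbs-up counts into training data for such a scorer is to form preference pairs between answers to the same question. The sketch below illustrates that general idea only. The thresholds `min_answers` and `min_like_gap`, the function name, and the sample data are assumptions for illustration, not the filtering rules used by WebGLM.

```python
from itertools import combinations


def build_preference_pairs(threads, min_answers=2, min_like_gap=1):
    """Build (preferred, rejected) answer pairs from forum like counts.

    threads maps a question to a list of (answer_text, likes) tuples.
    Both thresholds are hypothetical knobs chosen for this sketch.
    """
    pairs = []
    for question, answers in threads.items():
        # A question with a single answer gives nothing to compare.
        if len(answers) < min_answers:
            continue
        for (text_a, likes_a), (text_b, likes_b) in combinations(answers, 2):
            # Skip pairs whose like counts are too close to signal a preference.
            if abs(likes_a - likes_b) < min_like_gap:
                continue
            if likes_a > likes_b:
                preferred, rejected = text_a, text_b
            else:
                preferred, rejected = text_b, text_a
            pairs.append(
                {"question": question, "preferred": preferred, "rejected": rejected}
            )
    return pairs


# Made-up sample data, for illustration only.
SAMPLE_THREADS = {
    "Why is the sky blue?": [
        ("Rayleigh scattering affects short wavelengths most.", 42),
        ("Because of the ocean.", 3),
        ("It reflects the sea.", 3),
    ],
    "A question with one answer": [
        ("The only answer.", 10),
    ],
}

if __name__ == "__main__":
    for pair in build_preference_pairs(SAMPLE_THREADS):
        print(pair["preferred"], ">", pair["rejected"])
```

A scorer could then be trained to assign the preferred answer a higher score than the rejected one. Answers with tied like counts produce no pair, since they carry no preference signal.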

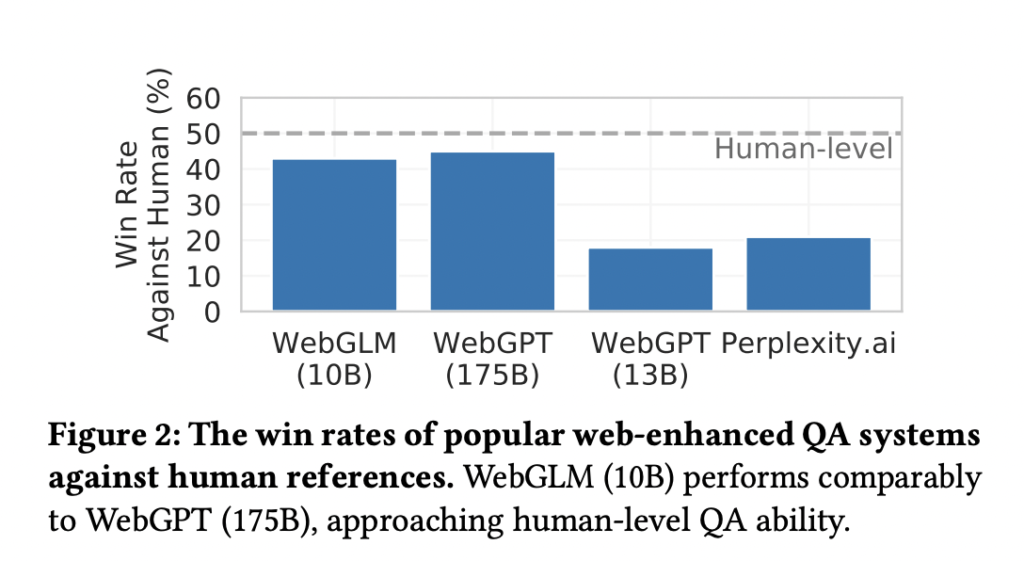

They demonstrate that a suitably constructed dataset can produce a high-quality scorer compared with WebGPT’s expert labeling. The results of their quantitative ablation tests and in-depth human evaluation show how efficient and effective the WebGLM system is. In particular, WebGLM (10B) outperforms WebGPT (175B) on their Turing test and outperforms the similarly sized WebGPT (13B). WebGLM is one of the best publicly available web-enhanced QA systems as of this submission, thanks to its improvement over the only publicly accessible system, Perplexity.ai. In conclusion, they make the following contributions in this paper:

• They build WebGLM, an efficient web-enhanced question-answering system with human preferences. It performs comparably to WebGPT (175B) and significantly better than WebGPT (13B), which is of a similar size. It also surpasses Perplexity.ai, a popular system powered by LLMs and search engines.
• They identify WebGPT’s limitations in real-world deployments. They propose a set of new designs and strategies that give WebGLM high accuracy while achieving efficiency and cost-effectiveness advantages over baseline systems.
• They formulate human evaluation metrics for evaluating web-enhanced QA systems. Extensive human evaluation and experiments demonstrate WebGLM’s strong capability and generate insights into the system’s future developments.

The code implementation is available on GitHub.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.