The Ultimate Open-Source Large Language Model Ecosystem

Image by Author

We are witnessing an upsurge in open-source language model ecosystems that offer comprehensive resources for individuals to create language applications for both research and commercial purposes.

Previously, we highlighted Open Assistant and OpenChatKit. Today, we will delve into GPT4ALL, which extends beyond specific use cases by offering comprehensive building blocks that enable anyone to develop a chatbot similar to ChatGPT.

GPT4ALL is a project that provides everything you need to work with state-of-the-art natural language models. You can access open-source models and datasets, train and run them with the provided code, use a web interface or a desktop app to interact with them, connect to the LangChain backend for distributed computing, and use the Python API for easy integration.

The developers recently released the Apache-2 licensed GPT4All-J chatbot, which was trained on a vast, curated corpus of assistant interactions comprising word problems, multi-turn dialogues, code, poems, songs, and stories. To make it more accessible, they have also released Python bindings and a Chat UI, enabling virtually anyone to run the model on a CPU.

You can try it yourself by installing the native chat client on your desktop.

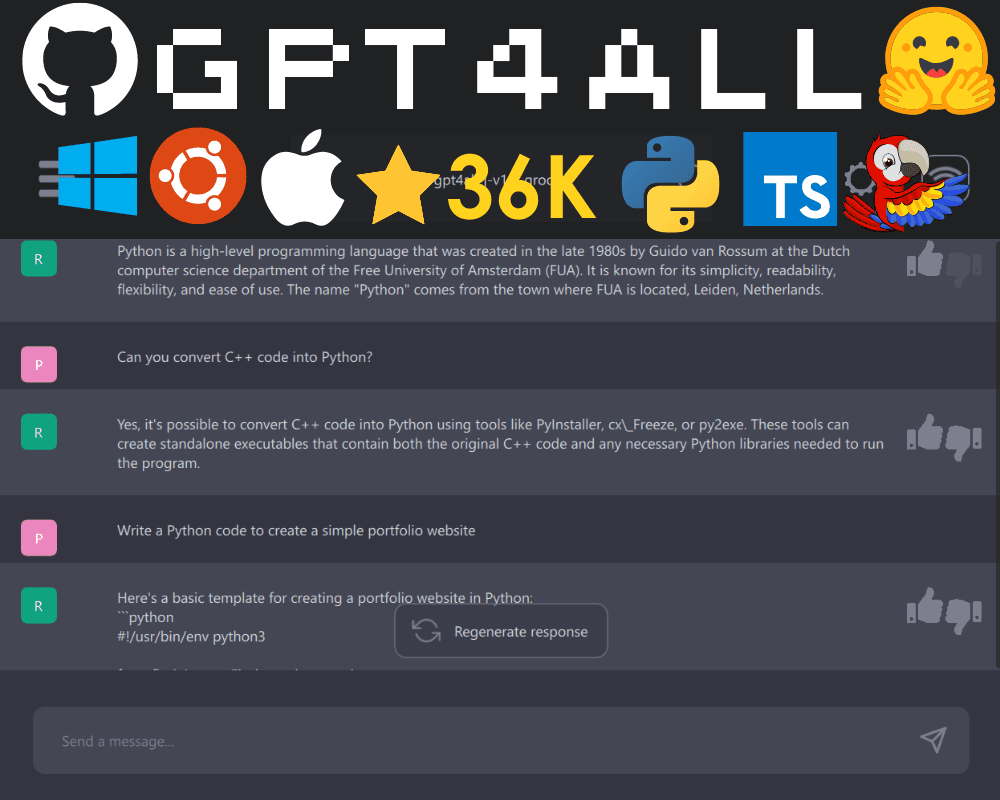

After that, run the GPT4ALL program and download the model of your choice. You can also download models manually here and install them in the location indicated by the model download dialog in the GUI.

Image by Author

I have had a great experience using it on my laptop, receiving fast and accurate responses. It is also user-friendly, making it accessible even to non-technical individuals.

GIF by Author

GPT4ALL comes with Python and TypeScript bindings, a web chat interface, and a LangChain backend.

In this section, we will look into the Python API to access the models using nomic-ai/pygpt4all.

- Install the Python GPT4ALL library using pip (pip install pygpt4all).

- Download a GPT4All model from http://gpt4all.io/models/ggml-gpt4all-l13b-snoozy.bin. You can also browse other models here.

- Create a text callback function, load the model, and pass a prompt to the model.generate() function to generate text. Check out the library documentation to learn more.

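    from pygpt4all.models.gpt4all import GPT4All

    def new_text_callback(text):
        # Print each generated chunk as it arrives, without a trailing newline.
        print(text, end="")

    # Load the model file downloaded in the previous step.
    model = GPT4All("./models/ggml-gpt4all-l13b-snoozy.bin")

    # Generate up to 55 tokens, streaming the output through the callback.
    model.generate("Once upon a time, ", n_predict=55, new_text_callback=new_text_callback)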
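The callback above prints each piece of text as soon as it arrives. If you would rather keep the full response as a string, one option is to collect the pieces in a list and join them at the end. The sketch below assumes only that the callback is called once per generated chunk, as in the example above. `make_collector` is a helper name introduced here for illustration, and `fake_generate` is a hypothetical stand-in for model.generate() so that the snippet runs without downloading a model.

```python
from typing import Callable, List, Tuple


def make_collector() -> Tuple[Callable[[str], None], List[str]]:
    """Return a callback plus the list it appends each text chunk to."""
    chunks: List[str] = []

    def new_text_callback(text: str) -> None:
        chunks.append(text)

    return new_text_callback, chunks


def fake_generate(prompt: str, new_text_callback: Callable[[str], None]) -> None:
    """Hypothetical stand-in for model.generate(): emits fixed chunks."""
    for piece in ["there ", "was ", "a ", "model."]:
        new_text_callback(piece)


callback, chunks = make_collector()
fake_generate("Once upon a time, ", new_text_callback=callback)

# Join the streamed chunks into a single string.
story = "".join(chunks)
print(story)
```

With the real library, you would pass `callback` as the new_text_callback argument of model.generate() instead of calling `fake_generate`.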

Moreover, you can download the model and run inference using transformers. Just provide the model name and the version. In our case, we are accessing the latest and improved v1.3-groovy model.

    from transformers import AutoModelForCausalLM

    # Download (or load from cache) the v1.3-groovy revision of GPT4All-J.
    model = AutoModelForCausalLM.from_pretrained(
        "nomic-ai/gpt4all-j", revision="v1.3-groovy"
    )
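Loading the model is only the first half of inference. The sketch below shows one way the remaining steps might look with the standard transformers tokenizer and generate() workflow. It assumes that the nomic-ai/gpt4all-j repository also ships tokenizer files for the same revision and that PyTorch is installed. The function name `generate_text` and its default values are illustrative, not part of the GPT4All project.

```python
def generate_text(
    prompt: str,
    model_name: str = "nomic-ai/gpt4all-j",
    revision: str = "v1.3-groovy",
    max_new_tokens: int = 50,
) -> str:
    """Load the model and tokenizer, then return the decoded completion."""
    # Imported here so the heavy dependency is only needed when this runs.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: tokenizer files are published alongside the model weights.
    tokenizer = AutoTokenizer.from_pretrained(model_name, revision=revision)
    model = AutoModelForCausalLM.from_pretrained(model_name, revision=revision)

    # Tokenize the prompt into PyTorch tensors and generate a continuation.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # The decoded text contains the prompt followed by the new tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling generate_text("Once upon a time, ") downloads the weights on first use, which can take a while and needs a machine with plenty of memory.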

The nomic-ai/gpt4all repository comes with source code for training and inference, model weights, a dataset, and documentation. You can start by trying a few models on your own and then integrate them using a Python client or LangChain.

GPT4ALL provides a CPU-quantized GPT4All model checkpoint. To access it, we have to:

- Download the gpt4all-lora-quantized.bin file from Direct Link or [Torrent-Magnet].

- Clone this repository and move the downloaded bin file to the chat folder.

- Run the appropriate command to access the model:

  - M1 Mac/OSX: cd chat;./gpt4all-lora-quantized-OSX-m1

  - Linux: cd chat;./gpt4all-lora-quantized-linux-x86

  - Windows (PowerShell): cd chat;./gpt4all-lora-quantized-win64.exe

  - Intel Mac/OSX: cd chat;./gpt4all-lora-quantized-OSX-intel
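If you script this step, the choice of binary can be made from Python. The sketch below maps an operating system and CPU architecture to the four binary names listed above. The dictionary keys are assumptions about what platform.system() and platform.machine() typically report on each platform, and `pick_binary` and `current_binary` are helper names introduced here for illustration.

```python
import platform

# Binary names come from the list above; the (system, machine) keys are
# assumptions about typical platform.system()/platform.machine() values.
BINARIES = {
    ("Darwin", "arm64"): "gpt4all-lora-quantized-OSX-m1",
    ("Darwin", "x86_64"): "gpt4all-lora-quantized-OSX-intel",
    ("Linux", "x86_64"): "gpt4all-lora-quantized-linux-x86",
    ("Windows", "AMD64"): "gpt4all-lora-quantized-win64.exe",
}


def pick_binary(system: str, machine: str) -> str:
    """Return the chat binary name for a platform, or raise if unlisted."""
    try:
        return BINARIES[(system, machine)]
    except KeyError:
        raise ValueError(
            f"No prebuilt binary listed for {system}/{machine}"
        ) from None


def current_binary() -> str:
    """Look up the binary for the machine this script is running on."""
    return pick_binary(platform.system(), platform.machine())


print(pick_binary("Linux", "x86_64"))
```

On your own machine, `current_binary()` returns the file to run from the chat folder, or raises ValueError if the platform is not one of the four listed.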

You can also head to Hugging Face Spaces and try out the Gpt4all demo. It is not official, but it is a start.

Image from Gpt4all

Resources:

GPT4ALL Backend: GPT4All (LangChain 0.0.154)

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.