Announcing New Tools for Building with Generative AI on AWS

The seeds of a machine learning (ML) paradigm shift have existed for decades, but with the ready availability of scalable compute capacity, a massive proliferation of data, and the rapid advancement of ML technologies, customers across industries are transforming their businesses. Just recently, generative AI applications like ChatGPT have captured widespread attention and imagination. We are truly at an exciting inflection point in the widespread adoption of ML, and we believe most customer experiences and applications will be reinvented with generative AI.

AI and ML have been a focus for Amazon for over 20 years, and many of the capabilities customers use with Amazon are driven by ML. Our e-commerce recommendations engine is driven by ML; the paths that optimize robotic picking routes in our fulfillment centers are driven by ML; and our supply chain, forecasting, and capacity planning are informed by ML. Prime Air (our drones) and the computer vision technology in Amazon Go (our physical retail experience that lets consumers select items off a shelf and leave the store without having to formally check out) use deep learning. Alexa, powered by more than 30 different ML systems, helps customers billions of times each week to manage smart homes, shop, get information and entertainment, and more. We have thousands of engineers at Amazon committed to ML, and it’s a big part of our heritage, current ethos, and future.

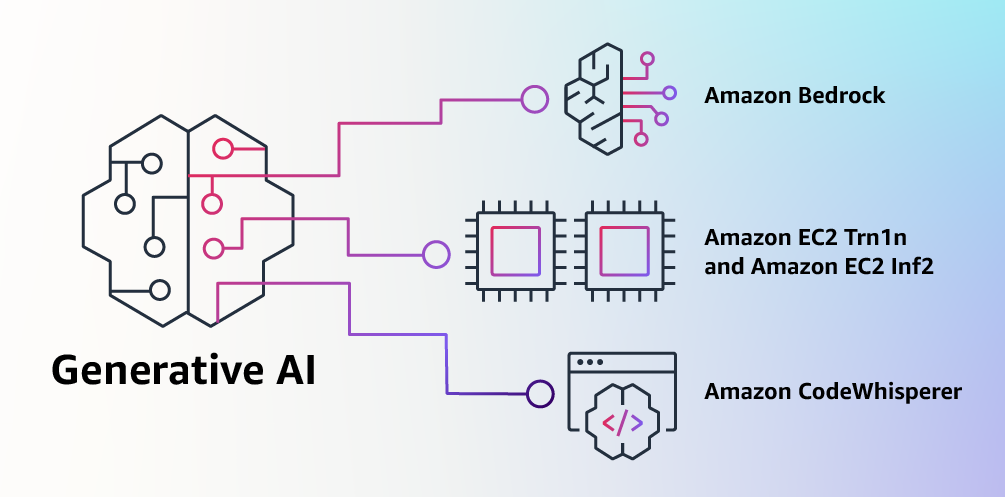

At AWS, we have played a key role in democratizing ML and making it accessible to anyone who wants to use it, including more than 100,000 customers of all sizes and industries. AWS has the broadest and deepest portfolio of AI and ML services at all three layers of the stack. We’ve invested and innovated to offer the most performant, scalable infrastructure for cost-effective ML training and inference; developed Amazon SageMaker, which is the easiest way for all developers to build, train, and deploy models; and launched a wide range of services that allow customers to add AI capabilities like image recognition, forecasting, and intelligent search to applications with a simple API call. This is why customers like Intuit, Thomson Reuters, AstraZeneca, Ferrari, Bundesliga, 3M, and BMW, as well as thousands of startups and government agencies around the world, are transforming themselves, their industries, and their missions with ML. We take the same democratizing approach to generative AI: we work to take these technologies out of the realm of research and experiments and extend their availability far beyond a handful of startups and large, well-funded tech companies. That’s why today I’m excited to announce several new innovations that will make it easy and practical for our customers to use generative AI in their businesses.

Building with Generative AI on AWS

Generative AI and foundation models

Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. Like all AI, generative AI is powered by ML models: very large models that are pre-trained on vast amounts of data and commonly referred to as Foundation Models (FMs). Recent advancements in ML (specifically the invention of the transformer-based neural network architecture) have led to the rise of models that contain billions of parameters or variables. To give a sense for the change in scale, the largest pre-trained model in 2019 was 330M parameters. Now, the largest models are more than 500B parameters, a 1,600x increase in size in just a few years. Today’s FMs, such as the large language models (LLMs) GPT3.5 or BLOOM, and the text-to-image model Stable Diffusion from Stability AI, can perform a wide range of tasks that span multiple domains, like writing blog posts, generating images, solving math problems, engaging in dialog, and answering questions based on a document. The size and general-purpose nature of FMs make them different from traditional ML models, which typically perform specific tasks, like analyzing text for sentiment, classifying images, and forecasting trends.

FMs can perform so many more tasks because they contain such a large number of parameters that make them capable of learning complex concepts. And through their pre-training exposure to internet-scale data in all its various forms and myriad patterns, FMs learn to apply their knowledge within a wide range of contexts. While the capabilities and resulting possibilities of a pre-trained FM are amazing, customers get really excited because these generally capable models can also be customized to perform domain-specific functions that are differentiating to their businesses, using only a small fraction of the data and compute required to train a model from scratch. The customized FMs can create a unique customer experience, embodying the company’s voice, style, and services across a wide variety of consumer industries, like banking, travel, and healthcare. For instance, a financial firm that needs to auto-generate a daily activity report for internal circulation using all the relevant transactions can customize the model with proprietary data, which will include past reports, so that the FM learns how these reports should read and what data was used to generate them.

The potential of FMs is incredibly exciting. But we are still in the very early days. While ChatGPT has been the first broad generative AI experience to catch customers’ attention, most folks studying generative AI have quickly come to realize that several companies have been working on FMs for years, and there are several different FMs available, each with unique strengths and characteristics. As we’ve seen over the years with fast-moving technologies, and in the evolution of ML, things change rapidly. We expect new architectures to arise in the future, and this diversity of FMs will set off a wave of innovation. We’re already seeing new application experiences never seen before. AWS customers have asked us how they can quickly take advantage of what is out there today (and what is likely coming tomorrow) and quickly begin using FMs and generative AI within their businesses and organizations to drive new levels of productivity and transform their offerings.

Announcing Amazon Bedrock and Amazon Titan models, the easiest way to build and scale generative AI applications with FMs

Customers have told us there are a few big things standing in their way today. First, they need a straightforward way to find and access high-performing FMs that give outstanding results and are best suited for their purposes. Second, customers want integration into applications to be seamless, without having to manage huge clusters of infrastructure or incur large costs. Finally, customers want it to be easy to take the base FM and build differentiated apps using their own data (a little data or a lot). Since the data customers want to use for customization is incredibly valuable IP, they need it to stay completely protected, secure, and private during that process, and they want control over how their data is shared and used.

We took all of that feedback from customers, and today we are excited to announce Amazon Bedrock, a new service that makes FMs from AI21 Labs, Anthropic, Stability AI, and Amazon accessible via an API. Bedrock is the easiest way for customers to build and scale generative AI-based applications using FMs, democratizing access for all builders. Bedrock will offer the ability to access a range of powerful FMs for text and images (including Amazon’s Titan FMs, which consist of two new LLMs we’re also announcing today) through a scalable, reliable, and secure AWS managed service. With Bedrock’s serverless experience, customers can easily find the right model for what they’re trying to get done, get started quickly, privately customize FMs with their own data, and easily integrate and deploy them into their applications using the AWS tools and capabilities they are familiar with (including integrations with Amazon SageMaker ML features like Experiments to test different models and Pipelines to manage their FMs at scale) without having to manage any infrastructure.

Bedrock customers can choose from some of the most cutting-edge FMs available today. This includes the Jurassic-2 family of multilingual LLMs from AI21 Labs, which follow natural language instructions to generate text in Spanish, French, German, Portuguese, Italian, and Dutch. Claude, Anthropic’s LLM, can perform a wide variety of conversational and text processing tasks and is based on Anthropic’s extensive research into training honest and responsible AI systems. Bedrock also makes it easy to access Stability AI’s suite of text-to-image foundation models, including Stable Diffusion (the most popular of its kind), which is capable of generating unique, realistic, high-quality images, art, logos, and designs.

One of the most important capabilities of Bedrock is how easy it is to customize a model. Customers simply point Bedrock at a few labeled examples in Amazon S3, and the service can fine-tune the model for a particular task without having to annotate large volumes of data (as few as 20 examples is enough). Imagine a content marketing manager who works at a leading fashion retailer and needs to develop fresh, targeted ad and campaign copy for an upcoming new line of handbags. To do this, they provide Bedrock a few labeled examples of their best-performing taglines from past campaigns, along with the associated product descriptions, and Bedrock will automatically start generating effective social media, display ad, and web copy for the new handbags. None of the customer’s data is used to train the underlying models, and since all data is encrypted and does not leave a customer’s Virtual Private Cloud (VPC), customers can trust that their data will remain private and confidential.

Bedrock is now in limited preview, and customers like Coda are excited about how fast their development teams have gotten up and running. Shishir Mehrotra, Co-founder and CEO of Coda, says, “As a longtime happy AWS customer, we’re excited about how Amazon Bedrock can bring quality, scalability, and performance to Coda AI. Since all our data is already on AWS, we are able to quickly incorporate generative AI using Bedrock, with all the security and privacy we need to protect our data built-in. With over tens of thousands of teams running on Coda, including large teams like Uber, the New York Times, and Square, reliability and scalability are really important.”

We have been previewing Amazon’s new Titan FMs with a few customers before we make them available more broadly in the coming months. We’ll initially have two Titan models. The first is a generative LLM for tasks such as summarization, text generation (for example, creating a blog post), classification, open-ended Q&A, and information extraction. The second is an embeddings LLM that translates text inputs (words, phrases, or possibly large units of text) into numerical representations (known as embeddings) that contain the semantic meaning of the text. While this LLM will not generate text, it is useful for applications like personalization and search because by comparing embeddings the model will produce more relevant and contextual responses than word matching. In fact, Amazon.com’s product search capability uses a similar embeddings model among others to help customers find the products they’re looking for. To continue supporting best practices in the responsible use of AI, Titan FMs are built to detect and remove harmful content in the data, reject inappropriate content in the user input, and filter the models’ outputs that contain inappropriate content (such as hate speech, profanity, and violence).
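To make the idea of comparing embeddings concrete, here is a minimal sketch in Python. It does not call Titan or any AWS API: the three-dimensional vectors, the `CATALOG` dictionary, and the helper names are all invented for illustration, and real embeddings have hundreds or thousands of dimensions. The sketch ranks catalog items by cosine similarity to a query vector, which is one common way to compare embeddings.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, hand-written for this example only.
CATALOG = {
    "leather handbag": [0.9, 0.1, 0.0],
    "canvas tote bag": [0.8, 0.3, 0.1],
    "running shoes": [0.1, 0.9, 0.2],
}


def rank_by_similarity(query_vector, catalog):
    """Return catalog item names ordered from most to least similar."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in catalog.items()
    ]
    return [name for _, name in sorted(scored, reverse=True)]


# Pretend this vector is the embedding of the search text "purse".
query = [0.85, 0.15, 0.05]
ranking = rank_by_similarity(query, CATALOG)
print(ranking)
```

The query text "purse" shares no words with "leather handbag", so plain word matching would miss it, while the vector comparison still places the two bag items ahead of the shoes.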

Bedrock makes the power of FMs accessible to companies of all sizes so that they can accelerate the use of ML across their organizations and build their own generative AI applications, because it will be easy for all developers. We think Bedrock will be a massive step forward in democratizing FMs, and our partners like Accenture, Deloitte, Infosys, and Slalom are building practices to help enterprises go faster with generative AI. Independent Software Vendors (ISVs) like C3 AI and Pega are excited to leverage Bedrock for easy access to its great selection of FMs with all of the security, privacy, and reliability they expect from AWS.

Announcing the general availability of Amazon EC2 Trn1n instances powered by AWS Trainium and Amazon EC2 Inf2 instances powered by AWS Inferentia2, the most cost-effective cloud infrastructure for generative AI

Whatever customers are trying to do with FMs (running them, building them, customizing them), they need the most performant, cost-effective infrastructure that is purpose-built for ML. Over the last five years, AWS has been investing in our own silicon to push the envelope on performance and price performance for demanding workloads like ML training and inference, and our AWS Trainium and AWS Inferentia chips offer the lowest cost for training models and running inference in the cloud. This ability to maximize performance and control costs by choosing the optimal ML infrastructure is why leading AI startups, like AI21 Labs, Anthropic, Cohere, Grammarly, Hugging Face, Runway, and Stability AI, run on AWS.

Trn1 instances, powered by Trainium, can deliver up to 50% savings on training costs over any other EC2 instance, and are optimized to distribute training across multiple servers connected with 800 Gbps of second-generation Elastic Fabric Adapter (EFA) networking. Customers can deploy Trn1 instances in UltraClusters that can scale up to 30,000 Trainium chips (more than 6 exaflops of compute) located in the same AWS Availability Zone with petabit-scale networking. Many AWS customers, including Helixon, Money Forward, and the Amazon Search team, use Trn1 instances to help reduce the time required to train the largest-scale deep learning models from months to weeks or even days while lowering their costs. 800 Gbps is a lot of bandwidth, but we have continued to innovate to deliver more, and today we are announcing the general availability of new, network-optimized Trn1n instances, which offer 1600 Gbps of network bandwidth and are designed to deliver 20% higher performance over Trn1 for large, network-intensive models.

Today, most of the time and money spent on FMs goes into training them. This is because many customers are only just starting to deploy FMs into production. However, in the future, when FMs are deployed at scale, most costs will be associated with running the models and doing inference. While you typically train a model periodically, a production application can be constantly generating predictions, known as inferences, potentially generating millions per hour. And these predictions need to happen in real time, which requires very low-latency and high-throughput networking. Alexa is a great example, with millions of requests coming in every minute, which accounts for 40% of all compute costs.

Because we knew that most of the future ML costs would come from running inferences, we prioritized inference-optimized silicon when we started investing in new chips a few years ago. In 2018, we announced Inferentia, the first purpose-built chip for inference. Every year, Inferentia helps Amazon run trillions of inferences and has saved companies like Amazon over a hundred million dollars in capital expense already. The results are impressive, and we see many opportunities to keep innovating as workloads will only increase in size and complexity as more customers integrate generative AI into their applications.

That’s why we’re announcing today the general availability of Inf2 instances powered by AWS Inferentia2, which are optimized specifically for large-scale generative AI applications with models containing hundreds of billions of parameters. Inf2 instances deliver up to 4x higher throughput and up to 10x lower latency compared to the prior-generation Inferentia-based instances. They also have ultra-high-speed connectivity between accelerators to support large-scale distributed inference. These capabilities drive up to 40% better inference price performance than other comparable Amazon EC2 instances and the lowest cost for inference in the cloud. Customers like Runway are seeing up to 2x higher throughput with Inf2 than comparable Amazon EC2 instances for some of their models. This high-performance, low-cost inference will enable Runway to introduce more features, deploy more complex models, and ultimately deliver a better experience for the millions of creators using Runway.

Announcing the general availability of Amazon CodeWhisperer, free for individual developers

We know that building with the right FMs and running generative AI applications at scale on the most performant cloud infrastructure will be transformative for customers. The new wave of experiences will also be transformative for users. With generative AI built-in, users will be able to have more natural and seamless interactions with applications and systems. Think of how we can unlock our mobile phones just by looking at them, without needing to know anything about the powerful ML models that make this feature possible.

One area where we foresee the use of generative AI growing rapidly is in coding. Software developers today spend a significant amount of their time writing code that is pretty straightforward and undifferentiated. They also spend a lot of time trying to keep up with a complex and ever-changing tool and technology landscape. All of this leaves developers less time to develop new, innovative capabilities and services. Developers try to overcome this by copying and modifying code snippets from the web, which can result in inadvertently copying code that doesn’t work, contains security vulnerabilities, or doesn’t track usage of open source software. And, ultimately, searching and copying still takes time away from the good stuff.

Generative AI can take this heavy lifting out of the equation by “writing” much of the undifferentiated code, allowing developers to build faster while freeing them up to focus on the more creative aspects of coding. This is why, last year, we announced the preview of Amazon CodeWhisperer, an AI coding companion that uses an FM under the hood to radically improve developer productivity by generating code suggestions in real time based on developers’ comments in natural language and prior code in their Integrated Development Environment (IDE). Developers can simply tell CodeWhisperer to do a task, such as “parse a CSV string of songs,” and ask it to return a structured list based on values such as artist, title, and highest chart rank. CodeWhisperer provides a productivity boost by generating an entire function that parses the string and returns the list as specified. Developer response to the preview has been overwhelmingly positive, and we continue to believe that helping developers code could end up being one of the most powerful uses of generative AI we’ll see in the coming years. During the preview, we ran a productivity challenge, and participants who used CodeWhisperer completed tasks 57% faster, on average, and were 27% more likely to complete them successfully than those who didn’t use CodeWhisperer. This is a giant leap forward in developer productivity, and we believe this is only the beginning.
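For a sense of what such a task looks like in practice, here is a hand-written Python sketch of the song-parsing function described above. It is not actual CodeWhisperer output; the function name `parse_songs`, the column names, and the sample data are assumptions chosen for this illustration. The developer would typically write only the comment and let the tool propose the body.

```python
import csv
import io


# parse a CSV string of songs and return a list of dicts with
# artist, title, and highest chart rank, best-ranked song first
def parse_songs(csv_text):
    """Parse CSV text with a header row into a list of song dictionaries."""
    reader = csv.DictReader(io.StringIO(csv_text))
    songs = [
        {
            "artist": row["artist"].strip(),
            "title": row["title"].strip(),
            "highest_chart_rank": int(row["highest_chart_rank"]),
        }
        for row in reader
    ]
    # A lower rank number means a better chart position.
    return sorted(songs, key=lambda song: song["highest_chart_rank"])


# Hypothetical sample input, invented for this example.
sample = (
    "artist,title,highest_chart_rank\n"
    "Artist A,Song One,3\n"
    "Artist B,Song Two,1\n"
)
songs = parse_songs(sample)
print(songs)
```

Using the standard library `csv` module rather than splitting on commas keeps the sketch correct for quoted fields that contain commas, such as song titles.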

Today, we’re excited to announce the general availability of Amazon CodeWhisperer for Python, Java, JavaScript, TypeScript, and C#, plus ten new languages, including Go, Kotlin, Rust, PHP, and SQL. CodeWhisperer can be accessed from IDEs such as VS Code, IntelliJ IDEA, AWS Cloud9, and many more via the AWS Toolkit IDE extensions. CodeWhisperer is also available in the AWS Lambda console. In addition to learning from the billions of lines of publicly available code, CodeWhisperer has been trained on Amazon code. We believe CodeWhisperer is now the most accurate, fastest, and most secure way to generate code for AWS services, including Amazon EC2, AWS Lambda, and Amazon S3.

Developers aren’t truly going to be more productive if code suggested by their generative AI tool contains hidden security vulnerabilities or fails to handle open source responsibly. CodeWhisperer is the only AI coding companion with built-in security scanning (powered by automated reasoning) for finding and suggesting remediations for hard-to-detect vulnerabilities, such as those in the top ten Open Worldwide Application Security Project (OWASP), those that don’t meet crypto library best practices, and others. To help developers code responsibly, CodeWhisperer filters out code suggestions that might be considered biased or unfair, and CodeWhisperer is the only coding companion that can filter and flag code suggestions that resemble open source code that customers may want to reference or license for use.

We know generative AI is going to change the game for developers, and we want it to be useful to as many as possible. This is why CodeWhisperer is free for all individual users with no qualifications or time limits for generating code! Anyone can sign up for CodeWhisperer with just an email account and become more productive within minutes. You don’t even have to have an AWS account. For business users, we’re offering a CodeWhisperer Professional Tier that includes administration features like single sign-on (SSO) with AWS Identity and Access Management (IAM) integration, as well as higher limits on security scanning.

Building powerful applications like CodeWhisperer is transformative for developers and all our customers. We have a lot more coming, and we are excited about what you will build with generative AI on AWS. Our mission is to make it possible for developers of all skill levels and for organizations of all sizes to innovate using generative AI. This is just the beginning of what we believe will be the next wave of ML powering new possibilities for you.

Resources

Check out the following resources to learn more about generative AI on AWS and these announcements:

About the author

Swami Sivasubramanian is Vice President of Data and Machine Learning at AWS. In this role, Swami oversees all AWS Database, Analytics, and AI & Machine Learning services. His team’s mission is to help organizations put their data to work with a complete, end-to-end data solution to store, access, analyze, visualize, and predict.
