Researchers From ETH Zurich and Microsoft Propose X-Avatar: An Animatable Implicit Human Avatar Model Capable of Capturing Human Body Pose and Facial Expressions

Posture, look, facial expressions, hand gestures, and so on, collectively known as "body language," have been the subject of many academic investigations. Accurately recording, interpreting, and creating non-verbal signals could greatly enhance the realism of avatars in telepresence, augmented reality (AR), and virtual reality (VR) settings.

Current state-of-the-art avatar models, such as those in the SMPL family, can accurately depict different human body shapes in realistic poses. However, they are limited by the mesh-based representations they use and by the quality of the 3D mesh. Moreover, such models often simulate only bare bodies and do not depict clothing or hair, which reduces the realism of the results.

Researchers from ETH Zurich and Microsoft introduce X-Avatar, an expressive implicit human avatar model that aims to capture the full range of human expression in digital avatars for realistic telepresence, AR, and VR environments. It can capture high-fidelity human body and hand movements, facial expressions, and other appearance traits. The method can learn from either full 3D scans or RGB-D data, producing complete models of bodies, hands, facial expressions, and appearance.

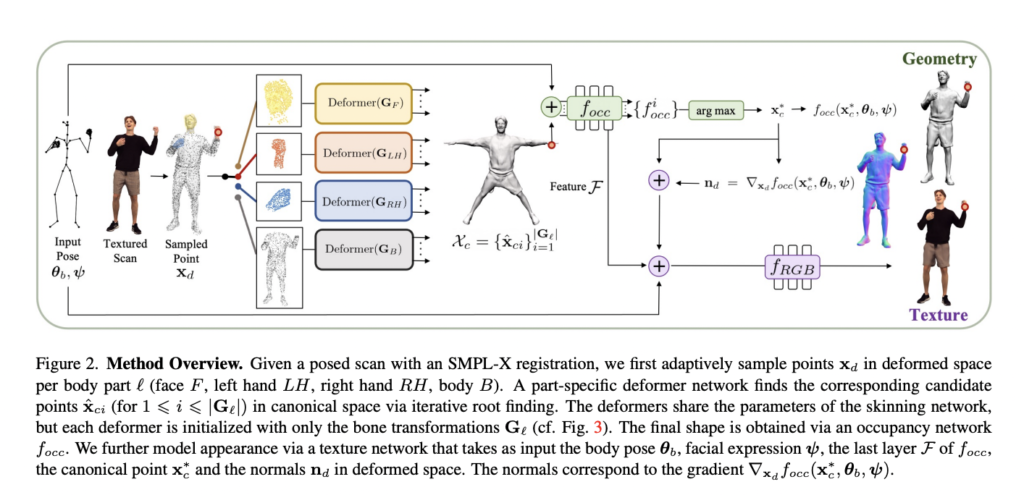

The researchers propose a part-aware learned forward skinning module that can be driven by the SMPL-X parameter space, enabling expressive animation of X-Avatars. To train the neural shape and deformation fields efficiently, they present novel part-aware sampling and initialization strategies. This yields higher-fidelity results, particularly for smaller body parts, while keeping training efficient despite the increased number of articulated bones. To capture the avatar's appearance with high-frequency details, they augment the geometry and deformation fields with a texture network conditioned on pose, facial expression, geometry, and the normals of the deformed surface. The researchers demonstrate empirically that the method achieves superior quantitative and qualitative results on the animation task compared to strong baselines in both data domains (3D scans and RGB-D).

To support future research on expressive avatars, the researchers present a new dataset, dubbed X-Humans, with 233 sequences of high-quality textured scans from 20 subjects, for about 35,500 data frames. X-Avatar proposes a human model characterized by articulated neural implicit surfaces that accommodate the varied topology of clothed humans and achieve improved geometric resolution and increased fidelity of overall appearance. The study's authors define three distinct neural fields: one for modeling geometry using an implicit occupancy network, another for modeling deformation using learned forward linear blend skinning (LBS) with continuous skinning weights, and a third for modeling appearance as an RGB color value.
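The three fields can be written compactly as follows. This is only a sketch in notation chosen here for illustration: the symbols $f$, $w_i$, $\mathbf{B}_i$, $c$, $n_b$, and the conditioning vector $\mathbf{z}$ are assumptions and not necessarily the paper's own notation. The deformation line is the standard linear blend skinning formula.

$$
\begin{aligned}
\text{geometry:}\quad & f(\mathbf{x}_c) \in [0, 1] \\
\text{deformation:}\quad & \mathbf{x}_d = \sum_{i=1}^{n_b} w_i(\mathbf{x}_c)\,\mathbf{B}_i\,\mathbf{x}_c, \qquad w_i(\mathbf{x}_c) \ge 0,\quad \sum_{i=1}^{n_b} w_i(\mathbf{x}_c) = 1 \\
\text{appearance:}\quad & c(\mathbf{x}_c, \mathbf{z}) \in [0, 1]^3
\end{aligned}
$$

Here $\mathbf{x}_c$ is a point in canonical space (in homogeneous coordinates), $\mathbf{x}_d$ is the same point after posing, $\mathbf{B}_i$ is the rigid transform of bone $i$, and $\mathbf{z}$ stands for whatever conditioning the color field receives. "Continuous" skinning weights means that $w_i$ is defined everywhere in canonical space rather than only at mesh vertices.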

X-Avatar can take either a posed 3D scan or an RGB-D image as input. Its design includes a shape network that models geometry in canonical space and a deformation network that uses learned linear blend skinning (LBS) to build correspondences between canonical and deformed space.
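To make the idea of correspondences between canonical and deformed space concrete, here is a minimal 2D sketch in pure Python. It is not the authors' implementation: the two-bone setup, the sigmoid weight function, and the plain fixed-point search are all hypothetical stand-ins (the real model learns its weights with a network and would use a more robust root-finding scheme).

```python
import math

def skinning_weights(p):
    """Toy continuous skinning weights for two bones (hypothetical stand-in
    for a learned weight field). They are non-negative and sum to one."""
    w1 = 1.0 / (1.0 + math.exp(-p[0]))  # bone 1 dominates for large x
    return [1.0 - w1, w1]

def apply_bone(bone, p):
    """Apply a 2D rigid transform given as (angle_radians, (tx, ty))."""
    angle, (tx, ty) = bone
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def forward_lbs(p_canonical, bones):
    """Warp a canonical point: weighted blend of the per-bone transformed points."""
    weights = skinning_weights(p_canonical)
    moved = [apply_bone(b, p_canonical) for b in bones]
    x = sum(w * m[0] for w, m in zip(weights, moved))
    y = sum(w * m[1] for w, m in zip(weights, moved))
    return (x, y)

def find_canonical(p_deformed, bones, steps=50):
    """Search for the canonical point that forward_lbs maps onto p_deformed.
    Uses a simple fixed-point update, which only converges for mild deformations."""
    guess = p_deformed
    for _ in range(steps):
        fx, fy = forward_lbs(guess, bones)
        guess = (guess[0] - (fx - p_deformed[0]),
                 guess[1] - (fy - p_deformed[1]))
    return guess

# Bone 0 stays fixed; bone 1 shifts points 0.2 units to the right.
bones = [(0.0, (0.0, 0.0)), (0.0, (0.2, 0.0))]
target = forward_lbs((0.5, 1.0), bones)    # deform a known canonical point
recovered = find_canonical(target, bones)  # then recover it from the deformed one
```

Because the weights depend on the canonical point, the forward warp has no closed-form inverse, which is why the canonical correspondence has to be searched for iteratively.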

The researchers start from the parameter space of SMPL-X, an SMPL extension that captures the shape, appearance, and deformations of full-body humans with particular attention to hand poses and facial expressions, in order to generate expressive and controllable human avatars. A human model described by articulated neural implicit surfaces represents the varied topology of clothed humans. At the same time, the novel part-aware initialization and sampling strategies considerably enhance the realism of the result, with the sampling strategy raising the sampling rate for smaller body parts.
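The effect of raising the sampling rate for smaller body parts can be illustrated with a short sketch. The part names, point counts, and per-part budgets below are hypothetical and chosen only for illustration; the article does not state the ratios the authors use.

```python
import random
from collections import Counter

def part_aware_sample(points_by_part, budget_by_part, seed=0):
    """Draw a fixed number of training points from each body part.

    Sampling uniformly over the whole surface would pick points in proportion
    to part size, so hands and face would rarely be seen. Giving every part
    its own budget (hypothetical numbers here) raises their sampling rate.
    """
    rng = random.Random(seed)
    samples = []
    for part, points in points_by_part.items():
        # Sample with replacement so small parts can still fill their budget.
        for _ in range(budget_by_part[part]):
            samples.append((part, rng.choice(points)))
    return samples

# Toy surface: the body has far more points than the hands or the face.
points_by_part = {
    "body": list(range(9000)),
    "hands": list(range(9000, 9800)),
    "face": list(range(9800, 10000)),
}
budget_by_part = {"body": 500, "hands": 300, "face": 200}

samples = part_aware_sample(points_by_part, budget_by_part)
counts = Counter(part for part, _ in samples)
```

In this toy setup the face holds 2% of the surface points but receives 20% of the samples, which is the kind of rebalancing the part-aware strategy is described as providing.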

The results show that X-Avatar can accurately capture human body and hand poses as well as facial expressions and appearance, allowing for the creation of more expressive and lifelike avatars. The team behind this work hopes that their method will inspire further research on giving AIs more personality.

Dataset Used

High-quality textured scans and SMPL[-X] registrations; 20 subjects; 233 sequences; 35,427 frames; body pose + hand gesture + facial expression; a wide range of clothing and hairstyles; a wide range of ages

Options

- There are several ways to train X-Avatars.

- From 3D scans: the scans used in training are shown at the upper right, and avatars driven by test poses are shown at the bottom.

- From RGB-D data: the data used in training is shown at the top, and avatars driven by test poses are shown at the bottom.

- The method recovers better hand articulation and facial expressions than other baselines on the animation task. X-Avatars can also be animated using motions recovered by PyMAF-X from monocular RGB videos.

Limitations

X-Avatar has difficulty modeling off-the-shoulder tops or loose lower-body garments (e.g., skirts). In addition, the researchers generally train only a single model per subject, so the ability to generalize beyond a single person still needs to be expanded.

Contributions

- X-Avatar is the first expressive implicit human avatar model that holistically captures body pose, hand pose, facial expressions, and appearance.

- Part-aware initialization and sampling strategies that take the underlying structure into account boost output quality and maintain training efficiency.

- X-Humans is a brand-new dataset of 233 sequences totaling about 35,500 frames of high-quality textured scans of 20 people displaying a wide range of body and hand motions and facial expressions.

According to the researchers, X-Avatar outperforms strong baselines at capturing body pose, hand pose, facial expressions, and overall appearance. Using the recently released X-Humans dataset, researchers have shown the method's

Check out the Paper, Project, and GitHub. All credit for this research goes to the researchers on this project.

Dhanshree Shenwai is a Computer Science Engineer with solid experience in FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today's evolving world that make everyone's life easier.