Researchers at Stanford Introduce SUQL: A Formal Query Language for Integrating Structured and Unstructured Information

Large Language Models (LLMs) have gained traction for their exceptional performance on a wide range of tasks. Recent research aims to improve their factuality by integrating external resources, including structured data and free text. However, many data sources, such as patient records and financial databases, contain a mix of both types of information. To answer a request like “Can you find me an Italian restaurant with a romantic atmosphere?”, an agent needs to combine the structured attribute cuisines with the free-text attribute reviews.

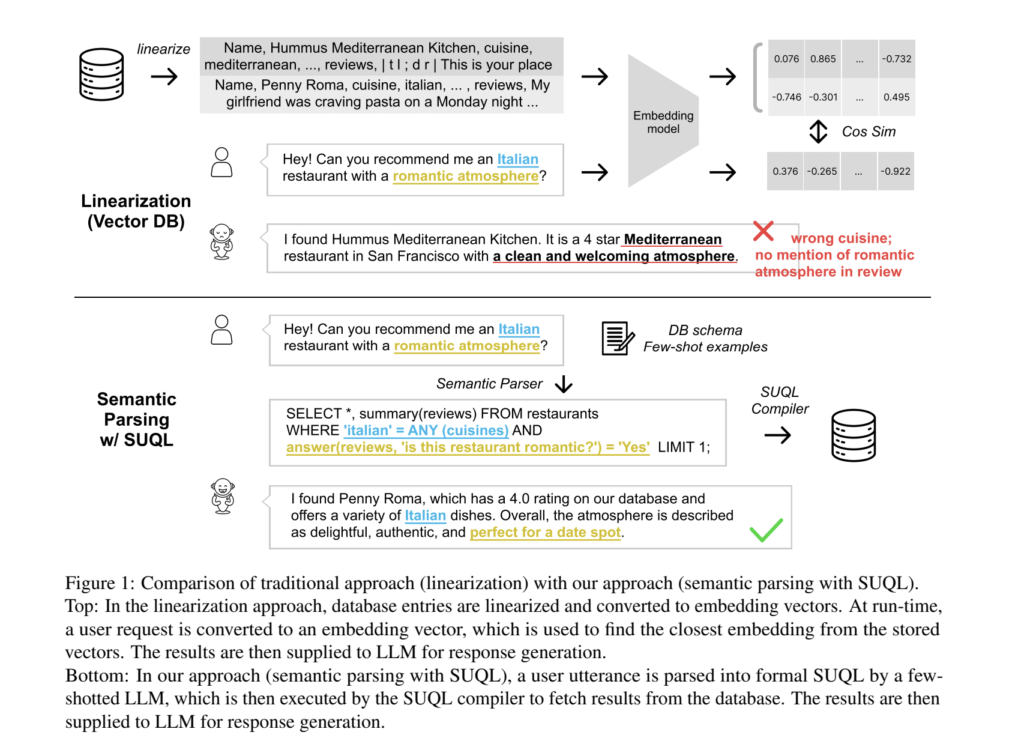

Previous chat systems typically use classifiers to route queries to specialized modules that handle structured data, unstructured data, or chitchat. However, this method falls short for questions that require both structured and free-text data. Another approach converts structured data into free text, which gives up SQL for database queries and limits the effectiveness of free-text retrievers. The need for hybrid data queries is underscored by datasets like HybridQA, whose questions require information from both structured and free-text sources. Prior efforts to ground question-answering systems on hybrid data either operate on small datasets, sacrifice the richness of structured data queries, or support only limited combinations of structured and unstructured knowledge queries.
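To make the linearization approach concrete, here is a minimal sketch of turning one structured row into a text snippet that a free-text retriever could index. The `linearize_row` helper, the `column: value` template, and the sample restaurant row are all hypothetical illustrations, not the format used in the paper.

```python
def linearize_row(row: dict) -> str:
    """Render one database row as plain text (hypothetical template).

    List-valued columns (e.g. multiple cuisines) are joined with commas;
    every other value is converted with str().
    """
    parts = []
    for column, value in row.items():
        if isinstance(value, list):
            value = ", ".join(str(v) for v in value)
        parts.append(f"{column}: {value}")
    return "; ".join(parts) + "."


snippet = linearize_row(
    {"name": "Trattoria Luna", "cuisines": ["italian", "pizza"], "rating": 4.5}
)
print(snippet)  # name: Trattoria Luna; cuisines: italian, pizza; rating: 4.5.
```

Once rows are flattened like this, a retriever can match them against review text, but precise operations such as filtering on `rating >= 4.5` or counting matches can no longer be expressed as SQL, which is the limitation described above.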

Stanford researchers introduce an approach to grounding conversational agents in hybrid data sources that uses both structured data queries and free-text retrieval techniques. They show empirically that users frequently ask questions spanning both structured and unstructured data in real-life conversations, with over 49% of queries requiring knowledge from both types. To improve expressiveness and precision, they propose SUQL (Structured and Unstructured Query Language), a formal language that augments SQL with primitives for processing free text, so that off-the-shelf retrieval models and LLMs can be combined with SQL semantics and operators.

SUQL’s design aims for expressiveness, accuracy, and efficiency. SUQL extends SQL with NLP operators such as SUMMARY and ANSWER, enabling full-spectrum queries over hybrid knowledge sources. Because LLMs are proficient at translating complex natural-language questions into SQL, building SUQL on top of SQL lets it handle complex queries. While SUQL queries can run on standard SQL compilers, a naive implementation may be inefficient. The paper also outlines SUQL’s free-text primitives, highlighting that, unlike retrieval-based methods, SUQL expresses queries comprehensively.
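The idea of SQL extended with free-text operators can be approximated with Python’s built-in `sqlite3` module, which lets you register Python functions as SQL functions. This is a toy sketch, not SUQL’s actual engine or syntax: the lowercase `answer` and `summary` functions, the single `cuisine` column, and the keyword-matching stubs that stand in for LLM calls are all assumptions made for illustration.

```python
import sqlite3


def answer(text: str, question: str) -> str:
    """Hypothetical stand-in for an LLM ANSWER call.

    Treats the last word of the question as a keyword and reports
    whether it appears in the text. A real system would prompt an LLM.
    """
    keyword = question.rstrip("?").split()[-1].lower()
    return "Yes" if keyword in text.lower() else "No"


def summary(text: str) -> str:
    """Hypothetical stand-in for an LLM SUMMARY call: keep the first sentence."""
    return text.split(".")[0].strip() + "."


conn = sqlite3.connect(":memory:")
conn.create_function("answer", 2, answer)
conn.create_function("summary", 1, summary)

conn.execute("CREATE TABLE restaurants (name TEXT, cuisine TEXT, reviews TEXT)")
conn.executemany(
    "INSERT INTO restaurants VALUES (?, ?, ?)",
    [
        ("Trattoria Luna", "italian", "Candlelit tables and a romantic patio. Great pasta."),
        ("Pizza Express Lane", "italian", "Fast counter service. Good for a quick lunch."),
        ("Le Jardin", "french", "Very romantic dining room. Pricey wine list."),
    ],
)

# A structured filter (cuisine) combined with a free-text predicate (answer)
# and a free-text projection (summary) in a single query.
query = """
SELECT name, summary(reviews)
FROM restaurants
WHERE cuisine = 'italian'
  AND answer(reviews, 'is this restaurant romantic?') = 'Yes'
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('Trattoria Luna', 'Candlelit tables and a romantic patio.')]
```

Note that this naive evaluation calls the free-text function on candidate rows one by one; with a real LLM behind `answer`, that per-row cost hints at why a naive implementation of SUQL may be inefficient.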

The researchers evaluate SUQL in two experiments: one on HybridQA, a question-answering dataset, and another on real restaurant data from Yelp.com. On HybridQA, an LLM using SUQL achieves 59.3% Exact Match (EM) and a 68.3% F1 score on the test set, within 8.9% EM and 7.1% F1 of the state of the art. In the real-life restaurant experiments, SUQL achieves 93.8% and 90.3% turn accuracy on single-turn and conversational queries, respectively, surpassing linearization-based methods by up to 36.8% and 26.9%.
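For readers unfamiliar with the two HybridQA metrics, the sketch below implements the common SQuAD-style definitions: Exact Match checks whether the normalized prediction equals the normalized gold answer, and F1 measures token overlap between them. Treat the normalization steps (lowercasing, stripping punctuation and English articles) as an assumption; HybridQA’s official evaluation script may differ in details.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower"))  # 1.0
print(f1_score("Eiffel Tower, Paris", "Eiffel Tower"))  # about 0.8
```

In the second example, two of the three predicted tokens match the gold answer (precision 2/3) and both gold tokens are recovered (recall 1), giving an F1 of about 0.8 even though Exact Match is 0. Dataset-level scores such as the 59.3% EM and 68.3% F1 reported above are averages of these per-question values.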

To conclude, the paper introduces SUQL as the first formal query language for hybrid knowledge corpora that encompass structured and unstructured data. Its innovation lies in integrating free-text primitives into a precise and succinct query framework. Applied to HybridQA with in-context learning, it achieves results within 8.9% of the SOTA, which is trained on 62K samples. Unlike prior methods, SUQL accommodates large databases and free-text corpora. Experiments on Yelp data demonstrate SUQL’s effectiveness, with a 90.3% success rate in satisfying user queries compared to 63.4% for linearization baselines.

Check out the Paper, Github, and Demo. All credit for this research goes to the researchers of this project.