Revolutionize Buyer Satisfaction with tailor-made reward fashions for what you are promoting on Amazon SageMaker

As extra highly effective giant language fashions (LLMs) are used to carry out quite a lot of duties with higher accuracy, the variety of purposes and companies which are being constructed with generative artificial intelligence (AI) can be rising. With nice energy comes duty, and organizations need to make it possible for these LLMs produce responses that align with their organizational values and supply the identical distinctive expertise they at all times supposed for his or her end-customers.

Evaluating AI-generated responses presents challenges. This submit discusses methods to align them with firm values and construct a customized reward mannequin utilizing Amazon SageMaker. By doing so, you possibly can present personalized buyer experiences that uniquely replicate your group’s model identification and ethos.

Challenges with out-of-the-box LLMs

Out-of-the-box LLMs present excessive accuracy, however typically lack customization for a corporation’s particular wants and end-users. Human suggestions varies in subjectivity throughout organizations and buyer segments. Gathering numerous, subjective human suggestions to refine LLMs is time-consuming and unscalable.

This submit showcases a reward modeling approach to effectively customise LLMs for a corporation by programmatically defining rewards features that seize preferences for mannequin habits. We exhibit an strategy to ship LLM outcomes tailor-made to a corporation with out intensive, continuous human judgement. The methods intention to beat customization and scalability challenges by encoding a corporation’s subjective high quality requirements right into a reward mannequin that guides the LLM to generate preferable outputs.

Goal vs. subjective human suggestions

Not all human suggestions is similar. We will categorize human suggestions into two sorts: goal and subjective.

Any human being who’s requested to guage the colour of the next containers would affirm that the left one is a white field and proper one is a black field. That is goal, and there are not any modifications to it by any means.

Figuring out whether or not an AI mannequin’s output is “nice” is inherently subjective. Contemplate the next coloration spectrum. If requested to explain the colours on the ends, individuals would supply diverse, subjective responses primarily based on their perceptions. One particular person’s white could also be one other’s grey.

This subjectivity poses a problem for bettering AI by way of human suggestions. In contrast to goal proper/mistaken suggestions, subjective preferences are nuanced and customized. The identical output may elicit reward from one particular person and criticism from one other. The hot button is acknowledging and accounting for the basic subjectivity of human preferences in AI coaching. Relatively than looking for elusive goal truths, we should present fashions publicity to the colourful range of human subjective judgment.

In contrast to conventional mannequin duties reminiscent of classification, which may be neatly benchmarked on take a look at datasets, assessing the standard of a sprawling conversational agent is extremely subjective. One human’s riveting prose is one other’s aimless drivel. So how ought to we refine these expansive language fashions when people intrinsically disagree on the hallmarks of a “good” response?

The hot button is gathering suggestions from a various crowd. With sufficient subjective viewpoints, patterns emerge on partaking discourse, logical coherence, and innocent content material. Fashions can then be tuned primarily based on broader human preferences. There’s a basic notion that reward fashions are sometimes related solely with Reinforcement Studying from Human Suggestions (RLHF). Reward modeling, the truth is, goes past RLHF, and is usually a highly effective instrument for aligning AI-generated responses with a corporation’s particular values and model identification.

Reward modeling

You’ll be able to select an LLM and have it generate quite a few responses to numerous prompts, after which your human labelers will rank these responses. It’s essential to have range in human labelers. Clear labeling pointers are crucial. With out specific standards, judgments can turn out to be arbitrary. Helpful dimensions embody coherence, relevance, creativity, factual correctness, logical consistency, and extra. Human labelers put these responses into classes and label them favourite to least favourite, as proven within the following instance. This instance showcases how totally different people understand these potential responses from the LLM when it comes to their most favourite (labeled as 1 on this case) and least favourite (labeled as 3 on this case). Every column is labeled 1, 2, or 3 from every human to suggest their most most popular and least most popular response from the LLM.

By compiling these subjective scores, patterns emerge on what resonates throughout readers. The aggregated human suggestions primarily trains a separate reward mannequin on writing qualities that enchantment to individuals. This system of distilling crowd views into an AI reward operate is known as reward modeling. It supplies a way to enhance LLM output high quality primarily based on numerous subjective viewpoints.

Answer overview

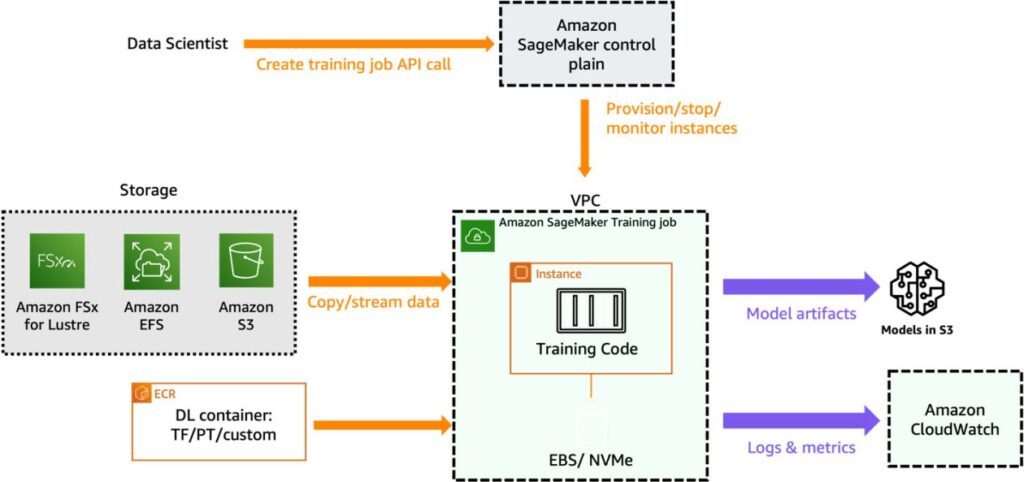

On this submit, we element learn how to prepare a reward mannequin primarily based on organization-specific human labeling suggestions collected for varied prompts examined on the bottom FM. The next diagram illustrates the answer structure.

For extra particulars, see the accompanying notebook.

Stipulations

To efficiently prepare a reward mannequin, you want the next:

Launch SageMaker Studio

Full the next steps to launch SageMaker Studio:

- On the SageMaker console, select Studio within the navigation pane.

- On the Studio touchdown web page, choose the area and consumer profile for launching Studio.

- Select Open Studio.

- To launch SageMaker Studio, select Launch private Studio.

Let’s see learn how to create a reward mannequin regionally in a SageMaker Studio pocket book surroundings through the use of a pre-existing mannequin from the Hugging Face mannequin hub.

Put together a human-labeled dataset and prepare a reward mannequin

When doing reward modeling, getting suggestions knowledge from people may be costly. It is because reward modeling wants suggestions from different human staff as a substitute of solely utilizing knowledge collected throughout common system use. How properly your reward mannequin behaves will depend on the standard and quantity of suggestions from people.

We advocate utilizing AWS-managed choices reminiscent of Amazon SageMaker Ground Truth. It gives probably the most complete set of human-in-the-loop capabilities, permitting you to harness the facility of human suggestions throughout the machine studying (ML) lifecycle to enhance the accuracy and relevancy of fashions. You’ll be able to full quite a lot of human-in-the-loop duties with SageMaker Floor Reality, from knowledge technology and annotation to mannequin evaluate, customization, and analysis, both by way of a self-service or AWS-managed providing.

For this submit, we use the IMDB dataset to coach a reward mannequin that gives a better rating for textual content that people have labeled as constructive, and a decrease rating for detrimental textual content.

We put together the dataset with the next code:

The next instance reveals a pattern file from the ready dataset, which incorporates references to rejected and chosen responses. We now have additionally embedded the enter ID and a focus masks for the chosen and rejected responses.

Load the pre-trained mannequin

On this case, we use the OPT-1.3b (Open Pre-trained Transformer Language Mannequin) mannequin in Amazon SageMaker JumpStart from Hugging Face. If you wish to do all the coaching regionally in your pocket book as a substitute of distributed coaching, it’s essential to use an occasion with sufficient accelerator reminiscence. We run the next coaching on a pocket book working on ml.g4dn.xlarge occasion sort:

Outline the customized coach operate

Within the following code snippet, we create a customized coach that calculates how properly a mannequin is acting on a job:

It compares the mannequin’s outcomes for 2 units of enter knowledge: one set that was chosen and one other set that was rejected. The coach then makes use of these outcomes to determine how good the mannequin is at distinguishing between the chosen and rejected knowledge. This helps the coach alter the mannequin to enhance its efficiency on the duty. The CustomTrainer class is used to create a specialised coach that calculates the loss operate for a particular job involving chosen and rejected enter sequences. This practice coach extends the performance of the usual Coach class supplied by the transformers library, permitting for a tailor-made strategy to dealing with mannequin outputs and loss computation primarily based on the particular necessities of the duty. See the next code:

The TrainingArguments within the supplied code snippet are used to configure varied facets of the coaching course of for an ML mannequin. Let’s break down the aim of every parameter, and the way they’ll affect the coaching final result:

- output_dir – Specifies the listing the place the educated mannequin and related information will probably be saved. This parameter helps manage and retailer the educated mannequin for future use.

- overwrite_output_dir – Determines whether or not to overwrite the output listing if it already exists. Setting this to True permits for reusing the identical listing with out guide deletion.

- do_train – Signifies whether or not to carry out coaching. If set to True, the mannequin will probably be educated utilizing the supplied coaching dataset.

- do_eval and do_predict – Management whether or not to carry out analysis and prediction duties, respectively. On this case, each are set to False, that means solely coaching will probably be carried out.

- evaluation_strategy – Defines when analysis must be carried out throughout coaching. Setting it to “no” means analysis is not going to be achieved throughout coaching.

- learning_rate – Specifies the training fee for the optimizer, influencing how rapidly or slowly the mannequin learns from the information.

- num_train_epochs – Units the variety of instances the mannequin will undergo your entire coaching dataset throughout coaching. One epoch means one full go by way of all coaching samples.

- per_device_train_batch_size – Determines what number of samples are processed in every batch throughout coaching on every machine (for instance, GPU). A smaller batch dimension can result in slower however extra steady coaching.

- gradient_accumulation_steps – Controls how typically gradients are collected earlier than updating the mannequin’s parameters. This might help stabilize coaching with giant batch sizes.

- remove_unused_columns – Specifies whether or not unused columns within the dataset must be eliminated earlier than processing, optimizing reminiscence utilization.

By configuring these parameters within the TrainingArguments, you possibly can affect varied facets of the coaching course of, reminiscent of mannequin efficiency, convergence pace, reminiscence utilization, and total coaching final result primarily based in your particular necessities and constraints.

If you run this code, it trains the reward mannequin primarily based on the numerical illustration of subjective suggestions you gathered from the human labelers. A educated reward mannequin will give a better rating to LLM responses that people usually tend to choose.

Use the reward mannequin to judge the bottom LLM

Now you can feed the response out of your LLM to this reward mannequin, and the numerical rating produced as output informs you of how properly the response from the LLM is aligning to the subjective group preferences that had been embedded on the reward mannequin. The next diagram illustrates this course of. You should utilize this quantity as the brink for deciding whether or not or not the response from the LLM may be shared with the end-user.

For instance, let’s say we created an reward mannequin to avoiding poisonous, dangerous, or inappropriate content material. If a chatbot powered by an LLM produces a response, the reward mannequin can then rating the chatbot’s responses. Responses with scores above a pre-determined threshold are deemed acceptable to share with customers. Scores under the brink imply the content material must be blocked. This lets us robotically filter chatbot content material that doesn’t meet requirements we need to implement. To discover extra, see the accompanying notebook.

Clear up

To keep away from incurring future expenses, delete all of the sources that you simply created. Delete the deployed SageMaker models, if any, and cease the SageMaker Studio pocket book you launched for this train.

Conclusion

On this submit, we confirmed learn how to prepare a reward mannequin that predicts a human desire rating from the LLM’s response. That is achieved by producing a number of outputs for every immediate with the LLM, then asking human annotators to rank or rating the responses to every immediate. The reward mannequin is then educated to foretell the human desire rating from the LLM’s response. After the reward mannequin is educated, you need to use the reward mannequin to judge the LLM’s responses in opposition to your subjective organizational requirements.

As a corporation evolves, the reward features should evolve alongside altering organizational values and consumer expectations. What defines a “nice” AI output is subjective and remodeling. Organizations want versatile ML pipelines that frequently retrain reward fashions with up to date rewards reflecting newest priorities and wishes. This house is constantly evolving: direct preference-based policy optimization, tool-augmented reward modeling, and example-based control are different fashionable different methods to align AI methods with human values and targets.

We invite you to take the following step in customizing your AI options by partaking with the varied and subjective views of human suggestions. Embrace the facility of reward modeling to make sure your AI methods resonate along with your model identification and ship the distinctive experiences your clients deserve. Begin refining your AI fashions right this moment with Amazon SageMaker and be part of the vanguard of companies setting new requirements in customized buyer interactions. You probably have any questions or suggestions, please depart them within the feedback part.

Concerning the Writer

Dinesh Kumar Subramani is a Senior Options Architect primarily based in Edinburgh, Scotland. He makes a speciality of synthetic intelligence and machine studying, and is member of technical area group with in Amazon. Dinesh works carefully with UK Central Authorities clients to resolve their issues utilizing AWS companies. Exterior of labor, Dinesh enjoys spending high quality time together with his household, taking part in chess, and exploring a various vary of music.

Dinesh Kumar Subramani is a Senior Options Architect primarily based in Edinburgh, Scotland. He makes a speciality of synthetic intelligence and machine studying, and is member of technical area group with in Amazon. Dinesh works carefully with UK Central Authorities clients to resolve their issues utilizing AWS companies. Exterior of labor, Dinesh enjoys spending high quality time together with his household, taking part in chess, and exploring a various vary of music.