Build an internal SaaS service with cost and usage tracking for foundation models on Amazon Bedrock

Enterprises are seeking to quickly unlock the potential of generative AI by providing access to foundation models (FMs) to different lines of business (LOBs). IT teams are responsible for helping the LOBs innovate with speed and agility while providing centralized governance and observability. For example, they may need to track the usage of FMs across teams, charge back costs, and provide visibility to the relevant cost center in the LOB. Additionally, they may need to regulate access to different models per team, for example, if only specific FMs are approved for use.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon via a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. Because Amazon Bedrock is serverless, you don’t have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you’re already familiar with.

A software as a service (SaaS) layer for foundation models can provide a simple and consistent interface for end-users, while maintaining centralized governance of access and consumption. API gateways can provide loose coupling between model consumers and the model endpoint service, and the flexibility to adapt to changing models, architectures, and invocation methods.

In this post, we show you how to build an internal SaaS layer to access foundation models with Amazon Bedrock in a multi-tenant (team) architecture. We specifically focus on usage and cost tracking per tenant, and on controls such as usage throttling per tenant. We describe how the solution and Amazon Bedrock consumption plans map to the general SaaS journey framework. The code for the solution and an AWS Cloud Development Kit (AWS CDK) template are available in the GitHub repository.

Challenges

An AI platform administrator needs to provide standardized and easy access to FMs to multiple development teams.

The following are some of the challenges in providing governed access to foundation models:

- Cost and usage tracking – Track and audit individual tenant costs and usage of foundation models, and provide chargeback costs to specific cost centers

- Budget and usage controls – Manage API quota, budget, and usage limits for the permitted use of foundation models over a defined frequency per tenant

- Access control and model governance – Define access controls for specific allow listed models per tenant

- Multi-tenant standardized API – Provide consistent access to foundation models with OpenAPI standards

- Centralized management of API – Provide a single layer to manage API keys for accessing models

- Model versions and updates – Handle new and updated model version rollouts

Solution overview

In this solution, we refer to a multi-tenant approach. A tenant here can range from an individual user, a specific project, or a team to an entire department. As we discuss the approach, we use the term team, because it’s the most common. We use API keys to restrict and monitor API access for teams. Each team is assigned an API key for access to the FMs. There can be different user authentication and authorization mechanisms deployed in an organization. For simplicity, we do not include these in this solution. You may also integrate existing identity providers with this solution.

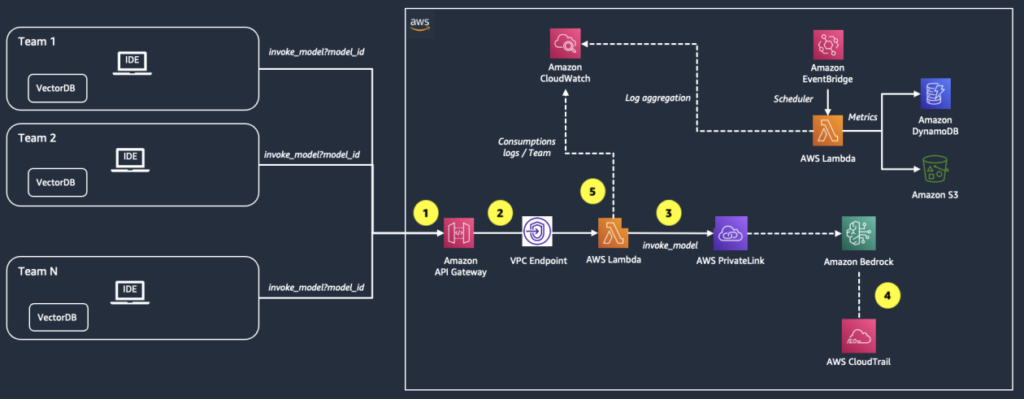

The architecture diagram summarizes the solution architecture and key components. Teams (tenants) assigned to separate cost centers consume Amazon Bedrock FMs via an API service. To track consumption and cost per team, the solution logs data for each individual invocation, including the model invoked, the number of tokens for text generation models, and image dimensions for multi-modal models. In addition, it aggregates the invocations per model and the costs for each team.

You can deploy the solution in your own account using the AWS CDK. AWS CDK is an open source software development framework to model and provision your cloud application resources using familiar programming languages. The AWS CDK code is available in the GitHub repository.

In the following sections, we discuss the key components of the solution in more detail.

Capturing foundation model usage per team

The workflow to capture FM usage per team consists of the following steps (as numbered in the preceding diagram):

- A team’s application sends a POST request to Amazon API Gateway with the model to be invoked in the model_id query parameter and the user prompt in the request body.

- API Gateway routes the request to an AWS Lambda function (bedrock_invoke_model) that’s responsible for logging team usage information in Amazon CloudWatch and invoking the Amazon Bedrock model.

- Amazon Bedrock provides a VPC endpoint powered by AWS PrivateLink. In this solution, the Lambda function sends the request to Amazon Bedrock using PrivateLink to establish a private connection between the VPC in your account and the Amazon Bedrock service account. To learn more about PrivateLink, see Use AWS PrivateLink to set up private access to Amazon Bedrock.

- After the Amazon Bedrock invocation, Amazon CloudTrail generates a CloudTrail event.

- If the Amazon Bedrock call is successful, the Lambda function logs the following information depending on the type of invoked model and returns the generated response to the application:

  - team_id – The unique identifier for the team issuing the request.

  - requestId – The unique identifier of the request.

  - model_id – The ID of the model to be invoked.

  - inputTokens – The number of tokens sent to the model as part of the prompt (for text generation and embeddings models).

  - outputTokens – The maximum number of tokens to be generated by the model (for text generation models).

  - height – The height of the requested image (for multi-modal models and multi-modal embeddings models).

  - width – The width of the requested image (for multi-modal models only).

  - steps – The steps requested (for Stability AI models).
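As a rough illustration of the logging step, the following sketch builds one usage record per invocation and includes only the fields that apply to the model type. The field names come from the list above; the function name build_usage_record, the model_type labels, and the JSON layout are assumptions made for this example, and the Lambda function in the repository may be organized differently.

```python
import json
from typing import Optional


def build_usage_record(
    team_id: str,
    request_id: str,
    model_id: str,
    model_type: str,
    input_tokens: Optional[int] = None,
    output_tokens: Optional[int] = None,
    height: Optional[int] = None,
    width: Optional[int] = None,
    steps: Optional[int] = None,
) -> dict:
    """Return a usage record with only the fields relevant to model_type.

    model_type is a hypothetical label: "text", "embeddings", or "image".
    """
    record = {"team_id": team_id, "requestId": request_id, "model_id": model_id}
    if model_type == "text":
        record["inputTokens"] = input_tokens
        record["outputTokens"] = output_tokens
    elif model_type == "embeddings":
        record["inputTokens"] = input_tokens
    elif model_type == "image":
        record["height"] = height
        record["width"] = width
        if steps is not None:  # steps are only logged when the model reports them
            record["steps"] = steps
    else:
        raise ValueError(f"unknown model_type: {model_type}")
    return record


record = build_usage_record(
    team_id="team-1",
    request_id="req-123",
    model_id="amazon.titan-embed-text-v1",
    model_type="embeddings",
    input_tokens=6,
)
# Inside a Lambda function, a printed JSON line ends up in CloudWatch Logs.
print(json.dumps(record))
```

Emitting one JSON object per invocation keeps the records easy to filter and aggregate later by team_id and model_id.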

Tracking costs per team

A different flow aggregates the usage information, then calculates and saves the on-demand costs per team on a daily basis. By having a separate flow, we ensure that cost tracking doesn’t impact the latency and throughput of the model invocation flow. The workflow steps are as follows:

- An Amazon EventBridge rule triggers a Lambda function (bedrock_cost_tracking) daily.

- The Lambda function gets the usage information from CloudWatch for the previous day, calculates the associated costs, and stores the data aggregated by team_id and model_id in Amazon Simple Storage Service (Amazon S3) in CSV format.
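The aggregation step can be sketched roughly as follows. This is a minimal example that works on an in-memory list of usage records and renders CSV text; reading the records from CloudWatch and uploading the file to Amazon S3 are left out. The function names aggregate_usage and to_csv, the sample records, and the column order are assumptions for illustration.

```python
import csv
import io
from collections import defaultdict


def aggregate_usage(records):
    """Sum tokens and count invocations per (team_id, model_id)."""
    totals = defaultdict(lambda: {"input_tokens": 0, "output_tokens": 0, "invocations": 0})
    for rec in records:
        key = (rec["team_id"], rec["model_id"])
        totals[key]["input_tokens"] += rec.get("inputTokens", 0)
        totals[key]["output_tokens"] += rec.get("outputTokens", 0)
        totals[key]["invocations"] += 1
    return dict(totals)


def to_csv(totals):
    """Render the aggregated totals as CSV text, one row per team and model."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["team_id", "model_id", "input_tokens", "output_tokens", "invocations"])
    for (team_id, model_id), row in sorted(totals.items()):
        writer.writerow(
            [team_id, model_id, row["input_tokens"], row["output_tokens"], row["invocations"]]
        )
    return buffer.getvalue()


# Hypothetical records for one day, shaped like the logged usage fields.
sample_records = [
    {"team_id": "team-1", "model_id": "anthropic.claude-v2", "inputTokens": 100, "outputTokens": 200},
    {"team_id": "team-1", "model_id": "anthropic.claude-v2", "inputTokens": 50, "outputTokens": 25},
    {"team_id": "team-2", "model_id": "amazon.titan-embed-text-v1", "inputTokens": 10},
]
daily_totals = aggregate_usage(sample_records)
csv_text = to_csv(daily_totals)
print(csv_text)
```

Because this runs on a schedule rather than inside the invocation path, it can scan a full day of records without adding latency to model calls.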

To query and visualize the data stored in Amazon S3, you have different options, including S3 Select, Amazon Athena, and Amazon QuickSight.

Controlling usage per team

A usage plan specifies who can access one or more deployed APIs and optionally sets the target request rate at which to start throttling requests. The plan uses API keys to identify API clients who can access the associated API for each key. You can use API Gateway usage plans to throttle requests that exceed predefined thresholds. You can also use API keys and quota limits, which enable you to set the maximum number of requests per API key each team is permitted to issue within a specified time interval. This is in addition to Amazon Bedrock service quotas, which are assigned only at the account level.
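To make the interplay of rate, burst, and quota more concrete, here is a small simulation. API Gateway documents its throttling in terms of a token bucket, and this sketch follows that idea for a single API key. The class name TeamThrottle and its parameters are hypothetical, timestamps are passed in explicitly to keep the example deterministic, and it is not a description of how API Gateway is implemented internally.

```python
class TeamThrottle:
    """Token bucket (rate + burst) plus a fixed request quota for one API key."""

    def __init__(self, rate_per_second: float, burst: int, quota: int):
        self.rate = rate_per_second
        self.burst = burst
        self.quota_remaining = quota
        self.tokens = float(burst)  # the bucket starts full
        self.last_time = 0.0

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) is accepted."""
        # Refill tokens for the elapsed time, capped at the burst size.
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last_time = now
        if self.quota_remaining <= 0 or self.tokens < 1:
            return False  # the caller would be rejected at this point
        self.tokens -= 1
        self.quota_remaining -= 1
        return True


throttle = TeamThrottle(rate_per_second=1, burst=2, quota=4)
# Three requests at t=0: the burst of 2 is accepted, the third is throttled.
results = [throttle.allow(0.0), throttle.allow(0.0), throttle.allow(0.0)]
# One second later one token has been refilled, so one more request passes.
results.append(throttle.allow(1.0))
print(results)
```

The rate and burst settings smooth out short spikes, while the quota caps the total number of requests a team can issue in the configured period regardless of how evenly they are spread.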

Prerequisites

Before you deploy the solution, make sure you have the following:

Deploy the AWS CDK stack

Follow the instructions in the README file of the GitHub repository to configure and deploy the AWS CDK stack.

The stack deploys the following resources:

- Private networking environment (VPC, private subnets, security group)

- IAM role for controlling model access

- Lambda layers for the necessary Python modules

- Lambda function invoke_model

- Lambda function list_foundation_models

- Lambda function cost_tracking

- REST API (API Gateway)

- API Gateway usage plan

- API key associated with the usage plan

Onboard a new team

To provide access to new teams, you can either share the same API key across different teams and track model consumption by providing a different team_id for the API invocation, or create dedicated API keys for accessing Amazon Bedrock resources by following the instructions provided in the README.

The stack deploys the following resources:

- API Gateway usage plan associated with the previously created REST API

- API key associated with the usage plan for the new team, with reserved throttling and burst configurations for the API

For more information about API Gateway throttling and burst configurations, refer to Throttle API requests for better throughput.

After you deploy the stack, you can see that the new API key for team-2 is created as well.

Configure model access control

The platform administrator can allow access to specific foundation models by editing the IAM policy associated with the Lambda function invoke_model. The IAM permissions are defined in the file setup/stack_constructs/iam.py.
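As an illustration of what such an allow list can look like, the following sketch builds an IAM policy document that permits bedrock:InvokeModel only on selected foundation model ARNs. It is not the code from setup/stack_constructs/iam.py; the function name build_model_access_policy, the Sid, the Region, and the chosen model IDs are assumptions for this example.

```python
import json


def build_model_access_policy(region: str, allowed_model_ids: list) -> dict:
    """Build an IAM policy document allowing invocation of the listed models only."""
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for model_id in allowed_model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowListedFoundationModels",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": resources,
            }
        ],
    }


policy = build_model_access_policy(
    region="us-east-1",
    allowed_model_ids=["anthropic.claude-v2", "amazon.titan-embed-text-v1"],
)
print(json.dumps(policy, indent=2))
```

Any model that isn't listed in Resource is implicitly denied, so a request for it fails with an access denied error from Amazon Bedrock.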

Invoke the service

After you’ve deployed the solution, you can invoke the service directly from your code. The following is an example in Python for consuming the invoke_model API for text generation through a POST request:

Output: Amazon Bedrock is an internal technology platform developed by Amazon to run and operate many of their services and products. Some key things about Bedrock …

The following is another example in Python for consuming the invoke_model API for embeddings generation through a POST request:

    import requests

    # api_url, api_key, and team_id are assumed to be set for your deployment
    model_id = "amazon.titan-embed-text-v1"  # the model ID for the Amazon Titan Embeddings Text model
    model_kwargs = {}  # assumed: optional model parameters, left empty here
    prompt = "What is Amazon Bedrock?"

    response = requests.post(
        f"{api_url}/invoke_model?model_id={model_id}",
        json={"inputs": prompt, "parameters": model_kwargs},
        headers={
            "x-api-key": api_key,  # key for querying the API
            "team_id": team_id,  # unique tenant identifier
            "embeddings": "true",  # boolean value for the embeddings model
        },
    )

    text = response.json()[0]["embedding"]

Output: 0.91796875, 0.45117188, 0.52734375, -0.18652344, 0.06982422, 0.65234375, -0.13085938, 0.056884766, 0.092285156, 0.06982422, 1.03125, 0.8515625, 0.16308594, 0.079589844, -0.033935547, 0.796875, -0.15429688, -0.29882812, -0.25585938, 0.45703125, 0.044921875, 0.34570312 …

Access denied to foundation models

The following is an example in Python for consuming the invoke_model API for text generation through a POST request with an access denied response:

<Response [500]> "Traceback (most recent call last):\n File \"/var/task/index.py\", line 213, in lambda_handler\n response = _invoke_text(bedrock_client, model_id, body, model_kwargs)\n File \"/var/task/index.py\", line 146, in _invoke_text\n raise e\n File \"/var/task/index.py\", line 131, in _invoke_text\n response = bedrock_client.invoke_model(\n File \"/opt/python/botocore/client.py\", line 535, in _api_call\n return self._make_api_call(operation_name, kwargs)\n File \"/opt/python/botocore/client.py\", line 980, in _make_api_call\n raise error_class(parsed_response, operation_name)\nbotocore.errorfactory.AccessDeniedException: An error occurred (AccessDeniedException) when calling the InvokeModel operation: Your account is not authorized to invoke this API operation.\n"
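On the client side, a caller may want to tell this case apart from other failures. The following sketch classifies a response by its status code and body text, assuming, as in the response above, that the service returns the Lambda traceback in the body. The function name classify_response and the category labels are hypothetical, and string matching on a traceback is only a stopgap compared with a structured error payload.

```python
def classify_response(status_code: int, body: str) -> str:
    """Map a response from the invoke_model API to a coarse outcome label."""
    if 200 <= status_code < 300:
        return "ok"
    if "AccessDeniedException" in body:
        return "access_denied"  # the team is not allowed to use this model
    if status_code == 429:
        return "throttled"  # usage plan rate limit or quota exceeded
    return "error"


denied_body = (
    "botocore.errorfactory.AccessDeniedException: An error occurred "
    "(AccessDeniedException) when calling the InvokeModel operation: "
    "Your account is not authorized to invoke this API operation."
)
outcome = classify_response(500, denied_body)
print(outcome)
```

With the requests library, the two arguments would be response.status_code and response.text.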

Cost estimation example

When invoking Amazon Bedrock models with on-demand pricing, the total cost is calculated as the sum of the input and output costs. Input costs are based on the number of input tokens sent to the model, and output costs are based on the tokens generated. The prices are per 1,000 input tokens and per 1,000 output tokens. For more details and specific model prices, refer to Amazon Bedrock Pricing.

Let’s look at an example where two teams, team1 and team2, access Amazon Bedrock through the solution in this post. The usage and cost data saved in Amazon S3 in a single day is shown in the following table.

The columns input_tokens and output_tokens store the total input and output tokens across model invocations per model and per team, respectively, for a given day.

The columns input_cost and output_cost store the respective costs per model and per team. These are calculated using the following formulas:

    input_cost = input_token_count * model_pricing["input_cost"] / 1000
    output_cost = output_token_count * model_pricing["output_cost"] / 1000

| team_id | model_id | input_tokens | output_tokens | invocations | input_cost | output_cost |
|---|---|---|---|---|---|---|
| Team1 | amazon.titan-tg1-large | 24000 | 2473 | 1000 | 0.0072 | 0.00099 |
| Team1 | anthropic.claude-v2 | 2448 | 4800 | 24 | 0.02698 | 0.15686 |
| Team2 | amazon.titan-tg1-large | 35000 | 52500 | 350 | 0.0105 | 0.021 |
| Team2 | ai21.j2-grande-instruct | 4590 | 9000 | 45 | 0.05738 | 0.1125 |
| Team2 | anthropic.claude-v2 | 1080 | 4400 | 20 | 0.0119 | 0.14379 |
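The formulas can be checked against the table with a short script. The per-1,000-token prices below are inferred from the table rows themselves (for example, 0.0072 / 24000 * 1000 = 0.0003 for amazon.titan-tg1-large input tokens); treat them as illustrative values rather than current prices, and refer to Amazon Bedrock Pricing for actual figures. The sketch uses Decimal and rounds to five decimal places, which is an assumption about how the table values were rounded.

```python
from decimal import Decimal, ROUND_HALF_UP

# Prices per 1,000 tokens, inferred from the table rows (illustrative only).
MODEL_PRICING = {
    "amazon.titan-tg1-large": {"input_cost": Decimal("0.0003"), "output_cost": Decimal("0.0004")},
    "anthropic.claude-v2": {"input_cost": Decimal("0.01102"), "output_cost": Decimal("0.03268")},
    "ai21.j2-grande-instruct": {"input_cost": Decimal("0.0125"), "output_cost": Decimal("0.0125")},
}

FIVE_PLACES = Decimal("0.00001")


def token_costs(model_id: str, input_token_count: int, output_token_count: int):
    """Apply the input_cost and output_cost formulas for one table row."""
    model_pricing = MODEL_PRICING[model_id]
    input_cost = input_token_count * model_pricing["input_cost"] / 1000
    output_cost = output_token_count * model_pricing["output_cost"] / 1000
    return (
        input_cost.quantize(FIVE_PLACES, rounding=ROUND_HALF_UP),
        output_cost.quantize(FIVE_PLACES, rounding=ROUND_HALF_UP),
    )


# Team1, anthropic.claude-v2: 2448 input tokens and 4800 output tokens.
claude_costs = token_costs("anthropic.claude-v2", 2448, 4800)
print(claude_costs)
```

Summing input_cost and output_cost over all rows for a team gives that team's daily on-demand cost, which is the figure a chargeback report would use.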

End-to-end view of a functional multi-tenant serverless SaaS environment

Let’s understand what an end-to-end functional multi-tenant serverless SaaS environment might look like. The following is a reference architecture diagram.

This architecture diagram is a zoomed-out version of the architecture diagram explained earlier in the post, which details one of the microservices shown here (the foundation model service). It shows that, apart from the foundation model service, your multi-tenant SaaS platform needs other components to be functional and scalable.

Let’s go through the details of the architecture.

Tenant applications

The tenant applications are the front end applications that interact with the environment. Here, we show multiple tenants accessing from different local or AWS environments. The front end applications can be extended to include a registration page for new tenants to register themselves and an admin console for administrators of the SaaS service layer. If the tenant applications require custom logic that needs to interact with the SaaS environment, they can implement the specifications of the application adaptor microservice. An example scenario is adding custom authorization logic while respecting the authorization specifications of the SaaS environment.

Shared services

The following are shared services:

- Tenant and user management services – These services are responsible for registering and managing the tenants. They provide the cross-cutting functionality that’s separate from application services and shared across all of the tenants.

- Foundation model service – The solution architecture diagram explained at the beginning of this post represents this microservice, where the interaction from API Gateway to Lambda functions happens within the scope of this microservice. All tenants use this microservice to invoke the foundation models from Anthropic, AI21, Cohere, Stability, Meta, and Amazon, as well as fine-tuned models. It also captures the information needed for usage tracking in CloudWatch logs.

- Cost tracking service – This service tracks the cost and usage for each tenant. This microservice runs on a schedule to query the CloudWatch logs and output the aggregated usage tracking and inferred cost to the data storage. The cost tracking service can be extended to build further reports and visualizations.

Application adaptor service

This service presents a set of specifications and APIs that a tenant may implement in order to integrate their custom logic with the SaaS environment. Based on how much custom integration is needed, this component can be optional for tenants.

Multi-tenant data store

The shared services store their data in a data store that can be a single shared Amazon DynamoDB table with a tenant partitioning key that associates DynamoDB items with individual tenants. The cost tracking shared service outputs the aggregated usage and cost tracking data to Amazon S3. Based on the use case, there can be an application-specific data store as well.
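The idea of a tenant partitioning key can be illustrated with a small in-memory stand-in for the shared table. Every item is stored under its tenant's partition, and a query reads from exactly one partition, so one tenant's query never returns another tenant's items. The class name TenantPartitionedStore, the sort key format, and the sample items are assumptions for this sketch; it does not call DynamoDB.

```python
from collections import defaultdict


class TenantPartitionedStore:
    """In-memory stand-in for a shared table keyed by (tenant_id, sort_key)."""

    def __init__(self):
        self._partitions = defaultdict(dict)

    def put_item(self, tenant_id: str, sort_key: str, attributes: dict) -> None:
        """Store an item inside the partition that belongs to tenant_id."""
        self._partitions[tenant_id][sort_key] = dict(attributes)

    def query(self, tenant_id: str, sort_key_prefix: str = "") -> list:
        """Return items from one tenant's partition only, ordered by sort key."""
        partition = self._partitions.get(tenant_id, {})
        return [
            {"tenant_id": tenant_id, "sort_key": key, **attrs}
            for key, attrs in sorted(partition.items())
            if key.startswith(sort_key_prefix)
        ]


store = TenantPartitionedStore()
store.put_item("team-1", "USER#alice", {"role": "admin"})
store.put_item("team-1", "PLAN#basic", {"quota": 1000})
store.put_item("team-2", "USER#bob", {"role": "developer"})

team1_users = store.query("team-1", sort_key_prefix="USER#")
print(team1_users)
```

In a real table, the same effect comes from making the tenant identifier the partition key and scoping each query, and ideally the caller's permissions, to that key.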

A multi-tenant SaaS environment can have many more components. For more information, refer to Building a Multi-Tenant SaaS Solution Using AWS Serverless Services.

Support for multiple deployment models

SaaS frameworks typically outline two deployment models: pool and silo. In the pool model, all tenants access FMs from a shared environment with common storage and compute infrastructure. In the silo model, each tenant has its own set of dedicated resources. You can read about isolation models in the SaaS Tenant Isolation Strategies whitepaper.

The proposed solution can be adopted for both SaaS deployment models. In the pool approach, a centralized AWS environment hosts the API, storage, and compute resources. In silo mode, each team accesses APIs, storage, and compute resources in a dedicated AWS environment.

The solution also fits with the available consumption plans provided by Amazon Bedrock. AWS provides a choice of two consumption plans for inference:

- On-Demand – This mode allows you to use foundation models on a pay-as-you-go basis without having to make any time-based term commitments

- Provisioned Throughput – This mode allows you to provision sufficient throughput to meet your application’s performance requirements in exchange for a time-based term commitment

For more information about these options, refer to Amazon Bedrock Pricing.

The serverless SaaS reference solution described in this post can apply the Amazon Bedrock consumption plans to provide basic and premium tiering options to end-users. The basic tier could include On-Demand or Provisioned Throughput consumption of Amazon Bedrock and could include specific usage and budget limits. Tenant limits could be enforced by throttling based on request counts, token sizes, or budget allocation. Premium tier tenants could have their own dedicated resources with Provisioned Throughput consumption of Amazon Bedrock. These tenants would typically be associated with production workloads that require high throughput and low latency access to Amazon Bedrock FMs.

Conclusion

In this post, we discussed how to build an internal SaaS platform to access foundation models with Amazon Bedrock in a multi-tenant setup, with a focus on tracking costs and usage and on throttling limits for each tenant. Additional topics to explore include integrating existing authentication and authorization solutions in the organization, enhancing the API layer to include web sockets for bi-directional client server interactions, adding content filtering and other governance guardrails, designing multiple deployment tiers, integrating other microservices in the SaaS architecture, and many more.

The entire code for this solution is available in the GitHub repository.

For more information about SaaS-based frameworks, refer to SaaS Journey Framework: Building a New SaaS Solution on AWS.

About the Authors

Hasan Poonawala is a Senior AI/ML Specialist Solutions Architect at AWS, working with Healthcare and Life Sciences customers. Hasan helps design, deploy, and scale generative AI and machine learning applications on AWS. He has over 15 years of combined work experience in machine learning, software development, and data science on the cloud. In his spare time, Hasan loves to explore nature and spend time with friends and family.

Anastasia Tzeveleka is a Senior AI/ML Specialist Solutions Architect at AWS. As part of her work, she helps customers across EMEA build foundation models and create scalable generative AI and machine learning solutions using AWS services.

Bruno Pistone is a Generative AI and ML Specialist Solutions Architect for AWS based in Milan. He works with large customers, helping them to deeply understand their technical needs and design AI and machine learning solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. His expertise includes end-to-end machine learning, machine learning industrialization, and generative AI. He enjoys spending time with his friends and exploring new places, as well as travelling to new destinations.

Vikesh Pandey is a Generative AI/ML Solutions Architect specialising in financial services, where he helps financial customers build and scale generative AI/ML platforms and solutions that scale to hundreds or even thousands of users. In his spare time, Vikesh likes to write on various blog forums and build Legos with his kid.
