Getting began with Amazon Titan Textual content Embeddings

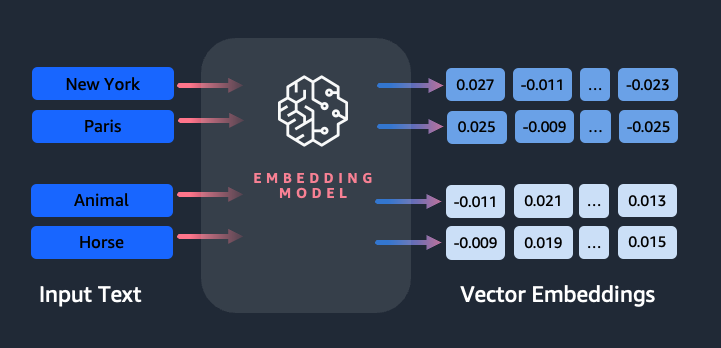

Embeddings play a key position in pure language processing (NLP) and machine studying (ML). Textual content embedding refers back to the course of of remodeling textual content into numerical representations that reside in a high-dimensional vector area. This method is achieved by using ML algorithms that allow the understanding of the which means and context of knowledge (semantic relationships) and the educational of advanced relationships and patterns inside the knowledge (syntactic relationships). You should use the ensuing vector representations for a variety of purposes, corresponding to info retrieval, textual content classification, pure language processing, and lots of others.

Amazon Titan Text Embeddings is a textual content embeddings mannequin that converts pure language textual content—consisting of single phrases, phrases, and even massive paperwork—into numerical representations that can be utilized to energy use circumstances corresponding to search, personalization, and clustering primarily based on semantic similarity.

On this put up, we focus on the Amazon Titan Textual content Embeddings mannequin, its options, and instance use circumstances.

Some key ideas embrace:

- Numerical illustration of textual content (vectors) captures semantics and relationships between phrases

- Wealthy embeddings can be utilized to match textual content similarity

- Multilingual textual content embeddings can determine which means in numerous languages

How is a bit of textual content transformed right into a vector?

There are a number of strategies to transform a sentence right into a vector. One in style technique is utilizing phrase embeddings algorithms, corresponding to Word2Vec, GloVe, or FastText, after which aggregating the phrase embeddings to kind a sentence-level vector illustration.

One other widespread strategy is to make use of massive language fashions (LLMs), like BERT or GPT, which may present contextualized embeddings for complete sentences. These fashions are primarily based on deep studying architectures corresponding to Transformers, which may seize the contextual info and relationships between phrases in a sentence extra successfully.

Why do we’d like an embeddings mannequin?

Vector embeddings are basic for LLMs to grasp the semantic levels of language, and in addition allow LLMs to carry out effectively on downstream NLP duties like sentiment evaluation, named entity recognition, and textual content classification.

Along with semantic search, you should use embeddings to enhance your prompts for extra correct outcomes by Retrieval Augmented Technology (RAG)—however so as to use them, you’ll have to retailer them in a database with vector capabilities.

The Amazon Titan Textual content Embeddings mannequin is optimized for textual content retrieval to allow RAG use circumstances. It allows you to first convert your textual content knowledge into numerical representations or vectors, after which use these vectors to precisely seek for related passages from a vector database, permitting you to profit from your proprietary knowledge together with different basis fashions.

As a result of Amazon Titan Textual content Embeddings is a managed mannequin on Amazon Bedrock, it’s supplied as a wholly serverless expertise. You should use it by way of both the Amazon Bedrock REST API or the AWS SDK. The required parameters are the textual content that you just wish to generate the embeddings of and the modelID parameter, which represents the title of the Amazon Titan Textual content Embeddings mannequin. The next code is an instance utilizing the AWS SDK for Python (Boto3):

The output will look one thing like the next:

Seek advice from Amazon Bedrock boto3 Setup for extra particulars on set up the required packages, connect with Amazon Bedrock, and invoke fashions.

Options of Amazon Titan Textual content Embeddings

With Amazon Titan Textual content Embeddings, you possibly can enter as much as 8,000 tokens, making it effectively suited to work with single phrases, phrases, or complete paperwork primarily based in your use case. Amazon Titan returns output vectors of dimension 1536, giving it a excessive diploma of accuracy, whereas additionally optimizing for low-latency, cost-effective outcomes.

Amazon Titan Textual content Embeddings helps creating and querying embeddings for textual content in over 25 completely different languages. This implies you possibly can apply the mannequin to your use circumstances with no need to create and preserve separate fashions for every language you wish to help.

Having a single embeddings mannequin skilled on many languages supplies the next key advantages:

- Broader attain – By supporting over 25 languages out of the field, you possibly can broaden the attain of your purposes to customers and content material in lots of worldwide markets.

- Constant efficiency – With a unified mannequin masking a number of languages, you get constant outcomes throughout languages as a substitute of optimizing individually per language. The mannequin is skilled holistically so that you get the benefit throughout languages.

- Multilingual question help – Amazon Titan Textual content Embeddings permits querying textual content embeddings in any of the supported languages. This supplies flexibility to retrieve semantically related content material throughout languages with out being restricted to a single language. You’ll be able to construct purposes that question and analyze multilingual knowledge utilizing the identical unified embeddings area.

As of this writing, the next languages are supported:

- Arabic

- Chinese language (Simplified)

- Chinese language (Conventional)

- Czech

- Dutch

- English

- French

- German

- Hebrew

- Hindi

- Italian

- Japanese

- Kannada

- Korean

- Malayalam

- Marathi

- Polish

- Portuguese

- Russian

- Spanish

- Swedish

- Filipino Tagalog

- Tamil

- Telugu

- Turkish

Utilizing Amazon Titan Textual content Embeddings with LangChain

LangChain is a well-liked open supply framework for working with generative AI fashions and supporting applied sciences. It features a BedrockEmbeddings client that conveniently wraps the Boto3 SDK with an abstraction layer. The BedrockEmbeddings consumer means that you can work with textual content and embeddings instantly, with out realizing the small print of the JSON request or response buildings. The next is a straightforward instance:

You can even use LangChain’s BedrockEmbeddings consumer alongside the Amazon Bedrock LLM consumer to simplify implementing RAG, semantic search, and different embeddings-related patterns.

Use circumstances for embeddings

Though RAG is at the moment the preferred use case for working with embeddings, there are various different use circumstances the place embeddings may be utilized. The next are some further situations the place you should use embeddings to unravel particular issues, both on their very own or in cooperation with an LLM:

- Query and reply – Embeddings might help help query and reply interfaces by the RAG sample. Embeddings technology paired with a vector database can help you discover shut matches between questions and content material in a information repository.

- Customized suggestions – Much like query and reply, you should use embeddings to seek out trip locations, faculties, autos, or different merchandise primarily based on the standards supplied by the consumer. This might take the type of a easy record of matches, or you possibly can then use an LLM to course of every suggestion and clarify the way it satisfies the consumer’s standards. You might additionally use this strategy to generate customized “10 greatest” articles for a consumer primarily based on their particular wants.

- Knowledge administration – When you’ve knowledge sources that don’t map cleanly to one another, however you do have textual content content material that describes the information file, you should use embeddings to determine potential duplicate information. For instance, you possibly can use embeddings to determine duplicate candidates that may use completely different formatting, abbreviations, and even have translated names.

- Utility portfolio rationalization – When seeking to align utility portfolios throughout a dad or mum firm and an acquisition, it’s not all the time apparent the place to begin discovering potential overlap. The standard of configuration administration knowledge could be a limiting issue, and it may be troublesome coordinating throughout groups to grasp the appliance panorama. Through the use of semantic matching with embeddings, we will do a fast evaluation throughout utility portfolios to determine high-potential candidate purposes for rationalization.

- Content material grouping – You should use embeddings to assist facilitate grouping related content material into classes that you just may not know forward of time. For instance, let’s say you had a set of buyer emails or on-line product critiques. You might create embeddings for every merchandise, then run these embeddings by k-means clustering to determine logical groupings of buyer considerations, product reward or complaints, or different themes. You’ll be able to then generate targeted summaries from these groupings’ content material utilizing an LLM.

Semantic search instance

In our example on GitHub, we display a easy embeddings search utility with Amazon Titan Textual content Embeddings, LangChain, and Streamlit.

The instance matches a consumer’s question to the closest entries in an in-memory vector database. We then show these matches instantly within the consumer interface. This may be helpful if you wish to troubleshoot a RAG utility, or instantly consider an embeddings mannequin.

For simplicity, we use the in-memory FAISS database to retailer and seek for embeddings vectors. In a real-world state of affairs at scale, you’ll doubtless wish to use a persistent knowledge retailer just like the vector engine for Amazon OpenSearch Serverless or the pgvector extension for PostgreSQL.

Attempt a couple of prompts from the online utility in numerous languages, corresponding to the next:

- How can I monitor my utilization?

- How can I customise fashions?

- Which programming languages can I take advantage of?

- Remark mes données sont-elles sécurisées ?

- 私のデータはどのように保護されていますか?

- Quais fornecedores de modelos estão disponíveis por meio do Bedrock?

- In welchen Regionen ist Amazon Bedrock verfügbar?

- 有哪些级别的支持?

Word that despite the fact that the supply materials was in English, the queries in different languages have been matched with related entries.

Conclusion

The textual content technology capabilities of basis fashions are very thrilling, however it’s essential to keep in mind that understanding textual content, discovering related content material from a physique of data, and making connections between passages are essential to reaching the complete worth of generative AI. We’ll proceed to see new and fascinating use circumstances for embeddings emerge over the following years as these fashions proceed to enhance.

Subsequent steps

You’ll find further examples of embeddings as notebooks or demo purposes within the following workshops:

In regards to the Authors

Jason Stehle is a Senior Options Architect at AWS, primarily based within the New England space. He works with prospects to align AWS capabilities with their biggest enterprise challenges. Outdoors of labor, he spends his time constructing issues and watching comedian e-book films along with his household.

Jason Stehle is a Senior Options Architect at AWS, primarily based within the New England space. He works with prospects to align AWS capabilities with their biggest enterprise challenges. Outdoors of labor, he spends his time constructing issues and watching comedian e-book films along with his household.

Nitin Eusebius is a Sr. Enterprise Options Architect at AWS, skilled in Software program Engineering, Enterprise Structure, and AI/ML. He’s deeply captivated with exploring the probabilities of generative AI. He collaborates with prospects to assist them construct well-architected purposes on the AWS platform, and is devoted to fixing know-how challenges and helping with their cloud journey.

Nitin Eusebius is a Sr. Enterprise Options Architect at AWS, skilled in Software program Engineering, Enterprise Structure, and AI/ML. He’s deeply captivated with exploring the probabilities of generative AI. He collaborates with prospects to assist them construct well-architected purposes on the AWS platform, and is devoted to fixing know-how challenges and helping with their cloud journey.

Raj Pathak is a Principal Options Architect and Technical Advisor to massive Fortune 50 firms and mid-sized monetary providers establishments (FSI) throughout Canada and america. He makes a speciality of machine studying purposes corresponding to generative AI, pure language processing, clever doc processing, and MLOps.

Raj Pathak is a Principal Options Architect and Technical Advisor to massive Fortune 50 firms and mid-sized monetary providers establishments (FSI) throughout Canada and america. He makes a speciality of machine studying purposes corresponding to generative AI, pure language processing, clever doc processing, and MLOps.

Mani Khanuja is a Tech Lead – Generative AI Specialists, writer of the e-book – Utilized Machine Studying and Excessive Efficiency Computing on AWS, and a member of the Board of Administrators for Girls in Manufacturing Schooling Basis Board. She leads machine studying (ML) initiatives in varied domains corresponding to pc imaginative and prescient, pure language processing and generative AI. She helps prospects to construct, practice and deploy massive machine studying fashions at scale. She speaks in inside and exterior conferences such re:Invent, Girls in Manufacturing West, YouTube webinars and GHC 23. In her free time, she likes to go for lengthy runs alongside the seaside.

Mani Khanuja is a Tech Lead – Generative AI Specialists, writer of the e-book – Utilized Machine Studying and Excessive Efficiency Computing on AWS, and a member of the Board of Administrators for Girls in Manufacturing Schooling Basis Board. She leads machine studying (ML) initiatives in varied domains corresponding to pc imaginative and prescient, pure language processing and generative AI. She helps prospects to construct, practice and deploy massive machine studying fashions at scale. She speaks in inside and exterior conferences such re:Invent, Girls in Manufacturing West, YouTube webinars and GHC 23. In her free time, she likes to go for lengthy runs alongside the seaside.

Mark Roy is a Principal Machine Studying Architect for AWS, serving to prospects design and construct AI/ML options. Mark’s work covers a variety of ML use circumstances, with a major curiosity in pc imaginative and prescient, deep studying, and scaling ML throughout the enterprise. He has helped firms in lots of industries, together with insurance coverage, monetary providers, media and leisure, healthcare, utilities, and manufacturing. Mark holds six AWS Certifications, together with the ML Specialty Certification. Previous to becoming a member of AWS, Mark was an architect, developer, and know-how chief for over 25 years, together with 19 years in monetary providers.

Mark Roy is a Principal Machine Studying Architect for AWS, serving to prospects design and construct AI/ML options. Mark’s work covers a variety of ML use circumstances, with a major curiosity in pc imaginative and prescient, deep studying, and scaling ML throughout the enterprise. He has helped firms in lots of industries, together with insurance coverage, monetary providers, media and leisure, healthcare, utilities, and manufacturing. Mark holds six AWS Certifications, together with the ML Specialty Certification. Previous to becoming a member of AWS, Mark was an architect, developer, and know-how chief for over 25 years, together with 19 years in monetary providers.