NousResearch Released Nous-Hermes-2-Mixtral-8x7B: An Open-Source LLM with SFT and DPO Versions

In artificial intelligence and language modeling, users often face challenges in training and using models for a variety of tasks. The need for a versatile, high-performing model that can understand and generate content across different domains is evident. Existing solutions offer some level of performance, but they still fall short of state-of-the-art results and adaptability. What is needed is an advanced language model that excels at understanding and generating content across many tasks; the models currently available only partially meet that bar for cutting-edge performance and versatility.

NousResearch has just released Nous-Hermes-2-Mixtral-8x7B in two versions: one trained with supervised fine-tuning (SFT) only, and one trained with SFT followed by Direct Preference Optimization (DPO). Nous Hermes 2 Mixtral 8x7B DPO aims to address these challenges by offering a state-of-the-art solution. Trained on a large dataset consisting primarily of GPT-4-generated data, supplemented with high-quality data from open datasets across the AI field, the model shows strong performance on a variety of tasks. The SFT + DPO version is the main release, and an SFT-only version is also available for those who prefer a different approach.

Nous Hermes 2 Mixtral 8x7B SFT is the variant of the latest Nous Research model that uses supervised fine-tuning only. It is built on the Mixtral 8x7B MoE LLM architecture. The model was trained on more than one million entries, predominantly generated by GPT-4, together with other high-quality data from various open datasets across the AI field. It demonstrates strong performance across a wide range of tasks, setting new benchmarks in the industry.

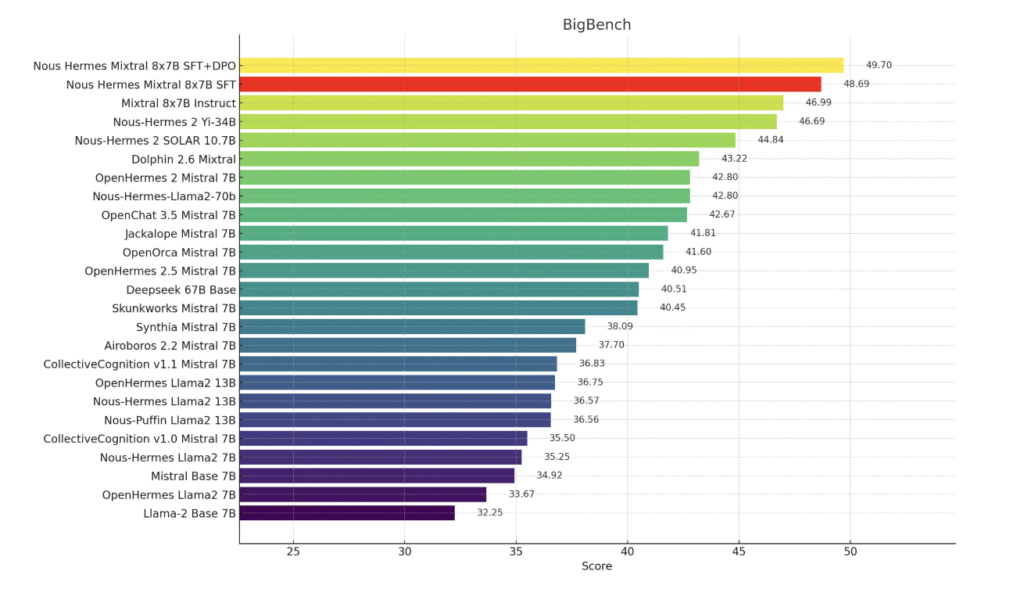

The Nous-Hermes-2-Mixtral-8x7B model has been benchmarked on GPT4All, AGIEval, and BigBench tasks. The results show significant improvements over the base Mixtral model, surpassing even the flagship Mixtral fine-tune by MistralAI. The average scores across these benchmarks are 75.70 for GPT4All, 46.05 for AGIEval, and 49.70 for BigBench.

The use of ChatML as the prompt format allows for more structured and engaging interaction with the model, particularly in multi-turn chat dialogues. System prompts enable steerability, giving users a nuanced way to guide the model’s responses through roles, rules, and stylistic choices. This format, which is compatible with OpenAI endpoints, improves the user experience and makes the model more accessible.
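To make the ChatML layout concrete, here is a minimal sketch in Python of how a system prompt and a user turn might be serialized before being sent to the model. The helper name `to_chatml` and the sample messages are hypothetical, chosen only for illustration; the sketch assumes the standard ChatML delimiters `<|im_start|>` and `<|im_end|>`, and in practice the tokenizer shipped with the model would normally handle this formatting.

```python
# Minimal ChatML formatting sketch. `to_chatml` is a hypothetical helper,
# not part of any library; it assumes the standard ChatML delimiters.

def to_chatml(messages, add_generation_prompt=True):
    """Serialize a list of {"role", "content"} dicts into a ChatML string."""
    parts = []
    for message in messages:
        # Each turn is wrapped as: <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Illustrative conversation: the system prompt steers role and style.
messages = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Hello, who are you?"},
]

prompt = to_chatml(messages)
print(prompt)
```

The system message is where roles, rules, and stylistic constraints go; later user and assistant turns are appended in the same wrapped form, which is what keeps multi-turn dialogues unambiguous.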

In conclusion, Nous Hermes 2 Mixtral 8x7B DPO is a powerful answer to the challenges of training and using language models. Its comprehensive training data, SFT and DPO variants, and strong benchmark results make it a versatile and high-performing model. With its focus on user interaction through ChatML and its aim of surpassing existing benchmarks, the model stands out as an advanced and effective tool in artificial intelligence.

Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate, currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a highly enthusiastic individual with a keen interest in machine learning, data science, and AI, and an avid reader of the latest developments in these fields.