Running Mixtral 8x7b On Google Colab For Free

Image by Author

In this post, we’ll explore the new state-of-the-art open-source model called Mixtral 8x7b. We will also learn how to access it using the LLaMA C++ library and how to run large language models with reduced compute and memory.

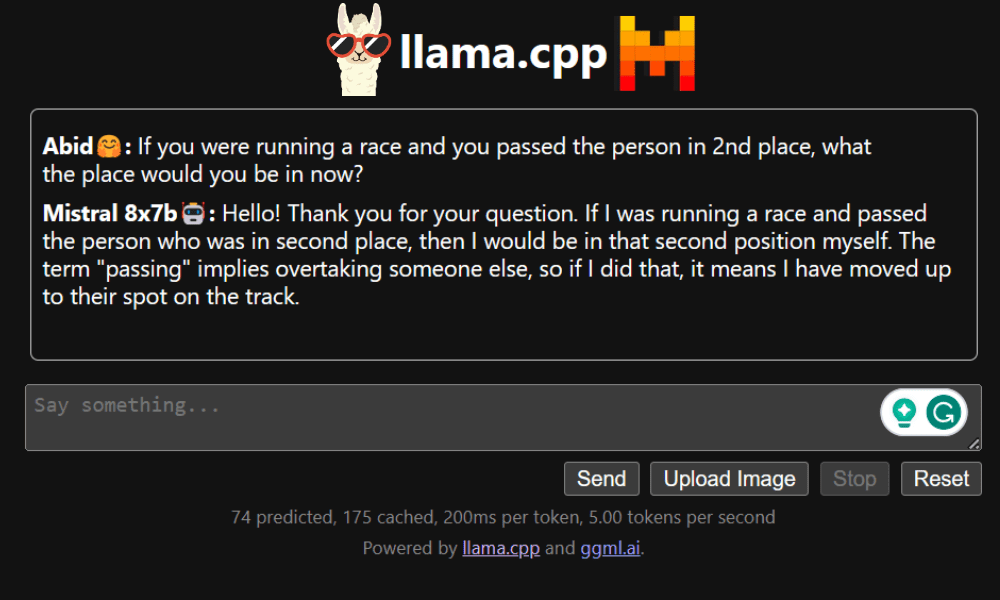

Mixtral 8x7b is a high-quality sparse mixture of experts (SMoE) model with open weights, created by Mistral AI. It is licensed under Apache 2.0 and outperforms Llama 2 70B on most benchmarks while offering 6x faster inference. Mixtral matches or beats GPT3.5 on most standard benchmarks and is the best open-weight model in terms of cost/performance.

Image from Mixtral of experts

Mixtral 8x7B uses a decoder-only sparse mixture-of-experts network. Its feedforward block selects from 8 distinct groups of parameters: for every token, a router network chooses two of these groups (the "experts") to process the token and combines their outputs additively. This technique increases the model’s parameter count while controlling cost and latency, because only a fraction of the parameters is used per token. Although Mixtral has 46.7B total parameters, it uses only 12.9B per token, so it runs at the speed and cost of a 12.9B model.
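To make the routing idea concrete, here is a minimal pure-Python sketch of a top-2 sparse MoE step for a single token. It is a toy illustration, not Mistral AI’s implementation: the experts are stand-in functions (in Mixtral each expert is a feedforward block), and `top2_moe_layer` and `softmax` are hypothetical helpers written for this example. The router scores all 8 experts, keeps the two highest, renormalizes their scores with a softmax, and adds the two weighted expert outputs.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(values: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe_layer(
    x: Vector,
    router_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Toy sparse-MoE step for one token: only the top-k experts are evaluated."""
    # Router: one logit per expert (dot product of its gate row with the token).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Indices of the k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts' logits only.
    gates = softmax([logits[i] for i in chosen])
    # Additive combination of the weighted expert outputs.
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Eight stand-in "experts": expert i simply scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
router = [[0.0, 0.0] for _ in range(8)]
router[2] = [0.0, 0.5]  # logit 1.0 for the token below
router[5] = [1.0, 0.0]  # logit 1.0 for the token below
print(top2_moe_layer([1.0, 2.0], router, experts))  # [4.5, 9.0]
```

Only the two selected experts run, which is why compute per token tracks the active rather than the total parameter count. As a back-of-envelope check, and assuming every non-expert parameter is shared, 46.7B = S + 8E and 12.9B = S + 2E give E ≈ 5.6B parameters per expert and S ≈ 1.6B shared parameters.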

The Mixtral 8x7B model handles a context of 32k tokens and supports multiple languages, including English, French, Italian, German, and Spanish. It shows strong performance in code generation and can be fine-tuned into an instruction-following model that achieves high scores on benchmarks like MT-Bench.
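The instruction-tuned variant used later in this tutorial expects prompts wrapped in `[INST] ... [/INST]` tags, the template listed on TheBloke’s GGUF model card. The helper below is a hypothetical sketch for assembling single- and multi-turn prompts; it assumes the runtime adds the beginning-of-sequence token itself and that each finished assistant reply ends with `</s>`. Published examples differ slightly in the whitespace around the tags, so treat the exact spacing as an assumption.

```python
from typing import List, Tuple


def mixtral_instruct_prompt(history: List[Tuple[str, str]], user_message: str) -> str:
    """Wrap a conversation in [INST] ... [/INST] tags for Mixtral-8x7B-Instruct.

    Assumptions: the runtime adds the beginning-of-sequence token itself, and
    each completed assistant reply is terminated with "</s>".
    """
    parts = [f"[INST] {user} [/INST] {reply}</s>" for user, reply in history]
    parts.append(f"[INST] {user_message} [/INST]")
    return "".join(parts)


print(mixtral_instruct_prompt([], "Explain sparse mixture of experts in one sentence."))
# [INST] Explain sparse mixture of experts in one sentence. [/INST]
```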

LLaMA.cpp is a C/C++ library that provides a high-performance interface for large language models (LLMs) built around Meta’s LLaMA architecture. It is a lightweight and efficient library that can be used for a variety of tasks, including text generation, translation, and question answering. LLaMA.cpp supports a wide range of LLMs, including LLaMA, LLaMA 2, Falcon, Alpaca, Mistral 7B, Mixtral 8x7B, and GPT4ALL. It runs on Linux, macOS, and Windows and can use both CPUs and GPUs.

In this section, we will run the llama.cpp web application on Colab. With just a few lines of code, you will be able to experience the new state-of-the-art model’s performance on your PC or on Google Colab.

Getting Started

First, we’ll download the llama.cpp GitHub repository using the command below:

!git clone --depth 1 https://github.com/ggerganov/llama.cpp.git

After that, we’ll change into the repository directory and build llama.cpp with the `make` command. We’re building llama.cpp for an NVIDIA GPU with CUDA installed; the `LLAMA_CUBLAS=1` flag enables GPU acceleration through NVIDIA’s cuBLAS library.

%cd llama.cpp

!make LLAMA_CUBLAS=1

Download the Model

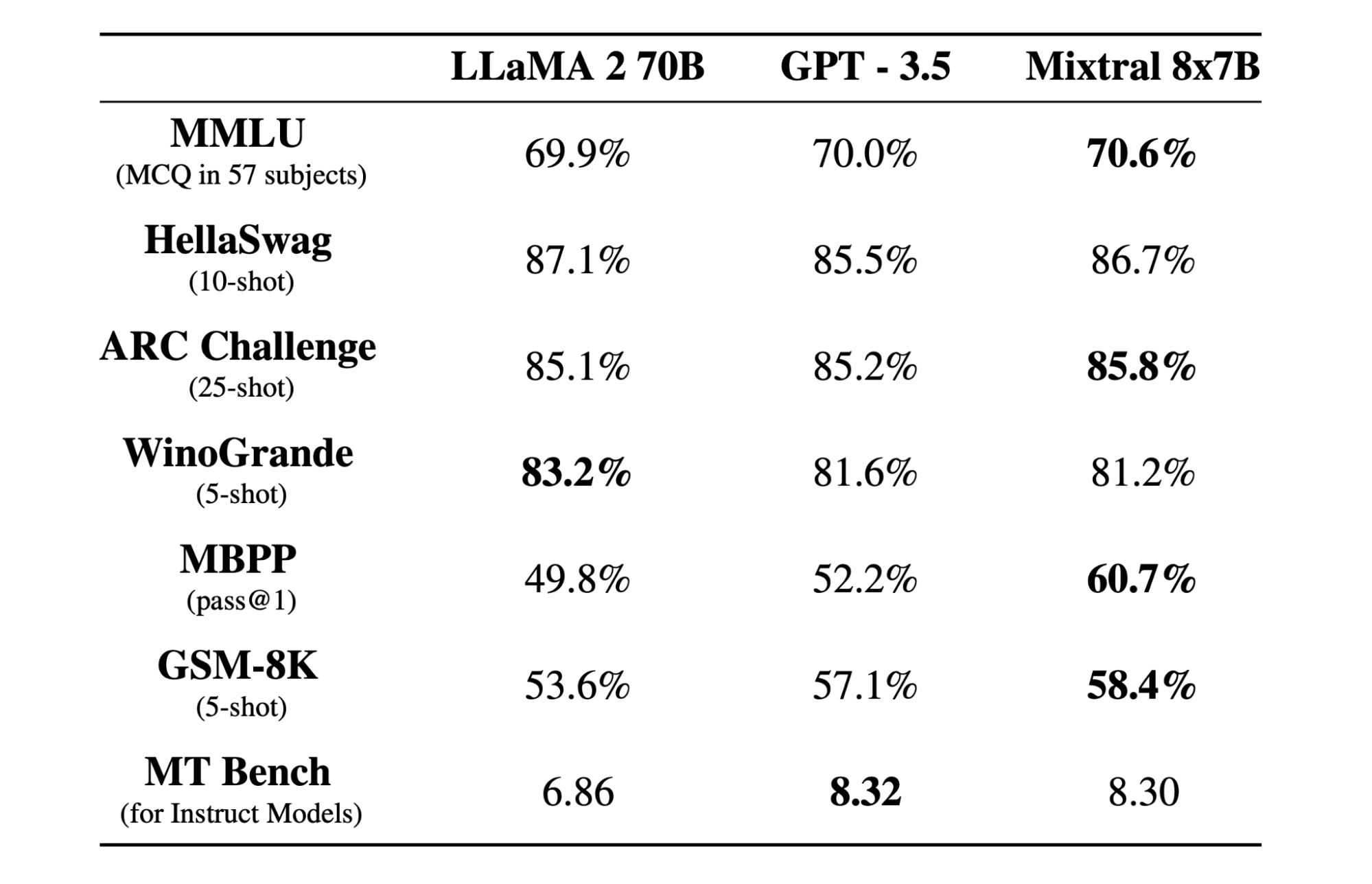

We can download the model from the Hugging Face Hub by selecting the appropriate version of the `.gguf` model file. More information on the various versions can be found in TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF.
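Picking a version is mostly a trade-off between file size and output quality. The sketch below, with hypothetical helper names, builds the download URL for a given quantization label (assuming the other files follow the same naming pattern as the Q2_K file used in this tutorial) and gives an idealized size estimate from the 46.7B total parameter count. The bit widths in the loop are illustrative, not the measured bits per weight of any particular GGUF file.

```python
REPO_ID = "TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF"
TOTAL_PARAMS = 46.7e9  # total parameter count quoted earlier in this post


def gguf_url(quant: str, repo_id: str = REPO_ID) -> str:
    """Direct-download URL for one quantized file.

    Assumes every file follows the naming pattern of the Q2_K file.
    """
    filename = f"mixtral-8x7b-instruct-v0.1.{quant}.gguf"
    return f"https://huggingface.co/{repo_id}/resolve/main/{filename}"


def approx_size_gb(bits_per_weight: float, n_params: float = TOTAL_PARAMS) -> float:
    """Idealized weight storage in decimal GB: params * bits / 8 bytes."""
    return n_params * bits_per_weight / 8 / 1e9


print(gguf_url("Q2_K"))
for bits in (16, 8, 4, 2):
    print(f"{bits:>2} bits/weight -> about {approx_size_gb(bits):.1f} GB")
```

Actual K-quant files come out larger than the idealized figure because some tensors are kept at higher precision and each block of weights also stores scaling factors.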

Image from TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF

You can use the `wget` command to download the model into the current directory.

!wget https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF/resolve/main/mixtral-8x7b-instruct-v0.1.Q2_K.gguf
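A failed or interrupted download can leave a small HTML error page saved under the `.gguf` name, which the server then fails to load. As a quick sanity check, the sketch below reads the file header: GGUF files begin with the 4-byte magic `GGUF` followed by a little-endian 32-bit version number. `check_gguf` is a hypothetical helper, not part of llama.cpp.

```python
import os
import struct


def check_gguf(path: str) -> int:
    """Return the GGUF version stored in the file header, or raise ValueError.

    GGUF files start with the 4-byte magic b"GGUF" followed by a
    little-endian uint32 format version.
    """
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != b"GGUF":
        raise ValueError(f"{path} does not look like a GGUF file")
    (version,) = struct.unpack("<I", header[4:8])
    return version


MODEL_FILE = "mixtral-8x7b-instruct-v0.1.Q2_K.gguf"
if os.path.exists(MODEL_FILE):
    print(f"GGUF version {check_gguf(MODEL_FILE)}, {os.path.getsize(MODEL_FILE) / 1e9:.1f} GB")
```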

External Address for LLaMA Server

When we run the LLaMA server, it gives us a localhost address, which is of no use to us on Colab. Instead, we need to reach the local server through a Colab kernel proxy port.

After running the code below, you’ll get a public link. We’ll use this link to access our web app later.

from google.colab.output import eval_js

print(eval_js("google.colab.kernel.proxyPort(6589)"))

https://8fx1nbkv1c8-496ff2e9c6d22116-6589-colab.googleusercontent.com/

Running the Server

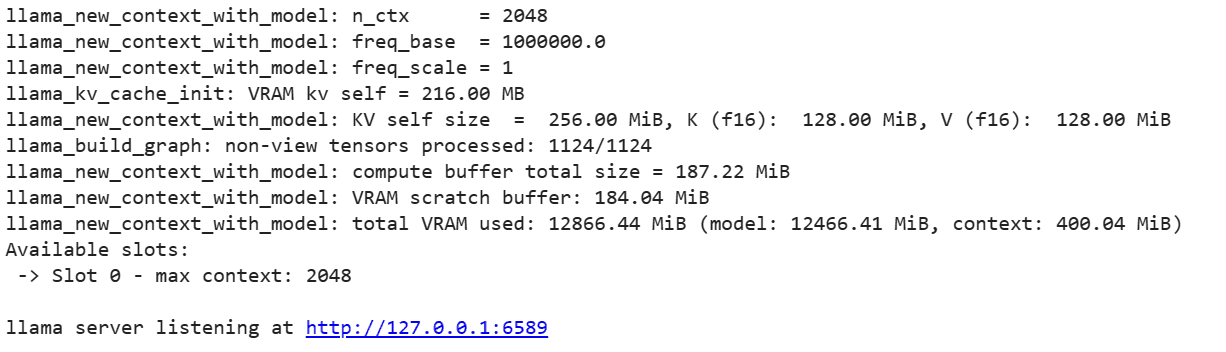

To run the LLaMA C++ server, you need to pass the server command the location of the model file and the correct port number. Make sure the port number matches the one we used for the proxy port in the previous step. In the command below, `-ngl 27` offloads 27 model layers to the GPU and `-c 2048` sets the context size to 2,048 tokens.

%cd /content/llama.cpp

!./server -m mixtral-8x7b-instruct-v0.1.Q2_K.gguf -ngl 27 -c 2048 --port 6589
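The `!./server` cell keeps running in the foreground, so the notebook cannot execute other cells while the server is up. One alternative, sketched below under the assumption that you run it from `/content/llama.cpp` with the same binary and model file, is to launch the server as a background process with Python’s `subprocess` module. `server_command` and `start_server_in_background` are hypothetical helpers; the flags mirror the command above, and the single `PORT` constant keeps the server port in sync with the proxy port.

```python
import shlex
import subprocess
from typing import List

PORT = 6589  # must match the port passed to google.colab.kernel.proxyPort(...)


def server_command(model_path: str, port: int = PORT,
                   gpu_layers: int = 27, ctx_size: int = 2048) -> List[str]:
    """argv mirroring the ./server invocation above."""
    return [
        "./server",
        "-m", model_path,
        "-ngl", str(gpu_layers),  # number of layers offloaded to the GPU
        "-c", str(ctx_size),      # context size in tokens
        "--port", str(port),
    ]


def start_server_in_background(cmd: List[str], log_path: str = "server.log") -> subprocess.Popen:
    """Start the server without blocking the notebook; output is written to log_path."""
    log_file = open(log_path, "w")  # kept open for the lifetime of the process
    return subprocess.Popen(cmd, stdout=log_file, stderr=subprocess.STDOUT)


cmd = server_command("mixtral-8x7b-instruct-v0.1.Q2_K.gguf")
print(shlex.join(cmd))
# proc = start_server_in_background(cmd)  # run from /content/llama.cpp
```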

You can access the chat web app by clicking the proxy link generated earlier, since the server is not running on your local machine.
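Besides the web app, the llama.cpp server exposes an HTTP API. The sketch below sends a prompt to its `/completion` endpoint, which accepts a JSON body with fields such as `prompt`, `n_predict`, and `temperature` and returns the generated text in a `content` field. It assumes the server is running in the background (not in the blocking `!./server` cell) and that you call it from the same Colab runtime, so `localhost` works; `completion_payload` and `complete` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict


def completion_payload(prompt: str, n_predict: int = 128,
                       temperature: float = 0.7) -> Dict[str, Any]:
    """JSON body for the llama.cpp server's /completion endpoint."""
    return {"prompt": prompt, "n_predict": n_predict, "temperature": temperature}


def complete(prompt: str, base_url: str = "http://localhost:6589", **options: Any) -> str:
    """POST a prompt to a running server and return the generated text."""
    body = json.dumps(completion_payload(prompt, **options)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/completion",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read().decode("utf-8"))["content"]


# Requires the server to be running in the background on port 6589:
# print(complete("[INST] What is a sparse mixture of experts? [/INST]", n_predict=200))
```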

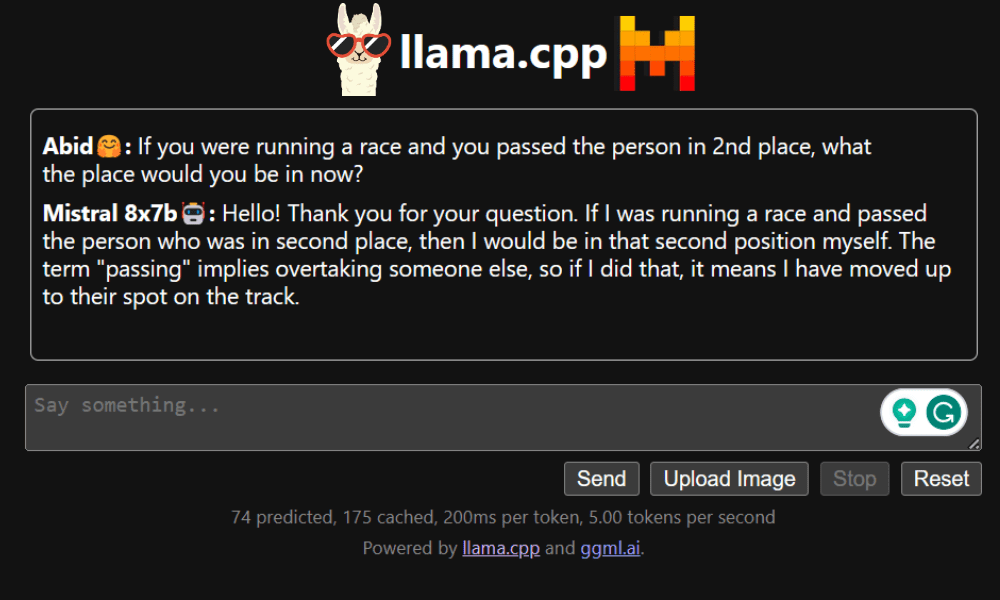

LLaMA C++ Webapp

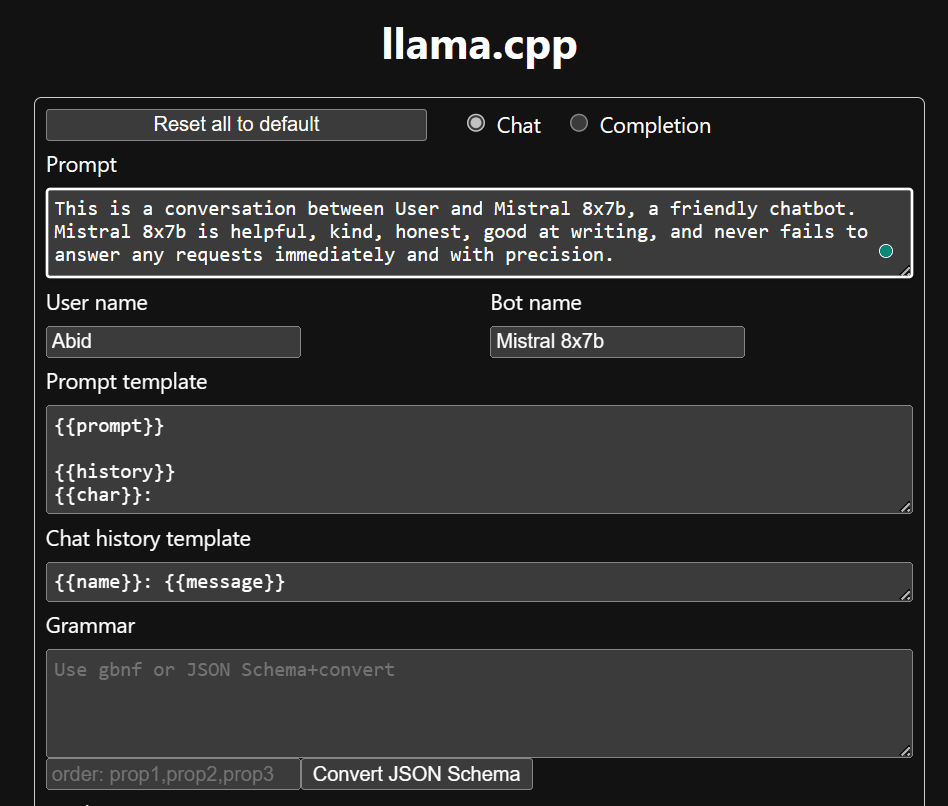

Before we start using the chatbot, we need to customize it. Replace “LLaMA” with your model name in the prompt section. Also change the user name and bot name so you can tell the generated responses apart.
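Assuming the web app labels each conversation turn with these names in the prompt it sends to the model, the names directly shape the text the model continues. The sketch below is a hypothetical approximation of that idea, not the web app’s actual code: it labels each turn with a speaker name, ends the prompt with the bot’s name so the model answers in character, and returns a stop string so generation halts when the model starts writing the user’s next line.

```python
from typing import List, Tuple


def build_chat_prompt(
    system: str,
    turns: List[Tuple[bool, str]],
    user_name: str = "User",
    bot_name: str = "Mixtral",
) -> Tuple[str, List[str]]:
    """Render (is_user, message) turns as a transcript ending with the bot's name.

    Returns the prompt and a stop list so generation halts when the model
    starts a new line as the user.
    """
    lines = [system, ""]
    for is_user, message in turns:
        speaker = user_name if is_user else bot_name
        lines.append(f"{speaker}: {message}")
    lines.append(f"{bot_name}:")
    return "\n".join(lines), [f"\n{user_name}:"]


prompt, stop = build_chat_prompt(
    "This is a conversation between Abid and Mixtral, a helpful assistant.",
    [(True, "What does the -ngl flag do?")],
    user_name="Abid",
)
print(prompt)
print(stop)
```

The returned `stop` list can be passed as the `stop` field of a request to the llama.cpp server’s `/completion` endpoint.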

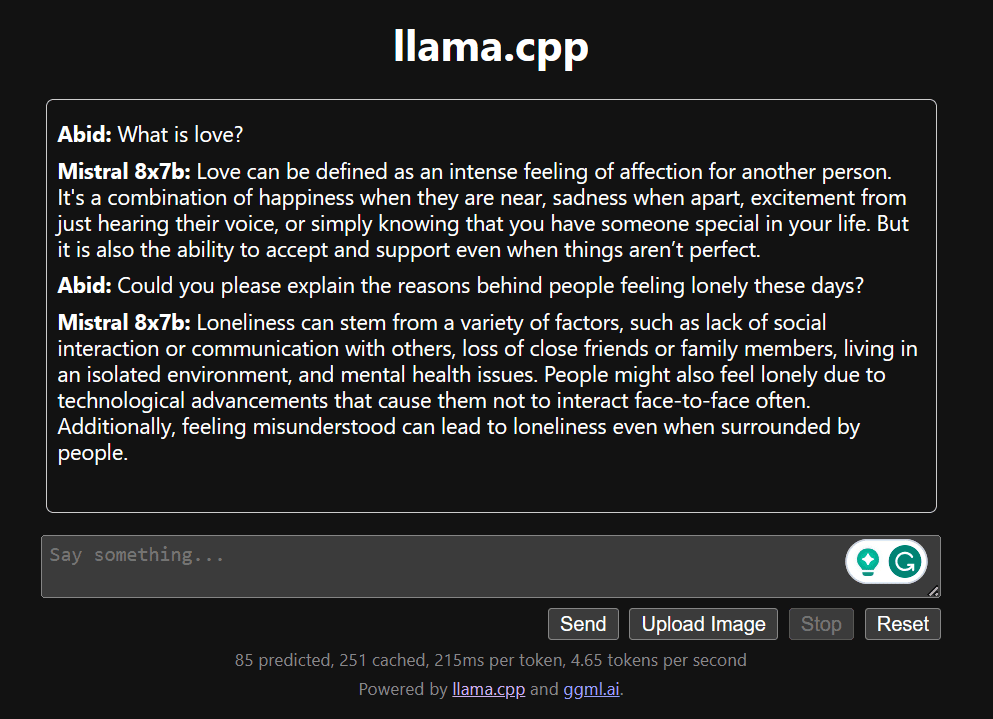

Start chatting by scrolling down and typing in the chat section. Feel free to ask technical questions that other open-source models have failed to answer properly.

If you encounter issues with the app, you can try running it yourself using my Google Colab: https://colab.research.google.com/drive/1gQ1lpSH-BhbKN-DdBmq5r8-8Rw8q1p9r?usp=sharing

This tutorial provides a complete guide to running the advanced open-source model Mixtral 8x7b on Google Colab using the LLaMA C++ library. Compared to other models, Mixtral 8x7b delivers superior performance and efficiency, making it an excellent choice for those who want to experiment with large language models but do not have extensive computational resources. You can run it on a laptop with enough memory or on free cloud compute. It is user-friendly, and you can even deploy your chat app for others to use and experiment with.

I hope you found this simple way of running a large model helpful. I’m always looking for simpler and better options. If you have an even better solution, please let me know, and I’ll cover it next time.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in Technology Management and a bachelor’s degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.