Identify cybersecurity anomalies in your Amazon Security Lake data using Amazon SageMaker

Customers face increasing security threats and vulnerabilities across infrastructure and application resources as their digital footprint has expanded and the business impact of those digital assets has grown. A common cybersecurity challenge has been two-fold:

- Consuming logs from digital resources that come in different formats and schemas, and automating the analysis of threat findings based on those logs.

- Centralizing and standardizing security data, whether logs come from Amazon Web Services (AWS), other cloud providers, on-premises systems, or edge devices.

Furthermore, the analytics for identifying security threats must be able to scale and evolve to meet a changing landscape of threat actors, security vectors, and digital assets.

A novel approach to this complex security analytics scenario combines the ingestion and storage of security data using Amazon Security Lake with analysis of that data through machine learning (ML) using Amazon SageMaker. Amazon Security Lake is a purpose-built service that automatically centralizes an organization's security data from cloud and on-premises sources into a purpose-built data lake stored in your AWS account. Amazon Security Lake automates the central management of security data, normalizes logs from integrated AWS services and third-party services, manages the lifecycle of data with customizable retention, and automates storage tiering. Amazon Security Lake ingests log files in the Open Cybersecurity Schema Framework (OCSF) format, with support for partners such as Cisco Security, CrowdStrike, and Palo Alto Networks, as well as OCSF logs from resources outside your AWS environment. This unified schema streamlines downstream consumption and analytics because the data follows a standardized schema, and new sources can be added with minimal data pipeline changes.

After the security log data is stored in Amazon Security Lake, the question becomes how to analyze it. An effective approach is ML, specifically anomaly detection, which examines activity and traffic data and compares it against a baseline. The baseline defines what activity is statistically normal for that environment. Anomaly detection scales beyond an individual event signature, and it can evolve with periodic retraining; traffic classified as abnormal or anomalous can then be acted upon with prioritized focus and urgency.

Amazon SageMaker is a fully managed service that enables customers to prepare data and build, train, and deploy ML models for any use case with fully managed infrastructure, tools, and workflows, including no-code options for business analysts. SageMaker supports two built-in anomaly detection algorithms: IP Insights and Random Cut Forest. You can also use SageMaker to create your own custom outlier detection model using algorithms sourced from multiple ML frameworks.

In this post, you learn how to prepare data sourced from Amazon Security Lake, and then train and deploy an ML model using the IP Insights algorithm in SageMaker. This model identifies anomalous network traffic or behavior, and it can then be composed as part of a larger end-to-end security solution. Such a solution could invoke a multi-factor authentication (MFA) check if a user is signing in from an unusual server or at an unusual time, notify staff if there is a suspicious network scan coming from new IP addresses, alert administrators if unusual network protocols or ports are used, or enrich the IP Insights classification result with other data sources such as Amazon GuardDuty and IP reputation scores to rank threat findings.

Solution overview

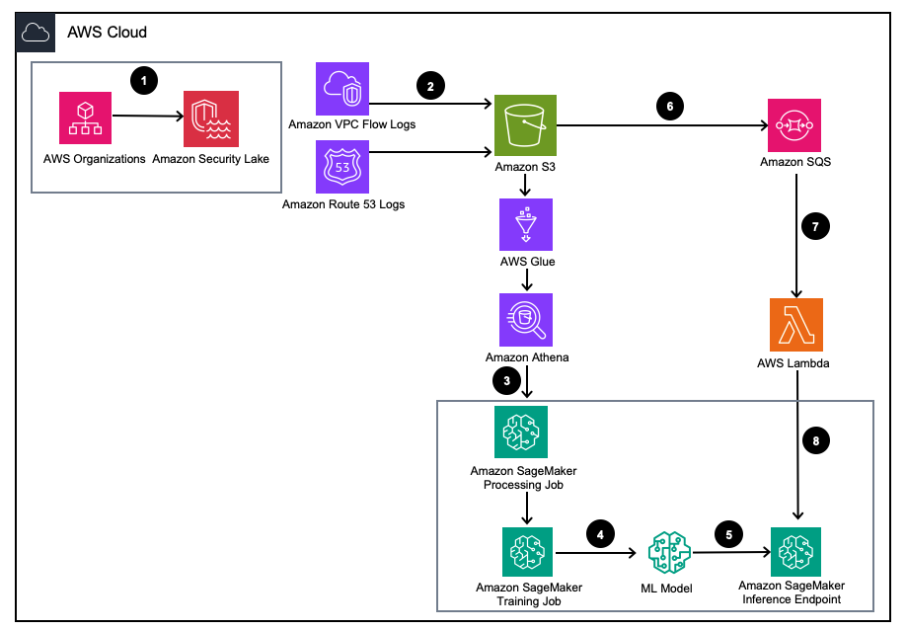

Figure 1 – Solution architecture

- Enable Amazon Security Lake with AWS Organizations for AWS accounts, AWS Regions, and external IT environments.

- Set up Security Lake sources from Amazon Virtual Private Cloud (Amazon VPC) Flow Logs and Amazon Route 53 DNS logs to the Amazon Security Lake S3 bucket.

- Process Amazon Security Lake log data using a SageMaker Processing job to engineer features. Use Amazon Athena to query structured OCSF log data from Amazon Simple Storage Service (Amazon S3) through AWS Glue tables managed by AWS Lake Formation.

- Train a SageMaker ML model using a SageMaker Training job that consumes the processed Amazon Security Lake logs.

- Deploy the trained ML model to a SageMaker inference endpoint.

- Store new security logs in an S3 bucket and queue events in Amazon Simple Queue Service (Amazon SQS).

- Subscribe an AWS Lambda function to the SQS queue.

- Invoke the SageMaker inference endpoint using a Lambda function to classify security logs as anomalies in real time.

Prerequisites

To deploy the solution, you must first complete the following prerequisites:

- Enable Amazon Security Lake within your organization or a single account with both VPC Flow Logs and Route 53 resolver logs enabled.

- Make sure that the AWS Identity and Access Management (IAM) role used by SageMaker processing jobs and notebooks has been granted an IAM policy that includes the Amazon Security Lake subscriber query access permission for the managed Amazon Security Lake database and tables managed by AWS Lake Formation. This processing job should be run from within an analytics or security tooling account to remain compliant with the AWS Security Reference Architecture (AWS SRA).

- Make sure that the IAM role used by the Lambda function has been granted an IAM policy that includes the Amazon Security Lake subscriber data access permission.

Deploy the solution

To set up the environment, complete the following steps:

- Launch a SageMaker Studio or SageMaker Jupyter notebook with an `ml.m5.large` instance. Note: Instance size depends on the datasets you use.

- Clone the GitHub repository.

- Open the notebook `01_ipinsights/01-01.amazon-securitylake-sagemaker-ipinsights.ipy`.

- Implement the provided IAM policy and corresponding IAM trust policy for your SageMaker Studio notebook instance so that it can access all the necessary data in S3, Lake Formation, and Athena.

This blog walks through the relevant portions of code within the notebook after it's deployed in your environment.

Install the dependencies and import the required libraries

This portion of the notebook installs dependencies, imports the required libraries, and creates the SageMaker S3 bucket needed for data processing and model training. One of the required libraries, awswrangler, is the AWS SDK for pandas; it is used to query the relevant tables within the AWS Glue Data Catalog and store the results locally in a dataframe.
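The notebook's setup code is not reproduced here. As a minimal sketch, assuming the notebook runs in SageMaker with an execution role attached and that the `sagemaker` and `awswrangler` packages are installed, the session, default bucket, and role can be obtained as follows. `PREFIX` and the `s3_uri` helper are hypothetical names for this walkthrough; `Session.default_bucket()` creates the `sagemaker-<region>-<account-id>` bucket if it does not already exist.

```python
# Run once in the notebook if needed: %pip install awswrangler sagemaker
PREFIX = "security-lake-ipinsights"  # hypothetical key prefix for this walkthrough


def s3_uri(bucket: str, *parts: str) -> str:
    """Build an s3:// URI from a bucket and key parts, ignoring stray slashes."""
    key = "/".join(p.strip("/") for p in parts if p.strip("/"))
    return f"s3://{bucket}/{key}" if key else f"s3://{bucket}"


def get_session_bucket_role():
    """Return a SageMaker session, its default bucket, and the notebook's role.

    The SageMaker SDK is imported here so that s3_uri works without it.
    """
    import sagemaker

    session = sagemaker.Session()
    bucket = session.default_bucket()  # creates sagemaker-<region>-<account-id> if missing
    role = sagemaker.get_execution_role()
    return session, bucket, role
```

For example, `s3_uri(bucket, PREFIX, "train", "ipinsights.csv")` gives a location for the training file.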

Query the Amazon Security Lake VPC flow log table

This portion of code uses the AWS SDK for pandas to query the AWS Glue table related to VPC Flow Logs. As mentioned in the prerequisites, Amazon Security Lake tables are managed by AWS Lake Formation, so all proper permissions must be granted to the role used by the SageMaker notebook. This query pulls multiple days of VPC flow log traffic. The dataset used during development of this blog was small. Depending on the scale of your use case, you should be aware of the limits of the AWS SDK for pandas. At terabyte scale, consider the AWS SDK for pandas support for Modin.

When you view the dataframe, you will see an output of a single column with common fields that can be found in the Network Activity (4001) class of the OCSF.
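A sketch of such a query, under stated assumptions: the Glue database and table names below follow the Security Lake naming pattern for `us-east-1` with OCSF 1.0 source tables, the `eventday` partition column is assumed to hold `YYYYMMDD` strings, and `build_entity_ip_query` and `query_to_dataframe` are hypothetical helpers rather than the notebook's code. Check the names in your AWS Glue Data Catalog before running it. `wr.athena.read_sql_query` is the AWS SDK for pandas call that runs SQL in Athena and returns a pandas dataframe.

```python
from datetime import date, timedelta

# Assumed Security Lake Glue names (us-east-1, OCSF 1.0 tables); verify in your catalog.
DATABASE = "amazon_security_lake_glue_db_us_east_1"
VPC_FLOW_TABLE = "amazon_security_lake_table_us_east_1_vpc_flow_1_0"


def build_entity_ip_query(table: str, days: int, today: date) -> str:
    """Select (instance_id, src_ip) pairs from an OCSF table for the last `days` days."""
    start = (today - timedelta(days=days)).strftime("%Y%m%d")  # eventday assumed YYYYMMDD
    return (
        "SELECT src_endpoint.instance_uid AS instance_id, "
        "src_endpoint.ip AS src_ip "
        f"FROM {table} "
        "WHERE src_endpoint.instance_uid IS NOT NULL "
        "AND src_endpoint.ip IS NOT NULL "
        f"AND eventday >= '{start}'"
    )


def query_to_dataframe(sql: str, database: str = DATABASE):
    """Run the query in Athena through the AWS SDK for pandas."""
    import awswrangler as wr  # imported here so the query builder has no SDK dependency

    return wr.athena.read_sql_query(sql, database=database)
```

In the notebook, this would be called as `vpc_df = query_to_dataframe(build_entity_ip_query(VPC_FLOW_TABLE, days=7, today=date.today()))`. Filtering on the partition column keeps Athena from scanning the whole table.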

Normalize the Amazon Security Lake VPC flow log data into the required training format for IP Insights

The IP Insights algorithm requires that the training data be in CSV format and contain two columns. The first column must be an opaque string that corresponds to an entity's unique identifier. The second column must be the IPv4 address of the entity's access event in decimal-dot notation. In the sample dataset for this blog, the unique identifier is the instance ID of the EC2 instance, held in the instance_id column of the dataframe. The IPv4 address is derived from the src_endpoint. Because of the way the Amazon Athena query was written, the imported data is already in the correct format for training an IP Insights model, so no additional feature engineering is required. If you modify the query, you may need to incorporate additional feature engineering.
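To make the format requirement concrete, the following sketch turns (entity, IP) pairs into the headerless two-column CSV that IP Insights trains on. It illustrates the case where you change the query and is not the notebook's code; `to_ipinsights_csv` is a hypothetical helper, and dropping rows whose address is not IPv4 (such as IPv6 sources or `-` placeholders) is our assumption about how to handle them.

```python
import csv
import io
import ipaddress
from typing import Iterable, Optional, Tuple


def to_ipinsights_csv(rows: Iterable[Tuple[Optional[str], Optional[str]]]) -> str:
    """Return headerless CSV text with two columns: entity ID, IPv4 address.

    Rows with an empty identifier or an address that is not valid IPv4
    are skipped, because IP Insights expects dotted-decimal IPv4.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for entity, ip in rows:
        if not entity:
            continue
        try:
            addr = ipaddress.IPv4Address((ip or "").strip())
        except ipaddress.AddressValueError:
            continue
        writer.writerow([entity.strip(), str(addr)])
    return out.getvalue()
```

With a pandas dataframe, the equivalent write is `df[["instance_id", "src_ip"]].to_csv(path, header=False, index=False)`.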

Query and normalize the Amazon Security Lake Route 53 resolver log table

Just as you did above, the next step of the notebook runs a similar query against the Amazon Security Lake Route 53 resolver table. Because all of the data in this notebook is OCSF compliant, the feature engineering tasks are the same for Route 53 resolver logs as they were for VPC Flow Logs. You then combine the two dataframes into a single dataframe that is used for training. Because the Amazon Athena query loads the data locally in the correct format, no further feature engineering is required.
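With pandas, combining the two query results is typically `pd.concat([vpc_df, dns_df], ignore_index=True)`. The standard-library sketch below shows the same step on plain (instance_id, ip) pairs. The Route 53 table name is an assumption that follows the same naming pattern as the VPC flow table, and the Route 53 query is assumed to select the same two `src_endpoint` fields. `combine_sources` is a hypothetical helper.

```python
from itertools import chain
from typing import Iterable, List, Tuple

# Assumed OCSF 1.0 Route 53 resolver table name in us-east-1; verify in your catalog.
ROUTE53_TABLE = "amazon_security_lake_table_us_east_1_route53_1_0"

Pair = Tuple[str, str]


def combine_sources(*sources: Iterable[Pair]) -> List[Pair]:
    """Concatenate (instance_id, ip) pairs from several OCSF sources, in order.

    Duplicates are kept on purpose (our assumption): how often an entity
    uses an address is part of what the training data conveys.
    """
    return list(chain.from_iterable(sources))
```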

Get the IP Insights training image and train the model with the OCSF data

In this next portion of the notebook, you train an ML model based on the IP Insights algorithm using the consolidated dataframe of OCSF data from different types of logs. A list of the IP Insights hyperparameters can be found here. In the notebook, we selected the hyperparameters that produced the best-performing model, for example, 5 for epochs and 128 for vector_dim. Because the training dataset for our sample was relatively small, we used an ml.m5.large instance. Choose hyperparameters and training configurations such as instance count and instance type based on your objective metrics and your training data size. One capability you can use within Amazon SageMaker to find the best version of your model is Amazon SageMaker automatic model tuning, which searches for the best model across a range of hyperparameter values.
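A sketch of the training step with the SageMaker Python SDK, assuming the combined pairs were written as headerless CSV to S3 and that `role` is the notebook's execution role. `ipinsights_hyperparameters` and `train_ipinsights` are hypothetical helpers. The `num_entity_vectors` rule of thumb (about twice the number of unique entity identifiers) comes from the IP Insights hyperparameter documentation; the `epochs` and `vector_dim` defaults are the values mentioned above.

```python
from typing import Dict, Iterable, Tuple


def ipinsights_hyperparameters(
    pairs: Iterable[Tuple[str, str]], epochs: int = 5, vector_dim: int = 128
) -> Dict[str, str]:
    """Derive IP Insights hyperparameters from the training pairs."""
    unique_entities = {entity for entity, _ in pairs}
    return {
        # Roughly 2x the number of unique entities, per the algorithm's guidance.
        "num_entity_vectors": str(2 * max(len(unique_entities), 1)),
        "vector_dim": str(vector_dim),
        "epochs": str(epochs),
    }


def train_ipinsights(train_s3_uri, output_s3_uri, role, hyperparameters, instance_type="ml.m5.large"):
    """Launch a SageMaker training job with the built-in IP Insights image."""
    import sagemaker
    from sagemaker import image_uris
    from sagemaker.estimator import Estimator
    from sagemaker.inputs import TrainingInput

    session = sagemaker.Session()
    estimator = Estimator(
        image_uri=image_uris.retrieve("ipinsights", session.boto_region_name),
        role=role,
        instance_count=1,
        instance_type=instance_type,
        output_path=output_s3_uri,
        sagemaker_session=session,
    )
    estimator.set_hyperparameters(**hyperparameters)
    estimator.fit({"train": TrainingInput(train_s3_uri, content_type="text/csv")})
    return estimator
```

To search over these values instead of fixing them, the same estimator can be wrapped in a `HyperparameterTuner` for automatic model tuning.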

Deploy the trained model and test with valid and anomalous traffic

After the model has been trained, you deploy it to a SageMaker endpoint and send a series of unique identifier and IPv4 address combinations to test it. This portion of code assumes you have test data saved in your S3 bucket. The test data is a .csv file where the first column is instance IDs and the second column is IPs. We recommend testing both valid and invalid data to see the results of the model. The notebook then deploys your endpoint.
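A minimal deployment sketch, assuming `estimator` is the trained IP Insights estimator and that the test CSV is small enough to read into memory. The helper names are hypothetical. Attaching a CSV serializer and a JSON deserializer matches the `text/csv` requests and JSON responses used for inference.

```python
import csv
import io
from typing import List, Tuple


def load_test_pairs(csv_text: str) -> List[Tuple[str, str]]:
    """Parse a headerless test CSV: instance ID in column 1, IPv4 address in column 2."""
    return [
        (row[0].strip(), row[1].strip())
        for row in csv.reader(io.StringIO(csv_text))
        if len(row) >= 2  # skips blank lines
    ]


def read_s3_text(bucket: str, key: str) -> str:
    """Download a small S3 object and decode it as UTF-8 text."""
    import boto3

    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"]
    return body.read().decode("utf-8")


def deploy_ipinsights(estimator, instance_type: str = "ml.m5.large"):
    """Deploy a trained IP Insights estimator to a real-time endpoint."""
    from sagemaker.deserializers import JSONDeserializer
    from sagemaker.serializers import CSVSerializer

    return estimator.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        serializer=CSVSerializer(),
        deserializer=JSONDeserializer(),
    )
```

After `predictor = deploy_ipinsights(estimator)`, a CSV string such as `"\n".join(f"{i},{ip}" for i, ip in pairs)` can be passed to `predictor.predict(...)`. `predictor.endpoint_name` holds the endpoint name.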

Now that your endpoint is deployed, you can submit inference requests to identify whether traffic is potentially anomalous. Your formatted data should have an instance ID as the first column identifier and an associated IP address as the second column.

After you have your data in CSV format, you can submit it for inference; the notebook does this by reading your .csv file from an S3 bucket.
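The request and response handling can be sketched with the low-level `sagemaker-runtime` client, which is also what a Lambda function would use. This assumes the endpoint accepts `text/csv` and returns JSON of the form `{"predictions": [{"dot_product": ...}, ...]}`, as in the Lambda log output shown later in this post. The helper names are hypothetical.

```python
import json
from typing import Iterable, List, Tuple


def to_csv_payload(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (entity, ip) pairs as headerless CSV lines for the endpoint."""
    return "\n".join(f"{entity},{ip}" for entity, ip in pairs)


def parse_dot_products(body) -> List[float]:
    """Extract dot_product scores, in request order, from an IP Insights JSON response."""
    if isinstance(body, (bytes, bytearray)):
        body = body.decode("utf-8")
    return [p["dot_product"] for p in json.loads(body)["predictions"]]


def score_pairs(endpoint_name: str, pairs: Iterable[Tuple[str, str]]) -> List[float]:
    """Invoke the endpoint through the SageMaker runtime API and return the scores."""
    import boto3

    response = boto3.client("sagemaker-runtime").invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Accept="application/json",
        Body=to_csv_payload(pairs),
    )
    return parse_dot_products(response["Body"].read())
```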

The output of an IP Insights model is a score (the dot_product) that measures how statistically expected a pairing of an IP address and an online resource is. The score is unbounded, however, so you need to decide how to determine whether an instance ID and IP address combination should be considered anomalous.

In the notebook's example, four different identifier and IP combinations were submitted to the model. The first two combinations were valid instance ID and IP address combinations that are expected based on the training set. The third combination has the correct unique identifier but a different IP address within the same subnet; the model should report a modest anomaly because the embedding is slightly different from the training data. The fourth combination has a valid unique identifier but an IP address from a subnet that doesn't exist in any VPC in the environment.

Note: Normal and abnormal traffic data will change based on your specific use case. For example, if you want to monitor external and internal traffic, you would need a unique identifier aligned to each IP address and a scheme to generate the external identifiers.

You can determine the threshold for classifying traffic as anomalous by using known normal and abnormal traffic. The steps outlined in this sample notebook are as follows:

- Construct a test set to represent normal traffic.

- Add abnormal traffic into the dataset.

- Plot the distribution of `dot_product` scores for the model on normal traffic and on abnormal traffic.

- Select a threshold value that distinguishes the normal subset from the abnormal subset. This value is based on your false-positive tolerance.
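The last two steps can be sketched without plotting, assuming lower `dot_product` scores mean less expected traffic. Here the false-positive tolerance is expressed as the largest fraction of known-normal traffic you are willing to flag. `choose_threshold` and `evaluate` are hypothetical helpers, and taking a quantile of the normal scores is one simple rule among several.

```python
import math
from typing import Sequence, Tuple


def choose_threshold(normal_scores: Sequence[float], fp_tolerance: float) -> float:
    """Pick a threshold so that at most `fp_tolerance` of normal scores fall
    strictly below it (scores below the threshold are flagged as anomalous)."""
    if not normal_scores:
        raise ValueError("need at least one normal score")
    if not 0.0 <= fp_tolerance < 1.0:
        raise ValueError("fp_tolerance must be in [0, 1)")
    ordered = sorted(normal_scores)
    k = math.floor(fp_tolerance * len(ordered))
    return ordered[k]  # at most k normal scores lie strictly below this value


def evaluate(
    threshold: float, normal_scores: Sequence[float], abnormal_scores: Sequence[float]
) -> Tuple[float, float]:
    """Return (false-positive rate on normal traffic, detection rate on abnormal traffic)."""
    fp_rate = sum(s < threshold for s in normal_scores) / len(normal_scores)
    detection_rate = sum(s < threshold for s in abnormal_scores) / len(abnormal_scores)
    return fp_rate, detection_rate
```

For the plot itself, histograms of both score lists on shared axes (for example with matplotlib's `plt.hist`) show how much the two distributions overlap and whether any threshold separates them well.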

Set up continuous monitoring of new VPC flow log traffic

To demonstrate how this new ML model could be used proactively with Amazon Security Lake, we configure a Lambda function to be invoked on each PutObject event within the Amazon Security Lake managed bucket, specifically for the VPC flow log data. Amazon Security Lake has the concept of a subscriber, which consumes logs and events from Amazon Security Lake. The Lambda function that responds to new events must be granted a data access subscription. Data access subscribers are notified of new Amazon S3 objects for a source as the objects are written to the Security Lake bucket. Subscribers can directly access the S3 objects and receive notifications of new objects through a subscription endpoint or by polling an Amazon SQS queue.
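A subscriber that polls SQS receives each notification as the `body` of an SQS record. The exact message shape depends on how notifications are delivered, so the sketch below (a hypothetical helper, `objects_from_sqs_event`) accepts both S3 notification formats: EventBridge "Object Created" events and classic S3 event notifications. Classic S3 notifications URL-encode object keys, which matters because Security Lake partition paths contain `=`; we assume the EventBridge key is not encoded. Inspect a message from your queue to confirm.

```python
import json
from typing import Dict, List, Tuple
from urllib.parse import unquote_plus


def objects_from_sqs_event(event: Dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) for each new object announced in an SQS-triggered Lambda event."""
    found = []
    for record in event.get("Records", []):
        message = json.loads(record["body"])
        # EventBridge-shaped S3 event: bucket and object under "detail".
        if "detail" in message:
            detail = message["detail"]
            found.append((detail["bucket"]["name"], detail["object"]["key"]))
        # Classic S3 event notification: URL-encoded keys under "Records".
        for s3_record in message.get("Records", []):
            s3 = s3_record["s3"]
            found.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return found
```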

- Open the Security Lake console.

- In the navigation pane, choose Subscribers.

- On the Subscribers page, choose Create subscriber.

- For Subscriber details, enter `inferencelambda` for Subscriber name and an optional Description.

- The Region is automatically set to your currently selected AWS Region and can't be modified.

- For Log and event sources, choose Specific log and event sources, and then choose VPC Flow Logs and Route 53 logs.

- For Data access method, choose S3.

- For Subscriber credentials, provide the AWS account ID of the account where the Lambda function will reside and a user-specified external ID. Note: If you're doing this locally within a single account, you don't need an external ID.

- Choose Create.
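If you prefer to script the subscriber, the boto3 `securitylake` client exposes `create_subscriber` and `create_subscriber_notification`. The sketch below reflects our reading of that API reference; verify the field names and the source version (`"1.0"` here, matching OCSF 1.0 source tables) against your Security Lake deployment. `subscriber_request` and `create_subscriber_with_sqs` are hypothetical helpers.

```python
from typing import Dict, Optional


def subscriber_request(
    name: str,
    account_id: str,
    external_id: str,
    source_version: str = "1.0",
    description: Optional[str] = None,
) -> Dict:
    """Build CreateSubscriber arguments for S3 data access to VPC flow and Route 53 logs."""
    request = {
        "subscriberName": name,
        "subscriberIdentity": {"principal": account_id, "externalId": external_id},
        "sources": [
            {"awsLogSource": {"sourceName": source, "sourceVersion": source_version}}
            for source in ("VPC_FLOW", "ROUTE53")
        ],
        "accessTypes": ["S3"],
    }
    if description:
        request["subscriberDescription"] = description
    return request


def create_subscriber_with_sqs(request: Dict) -> Dict:
    """Create the subscriber, then ask Security Lake to notify it through SQS."""
    import boto3

    client = boto3.client("securitylake")
    subscriber = client.create_subscriber(**request)["subscriber"]
    client.create_subscriber_notification(
        subscriberId=subscriber["subscriberId"],
        configuration={"sqsNotificationConfiguration": {}},
    )
    return subscriber
```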

Create the Lambda function

To create and deploy the Lambda function, you can either complete the following steps or deploy the prebuilt SAM template 01_ipinsights/01.02-ipcheck.yaml in the GitHub repo. The SAM template requires that you provide the SQS ARN and the SageMaker endpoint name.

- On the Lambda console, choose Create function.

- Choose Author from scratch.

- For Function name, enter `ipcheck`.

- For Runtime, choose Python 3.10.

- For Architecture, select x86_64.

- For Execution role, select Create a new role with basic Lambda permissions.

- After you create the function, enter the contents of the ipcheck.py file from the GitHub repo.

- In the navigation pane, choose Environment variables.

- Choose Edit.

- Choose Add environment variable.

- For the new environment variable, enter `ENDPOINT_NAME` as the key and, for the value, enter the name of the endpoint that was output during deployment of the SageMaker endpoint.

- Choose Save.

- Choose Deploy.

- In the navigation pane, choose Configuration.

- Select Triggers.

- Select Add trigger.

- Under Select a source, choose SQS.

- Under SQS queue, enter the ARN of the main SQS queue created by Security Lake.

- Select the checkbox for Activate trigger.

- Choose Add.
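The repository's ipcheck.py is not reproduced here. The following is a minimal sketch of what such a handler could look like, under these assumptions: each SQS message is an EventBridge-shaped S3 notification, Security Lake objects are Parquet files read with awswrangler (for example, provided through a Lambda layer), and each object is small enough to score in a single request. `lambda_handler`, `read_ocsf_records`, and `pairs_from_ocsf` are hypothetical names; set the function's handler setting to match the file and function names you use.

```python
import json
import logging
import os
from typing import Dict, Iterable, List, Tuple

logger = logging.getLogger()
logger.setLevel(logging.INFO)

# Read from the function's ENDPOINT_NAME environment variable.
ENDPOINT_NAME = os.environ.get("ENDPOINT_NAME", "")


def pairs_from_ocsf(records: Iterable[Dict]) -> List[Tuple[str, str]]:
    """Collect (instance_uid, ip) from each OCSF record's src_endpoint, skipping gaps."""
    pairs = []
    for record in records:
        endpoint = record.get("src_endpoint") or {}
        uid, ip = endpoint.get("instance_uid"), endpoint.get("ip")
        if uid and ip:
            pairs.append((uid, ip))
    return pairs


def read_ocsf_records(bucket: str, key: str) -> List[Dict]:
    """Read one Security Lake Parquet object into a list of row dicts."""
    import awswrangler as wr  # assumed available, e.g. through a Lambda layer

    return wr.s3.read_parquet(path=f"s3://{bucket}/{key}").to_dict("records")


def lambda_handler(event, context):
    # boto3 is imported here only so the helpers above can be tested without it;
    # in a deployed function, a module-level client is reused across warm invocations.
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    for sqs_record in event.get("Records", []):
        # Assumes an EventBridge-shaped S3 "Object Created" message body.
        detail = json.loads(sqs_record["body"])["detail"]
        pairs = pairs_from_ocsf(read_ocsf_records(detail["bucket"]["name"], detail["object"]["key"]))
        if not pairs:
            continue
        response = runtime.invoke_endpoint(
            EndpointName=ENDPOINT_NAME,
            ContentType="text/csv",
            Accept="application/json",
            Body="\n".join(f"{uid},{ip}" for uid, ip in pairs),
        )
        # Writes the parsed predictions dict to CloudWatch Logs.
        logger.info(json.loads(response["Body"].read()))
```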

Validate Lambda findings

- Open the Amazon CloudWatch console.

- In the left side pane, select Log groups.

- In the search bar, enter ipcheck, and then select the log group with the name `/aws/lambda/ipcheck`.

- Select the most recent log stream under Log streams.

- Within the logs, you should see results that look like the following for each new Amazon Security Lake log:

{'predictions': [{'dot_product': 0.018832731992006302}, {'dot_product': 0.018832731992006302}]}

This Lambda function continually analyzes the network traffic being ingested by Amazon Security Lake. This lets you build mechanisms to notify your security teams when a specified threshold is violated, which would indicate anomalous traffic in your environment.
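One way to act on the scores, sketched under assumptions: flag pairs whose `dot_product` falls below a threshold chosen from known normal traffic, and publish the findings to an Amazon SNS topic that your security team subscribes to. SNS, the topic ARN, and the helper names are our choices for illustration; the post itself does not prescribe a notification channel.

```python
from typing import List, Sequence, Tuple

Anomaly = Tuple[str, str, float]


def flag_anomalies(
    pairs: Sequence[Tuple[str, str]], scores: Sequence[float], threshold: float
) -> List[Anomaly]:
    """Return (instance_id, ip, score) for every pair scoring below the threshold."""
    if len(pairs) != len(scores):
        raise ValueError("pairs and scores must align one-to-one")
    return [(uid, ip, s) for (uid, ip), s in zip(pairs, scores) if s < threshold]


def format_alert(anomalies: Sequence[Anomaly]) -> str:
    """Render anomalies as a plain-text alert body."""
    lines = [f"{uid} from {ip}: dot_product={score:.4f}" for uid, ip, score in anomalies]
    return "Potentially anomalous traffic:\n" + "\n".join(lines)


def notify(topic_arn: str, anomalies: Sequence[Anomaly]) -> None:
    """Publish an alert to a (hypothetical) SNS topic; does nothing when there are no anomalies."""
    if not anomalies:
        return
    import boto3

    boto3.client("sns").publish(
        TopicArn=topic_arn,
        Subject="IP Insights anomaly",
        Message=format_alert(anomalies),
    )
```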

Cleanup

When you're finished experimenting with this solution, clean up your resources to avoid charges to your account: delete the S3 bucket and the SageMaker endpoint, shut down the compute attached to the SageMaker Jupyter notebook, delete the Lambda function, and disable Amazon Security Lake in your account.

Conclusion

In this post, you learned how to prepare network traffic data sourced from Amazon Security Lake for machine learning, and then trained and deployed an ML model using the IP Insights algorithm in Amazon SageMaker. All of the steps outlined in the Jupyter notebook can be replicated in an end-to-end ML pipeline. You also implemented an AWS Lambda function that consumed new Amazon Security Lake logs and submitted inferences based on the trained anomaly detection model. The ML model responses received by AWS Lambda could proactively notify security teams of anomalous traffic when certain thresholds are met. You can enable continuous improvement of the model by including your security team in human-in-the-loop reviews that label whether traffic identified as anomalous was a false positive. Confirmed false positives could then be added to your training set and to your normal traffic dataset when determining an empirical threshold. This model can identify potentially anomalous network traffic or behavior, and it can be included as part of a larger security solution to initiate an MFA check if a user is signing in from an unusual server or at an unusual time, alert staff if there is a suspicious network scan coming from new IP addresses, or combine the IP Insights score with other sources such as Amazon GuardDuty to rank threat findings. The model can also cover custom log sources such as Azure flow logs or on-premises logs if you add those custom sources to your Amazon Security Lake deployment.

In part 2 of this blog post series, you will learn how to build an anomaly detection model using the Random Cut Forest algorithm, trained with additional Amazon Security Lake sources that integrate network and host security log data, and how to apply the security anomaly classification as part of an automated, comprehensive security monitoring solution.

About the authors

Joe Morotti is a Solutions Architect at Amazon Web Services (AWS), helping Enterprise customers across the Midwest US. He has held a wide range of technical roles and enjoys showing customers the art of the possible. In his free time, he enjoys spending quality time with his family exploring new places and overanalyzing his sports team's performance.

Bishr Tabbaa is a Solutions Architect at Amazon Web Services. Bishr specializes in helping customers with machine learning, security, and observability applications. Outside of work, he enjoys playing tennis, cooking, and spending time with family.

Sriharsh Adari is a Senior Solutions Architect at Amazon Web Services (AWS), where he helps customers work backwards from business outcomes to develop innovative solutions on AWS. Over the years, he has helped multiple customers with data platform transformations across industry verticals. His core areas of expertise include Technology Strategy, Data Analytics, and Data Science. In his spare time, he enjoys playing tennis, binge-watching TV shows, and playing Tabla.
