Harnessing the power of enterprise data with generative AI: Insights from Amazon Kendra, LangChain, and large language models

Large language models (LLMs), with their broad knowledge, can generate human-like text on almost any topic. However, their training on massive datasets also limits their usefulness for specialized tasks. Without continued learning, these models remain oblivious to new data and trends that emerge after their initial training. Furthermore, the cost to train new LLMs can prove prohibitive for many enterprise settings. Still, it’s possible to cross-reference a model answer with the original specialized content, and so avoid the need to train a new LLM, by using Retrieval-Augmented Generation (RAG).

RAG empowers LLMs by giving them the ability to retrieve and incorporate external knowledge. Instead of relying solely on their pre-trained knowledge, RAG allows models to pull data from documents, databases, and more. The model then integrates this outside information into its generated text. By sourcing context-relevant data, the model can provide informed, up-to-date responses tailored to your use case. The knowledge augmentation also reduces the likelihood of hallucinations and inaccurate or nonsensical text. With RAG, foundation models become adaptable experts that evolve as your knowledge base grows.
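To make the idea concrete, the following is a minimal sketch of the "augmentation" step: retrieved passages are placed in the prompt ahead of the user's question. The function name `build_rag_prompt`, the `max_chars` budget, and the prompt wording are illustrative assumptions, not code taken from the demos.

```python
from typing import List


def build_rag_prompt(question: str, passages: List[str], max_chars: int = 4000) -> str:
    """Place retrieved passages in a prompt ahead of the user's question."""
    context_parts: List[str] = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage.strip()}"
        # Stop adding passages once the (hypothetical) context budget is used up.
        if used + len(entry) > max_chars:
            break
        context_parts.append(entry)
        used += len(entry)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up passage and question, for illustration only.
    print(
        build_rag_prompt(
            "What is the travel reimbursement limit?",
            ["Travel expenses are reimbursed up to the limit set in policy HR-12."],
        )
    )
```

Numbering the passages makes it easier to ask the model to cite which passage supported its answer; the character budget is a crude stand-in for a token limit.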

Today, we’re excited to unveil three generative AI demos, licensed under the MIT-0 license:

- Amazon Kendra with foundational LLM – Uses the deep search capabilities of Amazon Kendra combined with the expansive knowledge of LLMs. This integration provides precise and context-aware answers to complex queries by drawing from a diverse range of sources.

- Embeddings model with foundational LLM – Merges the power of embeddings (a technique to capture the semantic meanings of words and phrases) with the vast knowledge base of LLMs. This synergy enables more accurate topic modeling, content recommendation, and semantic search capabilities.

- Foundation Models Pharma Ad Generator – A specialized application tailored for the pharmaceutical industry. Harnessing the generative capabilities of foundational models, this tool creates convincing and compliant pharmaceutical advertisements, ensuring content adheres to industry standards and regulations.

These demos can be seamlessly deployed in your AWS account, offering foundational insights and guidance on using AWS services to create a state-of-the-art LLM generative AI question and answer bot and content generation.

In this post, we explore how RAG combined with Amazon Kendra or custom embeddings can overcome these challenges and provide refined responses to natural language queries.

Solution overview

By adopting this solution, you can gain the following benefits:

- Improved information access – RAG allows models to pull in information from vast external sources, which can be especially useful when the pre-trained model’s knowledge is outdated or incomplete.

- Scalability – Instead of training a model on all available data, RAG allows models to retrieve relevant information on the fly. This means that as new data becomes available, it can be added to the retrieval database without needing to retrain the entire model.

- Memory efficiency – LLMs require significant memory to store parameters. With RAG, the model can be smaller because it doesn’t need to memorize all details; it can retrieve them when needed.

- Dynamic knowledge update – Unlike conventional models with a set knowledge endpoint, RAG’s external database can undergo regular updates, granting the model access to up-to-date information. The retrieval function can be fine-tuned for distinct tasks. For example, a medical diagnostic task can source data from medical journals, ensuring the model garners expert and pertinent insights.

- Bias mitigation – The ability to draw from a well-curated database provides the potential to minimize biases by ensuring balanced and impartial external sources.

Before diving into the integration of Amazon Kendra with foundational LLMs, it’s crucial to equip yourself with the necessary tools and system requirements. Having the right setup in place is the first step towards a seamless deployment of the demos.

Prerequisites

You must have the following prerequisites:

Although it’s possible to set up and deploy the infrastructure detailed in this tutorial from your local computer, AWS Cloud9 offers a convenient alternative. Pre-equipped with tools like the AWS CLI, AWS CDK, and Docker, AWS Cloud9 can function as your deployment workstation. To use this service, simply set up the environment through the AWS Cloud9 console.

With the prerequisites out of the way, let’s dive into the features and capabilities of Amazon Kendra with foundational LLMs.

Amazon Kendra with foundational LLM

Amazon Kendra is an advanced enterprise search service enhanced by machine learning (ML) that provides out-of-the-box semantic search capabilities. Using natural language processing (NLP), Amazon Kendra comprehends both the content of documents and the underlying intent of user queries, positioning it as a content retrieval tool for RAG-based solutions. By using the high-accuracy search content from Amazon Kendra as a RAG payload, you can get better LLM responses. The use of Amazon Kendra in this solution also enables personalized search by filtering responses according to the end-user content access permissions.

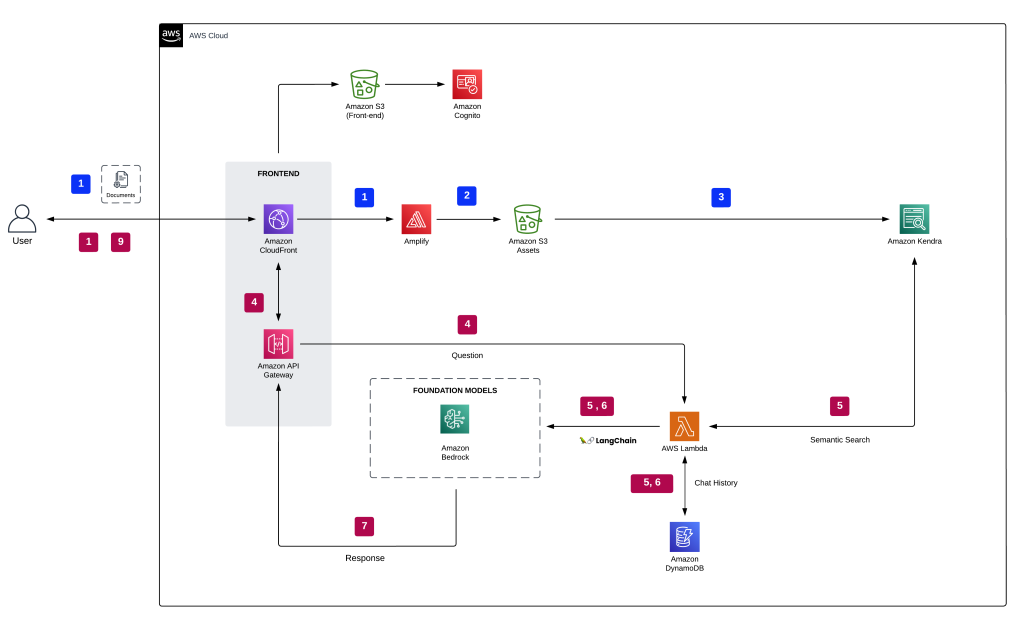

The next diagram exhibits the structure of a generative AI software utilizing the RAG method.

Documents are processed and indexed by Amazon Kendra through the Amazon Simple Storage Service (Amazon S3) connector. Customer requests and contextual data from Amazon Kendra are directed to an Amazon Bedrock foundation model. The demo lets you choose between Amazon’s Titan, AI21’s Jurassic, and Anthropic’s Claude models supported by Amazon Bedrock. The conversation history is saved in Amazon DynamoDB, offering added context for the LLM to generate responses.

We have provided this demo in the GitHub repo. Refer to the deployment instructions within the readme file for deploying it into your AWS account.

The following steps outline the process when a user interacts with the generative AI app:

- The user logs in to the web app authenticated by Amazon Cognito.

- The user uploads one or more documents into Amazon S3.

- The user runs an Amazon Kendra sync job to ingest S3 documents into the Amazon Kendra index.

- The user’s question is routed through a secure WebSocket API hosted on Amazon API Gateway backed by an AWS Lambda function.

- The Lambda function, powered by the LangChain framework (a versatile tool designed for creating applications driven by AI language models), connects to the Amazon Bedrock endpoint to rephrase the user’s question based on chat history. After rephrasing, the question is forwarded to Amazon Kendra using the Retrieve API. In response, the Amazon Kendra index returns search results, providing excerpts from pertinent documents sourced from the enterprise’s ingested data.

- The user’s question, along with the data retrieved from the index, is sent as context in the LLM prompt. The response from the LLM is stored as chat history within DynamoDB.

- Finally, the response from the LLM is sent back to the user.
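The retrieval step in this flow can be sketched as a direct call to the Amazon Kendra Retrieve API through boto3. This is an illustrative sketch only, not the demo's own code; the post says the demo goes through LangChain. The helper name `retrieve_passages` and the returned dictionary keys are assumptions; `IndexId`, `QueryText`, `PageSize`, and the `ResultItems` fields are part of the Retrieve API.

```python
from typing import Any, Dict, List


def retrieve_passages(
    kendra_client: Any, index_id: str, query: str, top_k: int = 3
) -> List[Dict[str, str]]:
    """Call the Amazon Kendra Retrieve API and keep the fields a prompt needs."""
    response = kendra_client.retrieve(IndexId=index_id, QueryText=query, PageSize=top_k)
    passages = []
    for item in response.get("ResultItems", []):
        passages.append(
            {
                "title": item.get("DocumentTitle", ""),
                "uri": item.get("DocumentURI", ""),
                "text": item.get("Content", ""),
            }
        )
    return passages


# Usage sketch (requires boto3 and AWS credentials; the index ID is a placeholder):
#   import boto3
#   kendra = boto3.client("kendra")
#   passages = retrieve_passages(kendra, "<your-index-id>", "What is our leave policy?")
```

Passing the client in as an argument keeps the function easy to exercise with a stub, without calling AWS.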

Document indexing workflow

The following is the procedure for processing and indexing documents:

- Users submit documents through the user interface (UI).

- Documents are transferred to an S3 bucket using the AWS Amplify API.

- Amazon Kendra indexes new documents in the S3 bucket through the Amazon Kendra S3 connector.
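If you prefer to trigger the indexing step programmatically rather than from the console, a sync job can be started with boto3. The sketch below is an assumption about how you might script it, not part of the demo; the helper names are hypothetical, while `start_data_source_sync_job` and `list_data_source_sync_jobs` are existing Amazon Kendra client operations.

```python
from typing import Any, Optional


def start_sync(kendra_client: Any, index_id: str, data_source_id: str) -> str:
    """Start a sync job for one Kendra data source and return its execution ID."""
    response = kendra_client.start_data_source_sync_job(Id=data_source_id, IndexId=index_id)
    return response["ExecutionId"]


def sync_status(
    kendra_client: Any, index_id: str, data_source_id: str, execution_id: str
) -> Optional[str]:
    """Look up the status of a sync job in the data source's sync history."""
    response = kendra_client.list_data_source_sync_jobs(Id=data_source_id, IndexId=index_id)
    for job in response.get("History", []):
        if job.get("ExecutionId") == execution_id:
            return job.get("Status")
    # The job is not in the first page of history (pagination is omitted in this sketch).
    return None


# Usage sketch (requires boto3 and AWS credentials; IDs are placeholders):
#   import boto3
#   kendra = boto3.client("kendra")
#   run_id = start_sync(kendra, "<index-id>", "<data-source-id>")
#   print(sync_status(kendra, "<index-id>", "<data-source-id>", run_id))
```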

Advantages

The following list highlights the advantages of this solution:

- Enterprise-level retrieval – Amazon Kendra is designed for enterprise search, making it suitable for organizations with vast amounts of structured and unstructured data.

- Semantic understanding – The ML capabilities of Amazon Kendra ensure that retrieval is based on deep semantic understanding and not just keyword matches.

- Scalability – Amazon Kendra can handle large-scale data sources and provides quick and relevant search results.

- Flexibility – The foundational model can generate answers based on a wide range of contexts, ensuring the system remains versatile.

- Integration capabilities – Amazon Kendra can be integrated with various AWS services and data sources, making it adaptable for different organizational needs.

Embeddings model with foundational LLM

An embedding is a numerical vector that represents the core essence of diverse data types, including text, images, audio, and documents. This representation not only captures the data’s intrinsic meaning, but also adapts it for a wide range of practical applications. Embedding models, a branch of ML, transform complex data, such as words or phrases, into continuous vector spaces. These vectors inherently capture the semantic connections between data, enabling deeper and more insightful comparisons.

RAG combines the strengths of foundational models, like transformers, with the precision of embeddings to sift through vast databases for pertinent information. Upon receiving a query, the system uses embeddings to identify and extract relevant sections from an extensive body of data. The foundational model then formulates a contextually precise response based on this extracted information. This synergy between data retrieval and response generation allows the system to provide thorough answers, drawing from the vast knowledge stored in expansive databases.

In the architectural layout, based on their UI selection, users are guided to either the Amazon Bedrock or Amazon SageMaker JumpStart foundation models. Documents undergo processing, and vector embeddings are produced by the embeddings model. These embeddings are then indexed using FAISS to enable efficient semantic search. Conversation histories are preserved in DynamoDB, enriching the context for the LLM to craft responses.
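To illustrate what "semantic search over embeddings" means, the following sketch ranks passages by cosine similarity against a query vector. It deliberately swaps FAISS for a brute-force loop in plain Python so it stays self-contained; the tiny two-dimensional vectors and passage IDs are made up, and real embeddings would come from the embeddings model.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_passages(
    query_vec: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Score every stored embedding against the query and return the k best matches."""
    scored = [(pid, cosine_similarity(query_vec, vec)) for pid, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy "index": passage ID -> embedding (values are illustrative only).
    toy_index = {"refund-policy": [1.0, 0.0], "office-hours": [0.0, 1.0], "returns-faq": [1.0, 1.0]}
    print(top_k_passages([1.0, 0.0], toy_index, k=2))
```

A brute-force scan like this costs time proportional to the number of stored vectors, which is why a dedicated vector index such as FAISS is used once the collection grows.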

The following diagram illustrates the solution architecture and workflow.

We have provided this demo in the GitHub repo. Refer to the deployment instructions within the readme file for deploying it into your AWS account.

Embeddings model

The responsibilities of the embeddings model are as follows:

- This model is responsible for converting text (like documents or passages) into dense vector representations, commonly known as embeddings.

- These embeddings capture the semantic meaning of the text, allowing for efficient and semantically meaningful comparisons between different pieces of text.

- The embeddings model can be trained on the same vast corpus as the foundational model or can be specialized for specific domains.

Q&A workflow

The following steps describe the workflow of the question answering over documents:

- The user logs in to the web app authenticated by Amazon Cognito.

- The user uploads one or more documents to Amazon S3.

- Upon document transfer, an S3 event notification triggers a Lambda function, which then calls the SageMaker embedding model endpoint to generate embeddings for the new document. The embeddings model converts the question into a dense vector representation (embedding). The resulting vector file is securely stored within the S3 bucket.

- The FAISS retriever compares this question embedding with the embeddings of all documents or passages in the database to find the most relevant passages.

- The passages, along with the user’s question, are provided as context to the foundational model. The Lambda function uses the LangChain library and connects to the Amazon Bedrock or SageMaker JumpStart endpoint with a context-stuffed query.

- The response from the LLM is stored in DynamoDB along with the user’s query, the timestamp, a unique identifier, and other arbitrary identifiers for the item such as question category. Storing the question and answer as discrete items allows the Lambda function to easily recreate a user’s conversation history based on the time when questions were asked.

- Finally, the response is sent back to the user through an HTTPS request through the API Gateway WebSocket API integration response.
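The chat-history step above can be sketched in memory as follows. The item layout (field names such as `session_id`, `message_id`, and `category`) is an assumption chosen to mirror the description, not the demo's actual table schema; in DynamoDB, a session identifier as partition key and the timestamp as sort key would be one plausible way to get the same ordering from a query.

```python
import time
import uuid
from typing import Any, Dict, List, Optional, Tuple


def make_chat_item(
    session_id: str,
    question: str,
    answer: str,
    category: Optional[str] = None,
    timestamp: Optional[int] = None,
) -> Dict[str, Any]:
    """Build one discrete question/answer record, as it might be stored per turn."""
    item: Dict[str, Any] = {
        "session_id": session_id,
        "message_id": str(uuid.uuid4()),  # unique identifier for the item
        "timestamp": timestamp if timestamp is not None else int(time.time() * 1000),
        "question": question,
        "answer": answer,
    }
    if category is not None:
        item["category"] = category  # optional, arbitrary extra identifier
    return item


def rebuild_history(items: List[Dict[str, Any]], session_id: str) -> List[Tuple[str, str]]:
    """Recreate one user's conversation, ordered by when each question was asked."""
    turns = [item for item in items if item["session_id"] == session_id]
    turns.sort(key=lambda item: item["timestamp"])
    return [(item["question"], item["answer"]) for item in turns]
```

The ordered list of (question, answer) pairs is the form that can be fed back into the prompt as conversation context for the next turn.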

Advantages

The following list describes the advantages of this solution:

- Semantic understanding – The embeddings model ensures that the retriever selects passages based on deep semantic understanding, not just keyword matches.

- Scalability – Embeddings allow for efficient similarity comparisons, making it feasible to search through vast databases of documents quickly.

- Flexibility – The foundational model can generate answers based on a wide range of contexts, ensuring the system remains versatile.

- Domain adaptability – The embeddings model can be trained or fine-tuned for specific domains, allowing the system to be adapted for various applications.

Foundation Models Pharma Ad Generator

In today’s fast-paced pharmaceutical industry, efficient and localized advertising is more crucial than ever. This is where an innovative solution comes into play, using the power of generative AI to craft localized pharma ads from source images and PDFs. Beyond merely speeding up the ad generation process, this approach streamlines the Medical Legal Review (MLR) process. MLR is a rigorous review mechanism in which medical, legal, and regulatory teams meticulously evaluate promotional materials to guarantee their accuracy, scientific backing, and regulatory compliance. Traditional content creation methods can be cumbersome, often requiring manual adjustments and extensive reviews to ensure alignment with regional compliance and relevance. However, with the advent of generative AI, we can now automate the crafting of ads that truly resonate with local audiences, all while upholding stringent standards and guidelines.

The following diagram illustrates the solution architecture.

In the architectural layout, based on their chosen model and ad preferences, users are guided to the Amazon Bedrock foundation models. This streamlined approach ensures that new ads are generated precisely according to the desired configuration. As part of the process, documents are handled by Amazon Textract, with the resultant text securely stored in DynamoDB. A standout feature is the modular design for image and text generation, granting you the flexibility to independently regenerate any component as required.

We have provided this demo in the GitHub repo. Refer to the deployment instructions within the readme file for deploying it into your AWS account.

Content generation workflow

The following steps outline the process for content generation:

- The user chooses their document, source image, ad placement, language, and image style.

- Secure access to the web application is ensured through Amazon Cognito authentication.

- The web application’s front end is hosted through Amplify.

- A WebSocket API, managed by API Gateway, facilitates user requests. These requests are authenticated through AWS Identity and Access Management (IAM).

- Integration with Amazon Bedrock includes the following steps:

  - A Lambda function employs the LangChain library to connect to the Amazon Bedrock endpoint using a context-rich query.

  - The text-to-text foundational model crafts a contextually appropriate ad based on the given context and settings.

  - The text-to-image foundational model creates a tailored image, influenced by the source image, chosen style, and location.

- The user receives the response through an HTTPS request through the integrated API Gateway WebSocket API.

Document and image processing workflow

The following is the procedure for processing documents and images:

- The user uploads assets through the specified UI.

- The Amplify API transfers the documents to an S3 bucket.

- After the asset is transferred to Amazon S3, one of the following actions takes place:

  - If it’s a document, a Lambda function uses Amazon Textract to process and extract text for ad generation.

  - If it’s an image, the Lambda function converts it to base64 format, suitable for the Stable Diffusion model to create a new image from the source.

- The extracted text or base64 image string is securely stored in DynamoDB.
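The two branches of this workflow can be sketched with small helpers. The function names are hypothetical; the Textract part assumes a response shaped like the output of the text detection API, where each detected line appears as a block with `BlockType` set to `LINE` and a `Text` field. How the demo's Lambda function actually structures this is not shown in the post.

```python
import base64
from typing import Any, Dict


def image_bytes_to_base64(data: bytes) -> str:
    """Encode raw image bytes as a base64 string suitable for a JSON request body."""
    return base64.b64encode(data).decode("utf-8")


def textract_lines_to_text(response: Dict[str, Any]) -> str:
    """Join the LINE blocks of a Textract text-detection response into plain text."""
    lines = [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE" and "Text" in block
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Stand-in inputs; a real run would read the uploaded object from Amazon S3.
    print(image_bytes_to_base64(b"abc"))
    sample = {
        "Blocks": [
            {"BlockType": "PAGE"},
            {"BlockType": "LINE", "Text": "Indications and usage"},
            {"BlockType": "WORD", "Text": "Indications"},
        ]
    }
    print(textract_lines_to_text(sample))
```

Keeping only `LINE` blocks avoids duplicating text, because the same words also appear as separate `WORD` blocks in the response.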

Advantages

The following list describes the advantages of this solution:

- Efficiency – The use of generative AI significantly accelerates the ad generation process, eliminating the need for manual adjustments.

- Compliance adherence – The solution ensures that generated ads adhere to specific guidance and regulations, such as the FDA’s guidelines for marketing.

- Cost-effective – By automating the creation of tailored ads, companies can significantly reduce costs associated with ad production and revisions.

- Streamlined MLR process – The solution simplifies the MLR process, reducing friction points and ensuring smoother reviews.

- Localized resonance – Generative AI produces ads that resonate with local audiences, ensuring relevance and impact in different regions.

- Standardization – The solution maintains necessary standards and guidelines, ensuring consistency across all generated ads.

- Scalability – The AI-driven approach can handle vast databases of source images and PDFs, making it feasible for large-scale ad generation.

- Reduced manual intervention – The automation reduces the need for human intervention, minimizing errors and ensuring consistency.

You can deploy the infrastructure in this tutorial from your local computer, or you can use AWS Cloud9 as your deployment workstation. AWS Cloud9 comes pre-loaded with the AWS CLI, AWS CDK, and Docker. If you opt for AWS Cloud9, create the environment from the AWS Cloud9 console.

Clean up

To avoid unnecessary cost, clean up all the infrastructure created through the AWS CloudFormation console or by running the following command on your workstation:

Additionally, remember to stop any SageMaker endpoints you initiated through the SageMaker console. Note that deleting an Amazon Kendra index doesn’t remove the original documents from your storage.

Conclusion

Generative AI, epitomized by LLMs, heralds a paradigm shift in how we access and generate information. These models, while powerful, are often limited by the confines of their training data. RAG addresses this challenge, ensuring that the vast knowledge within these models is consistently infused with relevant, current insights.

Our RAG-based demos provide a tangible testament to this. They showcase the synergy between Amazon Kendra, vector embeddings, and LLMs, creating a system where information isn’t only vast but also accurate and timely. As you dive into these demos, you’ll explore firsthand the transformational potential of merging pre-trained knowledge with the dynamic capabilities of RAG, resulting in outputs that are both trustworthy and tailored to enterprise content.

Although generative AI powered by LLMs opens up a new way of gaining information insights, these insights must be trustworthy and confined to enterprise content using the RAG approach. These RAG-based demos equip you with insights that are accurate and up to date. The quality of these insights depends on semantic relevance, which is enabled by using Amazon Kendra and vector embeddings.

If you’re ready to further explore and harness the power of generative AI, here are your next steps:

- Engage with our demos – The hands-on experience is invaluable. Explore the functionalities, understand the integrations, and familiarize yourself with the interface.

- Deepen your knowledge – Take advantage of the resources available. AWS offers in-depth documentation, tutorials, and community support to assist in your AI journey.

- Initiate a pilot project – Consider starting with a small-scale implementation of generative AI in your enterprise. This will provide insights into the system’s practicality and adaptability within your specific context.

For more information about generative AI applications on AWS, refer to the following:

Remember, the landscape of AI is constantly evolving. Stay updated, remain curious, and always be ready to adapt and innovate.

About the Authors

Jin Tan Ruan is a Prototyping Developer within the AWS Industries Prototyping and Customer Engineering (PACE) team, specializing in NLP and generative AI. With a background in software development and nine AWS certifications, Jin brings a wealth of experience to assist AWS customers in materializing their AI/ML and generative AI visions using the AWS platform. He holds a master’s degree in Computer Science & Software Engineering from the University of Syracuse. Outside of work, Jin enjoys playing video games and immersing himself in the thrilling world of horror movies.

Aravind Kodandaramaiah is a Senior Prototyping full stack solution builder within the AWS Industries Prototyping and Customer Engineering (PACE) team. He focuses on helping AWS customers turn innovative ideas into solutions with measurable and delightful outcomes. He’s passionate about a range of topics, including cloud security, DevOps, and AI/ML, and can usually be found tinkering with these technologies.

Arjun Shakdher is a Developer on the AWS Industries Prototyping (PACE) team who’s passionate about blending technology into the fabric of life. Holding a master’s degree from Purdue University, Arjun’s current role revolves around architecting and building cutting-edge prototypes that span an array of domains, presently prominently featuring the realms of AI/ML and IoT. When not immersed in code and digital landscapes, you’ll find Arjun indulging in the world of coffee, exploring the intricate mechanics of horology, or reveling in the artistry of automobiles.
