Governing the ML lifecycle at scale, Part 1: A framework for architecting ML workloads using Amazon SageMaker

Customers of every size and industry are innovating on AWS by infusing machine learning (ML) into their products and services. Recent developments in generative AI models have further sped up the need for ML adoption across industries. However, implementing security, data privacy, and governance controls is still a key challenge faced by customers when implementing ML workloads at scale. Addressing these challenges builds the framework and foundations for mitigating risk and for responsible use of ML-driven products. Although generative AI may need additional controls in place, such as removing toxicity and preventing jailbreaking and hallucinations, it shares the same foundational components for security and governance as traditional ML.

We hear from customers that they require specialized knowledge and investment of up to 12 months for building out their customized Amazon SageMaker ML platform implementation to ensure scalable, reliable, secure, and governed ML environments for their lines of business (LOBs) or ML teams. If you lack a framework for governing the ML lifecycle at scale, you may run into challenges such as team-level resource isolation, scaling experimentation resources, operationalizing ML workflows, scaling model governance, and managing security and compliance of ML workloads.

Governing the ML lifecycle at scale is a framework to help you build an ML platform with embedded security and governance controls based on industry best practices and enterprise standards. This framework addresses challenges by providing prescriptive guidance through a modular framework approach extending an AWS Control Tower multi-account AWS environment and the approach discussed in the post Setting up secure, well-governed machine learning environments on AWS.

It provides prescriptive guidance for the following ML platform functions:

- Multi-account, security, and networking foundations – This function uses AWS Control Tower and well-architected principles for setting up and operating a multi-account environment, security, and networking services.

- Data and governance foundations – This function uses a data mesh architecture for setting up and operating the data lake, central feature store, and data governance foundations to enable fine-grained data access.

- ML platform shared and governance services – This function enables setting up and operating common services such as CI/CD, AWS Service Catalog for provisioning environments, and a central model registry for model promotion and lineage.

- ML team environments – This function enables setting up and operating environments for ML teams for model development, testing, and deploying their use cases for embedding security and governance controls.

- ML platform observability – This function helps with troubleshooting and identifying the root cause for problems in ML models through centralization of logs and providing tools for log analysis visualization. It also provides guidance for generating cost and usage reports for ML use cases.

Although this framework can provide benefits to all customers, it’s most beneficial for large, mature, regulated, or global enterprise customers that want to scale their ML strategies in a controlled, compliant, and coordinated way across the organization. It helps enable ML adoption while mitigating risks. This framework is useful for the following customers:

- Large enterprise customers that have many LOBs or departments interested in using ML. This framework allows different teams to build and deploy ML models independently while providing central governance.

- Enterprise customers with a moderate to high maturity in ML. They’ve already deployed some initial ML models and are looking to scale their ML efforts. This framework can help accelerate ML adoption across the organization. These companies also recognize the need for governance to manage things like access control, data usage, model performance, and unfair bias.

- Companies in regulated industries such as financial services, healthcare, chemistry, and the private sector. These companies need strong governance and auditability for any ML models used in their business processes. Adopting this framework can help facilitate compliance while still allowing for local model development.

- Global organizations that need to balance centralized and local control. This framework’s federated approach allows the central platform engineering team to set some high-level policies and standards, but also gives LOB teams flexibility to adapt based on local needs.

In the first part of this series, we walk through the reference architecture for setting up the ML platform. In a later post, we will provide prescriptive guidance for how to implement the various modules in the reference architecture in your organization.

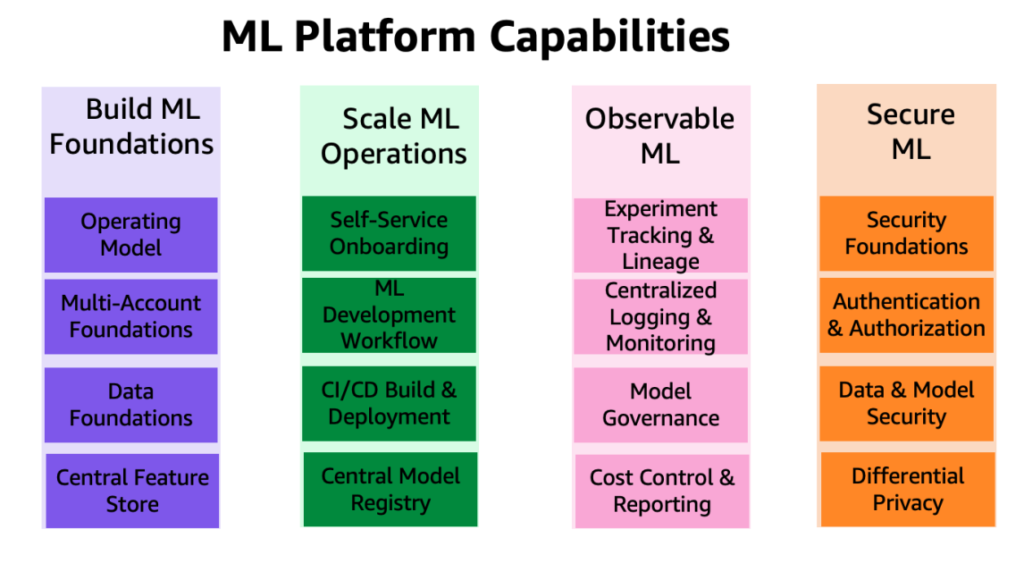

The capabilities of the ML platform are grouped into four categories, as shown in the following figure. These capabilities form the foundation of the reference architecture discussed later in this post:

- Build ML foundations

- Scale ML operations

- Observable ML

- Secure ML

Solution overview

The framework for governing the ML lifecycle at scale enables organizations to embed security and governance controls throughout the ML lifecycle, which in turn helps organizations reduce risk and accelerate infusing ML into their products and services. The framework helps optimize the setup and governance of secure, scalable, and reliable ML environments that can scale to support an increasing number of models and projects. The framework enables the following features:

- Account and infrastructure provisioning with organization policy compliant infrastructure resources

- Self-service deployment of data science environments and end-to-end ML operations (MLOps) templates for ML use cases

- LOB-level or team-level isolation of resources for security and privacy compliance

- Governed access to production-grade data for experimentation and production-ready workflows

- Management and governance for code repositories, code pipelines, deployed models, and data features

- A model registry and feature store (local and central components) for improving governance

- Security and governance controls for the end-to-end model development and deployment process

In this section, we provide an overview of prescriptive guidance to help you build this ML platform on AWS with embedded security and governance controls.

The functional architecture associated with the ML platform is shown in the following diagram. The architecture maps the different capabilities of the ML platform to AWS accounts.

The functional architecture with different capabilities is implemented using a number of AWS services, including AWS Organizations, SageMaker, AWS DevOps services, and a data lake. The reference architecture for the ML platform with various AWS services is shown in the following diagram.

This framework considers multiple personas and services to govern the ML lifecycle at scale. We recommend the following steps to organize your teams and services:

- Using AWS Control Tower and automation tooling, your cloud administrator sets up the multi-account foundations such as Organizations and AWS IAM Identity Center (successor to AWS Single Sign-On) and security and governance services such as AWS Key Management Service (AWS KMS) and Service Catalog. In addition, the administrator sets up a variety of organizational units (OUs) and initial accounts to support your ML and analytics workflows.

- Data lake administrators set up your data lake and data catalog, and set up the central feature store working with the ML platform admin.

- The ML platform admin provisions ML shared services such as AWS CodeCommit, AWS CodePipeline, Amazon Elastic Container Registry (Amazon ECR), a central model registry, SageMaker Model Cards, SageMaker Model Dashboard, and Service Catalog products for ML teams.

- The ML team lead federates via IAM Identity Center, uses Service Catalog products, and provisions resources in the ML team’s development environment.

- Data scientists from ML teams across different business units federate into their team’s development environment to build the model pipeline.

- Data scientists search and pull features from the central feature store catalog, build models through experiments, and select the best model for promotion.

- Data scientists create and share new features into the central feature store catalog for reuse.

- An ML engineer deploys the model pipeline into the ML team test environment using a shared services CI/CD process.

- After stakeholder validation, the ML model is deployed to the team’s production environment.

- Security and governance controls are embedded into every layer of this architecture using services such as AWS Security Hub, Amazon GuardDuty, Amazon Macie, and more.

- Security controls are centrally managed from the security tooling account using Security Hub.

- ML platform governance capabilities such as SageMaker Model Cards and SageMaker Model Dashboard are centrally managed from the governance services account.

- Amazon CloudWatch and AWS CloudTrail logs from each member account are made accessible centrally from an observability account using AWS native services.

Next, we dive deep into the modules of the reference architecture for this framework.

Reference architecture modules

The reference architecture comprises eight modules, each designed to solve a specific set of problems. Collectively, these modules address governance across various dimensions, such as infrastructure, data, model, and cost. Each module offers a distinct set of functions and interoperates with other modules to provide an integrated end-to-end ML platform with embedded security and governance controls. In this section, we present a short summary of each module’s capabilities.

Multi-account foundations

This module helps cloud administrators build an AWS Control Tower landing zone as a foundational framework. This includes building a multi-account structure, authentication and authorization via IAM Identity Center, a network hub-and-spoke design, centralized logging services, and new AWS member accounts with standardized security and governance baselines.

In addition, this module provides best practice guidance on OU and account structures that are appropriate for supporting your ML and analytics workflows. Cloud administrators will understand the purpose of the required accounts and OUs, how to deploy them, and key security and compliance services they should use to centrally govern their ML and analytics workloads.

A framework for vending new accounts is also covered, which uses automation for baselining new accounts when they are provisioned. By having an automated account provisioning process set up, cloud administrators can provide ML and analytics teams the accounts they need to perform their work more quickly, without sacrificing a strong foundation for governance.

Data lake foundations

This module helps data lake admins set up a data lake to ingest data, curate datasets, and use the AWS Lake Formation governance model for managing fine-grained data access across accounts and users using a centralized data catalog, data access policies, and tag-based access controls. You can start small with one account for your data platform foundations for a proof of concept or a few small workloads. For medium-to-large-scale production workload implementation, we recommend adopting a multi-account strategy. In such a setting, LOBs can assume the role of data producers and data consumers using different AWS accounts, and the data lake governance is operated from a central shared AWS account. The data producer collects, processes, and stores data from their data domain, in addition to monitoring and ensuring the quality of their data assets. Data consumers consume the data from the data producer after the centralized catalog shares it using Lake Formation. The centralized catalog stores and manages the shared data catalog for the data producer accounts.
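To make the idea of tag-based access control concrete, the following is a minimal sketch in plain Python. It does not call Lake Formation and does not reproduce its API or its full matching semantics; the `Grant` class, the `can_read` function, and all principal, tag, and table names are hypothetical and exist only for illustration. It shows a central governance account holding grants expressed as tags, and a consumer being allowed to read only the datasets whose tags satisfy a grant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """Hypothetical grant: the principal may read any resource carrying all of these tags."""
    principal: str
    required_tags: frozenset  # frozenset of (key, value) pairs


def can_read(principal, resource_tags, grants):
    """Return True if at least one grant for the principal is satisfied by the resource tags."""
    tags = set(resource_tags.items())
    return any(
        g.principal == principal and g.required_tags <= tags
        for g in grants
    )


# Grants held centrally (illustrative values, not from the post).
grants = [
    Grant("lob-a-consumer", frozenset({("domain", "sales"), ("sensitivity", "public")})),
]

# Tags attached to tables shared by data producer accounts (illustrative values).
sales_table = {"domain": "sales", "sensitivity": "public"}
hr_table = {"domain": "hr", "sensitivity": "confidential"}

print(can_read("lob-a-consumer", sales_table, grants))
print(can_read("lob-a-consumer", hr_table, grants))
```

The design point is that access decisions depend on tags rather than on individual table names, so a producer can publish a new dataset with the right tags without the central team writing a new policy for it.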

ML platform services

This module helps the ML platform engineering team set up shared services that are used by the data science teams on their team accounts. The services include a Service Catalog portfolio with products for SageMaker domain deployment, SageMaker domain user profile deployment, and data science model templates for model building and deploying. This module has functionalities for a centralized model registry, model cards, model dashboard, and the CI/CD pipelines used to orchestrate and automate model development and deployment workflows.

In addition, this module details how to implement the controls and governance required to enable persona-based self-service capabilities, allowing data science teams to independently deploy their required cloud infrastructure and ML templates.

ML use case development

This module helps LOBs and data scientists access their team’s SageMaker domain in a development environment and instantiate a model building template to develop their models. In this module, data scientists work on a dev account instance of the template to interact with the data available on the centralized data lake, reuse and share features from a central feature store, create and run ML experiments, build and test their ML workflows, and register their models to a dev account model registry in their development environments.

Capabilities such as experiment tracking, model explainability reports, data and model bias monitoring, and model registry are also implemented in the templates, allowing for quick adaptation of the solutions to the data scientists’ developed models.

ML operations

This module helps LOBs and ML engineers work on their dev instances of the model deployment template. After the candidate model is registered and approved, they set up CI/CD pipelines and run ML workflows in the team’s test environment, which registers the model into the central model registry running in a platform shared services account. When a model is approved in the central model registry, this triggers a CI/CD pipeline to deploy the model into the team’s production environment.
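The promotion path described above can be read as a small state machine. The following sketch models it in plain Python under that assumption; the stage names, event names, and the `advance` function are hypothetical labels chosen for this illustration, and no SageMaker or CodePipeline API is involved.

```python
from enum import Enum


class Stage(Enum):
    DEV_REGISTERED = "registered in the dev model registry"
    DEV_APPROVED = "approved in the dev model registry"
    CENTRAL_REGISTERED = "registered in the central model registry"
    CENTRAL_APPROVED = "approved in the central model registry"
    PROD_DEPLOYED = "deployed to the production environment"


# Allowed transitions, keyed by (current stage, event). Event names are illustrative.
TRANSITIONS = {
    (Stage.DEV_REGISTERED, "approve"): Stage.DEV_APPROVED,
    (Stage.DEV_APPROVED, "test_workflow_succeeded"): Stage.CENTRAL_REGISTERED,
    (Stage.CENTRAL_REGISTERED, "approve"): Stage.CENTRAL_APPROVED,
    (Stage.CENTRAL_APPROVED, "deploy_pipeline_succeeded"): Stage.PROD_DEPLOYED,
}


def advance(stage, event):
    """Return the next stage, or raise ValueError if the event is not allowed here."""
    try:
        return TRANSITIONS[(stage, event)]
    except KeyError:
        raise ValueError(f"event {event!r} is not allowed in stage {stage.name}") from None


# Walk a candidate model through the full path.
stage = Stage.DEV_REGISTERED
for event in ["approve", "test_workflow_succeeded", "approve", "deploy_pipeline_succeeded"]:
    stage = advance(stage, event)
print(stage.name)
```

Encoding the allowed transitions in one table makes the governance rule explicit: a model cannot reach production without passing both the dev approval and the central registry approval.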

Centralized feature store

After the first models are deployed to production and multiple use cases start to share features created from the same data, a feature store becomes essential to ensure collaboration across use cases and reduce duplicate work. This module helps the ML platform engineering team set up a centralized feature store to provide storage and governance for ML features created by the ML use cases, enabling feature reuse across projects.

Logging and observability

This module helps LOBs and ML practitioners gain visibility into the state of ML workloads across ML environments through centralization of log activity such as CloudTrail, CloudWatch, VPC flow logs, and ML workload logs. Teams can filter, query, and visualize logs for analysis, which can help enhance security posture as well.

Cost and reporting

This module helps various stakeholders (cloud admin, platform admin, cloud business office) generate reports and dashboards to break down costs at ML user, ML team, and ML product levels, and track usage such as number of users, instance types, and endpoints.
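One way to picture a cost breakdown at the user, team, and product levels is to group cost records by a tag. The sketch below assumes that cost records carry tags; the tag keys (`ml-team`, `ml-user`, `ml-product`), the record layout, and the amounts are all made up for illustration and are not taken from any AWS report format.

```python
from collections import defaultdict


def cost_by(records, tag_key):
    """Sum cost per value of the given tag; records without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for record in records:
        totals[record["tags"].get(tag_key, "untagged")] += record["cost_usd"]
    return dict(totals)


# Hypothetical sample records, for illustration only.
records = [
    {"cost_usd": 12.5, "tags": {"ml-team": "fraud", "ml-user": "alice", "ml-product": "scoring"}},
    {"cost_usd": 7.5, "tags": {"ml-team": "fraud", "ml-user": "bob", "ml-product": "scoring"}},
    {"cost_usd": 30.0, "tags": {"ml-team": "churn", "ml-user": "carol"}},
]

print(cost_by(records, "ml-team"))     # {'fraud': 20.0, 'churn': 30.0}
print(cost_by(records, "ml-product"))  # {'scoring': 20.0, 'untagged': 30.0}
```

The `untagged` bucket is a deliberate choice in this sketch: it surfaces resources that were provisioned without the expected tags, which is usually the first thing a cost report needs to expose.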

Customers have asked us to provide guidance on how many accounts to create and how to structure those accounts. In the next section, we provide guidance on that account structure as a reference that you can modify to suit your needs according to your enterprise governance requirements.

In this section, we discuss our recommendation for organizing your account structure. We share a baseline reference account structure; however, we recommend ML and data admins work closely with their cloud admin to customize this account structure based on their organization controls.

We recommend organizing accounts by OU for security, infrastructure, workloads, and deployments. Furthermore, within each OU, organize by non-production and production OU because the accounts and workloads deployed under them have different controls. Next, we briefly discuss these OUs.

Security OU

The accounts in this OU are managed by the organization’s cloud admin or security team for monitoring, identifying, protecting, detecting, and responding to security events.

Infrastructure OU

The accounts in this OU are managed by the organization’s cloud admin or network team for managing enterprise-level infrastructure shared resources and networks.

We recommend having the following accounts under the infrastructure OU:

- Network – Set up a centralized networking infrastructure such as AWS Transit Gateway

- Shared services – Set up centralized AD services and VPC endpoints

Workloads OU

The accounts in this OU are managed by the organization’s platform team admins. If you need different controls implemented for each platform team, you can nest other levels of OU for that purpose, such as an ML workloads OU, data workloads OU, and so on.

We recommend the following accounts under the workloads OU:

- Team-level ML dev, test, and prod accounts – Set this up based on your workload isolation requirements

- Data lake accounts – Partition accounts by your data domain

- Central data governance account – Centralize your data access policies

- Central feature store account – Centralize features for sharing across teams

Deployments OU

The accounts in this OU are managed by the organization’s platform team admins for deploying workloads and observability.

We recommend the following accounts under the deployments OU because the ML platform team can set up different sets of controls at this OU level to manage and govern deployments:

- ML shared services accounts for test and prod – Hosts platform shared services CI/CD and model registry

- ML observability accounts for test and prod – Hosts CloudWatch logs, CloudTrail logs, and other logs as needed
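The recommended layout can be written down as data, which is often the first step before automating account vending. The following sketch captures the OUs and accounts listed above as a plain Python dictionary; the account identifiers are hypothetical short names invented for this example, and the Security OU is left empty because the text does not enumerate its accounts.

```python
# OU -> accounts, following the recommendations above. Account names are illustrative.
ORG_STRUCTURE = {
    "Security": [],  # accounts not enumerated in the text
    "Infrastructure": ["network", "shared-services"],
    "Workloads": [
        "ml-team-dev",
        "ml-team-test",
        "ml-team-prod",
        "data-lake",
        "central-data-governance",
        "central-feature-store",
    ],
    "Deployments": [
        "ml-shared-services-test",
        "ml-shared-services-prod",
        "ml-observability-test",
        "ml-observability-prod",
    ],
}


def ou_of(account):
    """Return the OU that contains the account, or None if it is not in the structure."""
    for ou, accounts in ORG_STRUCTURE.items():
        if account in accounts:
            return ou
    return None


print(ou_of("central-feature-store"))
print(ou_of("ml-observability-prod"))
```

In practice the per-team dev, test, and prod accounts would be repeated for each ML team, and each OU would typically be split further into non-production and production OUs as recommended earlier.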

Next, we briefly discuss organization controls that need to be considered for embedding into member accounts for monitoring the infrastructure resources.

AWS environment controls

A control is a high-level rule that provides ongoing governance for your overall AWS environment. It’s expressed in plain language. In this framework, we use AWS Control Tower to implement the following controls that help you govern your resources and monitor compliance across groups of AWS accounts:

- Preventive controls – A preventive control ensures that your accounts maintain compliance because it disallows actions that lead to policy violations. It is implemented using a service control policy (SCP). For example, you can set a preventive control that ensures that CloudTrail is not deleted or stopped in AWS accounts or Regions.

- Detective controls – A detective control detects noncompliance of resources within your accounts, such as policy violations, and provides alerts through the dashboard. It is implemented using AWS Config rules. For example, you can create a detective control to detect whether public read access is enabled to the Amazon Simple Storage Service (Amazon S3) buckets in the log archive shared account.

- Proactive controls – A proactive control scans your resources before they are provisioned and makes sure that the resources are compliant with that control. It is implemented using AWS CloudFormation hooks. Resources that aren’t compliant will not be provisioned. For example, you can set a proactive control that checks that direct internet access is not allowed for a SageMaker notebook instance.
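Two of the examples in the list above can be sketched in a few lines of Python. The first part builds an SCP document that denies stopping or deleting CloudTrail trails; `cloudtrail:StopLogging` and `cloudtrail:DeleteTrail` are real IAM actions, but the policy as a whole is a minimal illustration and not the policy that AWS Control Tower itself deploys. The second part is a plain-Python stand-in for a proactive check: it inspects a CloudFormation template (as a dictionary) before provisioning and reports notebook instances that allow direct internet access. Real proactive controls run as CloudFormation hooks; the function name, the sample template, and the assumption that a missing `DirectInternetAccess` property means access is enabled are all part of this sketch.

```python
import json

# Preventive control sketch: an SCP that denies tampering with CloudTrail.
DENY_CLOUDTRAIL_TAMPERING = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyCloudTrailStopOrDelete",
            "Effect": "Deny",
            "Action": ["cloudtrail:StopLogging", "cloudtrail:DeleteTrail"],
            "Resource": "*",
        }
    ],
}


def noncompliant_notebooks(template):
    """Return logical IDs of SageMaker notebook instances that allow direct internet access."""
    flagged = []
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::SageMaker::NotebookInstance":
            continue
        properties = resource.get("Properties", {})
        # Assumption: when the property is omitted, direct internet access is enabled.
        if properties.get("DirectInternetAccess", "Enabled") != "Disabled":
            flagged.append(logical_id)
    return flagged


# Hypothetical template used only to exercise the check.
sample_template = {
    "Resources": {
        "OpenNotebook": {
            "Type": "AWS::SageMaker::NotebookInstance",
            "Properties": {"InstanceType": "ml.t3.medium"},
        },
        "ClosedNotebook": {
            "Type": "AWS::SageMaker::NotebookInstance",
            "Properties": {"InstanceType": "ml.t3.medium", "DirectInternetAccess": "Disabled"},
        },
        "LogBucket": {"Type": "AWS::S3::Bucket"},
    }
}

print(json.dumps(DENY_CLOUDTRAIL_TAMPERING, indent=2))
print(noncompliant_notebooks(sample_template))  # ['OpenNotebook']
```

The contrast between the two parts mirrors the list: the SCP blocks an action at the API level regardless of who attempts it, while the proactive check rejects a resource definition before anything is created.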

Interactions between ML platform services, ML use cases, and ML operations

Different personas, such as the head of data science (lead data scientist), data scientist, and ML engineer, operate modules 2–6 as shown in the following diagram for different stages of ML platform services, ML use case development, and ML operations along with data lake foundations and the central feature store.

The following table summarizes the ops flow activity and setup flow steps for different personas. Once a persona initiates an ML activity as part of the ops flow, the services run as described in the setup flow steps.

| Persona | Ops Flow Activity – Number | Ops Flow Activity – Description | Setup Flow Step – Number | Setup Flow Step – Description |
| --- | --- | --- | --- | --- |
| Lead Data Scientist or ML Team Lead | 1 | Uses Service Catalog in the ML platform services account and deploys the following: | 1-A | |
| | | | 1-B | |
| Data Scientist | 2 | Conducts and tracks ML experiments in SageMaker notebooks | 2-A | |
| | 3 | Automates successful ML experiments with SageMaker projects and pipelines | 3-A | |
| | | | 3-B | After the SageMaker pipelines run, saves the model in the local (dev) model registry |
| Lead Data Scientist or ML Team Lead | 4 | Approves the model in the local (dev) model registry | 4-A | Model metadata and model package are written from the local (dev) model registry to the central model registry |
| | 5 | Approves the model in the central model registry | 5-A | Initiates the deployment CI/CD process to create SageMaker endpoints in the test environment |
| | | | 5-B | Writes the model information and metadata to the ML governance module (model card, model dashboard) in the ML platform services account from the local (dev) account |
| ML Engineer | 6 | Tests and monitors the SageMaker endpoint in the test environment after CI/CD | | |
| | 7 | Approves deployment for SageMaker endpoints in the prod environment | 7-A | Initiates the deployment CI/CD process to create SageMaker endpoints in the prod environment |
| | 8 | Tests and monitors the SageMaker endpoint in the test environment after CI/CD | | |

Personas and interactions with different modules of the ML platform

Each module caters to particular target personas within specific divisions that use the module most often, granting them primary access. Secondary access is then permitted to other divisions that require occasional use of the modules. The modules are tailored to the needs of particular job roles or personas to optimize functionality.

We discuss the following teams:

- Central cloud engineering – This team operates at the enterprise cloud level across all workloads for setting up common cloud infrastructure services, such as setting up enterprise-level networking, identity, permissions, and account management

- Data platform engineering – This team manages enterprise data lakes, data collection, data curation, and data governance

- ML platform engineering – This team operates at the ML platform level across LOBs to provide shared ML infrastructure services such as ML infrastructure provisioning, experiment tracking, model governance, deployment, and observability

The following table details which divisions have primary and secondary access for each module according to the module’s target personas.

| Module Number | Modules | Primary Access | Secondary Access | Target Personas | Number of Accounts |
| --- | --- | --- | --- | --- | --- |
| 1 | Multi-account foundations | Central cloud engineering | Individual LOBs | | Few |
| 2 | Data lake foundations | Central cloud or data platform engineering | Individual LOBs | | Multiple |
| 3 | ML platform services | Central cloud or ML platform engineering | Individual LOBs | | One |
| 4 | ML use case development | Individual LOBs | Central cloud or ML platform engineering | | Multiple |
| 5 | ML operations | Central cloud or ML engineering | Individual LOBs | | Multiple |
| 6 | Centralized feature store | Central cloud or data engineering | Individual LOBs | | One |
| 7 | Logging and observability | Central cloud engineering | Individual LOBs | | One |
| 8 | Cost and reporting | Individual LOBs | Central platform engineering | | One |

Conclusion

In this post, we introduced a framework for governing the ML lifecycle at scale that helps you implement well-architected ML workloads embedding security and governance controls. We discussed how this framework takes a holistic approach for building an ML platform considering data governance, model governance, and enterprise-level controls. We encourage you to experiment with the framework and concepts introduced in this post and share your feedback.

About the authors

Ram Vittal is a Principal ML Solutions Architect at AWS. He has over 3 decades of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure, scalable, reliable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he rides his motorcycle and walks with his three-year-old sheep-a-doodle!

Sovik Kumar Nath is an AI/ML solutions architect with AWS. He has extensive experience designing end-to-end machine learning and business analytics solutions in finance, operations, marketing, healthcare, supply chain management, and IoT. Sovik has published articles and holds a patent in ML model monitoring. He has double master’s degrees from the University of South Florida and the University of Fribourg, Switzerland, and a bachelor’s degree from the Indian Institute of Technology, Kharagpur. Outside of work, Sovik enjoys traveling, taking ferry rides, and watching movies.

Maira Ladeira Tanke is a Senior Data Specialist at AWS. As a technical lead, she helps customers accelerate their achievement of business value through emerging technology and innovative solutions. Maira has been with AWS since January 2020. Prior to that, she worked as a data scientist in multiple industries focusing on achieving business value from data. In her free time, Maira enjoys traveling and spending time with her family someplace warm.

Ryan Lempka is a Senior Solutions Architect at Amazon Web Services, where he helps his customers work backwards from business objectives to develop solutions on AWS. He has deep experience in business strategy, IT systems management, and data science. Ryan is dedicated to being a lifelong learner, and enjoys challenging himself every day to learn something new.

Sriharsh Adari is a Senior Solutions Architect at Amazon Web Services (AWS), where he helps customers work backwards from business outcomes to develop innovative solutions on AWS. Over the years, he has helped multiple customers on data platform transformations across industry verticals. His core areas of expertise include Technology Strategy, Data Analytics, and Data Science. In his spare time, he enjoys playing sports, binge-watching TV shows, and playing Tabla.
