On-device real-time few-shot face stylization – Google Research Blog

In recent years, we have witnessed rising interest among consumers and researchers in integrated augmented reality (AR) experiences that use real-time face feature generation and editing in mobile applications, including short videos, virtual reality, and gaming. As a result, there is a growing demand for lightweight yet high-quality face generation and editing models, which are often based on generative adversarial network (GAN) techniques. However, the majority of GAN models suffer from high computational complexity and the need for a large training dataset. In addition, it is important to use GAN models responsibly.

In this post, we introduce MediaPipe FaceStylizer, an efficient design for few-shot face stylization that addresses the aforementioned model complexity and data efficiency challenges while being guided by Google’s responsible AI Principles. The model consists of a face generator and a face encoder used for GAN inversion, which maps the image into a latent code for the generator. We introduce a mobile-friendly synthesis network for the face generator with an auxiliary head that converts features to RGB at each level of the generator, so that it generates high-quality images from coarse to fine granularities. We also carefully designed the loss functions for these auxiliary heads and combined them with the common GAN loss functions to distill the student generator from the teacher StyleGAN model, resulting in a lightweight model that maintains high generation quality. The proposed solution is available in open source through MediaPipe. Users can fine-tune the generator to learn a style from one or a few images using MediaPipe Model Maker, and deploy the customized model to on-device face stylization applications using MediaPipe FaceStylizer.

Few-shot on-device face stylization

An end-to-end pipeline

Our goal is to build a pipeline that helps users adapt the MediaPipe FaceStylizer to different styles by fine-tuning the model with a few examples. To enable such a face stylization pipeline, we built it from a GAN inversion encoder and an efficient face generator model (see below). The encoder and generator pipeline can then be adapted to different styles via a few-shot learning process. The user first sends one or a few similar samples of the style images to MediaPipe Model Maker to fine-tune the model. The fine-tuning process freezes the encoder module and only fine-tunes the generator. The training process samples multiple latent codes close to the encoding output of the input style images and uses them as the input to the generator. The generator is then trained to reconstruct an image of a person’s face in the style of the input style image by optimizing a joint adversarial loss function that also accounts for style and content. With such a fine-tuning process, the MediaPipe FaceStylizer can adapt to the customized style, which approximates the user’s input. It can then be applied to stylize test images of real human faces.
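The latent sampling step of the fine-tuning process can be illustrated with a minimal sketch. The post does not say how codes "close to" the encoder output are drawn, so the Gaussian perturbation, the `sigma` value, the function name `sample_latents_near`, and the toy code length are all assumptions made for illustration.

```python
import random
from typing import List, Optional


def sample_latents_near(
    style_code: List[float],
    num_samples: int,
    sigma: float = 0.1,
    seed: Optional[int] = None,
) -> List[List[float]]:
    """Draw latent codes around one encoded style image.

    Assumption: "close to the encoding output" is modeled here as
    independent Gaussian noise added to every latent dimension.
    """
    rng = random.Random(seed)
    return [
        [w + rng.gauss(0.0, sigma) for w in style_code]
        for _ in range(num_samples)
    ]


# Toy usage: an 8-dimensional code stands in for the real encoder output.
style_code = [0.0] * 8
batch = sample_latents_near(style_code, num_samples=4, sigma=0.1, seed=0)
```

Each sampled code would be passed to the generator being fine-tuned, while the encoder that produced `style_code` stays frozen.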

Generator: BlazeStyleGAN

The StyleGAN model family has been widely adopted for face generation and various face editing tasks. To support efficient on-device face generation, we based the design of our generator on StyleGAN. This generator, which we call BlazeStyleGAN, is similar to StyleGAN in that it also contains a mapping network and a synthesis network. However, since the synthesis network of StyleGAN is the major contributor to the model’s high computation complexity, we designed and employed a more efficient synthesis network. The improved efficiency and generation quality are achieved by:

- Reducing the latent feature dimension in the synthesis network to a quarter of the resolution of the counterpart layers in the teacher StyleGAN,

- Designing multiple auxiliary heads that transform the downscaled features to the image domain, forming a coarse-to-fine image pyramid used to evaluate the perceptual quality of the reconstruction, and

- Skipping all but the final auxiliary head at inference time.
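The role of the auxiliary heads can be sketched with a toy generator loop: during training every stage emits an image, while at inference only the final head runs. This is a structural illustration only; `Stage`, `run_generator`, and the list-based "feature maps" are hypothetical stand-ins, not the BlazeStyleGAN implementation.

```python
from dataclasses import dataclass
from typing import Callable, List

Feature = List[float]  # toy stand-in for a feature map


@dataclass
class Stage:
    synthesize: Callable[[Feature], Feature]  # feature -> higher-resolution feature
    to_rgb: Callable[[Feature], Feature]      # auxiliary head: feature -> image


def run_generator(stages: List[Stage], latent: Feature, training: bool) -> List[Feature]:
    """Return the coarse-to-fine pyramid when training, else only the final image."""
    features = latent
    images = []
    for index, stage in enumerate(stages):
        features = stage.synthesize(features)
        is_final = index == len(stages) - 1
        # Intermediate heads are only needed to compute training losses.
        if training or is_final:
            images.append(stage.to_rgb(features))
    return images


def make_toy_stage() -> Stage:
    # "Upsample" by duplicating every value; the head clamps values to [0, 1].
    return Stage(
        synthesize=lambda f: [v for v in f for _ in range(2)],
        to_rgb=lambda f: [min(1.0, max(0.0, v)) for v in f],
    )


toy_stages = [make_toy_stage() for _ in range(3)]
train_images = run_generator(toy_stages, [0.5], training=True)   # three images
infer_images = run_generator(toy_stages, [0.5], training=False)  # final image only
```

Skipping the intermediate heads costs nothing in output quality, because the final image never depends on them.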

With the newly designed architecture, we train the BlazeStyleGAN model by distilling it from a teacher StyleGAN model. We use a multi-scale perceptual loss and an adversarial loss in the distillation to transfer the high-fidelity generation capability from the teacher model to the student BlazeStyleGAN model, and also to mitigate the artifacts from the teacher model.

More details of the model architecture and training scheme can be found in our paper.

In the above figure, we show some sample results of our BlazeStyleGAN. Compared with the face images generated by the teacher StyleGAN model (top row), the images generated by the student BlazeStyleGAN (bottom row) maintain high visual quality and further reduce artifacts produced by the teacher, thanks to the loss function design in our distillation.
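A multi-scale distillation loss over an image pyramid might look roughly like the sketch below. It makes several simplifying assumptions that are not from the post: a mean absolute difference stands in for the perceptual distance (which would normally compare features of a pretrained network), the teacher targets at coarser scales are obtained by 2×2 average pooling, each pyramid level halves the resolution, and the adversarial term is omitted.

```python
from typing import List

Image = List[List[float]]  # square grayscale image as rows of pixel values


def avg_pool2(img: Image) -> Image:
    """Halve the resolution by averaging each 2x2 block."""
    half = len(img) // 2
    return [
        [
            (img[2 * r][2 * c] + img[2 * r][2 * c + 1]
             + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(half)
        ]
        for r in range(half)
    ]


def mean_abs_diff(a: Image, b: Image) -> float:
    """Stand-in for a perceptual distance between two same-sized images."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def multi_scale_loss(student_pyramid: List[Image], teacher_image: Image) -> float:
    """Sum per-level distances; student_pyramid is ordered coarse to fine."""
    loss = 0.0
    target = teacher_image
    for level in reversed(student_pyramid):  # start at the finest level
        if len(level) != len(target):
            raise ValueError("pyramid level does not match target resolution")
        loss += mean_abs_diff(level, target)
        if len(target) >= 2:
            target = avg_pool2(target)  # teacher target for the next coarser level
    return loss


teacher = [[1.0] * 4 for _ in range(4)]
perfect = [[[1.0] * 2 for _ in range(2)], [[1.0] * 4 for _ in range(4)]]
blurry = [[[1.0] * 2 for _ in range(2)], [[0.0] * 4 for _ in range(4)]]
```

Here `multi_scale_loss(perfect, teacher)` is zero, while `blurry` is penalized only at the finest level, which is the level where it differs from the teacher.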

An encoder for efficient GAN inversion

To support image-to-image stylization, we also introduced an efficient GAN inversion as the encoder to map input images to the latent space of the generator. The encoder is defined by a MobileNet V2 backbone and trained with natural face images. The loss is defined as a combination of an image perceptual quality loss, which measures the content difference, style similarity and embedding distance, and the L1 loss between the input images and the reconstructed images.

On-device performance

We documented model complexity in terms of parameter count and FLOPs in the following table. Compared to the teacher StyleGAN (33.2M parameters), BlazeStyleGAN (generator) significantly reduces the model complexity, with only 2.01M parameters and 1.28G FLOPs for an output resolution of 256×256. Compared to StyleGAN-1024 (generating images of size 1024×1024), BlazeStyleGAN-1024 reduces both model size and computation complexity by roughly 94% (33.17M to 2.07M parameters, 74.3G to 4.70G FLOPs) with no notable quality difference, and can even suppress the artifacts from the teacher StyleGAN model.

| Model | Image Size | #Params (M) | FLOPs (G) |
|---|---|---|---|
| StyleGAN | 1024 | 33.17 | 74.3 |
| BlazeStyleGAN | 1024 | 2.07 | 4.70 |
| BlazeStyleGAN | 512 | 2.05 | 1.57 |
| BlazeStyleGAN | 256 | 2.01 | 1.28 |
| Encoder | 256 | 1.44 | 0.60 |

Model complexity measured by parameter numbers and FLOPs.
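The savings at 1024×1024 can be checked directly from the table values. The short calculation below uses only the numbers listed above; the helper name `reduction_percent` is ours.

```python
# (parameters in millions, FLOPs in billions), copied from the table above
models = {
    "StyleGAN-1024": (33.17, 74.3),
    "BlazeStyleGAN-1024": (2.07, 4.70),
}


def reduction_percent(teacher: float, student: float) -> float:
    """Percentage by which the student is smaller than the teacher."""
    return 100.0 * (1.0 - student / teacher)


teacher_params, teacher_flops = models["StyleGAN-1024"]
student_params, student_flops = models["BlazeStyleGAN-1024"]

param_reduction = reduction_percent(teacher_params, student_params)
flop_reduction = reduction_percent(teacher_flops, student_flops)

print(f"parameters: {param_reduction:.1f}% smaller")  # parameters: 93.8% smaller
print(f"FLOPs:      {flop_reduction:.1f}% smaller")   # FLOPs:      93.7% smaller
```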

We benchmarked the inference time of the MediaPipe FaceStylizer on various high-end mobile devices and report the results in the table below. Both BlazeStyleGAN-256 and BlazeStyleGAN-512 achieved real-time performance on all GPU devices. BlazeStyleGAN can run in less than 10 ms on a high-end phone’s GPU. BlazeStyleGAN-256 can also achieve real-time performance on the iOS devices’ CPU.

| Device | BlazeStyleGAN-256 (ms) | Encoder-256 (ms) |
|---|---|---|
| iPhone 11 | 12.14 | 11.48 |
| iPhone 12 | 11.99 | 12.25 |
| iPhone 13 Pro | 7.22 | 5.41 |
| Pixel 6 | 12.24 | 11.23 |
| Samsung Galaxy S10 | 17.01 | 12.70 |
| Samsung Galaxy S20 | 8.95 | 8.20 |

Latency benchmark of the BlazeStyleGAN, face encoder, and the end-to-end pipeline on various mobile devices.
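To relate these latencies to a frame rate, the sketch below adds the generator and encoder times per device and compares the sum with a frame budget. Two assumptions are ours, not the post's: "real time" is taken to mean 30 frames per second, and the end-to-end time is approximated as generator plus encoder, ignoring pre- and post-processing.

```python
# (BlazeStyleGAN-256 ms, Encoder-256 ms), copied from the table above
latency_ms = {
    "iPhone 11": (12.14, 11.48),
    "iPhone 12": (11.99, 12.25),
    "iPhone 13 Pro": (7.22, 5.41),
    "Pixel 6": (12.24, 11.23),
    "Samsung Galaxy S10": (17.01, 12.70),
    "Samsung Galaxy S20": (8.95, 8.20),
}

FRAME_BUDGET_MS = 1000.0 / 30.0  # assumed real-time target: 30 fps


def summarize(latencies):
    """Return total latency and the implied frame rate for every device."""
    summary = {}
    for device, (generator_ms, encoder_ms) in latencies.items():
        total_ms = generator_ms + encoder_ms
        summary[device] = {
            "total_ms": total_ms,
            "fps": 1000.0 / total_ms,
            "within_budget": total_ms <= FRAME_BUDGET_MS,
        }
    return summary


summary = summarize(latency_ms)
for device, stats in summary.items():
    print(f"{device}: {stats['total_ms']:.2f} ms, {stats['fps']:.0f} fps")
```

Under these assumptions every listed device stays within the 33.3 ms budget, with the Samsung Galaxy S10 the closest to the limit at about 29.7 ms.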

Fairness evaluation

The model has been trained with a highly diverse dataset of human faces and is expected to be fair to different human faces. The fairness evaluation shows that the model performs well and in a balanced way across gender, skin tone, and age.

Face stylization visualization

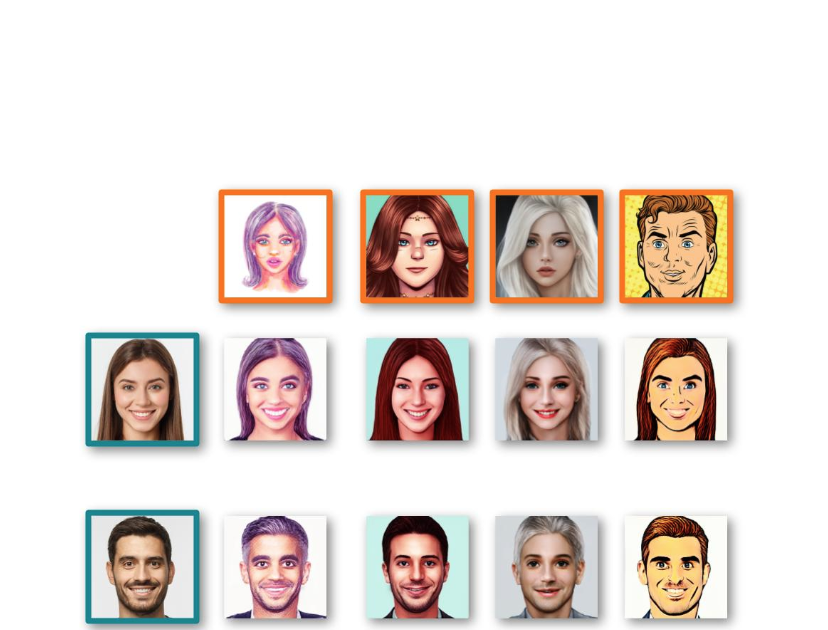

Some face stylization results are shown in the figure. The images in the top row (in orange boxes) are the style images used to fine-tune the model. The images in the left column (in green boxes) are the natural face images used for testing. The 2×4 matrix of images shows the output of the MediaPipe FaceStylizer, which blends the natural faces in the left-most column with the corresponding face styles in the top row. The results demonstrate that our solution can achieve high-quality face stylization for several popular styles.

Sample results of our MediaPipe FaceStylizer.

MediaPipe Solutions

The MediaPipe FaceStylizer is going to be released to public users in MediaPipe Solutions. Users can leverage MediaPipe Model Maker to train a customized face stylization model using their own style images. After training, the exported bundle of TFLite model files can be deployed to applications across platforms (Android, iOS, Web, Python, etc.) using the MediaPipe Tasks FaceStylizer API in just a few lines of code.
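As a rough idea of what deployment looks like in Python, the sketch below wraps the MediaPipe Tasks face stylizer in a small helper. It assumes the `mediapipe` package is installed and that its Python API matches the public MediaPipe Tasks documentation at the time of writing; the helper name `stylize_file` and the file paths in the usage comment are placeholders of ours.

```python
def stylize_file(model_path: str, image_path: str):
    """Stylize one image file.

    Returns the stylized image as a NumPy array, or None when the task
    returns no result (for example, when no face is detected).
    """
    # Imported lazily so this module loads even where mediapipe is missing.
    import mediapipe as mp
    from mediapipe.tasks import python
    from mediapipe.tasks.python import vision

    options = vision.FaceStylizerOptions(
        base_options=python.BaseOptions(model_asset_path=model_path)
    )
    with vision.FaceStylizer.create_from_options(options) as stylizer:
        image = mp.Image.create_from_file(image_path)
        stylized = stylizer.stylize(image)
        # Copy the pixels so they outlive the stylizer and the image object.
        return None if stylized is None else stylized.numpy_view().copy()


# Example call with placeholder paths (requires a model bundle and an image):
# pixels = stylize_file("face_stylizer.task", "portrait.jpg")
```

The model bundle passed as `model_path` would be the one exported by MediaPipe Model Maker after fine-tuning on the user's style images.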

Acknowledgements

This work was made possible through a collaboration spanning several teams across Google. We’d like to acknowledge contributions from Omer Tov, Yang Zhao, Andrey Vakunov, Fei Deng, Ariel Ephrat, Inbar Mosseri, Lu Wang, Chuo-Ling Chang, Tingbo Hou, and Matthias Grundmann.