Beyond the Pen: AI’s Artistry in Handwritten Text Generation from Visual Archetypes

The emerging field of Styled Handwritten Text Generation (HTG) seeks to create handwritten text images that replicate the distinctive calligraphic style of individual writers. This research area has diverse practical applications, from producing high-quality training data for personalized Handwritten Text Recognition (HTR) models to automatically generating handwritten notes for individuals with physical impairments. Moreover, the style representations learned by models designed for this purpose can be useful in other tasks such as writer identification, signature verification, and manipulation of handwriting styles.

For styled handwriting generation, relying solely on style transfer proves limiting. Emulating the calligraphy of a particular writer extends beyond texture considerations such as the color and texture of the background and ink. It also encompasses intricate details like stroke thickness, slant, skew, roundness, individual character shapes, and ligatures. Precise handling of these visual elements is crucial to prevent artifacts that could inadvertently alter the content, such as small extra or missing strokes.

In response, specialized methodologies have been devised for HTG. One approach treats handwriting as a trajectory composed of individual strokes. Alternatively, handwriting can be treated as an image that captures its visual characteristics.

The former set of techniques employs online HTG strategies, where the pen trajectory is predicted point by point. The latter set consists of offline HTG models that directly generate complete text images. The work presented in this article focuses on the offline HTG paradigm because of its advantages. Unlike the online approach, it does not require expensive pen-recording training data. As a result, it can be applied even in scenarios where information about an author’s online handwriting is unavailable, such as historical data. Moreover, the offline paradigm is easier to train, as it avoids issues like vanishing gradients and allows for parallelization.
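To make the difference between the two data representations concrete, here is a minimal Python sketch. The `PenPoint` format and the `rasterize` helper are hypothetical names introduced for illustration and are not taken from the paper. An online sample is an ordered list of pen positions, while an offline sample is only the resulting pixel grid. Going from the trajectory to the image is straightforward; the reverse is not, because stroke order and pen lifts are not stored in the pixels.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PenPoint:
    """One sample of an online recording (hypothetical format)."""
    x: int
    y: int
    pen_down: bool  # True while the pen touches the paper


def rasterize(points: List[PenPoint], width: int, height: int) -> List[List[int]]:
    """Draw an online trajectory onto a binary grid (1 = ink, 0 = background)."""
    image = [[0] * width for _ in range(height)]

    def plot(x: int, y: int) -> None:
        if 0 <= x < width and 0 <= y < height:
            image[y][x] = 1

    prev = None
    for p in points:
        if p.pen_down:
            if prev is not None and prev.pen_down:
                # Connect consecutive pen-down samples with a straight segment.
                steps = max(abs(p.x - prev.x), abs(p.y - prev.y))
                for i in range(steps + 1):
                    t = i / steps if steps else 0.0
                    plot(round(prev.x + (p.x - prev.x) * t),
                         round(prev.y + (p.y - prev.y) * t))
            else:
                # First sample of a new stroke: mark a single pixel.
                plot(p.x, p.y)
        prev = p
    return image


# Online sample: a horizontal stroke, a pen lift, then a short vertical stroke.
trajectory = [
    PenPoint(0, 0, True), PenPoint(3, 0, True),
    PenPoint(0, 2, False),
    PenPoint(0, 2, True), PenPoint(0, 3, True),
]

# Offline sample: only the resulting pixels, with no stroke order or timing.
offline_image = rasterize(trajectory, width=4, height=4)
```

An offline HTG model works with data shaped like `offline_image`, which is why scanned historical documents are usable as style examples even though no pen recording exists for them.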

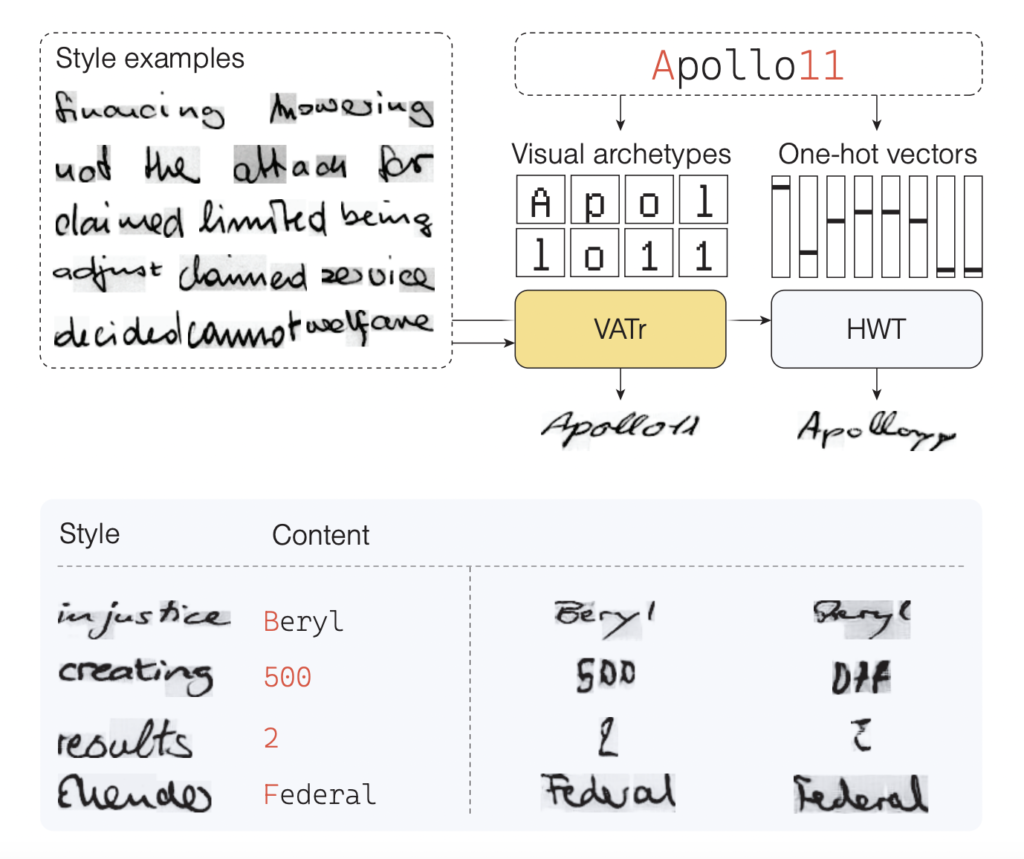

The architecture employed in this study, known as VATr (Visual Archetypes-based Transformer), introduces a novel approach to few-shot styled offline Handwritten Text Generation (HTG). An overview of the proposed technique is presented in the accompanying figure.

This approach stands out by representing characters as continuous variables and using them as content query vectors within a Transformer decoder. The decoder is the component responsible for generating stylized text images from the provided content.
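The idea of content queries attending to style information can be sketched with plain scaled dot-product attention. The following is a simplified illustration, not the VATr implementation: it uses a single head, omits the learned projections and stacked layers of a real Transformer decoder, and all vectors are hypothetical toy values.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], style: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention.

    Each content query attends over the style vectors, which act as both
    keys and values (learned projections are omitted in this sketch).
    """
    dim = len(style[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, s)) / math.sqrt(dim) for s in style]
        weights = softmax(scores)
        outputs.append(
            [sum(w * s[j] for w, s in zip(weights, style)) for j in range(dim)]
        )
    return outputs


# Hypothetical toy values: two content queries (one per character to write)
# and three style vectors (as if produced by a style encoder).
content_queries = [[0.0, 0.0], [4.0, 0.0]]
style_vectors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

attended = cross_attention(content_queries, style_vectors)
```

The output has one vector per query, so the number of generated character representations follows the text to be written, while the content of each vector is a mixture of the writer's style vectors.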

A notable advantage of this approach is that it facilitates the generation of characters that appear less frequently in the training data, such as numbers, capital letters, and punctuation marks. This is achieved by exploiting the proximity in the latent space between rare symbols and more commonly occurring ones.
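A small sketch can show why a visual representation helps with rare symbols. One-hot codes of two different characters are always orthogonal, so nothing learned about one character carries over to another. Bitmaps of visually similar characters overlap. The 5x5 glyphs below are hand-drawn for illustration and are not Unifont glyphs, and raw pixel similarity is used here only as a rough stand-in for proximity in a learned latent space.

```python
import math
from typing import Dict, List

# Hand-drawn 5x5 bitmaps, for illustration only (not actual Unifont glyphs).
GLYPHS: Dict[str, List[str]] = {
    "O": [".###.", "#...#", "#...#", "#...#", ".###."],
    "0": [".###.", "#..##", "#.#.#", "##..#", ".###."],
    "-": [".....", ".....", "#####", ".....", "....."],
}


def glyph_vector(char: str) -> List[float]:
    """Flatten a glyph bitmap into a vector (1.0 = ink, 0.0 = background)."""
    return [1.0 if px == "#" else 0.0 for row in GLYPHS[char] for px in row]


def one_hot(char: str) -> List[float]:
    """Classic one-hot code over the toy alphabet."""
    return [1.0 if c == char else 0.0 for c in sorted(GLYPHS)]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# One-hot codes of distinct characters never overlap.
one_hot_similarity = cosine(one_hot("O"), one_hot("0"))

# Visually similar glyphs are close; dissimilar ones are far apart.
similar_shapes = cosine(glyph_vector("O"), glyph_vector("0"))
different_shapes = cosine(glyph_vector("O"), glyph_vector("-"))
```

In this toy setting, a rarely seen "0" sits close to a frequently seen "O", which is the kind of proximity a model can exploit when generating symbols it saw only a few times during training.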

The architecture uses the GNU Unifont font to render characters as 16×16 binary images, capturing the visual appearance of each character. A dense encoding of these character images is then learned and fed to the Transformer decoder as queries. These queries guide the decoder’s attention to the style vectors, which are extracted by a pre-trained Transformer encoder.
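The rendering and encoding steps can be sketched as follows. Unifont distributes glyphs in a plain-text `.hex` format in which each glyph is a string of hexadecimal digits (32 digits for an 8×16 glyph, 64 for a 16×16 glyph). The sketch decodes such a string into a 16×16 binary grid and projects the flattened pixels to a dense vector. Several things here are assumptions rather than details from the paper: the sample string is meant as an illustrative letter "A", narrow glyphs are padded on the right, the embedding size of 8 is arbitrary, and the random matrix is only a stand-in for weights that would be learned during training.

```python
import random
from typing import List


def hex_glyph_to_bitmap(hex_row: str, size: int = 16) -> List[List[int]]:
    """Decode a Unifont-style hex string into a size x size binary grid."""
    bits_per_row = len(hex_row) * 4 // size      # 8 or 16 pixels per row
    digits_per_row = bits_per_row // 4
    bitmap = []
    for r in range(size):
        chunk = hex_row[r * digits_per_row:(r + 1) * digits_per_row]
        bits = bin(int(chunk, 16))[2:].zfill(bits_per_row)
        # Assumption: narrow glyphs are padded with background on the right.
        bitmap.append([int(b) for b in bits] + [0] * (size - bits_per_row))
    return bitmap


def dense_encode(bitmap: List[List[int]], weights: List[List[float]]) -> List[float]:
    """Linear projection of the flattened glyph (stand-in for a learned layer)."""
    flat = [px for row in bitmap for px in row]
    return [sum(w * x for w, x in zip(row_w, flat)) for row_w in weights]


# Illustrative 8x16 glyph for the letter "A" in Unifont-style hex notation.
glyph_a = hex_glyph_to_bitmap("0000000018242442427E424242420000")

# Hypothetical embedding size; in a trained model these weights are learned.
EMBED_DIM = 8
rng = random.Random(0)
projection = [[rng.gauss(0.0, 0.1) for _ in range(16 * 16)] for _ in range(EMBED_DIM)]

query_vector = dense_encode(glyph_a, projection)

# Print the glyph so the shape is visible in a terminal.
for row in glyph_a:
    print("".join("#" if px else "." for px in row))
```

Each character of the text to be written would get one such vector, and those vectors play the role of the decoder queries described above.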

Furthermore, the approach benefits from a pre-trained backbone, which is first trained on a large synthetic dataset tailored to emphasize calligraphic style attributes. While this technique is often overlooked in the context of HTG, it proves effective at producing robust style representations, particularly for styles that have not been seen before.

The VATr architecture is validated through extensive experimental comparisons against recent state-of-the-art generative methods. Some results and comparisons with state-of-the-art approaches are reported below.

This was the summary of VATr, a novel AI framework for handwritten text generation from visual archetypes. If you are interested and want to learn more about it, please feel free to refer to the links cited below.

Check out the Paper and GitHub. All credit for this research goes to the researchers on this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He is currently working in the Christian Doppler Laboratory ATHENA, and his research interests include adaptive video streaming, immersive media, machine learning, and QoS/QoE evaluation.