Zero-shot text classification with Amazon SageMaker JumpStart

Natural language processing (NLP) is the field in machine learning (ML) concerned with giving computers the ability to understand text and spoken words in the same way as human beings can. Recently, state-of-the-art architectures like the transformer architecture are used to achieve near-human performance on NLP downstream tasks like text summarization, text classification, entity recognition, and more.

Large language models (LLMs) are transformer-based models trained on a large amount of unlabeled text with hundreds of millions (BERT) to over a trillion parameters (MiCS), and whose size makes single-GPU training impractical. Due to their inherent complexity, training an LLM from scratch is a very challenging task that very few organizations can afford. A common practice for NLP downstream tasks is to take a pre-trained LLM and fine-tune it. For more information about fine-tuning, refer to Domain-adaptation Fine-tuning of Foundation Models in Amazon SageMaker JumpStart on Financial data and Fine-tune transformer language models for linguistic diversity with Hugging Face on Amazon SageMaker.

Zero-shot learning in NLP allows a pre-trained LLM to generate responses to tasks that it hasn’t been explicitly trained for (even without fine-tuning). For text classification specifically, zero-shot text classification is a task in which an NLP model is used to classify text from unseen classes, in contrast to supervised classification, where NLP models can only classify text that belongs to classes in the training data.

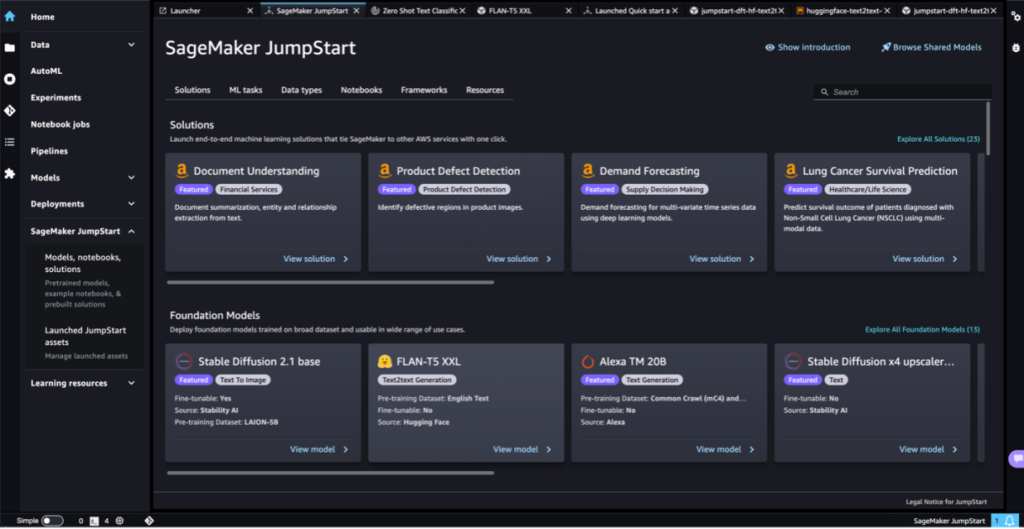

We recently launched zero-shot classification model support in Amazon SageMaker JumpStart. SageMaker JumpStart is the ML hub of Amazon SageMaker that provides access to pre-trained foundation models (FMs), LLMs, built-in algorithms, and solution templates to help you quickly get started with ML. In this post, we show how you can perform zero-shot classification using pre-trained models in SageMaker JumpStart. You’ll learn how to use the SageMaker JumpStart UI and SageMaker Python SDK to deploy the solution and run inference using the available models.

Zero-shot learning

Zero-shot classification is a paradigm where a model can classify new, unseen examples that belong to classes that weren’t present in the training data. For example, a language model that has been trained to understand human language can be used to classify New Year’s resolutions tweets on multiple classes like career, health, and finance, without the language model being explicitly trained on the text classification task. This is in contrast to fine-tuning the model, since the latter implies re-training the model (through transfer learning) while zero-shot learning doesn’t require additional training.

The following diagram illustrates the differences between transfer learning (left) vs. zero-shot learning (right).

Yin et al. proposed a framework for creating zero-shot classifiers using natural language inference (NLI). The framework works by posing the sequence to be classified as an NLI premise and constructing a hypothesis from each candidate label. For example, if we want to evaluate whether a sequence belongs to the class politics, we could construct a hypothesis of “This text is about politics.” The probabilities for entailment and contradiction are then converted to label probabilities. As a quick review, NLI considers two sentences: a premise and a hypothesis. The task is to determine whether the hypothesis is true (entailment) or false (contradiction) given the premise. The following table provides some examples.

| Premise | Label | Hypothesis |
| --- | --- | --- |
| A man inspects the uniform of a figure in some East Asian country. | Contradiction | The man is sleeping. |
| An older and younger man smiling. | Neutral | Two men are smiling and laughing at the cats playing on the floor. |
| A soccer game with multiple males playing. | Entailment | Some men are playing a sport. |
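To make the NLI framing more concrete, the following is a minimal sketch of how per-label entailment and contradiction scores could be turned into label probabilities. The normalization shown here is one common convention and is an assumption, because the description above does not spell it out: in the single-label case the entailment logits compete across labels, and in the multi-label case each label is scored independently as entailment versus contradiction. The function names and the logits are hypothetical and for illustration only.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def build_hypothesis(label, template="This text is about {}."):
    """Turn a candidate label into an NLI hypothesis sentence."""
    return template.format(label)


def label_probabilities(nli_logits, multi_label=False):
    """Convert per-label NLI logits into label probabilities.

    nli_logits maps each candidate label to a (contradiction, neutral,
    entailment) logit triple that an NLI model would produce for the pair
    (premise = input text, hypothesis = build_hypothesis(label)).
    """
    labels = list(nli_logits)
    if multi_label:
        # Each label is judged on its own: entailment vs. contradiction.
        probs = [softmax([nli_logits[l][0], nli_logits[l][2]])[1] for l in labels]
    else:
        # Labels compete: softmax over the entailment logits of all labels.
        probs = softmax([nli_logits[l][2] for l in labels])
    return dict(zip(labels, probs))


# Hypothetical logits, for illustration only (not real model output).
example_logits = {
    "politics": (-2.0, 0.1, 3.0),
    "sports": (2.5, 0.3, -1.5),
}
single = label_probabilities(example_logits)
multi = label_probabilities(example_logits, multi_label=True)
print(build_hypothesis("politics"))
print(single)
print(multi)
```

In the single-label case the probabilities sum to 1 across labels, whereas in the multi-label case each probability stands alone, so several labels can score high at the same time.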

Solution overview

In this post, we discuss the following:

- How to deploy pre-trained zero-shot text classification models using the SageMaker JumpStart UI and run inference on the deployed model using short text data

- How to use the SageMaker Python SDK to access the pre-trained zero-shot text classification models in SageMaker JumpStart and use the inference script to deploy the model to a SageMaker endpoint for a real-time text classification use case

- How to use the SageMaker Python SDK to access pre-trained zero-shot text classification models and use SageMaker batch transform for a batch text classification use case

SageMaker JumpStart provides one-click fine-tuning and deployment for a wide variety of pre-trained models across popular ML tasks, as well as a selection of end-to-end solutions that solve common business problems. These features remove the heavy lifting from each step of the ML process, simplifying the development of high-quality models and reducing time to deployment. The JumpStart APIs allow you to programmatically deploy and fine-tune a vast selection of pre-trained models on your own datasets.

The JumpStart model hub provides access to a large number of NLP models that enable transfer learning and fine-tuning on custom datasets. As of this writing, the JumpStart model hub contains over 300 text models across a variety of popular models, such as Stable Diffusion, Flan T5, Alexa TM, Bloom, and more.

Note that by following the steps in this section, you’ll deploy infrastructure to your AWS account that may incur costs.

Deploy a standalone zero-shot text classification model

In this section, we demonstrate how to deploy a zero-shot classification model using SageMaker JumpStart. You can access pre-trained models through the JumpStart landing page in Amazon SageMaker Studio. Complete the following steps:

- In SageMaker Studio, open the JumpStart landing page. Refer to Open and use JumpStart for more details on how to navigate to SageMaker JumpStart.

- In the Text Models carousel, locate the “Zero-Shot Text Classification” model card.

- Choose View model to access the facebook-bart-large-mnli model. Alternatively, you can search for the zero-shot classification model in the search bar and get to the model in SageMaker JumpStart.

- Specify a deployment configuration, SageMaker hosting instance type, endpoint name, Amazon Simple Storage Service (Amazon S3) bucket name, and other required parameters.

- Optionally, you can specify security configurations like AWS Identity and Access Management (IAM) role, VPC settings, and AWS Key Management Service (AWS KMS) encryption keys.

- Choose Deploy to create a SageMaker endpoint.

This step takes a couple of minutes to complete. When it’s complete, you can run inference against the SageMaker endpoint that hosts the zero-shot classification model.

In the following video, we show a walkthrough of the steps in this section.

Use JumpStart programmatically with the SageMaker SDK

In the SageMaker JumpStart section of SageMaker Studio, under Quick start solutions, you can find the solution templates. SageMaker JumpStart solution templates are one-click, end-to-end solutions for many common ML use cases. As of this writing, over 20 solutions are available for multiple use cases, such as demand forecasting, fraud detection, and personalized recommendations, to name a few.

The “Zero Shot Text Classification with Hugging Face” solution provides a way to classify text without the need to train a model for specific labels (zero-shot classification) by using a pre-trained text classifier. The default zero-shot classification model for this solution is the facebook-bart-large-mnli (BART) model. For this solution, we use the 2015 New Year’s Resolutions dataset to classify resolutions. A subset of the original dataset containing only the Resolution_Category (ground truth label) and the text columns is included in the solution’s assets.

For synchronous (real-time) inference, the input data includes text strings, a list of desired categories for classification, and whether the classification is multi-label or not. For asynchronous (batch) inference, we provide a list of text strings, the list of categories for each string, and whether the classification is multi-label or not in a JSON lines formatted text file.
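As an illustration of the batch input format described above, the following sketch writes one JSON object per line, one line per text string. The key names (inputs, parameters, candidate_labels, multi_label) are assumptions modeled on the Hugging Face zero-shot classification pipeline; check the solution notebook for the exact schema your endpoint expects. The example resolutions are made up.

```python
import json


def to_json_lines(texts, candidate_labels, multi_label=False):
    """Serialize one JSON object per line, one line per text string."""
    lines = []
    for text in texts:
        record = {
            "inputs": text,  # assumed key name
            "parameters": {  # assumed key names
                "candidate_labels": candidate_labels,
                "multi_label": multi_label,
            },
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


# Made-up example texts; the categories echo the ones mentioned in this post.
resolutions = ["Read more books this year", "Save for a house deposit"]
categories = ["career", "health", "finance"]
jsonl = to_json_lines(resolutions, categories)
print(jsonl)
```

Each line is a complete JSON document, which lets a batch job split the file by line and process records independently.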

The result of the inference is a JSON object that looks something like the following screenshot.

We have the original text in the sequence field, the labels used for the text classification in the labels field, and the probability assigned to each label (in the same order of appearance) in the scores field.
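Because labels and scores are aligned by position, a small helper can pair them up and pick the most likely label. The following is a sketch that assumes only the three fields named above (sequence, labels, scores); the sample response is hypothetical and not real model output.

```python
import json


def rank_labels(response_body):
    """Pair each label with its score and sort from most to least likely."""
    result = json.loads(response_body)
    pairs = list(zip(result["labels"], result["scores"]))
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Hypothetical response, for illustration only (not real model output).
sample_body = json.dumps({
    "sequence": "Save for a house deposit",
    "labels": ["finance", "career", "health"],
    "scores": [0.8, 0.15, 0.05],
})
ranked = rank_labels(sample_body)
top_label, top_score = ranked[0]
print(top_label, top_score)
```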

To deploy the Zero Shot Text Classification with Hugging Face solution, complete the following steps:

- On the SageMaker JumpStart landing page, choose Models, notebooks, solutions in the navigation pane.

- In the Solutions section, choose Explore All Solutions.

- On the Solutions page, choose the Zero Shot Text Classification with Hugging Face model card.

- Review the deployment details and if you agree, choose Launch.

The deployment will provision a SageMaker real-time endpoint for real-time inference and an S3 bucket for storing the batch transformation results.

The following diagram illustrates the architecture of this method.

Perform real-time inference using a zero-shot classification model

In this section, we review how to use the Python SDK to run zero-shot text classification (using any of the available models) in real time using a SageMaker endpoint.

- First, we configure the inference payload request to the model. This is model dependent, but for the BART model, the input is a JSON object that carries the text to classify, the candidate labels, and a flag indicating whether the classification is multi-label.

- Note that the BART model is not explicitly trained on the candidate_labels. We use the zero-shot classification technique to classify the text sequence into unseen classes. In this solution, the text comes from the New Year’s resolutions dataset and is classified against the defined classes.

- Next, you can invoke a SageMaker endpoint with the zero-shot payload. The SageMaker endpoint is deployed as part of the SageMaker JumpStart solution.

- The inference response object contains the original sequence, the labels sorted by score from max to min, and the scores per label.
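The steps above can be sketched as follows. This is an illustration under stated assumptions, not the solution’s own code: the payload key names (inputs, parameters, multi_label) and the application/json content type are assumed from the Hugging Face zero-shot pipeline, the helper names are hypothetical, and the example text is made up. The call to invoke_endpoint on the sagemaker-runtime client is the standard boto3 way to query a real-time endpoint.

```python
import json


def build_payload(text, candidate_labels, multi_label=False):
    """Assemble the zero-shot request body (key names are assumptions)."""
    return {
        "inputs": text,
        "parameters": {
            "candidate_labels": candidate_labels,
            "multi_label": multi_label,
        },
    }


def classify(endpoint_name, payload, runtime_client=None):
    """Send the payload to a SageMaker real-time endpoint and decode the reply."""
    if runtime_client is None:
        # Imported lazily so the helper can be exercised without AWS access.
        import boto3
        runtime_client = boto3.client("sagemaker-runtime")
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream of bytes containing the JSON result.
    return json.loads(response["Body"].read())


# Made-up example text; the classes echo the ones mentioned in this post.
payload = build_payload("Save for a house deposit", ["career", "health", "finance"])
print(json.dumps(payload, indent=2))

# Example call (requires AWS credentials and a deployed endpoint):
# result = classify("my-zero-shot-endpoint", payload)  # hypothetical endpoint name
# print(result["labels"][0], result["scores"][0])
```

Passing the client in as an argument keeps the helper easy to test with a stub and lets you reuse one client across many requests.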

Run a SageMaker batch transform job using the Python SDK

This section describes how to run batch transform inference with the zero-shot classification facebook-bart-large-mnli model using the SageMaker Python SDK. Complete the following steps:

- Format the input data in JSON lines format and upload the file to Amazon S3. SageMaker batch transform will perform inference on the data points uploaded in the S3 file.

- Set up the model deployment artifacts with the following parameters:

  - model_id – Use huggingface-zstc-facebook-bart-large-mnli.

  - deploy_image_uri – Use the image_uris Python SDK function to get the pre-built SageMaker Docker image for the model_id. The function returns the Amazon Elastic Container Registry (Amazon ECR) URI.

  - deploy_source_uri – Use the script_uris utility API to retrieve the S3 URI that contains scripts to run pre-trained model inference. We specify the script_scope as inference.

  - model_uri – Use the model_uris utility to get the model artifacts from Amazon S3 for the desired model_id.

- Use HF_TASK to define the task for the Hugging Face transformers pipeline and HF_MODEL_ID to define the model used to classify the text. For a complete list of tasks, see Pipelines in the Hugging Face documentation.

- Create a Hugging Face model object to be deployed with the SageMaker batch transform job.

- Create a transformer to run a batch job.

- Start a batch transform job and use S3 data as input.
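The steps above might look roughly like the following with the SageMaker Python SDK. Treat this as a sketch under assumptions rather than the solution’s exact code: the instance type, the SingleRecord strategy, the line-based splitting, and the helper names are illustrative choices, and role, input_s3_uri, and output_s3_uri are placeholders you supply. The HF_TASK and HF_MODEL_ID values correspond to the Hugging Face zero-shot-classification task and the facebook/bart-large-mnli model.

```python
def hub_environment():
    """Environment variables that tell the Hugging Face container what to run."""
    return {
        "HF_TASK": "zero-shot-classification",
        "HF_MODEL_ID": "facebook/bart-large-mnli",
    }


def run_batch_transform(role, input_s3_uri, output_s3_uri,
                        model_id="huggingface-zstc-facebook-bart-large-mnli",
                        instance_type="ml.m5.xlarge"):  # instance type is an assumption
    """Create the model, build a transformer, and start the batch job."""
    # Imported lazily so hub_environment() works without the SDK installed.
    from sagemaker import image_uris, model_uris, script_uris
    from sagemaker.huggingface import HuggingFaceModel

    # Pre-built inference container image (Amazon ECR URI) for this model.
    deploy_image_uri = image_uris.retrieve(
        region=None, framework=None, image_scope="inference",
        model_id=model_id, model_version="*", instance_type=instance_type,
    )
    # Inference scripts; retrieved to mirror the steps above. This sketch relies
    # on HF_TASK / HF_MODEL_ID instead of passing a custom entry point.
    deploy_source_uri = script_uris.retrieve(
        model_id=model_id, model_version="*", script_scope="inference",
    )
    # Pre-trained model artifacts in Amazon S3.
    model_uri = model_uris.retrieve(
        model_id=model_id, model_version="*", model_scope="inference",
    )

    model = HuggingFaceModel(
        model_data=model_uri,
        image_uri=deploy_image_uri,
        env=hub_environment(),
        role=role,
    )
    transformer = model.transformer(
        instance_count=1,
        instance_type=instance_type,
        strategy="SingleRecord",   # one JSON line per request (assumption)
        assemble_with="Line",      # one result per output line (assumption)
        output_path=output_s3_uri,
    )
    transformer.transform(
        data=input_s3_uri,
        content_type="application/json",
        split_type="Line",
        wait=True,
    )
    return transformer


print(hub_environment())
# Example call (requires AWS credentials; all three arguments are placeholders):
# run_batch_transform(role, "s3://<bucket>/input/data.jsonl", "s3://<bucket>/results")
```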

You can monitor your batch processing job on the SageMaker console (choose Batch transform jobs under Inference in the navigation pane). When the job is complete, you can check the model prediction output in the S3 file specified in output_path.

For a list of all the available pre-trained models in SageMaker JumpStart, refer to Built-in Algorithms with pre-trained Model Table. Use the keyword “zstc” (short for zero-shot text classification) in the search bar to locate all the models capable of doing zero-shot text classification.

Clean up

After you’re done running the notebook, make sure to delete all resources created in the process so that you stop incurring costs for the assets deployed in this guide. The code to clean up the deployed resources is provided in the notebooks associated with the zero-shot text classification solution and model.
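If you deployed a standalone endpoint and want to remove it outside the notebooks, the following sketch shows one way to do so with boto3. It is not the notebooks’ cleanup code: the helper name is hypothetical, and it only covers the endpoint, its endpoint configuration, and the models behind it. Other resources, such as the S3 bucket created by the solution, need to be removed separately.

```python
def delete_endpoint_resources(endpoint_name, sagemaker_client=None):
    """Delete an endpoint together with its endpoint config and models."""
    if sagemaker_client is None:
        # Imported lazily so the helper can be exercised without AWS access.
        import boto3
        sagemaker_client = boto3.client("sagemaker")

    # Look up the dependent resources before deleting anything.
    endpoint = sagemaker_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sagemaker_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [variant["ModelName"] for variant in config["ProductionVariants"]]

    sagemaker_client.delete_endpoint(EndpointName=endpoint_name)
    sagemaker_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sagemaker_client.delete_model(ModelName=name)

    return {"endpoint": endpoint_name, "endpoint_config": config_name, "models": model_names}


# Example call (requires AWS credentials and an existing endpoint):
# delete_endpoint_resources("my-zero-shot-endpoint")  # hypothetical endpoint name
```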

Default security configurations

The SageMaker JumpStart models are deployed using the following default security configurations:

To learn more about SageMaker security-related topics, check out Configure security in Amazon SageMaker.

Conclusion

In this post, we showed you how to deploy a zero-shot classification model using the SageMaker JumpStart UI and perform inference using the deployed endpoint. We used the SageMaker JumpStart New Year’s resolutions solution to show how you can use the SageMaker Python SDK to build an end-to-end solution and implement a zero-shot classification application. SageMaker JumpStart provides access to hundreds of pre-trained models and solutions for tasks like computer vision, natural language processing, recommendation systems, and more. Try out the solution on your own and let us know your thoughts.

About the authors

David Laredo is a Prototyping Architect at AWS Envision Engineering in LATAM, where he has helped develop multiple machine learning prototypes. Previously, he has worked as a Machine Learning Engineer and has been doing machine learning for over 5 years. His areas of interest are NLP, time series, and end-to-end ML.


Vikram Elango is an AI/ML Specialist Solutions Architect at Amazon Web Services, based in Virginia, US. Vikram helps financial and insurance industry customers with design and thought leadership to build and deploy machine learning applications at scale. He is currently focused on natural language processing, responsible AI, inference optimization, and scaling ML across the enterprise. In his spare time, he enjoys traveling, hiking, cooking, and camping with his family.


Dr. Vivek Madan is an Applied Scientist with the Amazon SageMaker JumpStart team. He got his PhD from University of Illinois at Urbana-Champaign and was a Post Doctoral Researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.
