Mastering GPUs: A Newbie’s Information to GPU-Accelerated DataFrames in Python

Partnership Publish

In the event you’re working in python with giant datasets, maybe a number of gigabytes in dimension, you may doubtless relate to the frustration of ready hours in your queries to complete as your CPU-based pandas DataFrame struggles to carry out operations. This precise state of affairs is the place a pandas consumer ought to think about leveraging the facility of GPUs for information processing with RAPIDS cuDF.

RAPIDS cuDF, with its pandas-like API, allows information scientists and engineers to shortly faucet into the immense potential of parallel computing on GPUs–with just some code line modifications.

In the event you’re unfamiliar with GPU acceleration, this submit is a simple introduction to the RAPIDS ecosystem and showcases the commonest performance of cuDF, the GPU-based pandas DataFrame counterpart.

Need a useful abstract of the following pointers? Observe together with the downloadable cuDF cheat sheet.

Leveraging GPUs with cuDF DataFrame

cuDF is an information science constructing block for the RAPIDS suite of GPU-accelerated libraries. It’s an EDA workhorse you need to use to construct permitting information pipelines to course of information and derive new options. As a elementary part inside the RAPIDS suite, cuDF underpins the opposite libraries, solidifying its function as a standard constructing block. Like all parts within the RAPIDS suite, cuDF employs the CUDA backend to energy GPU computations.

Nevertheless, with a simple and acquainted Python interface, cuDF customers need not work together immediately with that layer.

How cuDF Can Make Your Information Science Work Sooner

Are you bored with watching the clock whereas your script runs? Whether or not you are dealing with string information or working with time collection, there are various methods you need to use cuDF to drive your information work ahead.

- Time series analysis: Whether or not you are resampling information, extracting options, or conducting advanced computations, cuDF affords a considerable speed-up, probably as much as 880x sooner than pandas for time-series evaluation.

- Real-time exploratory data analysis (EDA): Searching by giant datasets generally is a chore with conventional instruments, however cuDF’s GPU-accelerated processing energy makes real-time exploration of even the largest information units potential

- Machine learning (ML) data preparation: Pace up information transformation duties and put together your information for generally used ML algorithms, comparable to regression, classification and clustering, with cuDF’s acceleration capabilities. Environment friendly processing means faster mannequin improvement and means that you can get in direction of the deployment faster.

- Large-scale data visualization: Whether or not you are creating warmth maps for geographic information or visualizing advanced monetary traits, builders can deploy information visualization libraries with high-performance and high-FPS information visualization through the use of cuDF and cuxfilter. This integration permits for real-time interactivity to grow to be a significant part of your analytics cycle.

- Giant-scale information filtering and transformation: For big datasets exceeding a number of gigabytes, you may carry out filtering and transformation duties utilizing cuDF in a fraction of the time it takes with pandas.

- String data processing: Historically, string information processing has been a difficult and sluggish activity because of the advanced nature of textual information. These operations are made easy with GPU-acceleration

- GroupBy operations: GroupBy operations are a staple in information evaluation however could be resource-intensive. cuDF quickens these duties considerably, permitting you to realize insights sooner when splitting and aggregating your information

Acquainted interface for GPU processing

The core premise of RAPIDS is to offer a well-recognized consumer expertise to common information science instruments in order that the facility of NVIDIA GPUs is well accessible for all practitioners. Whether or not you’re performing ETL, constructing ML fashions, or processing graphs, if you understand pandas, NumPy, scikit-learn or NetworkX, you’ll really feel at dwelling when utilizing RAPIDS.

Switching from CPU to GPU Information Science stack has by no means been simpler: with as little change as importing cuDF as an alternative of pandas, you may harness the large energy of NVIDIA GPUs, rushing up the workloads 10-100x (on the low finish), and having fun with extra productiveness — all whereas utilizing your favourite instruments.

Test the pattern code beneath that presents how acquainted cuDF API is to anybody utilizing pandas.

import pandas as pd

import cudf

df_cpu = pd.read_csv('/information/pattern.csv')

df_gpu = cudf.read_csv('/information/pattern.csv')

Loading information out of your favourite information sources

Studying and writing capabilities of cuDF have grown considerably for the reason that first launch of RAPIDS in October 2018. The information could be native to a machine, saved in an on-prem cluster, or within the cloud. cuDF makes use of fsspec library to summary a lot of the file-system associated duties so you may concentrate on what issues probably the most: creating options and constructing your mannequin.

Due to fsspec studying information from both native or cloud file system requires solely offering credentials to the latter. The instance beneath reads the identical file from two totally different areas,

import cudf

df_local = cudf.read_csv('/information/pattern.csv')

df_remote = cudf.read_csv(

's3://<bucket>/pattern.csv'

, storage_options = {'anon': True})

cuDF helps a number of file codecs: text-based codecs like CSV/TSV or JSON, columnar-oriented codecs like Parquet or ORC, or row-oriented codecs like Avro. When it comes to file system assist, cuDF can learn recordsdata from native file system, cloud suppliers like AWS S3, Google GS, or Azure Blob/Information Lake, on- or off-prem Hadoop Information Techniques, and in addition immediately from HTTP or (S)FTP net servers, Dropbox or Google Drive, or Jupyter File System.

Creating and saving DataFrames with ease

Studying recordsdata shouldn’t be the one solution to create cuDF DataFrames. Actually, there are a minimum of 4 methods to take action:

From a listing of values you may create DataFrame with one column,

cudf.DataFrame([1,2,3,4], columns=['foo'])

Passing a dictionary if you wish to create a DataFrame with a number of columns,

cudf.DataFrame({

'foo': [1,2,3,4]

, 'bar': ['a','b','c',None]

})

Creating an empty DataFrame and assigning to columns,

df_sample = cudf.DataFrame()

df_sample['foo'] = [1,2,3,4]

df_sample['bar'] = ['a','b','c',None]

Passing a listing of tuples,

cudf.DataFrame([

(1, 'a')

, (2, 'b')

, (3, 'c')

, (4, None)

], columns=['ints', 'strings'])

You can too convert to and from different reminiscence representations:

- From an inner GPU matrix represented as an DeviceNDArray,

- By DLPack reminiscence objects used to share tensors between deep learning frameworks and Apache Arrow format that facilitates a way more handy approach of manipulating reminiscence objects from numerous programming languages,

- To changing to and from pandas DataFrames and Collection.

As well as, cuDF helps saving the info saved in a DataFrame into a number of codecs and file methods. Actually, cuDF can retailer information in all of the codecs it could possibly learn.

All of those capabilities make it potential to stand up and working shortly it doesn’t matter what your activity is or the place your information lives.

Extracting, remodeling, and summarizing information

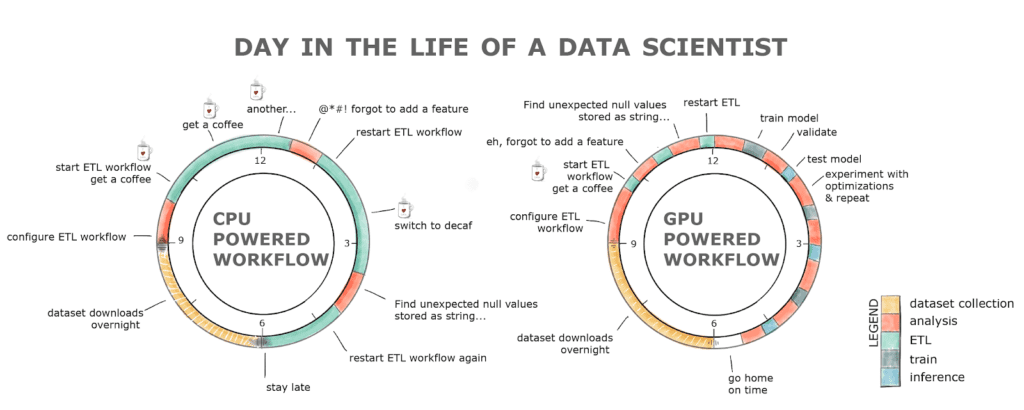

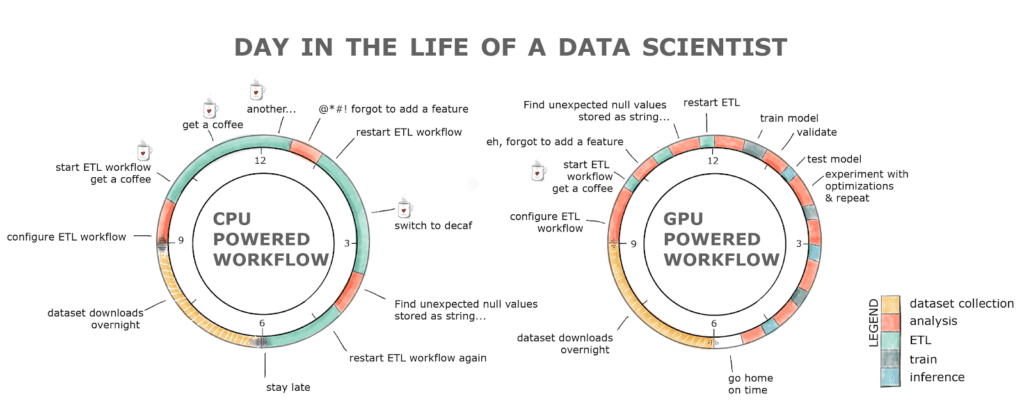

The elemental information science activity, and the one that every one information scientists complain about, is cleansing, featurizing and getting conversant in the dataset. We spend 80% of our time doing that. Why does it take a lot time?

One of many causes is as a result of the questions we ask the dataset take too lengthy to reply. Anybody who has tried to learn and course of a 2GB dataset on a CPU is aware of what we’re speaking about.

Moreover, since we’re human and we make errors, rerunning a pipeline would possibly shortly flip right into a full day train. This leads to misplaced productiveness and, doubtless, a espresso dependancy if we check out the chart beneath.

Determine 1. Typical workday for a developer utilizing a GPU- vs. CPU-powered workflow

RAPIDS with the GPU-powered workflow alleviates all these hurdles. The ETL stage is generally anyplace between 8-20x sooner, so loading that 2GB dataset takes seconds in comparison with minutes on a CPU, cleansing and reworking the info can also be orders of magnitude sooner! All this with a well-recognized interface and minimal code modifications.

Working with strings and dates on GPUs

Not more than 5 years in the past working with strings and dates on GPUs was thought of nearly not possible and past the attain of low-level programming languages like CUDA. In any case, GPUs have been designed to course of graphics, that’s, to govern giant arrays and matrices of ints and floats, not strings or dates.

RAPIDS means that you can not solely learn strings into the GPU reminiscence, but in addition extract options, course of, and manipulate them. If you’re conversant in Regex then extracting helpful data from a doc on a GPU is now a trivial activity because of cuDF. For instance, if you wish to discover and extract all of the phrases in your doc that match the [a-z]*circulate sample (like, informationcirculate, workcirculate, or circulate) all it’s worthwhile to do is,

df['string'].str.findall('([a-z]*circulate)')

Extracting helpful options from dates or querying the info for a particular time frame has grow to be simpler and sooner because of RAPIDS as nicely.

dt_to = dt.datetime.strptime("2020-10-03", "%Y-%m-%d")

df.question('dttm <= @dt_to')

Empowering Pandas Customers with GPU-acceleration

The transition from a CPU to a GPU information science stack is simple with RAPIDS. Importing cuDF as an alternative of pandas is a small change that may ship immense advantages. Whether or not you are engaged on an area GPU field or scaling as much as full-fledged information facilities, the GPU-accelerated energy of RAPIDS offers 10-100x velocity enhancements (on the low finish). This not solely results in elevated productiveness but in addition permits for environment friendly utilization of your favourite instruments, even in probably the most demanding, large-scale eventualities.

RAPIDS has really revolutionized the panorama of information processing, enabling information scientists to finish duties in minutes that after took hours and even days, resulting in elevated productiveness and decrease total prices.

To get began on making use of these methods to your dataset, learn the accelerated data analytics series on NVIDIA Technical Blog.

Editor’s Be aware: This post was up to date with permission and initially tailored from the NVIDIA Technical Weblog.