A New AI Research From DeepMind Proposes Two Direction- And Structure-Aware Positional Encodings For Directed Graphs

Transformer models have recently gained a lot of popularity. These neural network models track relationships in sequential input, such as the words in a sentence, to learn context and meaning. With the introduction of models like GPT-3.5 and GPT-4 by OpenAI, the field of Artificial Intelligence, and with it Deep Learning, has advanced considerably and has been the talk of the town. Competitive programming, conversational question answering, combinatorial optimization problems, and graph learning tasks all incorporate transformers as key components.

Transformer models are used in competitive programming to produce solutions from textual descriptions. The well-known chatbot ChatGPT, a GPT-based model and a popular conversational question-answering model, is the best-known example of a transformer model. Transformers have also been used to solve combinatorial optimization problems like the Travelling Salesman Problem, and they have been successful in graph learning tasks, especially when it comes to predicting the properties of molecules.

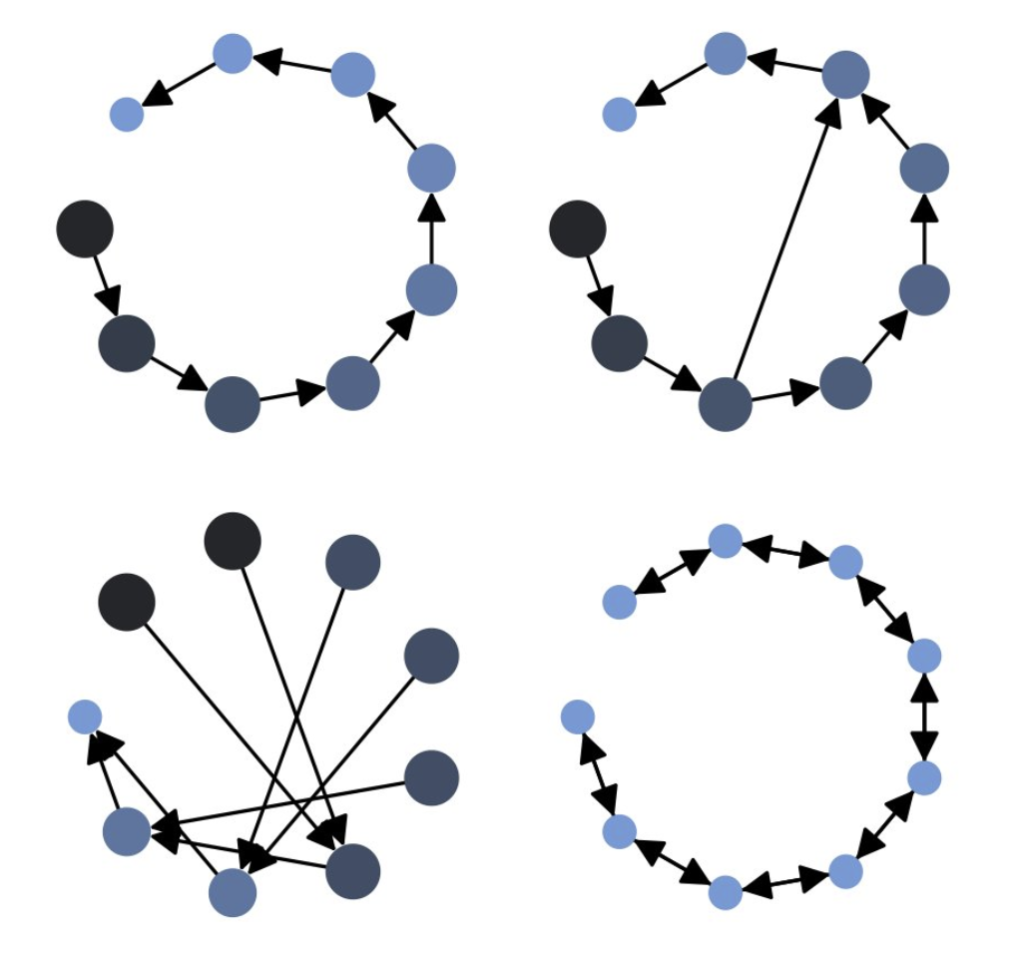

Transformer models have shown great versatility across modalities such as images, audio, video, and undirected graphs, but transformers for directed graphs have received little attention. To address this gap, a team of researchers has proposed two direction- and structure-aware positional encodings specifically designed for directed graphs. The Magnetic Laplacian, a direction-aware extension of the Combinatorial Laplacian, provides the foundation for the first positional encoding. Its eigenvectors capture important structural information while taking into account the directionality of edges in a graph. By including these eigenvectors in the positional encoding scheme, the transformer model becomes more aware of the directionality of the graph, which enables it to represent the semantics and dependencies found in directed graphs.
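
To make the idea concrete, here is a minimal sketch of a Magnetic Laplacian and its eigenvectors. It assumes one common unnormalized definition from the literature, L = D_s - A_s * exp(i * 2πq(A - Aᵀ)) with A_s = (A + Aᵀ)/2; the paper's exact normalization may differ. The function names, the potential `q = 0.25`, and the example graph are all hypothetical choices made for illustration.

```python
import numpy as np


def magnetic_laplacian(adj, q=0.25):
    """Unnormalized Magnetic Laplacian (assumed definition, see lead-in)."""
    adj = np.asarray(adj, dtype=float)
    sym = 0.5 * (adj + adj.T)                # symmetrized adjacency A_s
    deg = np.diag(sym.sum(axis=1))           # degree matrix D_s of A_s
    theta = 2.0 * np.pi * q * (adj - adj.T)  # antisymmetric phase: its sign encodes direction
    return deg - sym * np.exp(1j * theta)


def magnetic_positional_encoding(adj, q=0.25, k=2):
    """Return the k smallest eigenvalues and their complex eigenvectors."""
    lap = magnetic_laplacian(adj, q)
    # The matrix is Hermitian, so eigh returns real eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(lap)
    return eigvals[:k], eigvecs[:, :k]


# Hypothetical example graph: the directed path 0 -> 1 -> 2 -> 3.
path = np.zeros((4, 4))
for i in range(3):
    path[i, i + 1] = 1.0

eigvals, eigvecs = magnetic_positional_encoding(path, q=0.25, k=2)
```

Reversing every edge complex-conjugates the matrix, which is how direction ends up in the phase of the eigenvectors. Because eigenvectors are only defined up to a unit-modulus complex factor, a practical encoding also needs some convention for fixing that phase before the real and imaginary parts are used as features.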

Directional random walk encodings are the second positional encoding technique that has been proposed. Random walks are a popular method for exploring and analyzing graphs. Here, the model learns more about the directional structure of a directed graph by taking random walks in the graph and incorporating the walk information into the positional encodings. Because this information helps the model understand the links and information flow inside the graph, it is useful in a variety of downstream tasks.
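
The following sketch shows one simple way walk information can reflect edge direction: it computes k-step landing probabilities for walks that follow the edges and for walks that run against them. It is an illustration under stated assumptions, not the paper's exact formulation. In particular, the self-loop added to nodes without outgoing edges and all function names are hypothetical choices.

```python
def transition_matrix(adj):
    """Row-stochastic walk matrix; a node with no outgoing edge gets a self-loop (assumption)."""
    n = len(adj)
    rows = []
    for i in range(n):
        out_degree = sum(adj[i])
        if out_degree == 0:
            rows.append([1.0 if j == i else 0.0 for j in range(n)])
        else:
            rows.append([adj[i][j] / out_degree for j in range(n)])
    return rows


def matmul(a, b):
    """Plain square-matrix product on nested lists."""
    n = len(a)
    return [[sum(a[i][m] * b[m][j] for m in range(n)) for j in range(n)]
            for i in range(n)]


def directional_walk_probabilities(adj, num_steps):
    """For k = 1..num_steps, return (forward, backward) k-step landing probabilities."""
    reversed_adj = [list(col) for col in zip(*adj)]  # transpose flips every edge
    forward_step = transition_matrix(adj)
    backward_step = transition_matrix(reversed_adj)
    forward, backward = forward_step, backward_step
    result = []
    for _ in range(num_steps):
        result.append((forward, backward))
        forward = matmul(forward, forward_step)
        backward = matmul(backward, backward_step)
    return result


# Hypothetical example graph: the directed path 0 -> 1 -> 2.
path = [[0, 1, 0],
        [0, 0, 1],
        [0, 0, 0]]
walks = directional_walk_probabilities(path, num_steps=2)
forward_2, backward_2 = walks[1]
```

On this path, a two-step forward walk from node 0 always lands on node 2, while a two-step backward walk from node 2 always lands on node 0. The two sets of probabilities differ, so together they tell the model which way the edges point.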

The team has shared that, in their empirical analysis, the direction- and structure-aware positional encodings performed well in a variety of downstream tasks. One of these tasks, correctness testing of sorting networks, involves determining whether a particular set of operations actually constitutes a sorting network, and the model makes use of the directionality information in the graph representation of sorting networks. On the Open Graph Benchmark Code2 dataset, the proposed model outperforms the previous state-of-the-art method by a relative 14.7%.
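
For readers unfamiliar with the task, the sketch below shows what "correct" means for a sorting network. It is a brute-force ground-truth check based on the well-known zero-one principle (a comparator network sorts every input if and only if it sorts every 0/1 input), not the learned model from the paper. The comparator representation as `(i, j)` wire pairs and the function names are assumptions made for illustration.

```python
from itertools import product


def apply_network(comparators, values):
    """Run each comparator (i, j), with i < j, so the smaller value ends up on wire i."""
    vals = list(values)
    for i, j in comparators:
        if vals[i] > vals[j]:
            vals[i], vals[j] = vals[j], vals[i]
    return vals


def is_sorting_network(comparators, num_wires):
    """Zero-one principle: checking all 2**num_wires binary inputs is sufficient."""
    return all(
        apply_network(comparators, bits) == sorted(bits)
        for bits in product((0, 1), repeat=num_wires)
    )


# Hypothetical three-wire examples.
complete = [(0, 1), (1, 2), (0, 1)]
incomplete = [(0, 1), (1, 2)]
print(is_sorting_network(complete, 3))    # True
print(is_sorting_network(incomplete, 3))  # False: the input (1, 1, 0) stays unsorted
```

The exhaustive check grows exponentially with the number of wires, which is one reason a learned predictor of correctness is an interesting benchmark.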

The team has summarized its contributions as follows:

- A clear connection between sinusoidal positional encodings, commonly used in transformers, and the eigenvectors of the Laplacian has been established.

- The team has proposed spectral positional encodings that extend to directed graphs, providing a way to incorporate directionality information into the positional encodings.

- Random walk positional encodings have been extended to directed graphs, enabling the model to capture the directional structure of the graph.

- The team has evaluated the predictiveness of structure-aware positional encodings for various graph distances, demonstrating their effectiveness. They have also introduced the task of predicting the correctness of sorting networks, showcasing the importance of directionality in this application.

- The team has quantified the benefits of representing a sequence of program statements as a directed graph and has proposed a new graph construction method for source code, improving predictive performance and robustness.

- A new state-of-the-art performance on the OGB Code2 dataset has been achieved, specifically for function name prediction, with a 2.85% higher F1 score and a relative improvement of 14.7%.
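
The first contribution in the list, the link between sinusoidal encodings and Laplacian eigenvectors, can be checked numerically in a simple setting. The sketch below assumes a sequence is modeled as an undirected cycle graph, where sines and cosines of the position index are exact eigenvectors of the Combinatorial Laplacian with eigenvalue 2 - 2cos(2πk/n). This is a textbook special case chosen for illustration; the paper's own derivation may use a different setup, and the function names are hypothetical.

```python
import math


def cycle_laplacian(n):
    """Combinatorial Laplacian of an undirected cycle on n >= 3 nodes."""
    lap = [[0.0] * n for _ in range(n)]
    for i in range(n):
        lap[i][i] = 2.0
        lap[i][(i + 1) % n] -= 1.0
        lap[i][(i - 1) % n] -= 1.0
    return lap


def sinusoid(n, k, phase=0.0):
    """Sinusoidal signal over positions 0..n-1 with integer frequency k."""
    return [math.sin(2.0 * math.pi * k * j / n + phase) for j in range(n)]


def eigen_residual(lap, vec, eigenvalue):
    """Largest entry of |L v - eigenvalue * v|; close to zero for an eigenvector."""
    n = len(vec)
    return max(
        abs(sum(lap[i][j] * vec[j] for j in range(n)) - eigenvalue * vec[i])
        for i in range(n)
    )


n, k = 16, 3
lap = cycle_laplacian(n)
eigenvalue = 2.0 - 2.0 * math.cos(2.0 * math.pi * k / n)
sine_residual = eigen_residual(lap, sinusoid(n, k), eigenvalue)
cosine_residual = eigen_residual(lap, sinusoid(n, k, phase=math.pi / 2), eigenvalue)
```

Both residuals are zero up to floating-point error, so the sine and cosine at each frequency span an eigenspace of the cycle's Laplacian. Spectral encodings built from Laplacian eigenvectors can therefore be read as a generalization of sinusoidal encodings from sequences to arbitrary graphs.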

Check out the Paper. All credit for this research goes to the researchers on this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.