Predict vehicle fleet failure probability using Amazon SageMaker JumpStart

Predictive maintenance is critical in automotive industries because it can avoid out-of-the-blue mechanical failures and reactive maintenance activities that disrupt operations. By predicting vehicle failures and scheduling maintenance and repairs, you can reduce downtime, improve safety, and boost productivity levels.

What if we could apply deep learning techniques to common areas that drive vehicle failures, unplanned downtime, and repair costs?

In this post, we show you how to train and deploy a model to predict vehicle fleet failure probability using Amazon SageMaker JumpStart. SageMaker JumpStart is the machine learning (ML) hub of Amazon SageMaker, providing pre-trained, publicly available models for a wide range of problem types to help you get started with ML. The solution outlined in the post is available on GitHub.

SageMaker JumpStart solution templates

SageMaker JumpStart provides one-click, end-to-end solutions for many common ML use cases. Explore the following use cases for more information on available solution templates:

The SageMaker JumpStart solution templates cover a variety of use cases, under each of which several different solution templates are offered (the solution in this post, Predictive Maintenance for Vehicle Fleets, is in the Solutions section). Choose the solution template that best fits your use case from the SageMaker JumpStart landing page. For more information on specific solutions under each use case and how to launch a SageMaker JumpStart solution, see Solution Templates.

Solution overview

The AWS predictive maintenance solution for automotive fleets applies deep learning techniques to common areas that drive vehicle failures, unplanned downtime, and repair costs. It serves as an initial building block for you to get to a proof of concept in a short period of time. This solution contains data preparation and visualization functionality within SageMaker and allows you to train and optimize the hyperparameters of deep learning models for your dataset. You can use your own data or try the solution with a synthetic dataset as part of this solution. This version processes vehicle sensor data over time. A subsequent version will process maintenance record data.

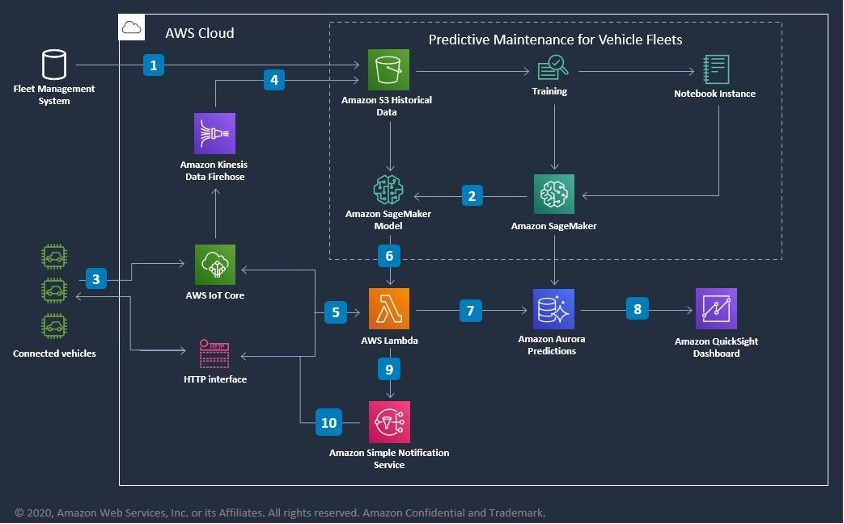

The preceding diagram demonstrates how you can use this solution with SageMaker components. As part of the solution, the following services are used:

- Amazon S3 – We use Amazon Simple Storage Service (Amazon S3) to store datasets

- SageMaker notebook – We use a notebook to preprocess and visualize the data, and to train the deep learning model

- SageMaker endpoint – We use the endpoint to deploy the trained model

The workflow includes the following steps:

- An extract of historical data is created from the Fleet Management System containing vehicle data and sensor logs.

- After the ML model is trained, the SageMaker model artifact is deployed.

- The connected vehicle sends sensor logs to AWS IoT Core (alternatively, via an HTTP interface).

- Sensor logs are persisted via Amazon Kinesis Data Firehose.

- Sensor logs are sent to AWS Lambda for querying against the model to make predictions.

- Lambda sends sensor logs to SageMaker model inference for predictions.

- Predictions are persisted in Amazon Aurora.

- Aggregate results are displayed on an Amazon QuickSight dashboard.

- Real-time notifications on the predicted probability of failure are sent to Amazon Simple Notification Service (Amazon SNS).

- Amazon SNS sends notifications back to the connected vehicle.
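The inference and notification steps of this workflow can be sketched roughly as follows. This is an illustration under stated assumptions, not the solution's own Lambda code: the endpoint name, topic ARN, alert threshold, and the JSON request and response formats are all hypothetical. The clients are passed in so the function can be exercised without AWS access.

```python
import json

# Hypothetical placeholders for illustration only; none of these values
# come from the solution itself.
ENDPOINT_NAME = "fleet-failure-endpoint"
TOPIC_ARN = "arn:aws:sns:us-east-1:123456789012:fleet-alerts"
ALERT_THRESHOLD = 0.5


def score_sensor_log(sensor_log, runtime_client, sns_client,
                     endpoint_name=ENDPOINT_NAME, topic_arn=TOPIC_ARN,
                     threshold=ALERT_THRESHOLD):
    """Send one sensor log to the endpoint and notify if failure looks likely."""
    # Assumption: the endpoint accepts a JSON body and returns a single
    # JSON number, the predicted probability of failure.
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(sensor_log).encode("utf-8"),
    )
    probability = float(json.loads(response["Body"].read()))

    # Publish a notification only when the probability crosses the threshold.
    if probability >= threshold:
        sns_client.publish(
            TopicArn=topic_arn,
            Message=json.dumps({
                "vehicle_id": sensor_log.get("vehicle_id"),
                "failure_probability": probability,
            }),
        )
    return probability
```

Inside a Lambda function, the two clients would typically be created with `boto3.client("sagemaker-runtime")` and `boto3.client("sns")`.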

The solution consists of six notebooks:

- 0_demo.ipynb – A quick preview of our solution

- 1_introduction.ipynb – Introduction and solution overview

- 2_data_preparation.ipynb – Prepare a sample dataset

- 3_data_visualization.ipynb – Visualize our sample dataset

- 4_model_training.ipynb – Train a model on our sample dataset to detect failures

- 5_results_analysis.ipynb – Analyze the results from the model we trained

Prerequisites

Amazon SageMaker Studio is the integrated development environment (IDE) within SageMaker that provides us with all the ML features that we need in a single pane of glass. Before we can run SageMaker JumpStart, we need to set up SageMaker Studio. You can skip this step if you already have your own version of SageMaker Studio running.

The first thing we need to do before we can use any AWS services is to make sure we have signed up for and created an AWS account. Then we create an administrative user and a group. For instructions on both steps, refer to Set Up Amazon SageMaker Prerequisites.

The next step is to create a SageMaker domain. A domain sets up all the storage and allows you to add users to access SageMaker. For more information, refer to Onboard to Amazon SageMaker Domain. This demo is created in the AWS Region us-east-1.

Finally, you launch SageMaker Studio. For this post, we recommend launching a user profile app. For instructions, refer to Launch Amazon SageMaker Studio.

To run this SageMaker JumpStart solution and have the infrastructure deployed to your AWS account, you need to create an active SageMaker Studio instance (see Onboard to Amazon SageMaker Studio). When your instance is ready, use the instructions in SageMaker JumpStart to launch the solution. The solution artifacts are included in this GitHub repository for reference.

Launch the SageMaker JumpStart solution

To get started with the solution, complete the following steps:

- On the SageMaker Studio console, choose JumpStart.

- On the Solutions tab, choose Predictive Maintenance for Vehicle Fleets.

- Choose Launch.

It takes a few minutes to deploy the solution.

- After the solution is deployed, choose Open Notebook.

If you’re prompted to select a kernel, choose PyTorch 1.8 Python 3.6 for all notebooks in this solution.

Solution preview

We first work on the 0_demo.ipynb notebook. In this notebook, you can get a quick preview of what the outcome will look like when you complete the full notebook for this solution.

Choose Run and Run All Cells to run all cells in SageMaker Studio (or Cell and Run All in a SageMaker notebook instance). You can run all the cells in each notebook one after the other. Ensure all the cells finish processing before moving to the next notebook.

This solution relies on a config file to run the provisioned AWS resources. We generate the file as follows:

We have some sample time series input data consisting of a vehicle’s battery voltage and battery current over time. Next, we load and visualize the sample data. As shown in the following screenshots, the voltage and current values are on the Y axis and the readings (19 readings recorded) are on the X axis.

We have previously trained a model on this voltage and current data that predicts the probability of vehicle failure and have deployed the model as an endpoint in SageMaker. We call this endpoint with some sample data to determine the probability of failure in the next time period.

Given the sample input data, the predicted probability of failure is 45.73%.

To move to the next stage, choose Click here to continue.

Introduction and solution overview

The 1_introduction.ipynb notebook provides an overview of the solution and stages, and a look into the configuration file that has content definition, data sampling period, train and test sample count, parameters, location, and column names for generated content.

After you review this notebook, you can move to the next stage.

Prepare a sample dataset

We prepare a sample dataset in the 2_data_preparation.ipynb notebook.

We first generate the configuration file for this solution:

The config properties are as follows:

You can define your own dataset or use our scripts to generate a sample dataset:

You can merge the sensor data and fleet vehicle data together:
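A minimal sketch of such a merge in plain Python, assuming both datasets are lists of row dictionaries that share a vehicle ID column. The function name, the `vehicle_id` key, and the sample rows are hypothetical and are not taken from the solution's scripts.

```python
def merge_logs_with_fleet_info(sensor_logs, fleet_info, key="vehicle_id"):
    """Attach each vehicle's static attributes to its sensor log rows."""
    # Index the fleet records by vehicle ID for constant-time lookup.
    info_by_vehicle = {row[key]: row for row in fleet_info}

    merged = []
    for log in sensor_logs:
        info = info_by_vehicle.get(log[key])
        if info is None:
            continue  # inner join: skip logs for vehicles we have no record of
        merged.append({**info, **log})
    return merged


# Hypothetical sample rows, for illustration only.
fleet_info = [
    {"vehicle_id": "V1", "make": "Make A", "engine_type": "Engine A"},
    {"vehicle_id": "V2", "make": "Make B", "engine_type": "Engine B"},
]
sensor_logs = [
    {"vehicle_id": "V1", "timestamp": 0, "voltage": 12.4, "current": 3.1},
    {"vehicle_id": "V2", "timestamp": 0, "voltage": 11.9, "current": 2.8},
    {"vehicle_id": "V9", "timestamp": 0, "voltage": 12.0, "current": 3.0},
]

merged_rows = merge_logs_with_fleet_info(sensor_logs, fleet_info)
```

With pandas, the same inner join is usually written as `logs_df.merge(fleet_df, on="vehicle_id", how="inner")`.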

We can now move to data visualization.

Visualize our sample dataset

We visualize our sample dataset in 3_data_visualization.ipynb. This solution relies on a config file to run the provisioned AWS resources. Let’s generate the file similar to the previous notebook.

The following screenshot shows our dataset.

Next, let’s build the dataset:

Now that the dataset is ready, let’s visualize the data statistics. The following screenshot shows the data distribution based on vehicle make, engine type, vehicle class, and model.

Comparing the log data, let’s look at an example of the mean voltage across different years for Make E and C (random).

The mean of voltage and current is on the Y axis and the number of readings is on the X axis.

- Possible values for log_target: ['make', 'model', 'year', 'vehicle_class', 'engine_type']

- Randomly assigned value for log_target: make

- Possible values for log_target_value1: ['Make A', 'Make B', 'Make E', 'Make C', 'Make D']

- Randomly assigned value for log_target_value1: Make B

- Possible values for log_target_value2: ['Make A', 'Make B', 'Make E', 'Make C', 'Make D']

- Randomly assigned value for log_target_value2: Make D

Based on the above, we assume log_target: make, log_target_value1: Make B, and log_target_value2: Make D.
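To make the comparison concrete, the grouping behind it can be sketched as follows. This is an illustration only: the `mean_by_target` helper and the sample rows are hypothetical, and the notebook's own plotting code may compute these means differently.

```python
from collections import defaultdict
from statistics import mean


def mean_by_target(rows, log_target, value_column):
    """Average value_column for each distinct value of the log_target column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[log_target]].append(row[value_column])
    return {target: mean(values) for target, values in groups.items()}


# Hypothetical log rows, for illustration only.
rows = [
    {"make": "Make B", "voltage": 12.0},
    {"make": "Make B", "voltage": 13.0},
    {"make": "Make D", "voltage": 11.0},
]

means = mean_by_target(rows, log_target="make", value_column="voltage")
print(means["Make B"], means["Make D"])
```

Changing `log_target` to another column, such as `engine_type`, compares a different pair of groups with the same code.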

The following graphs break down the mean of the log data.

The following graphs visualize an example of different sensor log values against voltage and current.

Train a model on our sample dataset to detect failures

In the 4_model_training.ipynb notebook, we train a model on our sample dataset to detect failures.

Let’s generate the configuration file similar to the previous notebook, and then proceed with training configuration:

Analyze the results from the model we trained

In the 5_results_analysis.ipynb notebook, we get data from our hyperparameter tuning job, visualize metrics of all the jobs to identify the best job, and build an endpoint for the best training job.

Let’s generate the configuration file similar to the previous notebook and visualize the metrics of all the jobs. The following plot visualizes test accuracy vs. epoch.

The following screenshot shows the hyperparameter tuning jobs we ran.

You can now visualize data from the best training job (out of the four training jobs) based on the test accuracy (purple).
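Selecting the best job by test accuracy can be sketched as below. The job names, metric key, and accuracy values are hypothetical placeholders, not results from the tuning job described in this post.

```python
def best_training_job(jobs, metric="test_accuracy"):
    """Return the job summary with the highest value for the given metric."""
    if not jobs:
        raise ValueError("no training jobs to compare")
    return max(jobs, key=lambda job: job[metric])


# Hypothetical summaries of four training jobs, for illustration only.
jobs = [
    {"name": "job-1", "test_accuracy": 0.71},
    {"name": "job-2", "test_accuracy": 0.78},
    {"name": "job-3", "test_accuracy": 0.74},
    {"name": "job-4", "test_accuracy": 0.69},
]

best = best_training_job(jobs)
print(best["name"])
```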

As we can see in the following screenshots, the test loss declines and AUC and accuracy increase with epochs.

Based on the visualizations, we can now build an endpoint for the best training job:

After we build the endpoint, we can test the predictor by passing it sample sensor logs:

Given the sample input data, the predicted probability of failure is 34.60%.

Clean up

When you’ve finished with this solution, make sure that you delete all unwanted AWS resources. On the Predictive Maintenance for Vehicle Fleets page, under Delete solution, choose Delete all resources to delete all the resources associated with the solution.

You need to manually delete any extra resources that you may have created in this notebook. Some examples include extra S3 buckets (beyond the solution’s default bucket) and extra SageMaker endpoints (using a custom name).

Customize the solution

Our solution is simple to customize. To modify the input data visualizations, refer to sagemaker/3_data_visualization.ipynb. To customize the machine learning, refer to sagemaker/source/train.py and sagemaker/source/dl_utils/network.py. To customize the dataset processing, refer to sagemaker/1_introduction.ipynb on how to define the config file.

Additionally, you can change the configuration in the config file. The default configuration is as follows:

The config file has the following parameters:

- fleet_info_fn, fleet_sensor_logs_fn, fleet_dataset_fn, train_dataset_fn, and test_dataset_fn define the location of dataset files

- vehicle_id_column, timestamp_column, target_column, and period_column define the headers for columns

- dataset_size, chunksize, processing_chunksize, period_ms, and window_length define the properties of the dataset
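When editing the config file, a quick check that every parameter is still present can catch typos early. The sketch below assumes the config is loaded as a flat mapping from parameter name to value. That layout, the `missing_config_keys` helper, and every sample value are assumptions for illustration; only the parameter names come from the list above.

```python
# Parameter names from the list above, grouped by purpose.
LOCATION_KEYS = ["fleet_info_fn", "fleet_sensor_logs_fn", "fleet_dataset_fn",
                 "train_dataset_fn", "test_dataset_fn"]
COLUMN_KEYS = ["vehicle_id_column", "timestamp_column", "target_column",
               "period_column"]
DATASET_KEYS = ["dataset_size", "chunksize", "processing_chunksize",
                "period_ms", "window_length"]


def missing_config_keys(config):
    """Return the expected parameter names that are absent from config."""
    required = LOCATION_KEYS + COLUMN_KEYS + DATASET_KEYS
    return [key for key in required if key not in config]


# Hypothetical values; the solution's defaults are not reproduced here.
example_config = {
    "fleet_info_fn": "data/fleet_info.csv",
    "fleet_sensor_logs_fn": "data/sensor_logs.csv",
    "fleet_dataset_fn": "data/dataset.csv",
    "train_dataset_fn": "data/train.csv",
    "test_dataset_fn": "data/test.csv",
    "vehicle_id_column": "vehicle_id",
    "timestamp_column": "timestamp",
    "target_column": "target",
    "period_column": "period",
    "dataset_size": 100,
    "chunksize": 10,
    "processing_chunksize": 10,
    "period_ms": 60000,
    "window_length": 20,
}

print(missing_config_keys(example_config))
```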

Conclusion

In this post, we showed you how to train and deploy a model to predict vehicle fleet failure probability using SageMaker JumpStart. The solution is based on ML and deep learning models and allows a wide variety of input data, including any time-varying sensor data. Because every vehicle has different telemetry on it, you can fine-tune the provided model to the frequency and type of data that you have.

To learn more about what you can do with SageMaker JumpStart, refer to the following:

Resources

About the Authors

Rajakumar Sampathkumar is a Principal Technical Account Manager at AWS, providing customers guidance on business-technology alignment and supporting the reinvention of their cloud operation models and processes. He is passionate about cloud and machine learning. Raj is also a machine learning specialist and works with AWS customers to design, deploy, and manage their AWS workloads and architectures.
