Intelligent healthcare assistants: Empowering stakeholders with personalized support and data-driven insights

Large language models (LLMs) have revolutionized the field of natural language processing, enabling machines to understand and generate human-like text with remarkable accuracy. However, despite their impressive language capabilities, LLMs are inherently limited by the data they were trained on. Their knowledge is static and confined to that training data, which becomes problematic when dealing with dynamic and constantly evolving domains like healthcare.

The healthcare industry is a complex, ever-changing landscape with a vast and rapidly growing body of knowledge. Medical research, clinical practices, and treatment guidelines are constantly being updated, rendering even the most advanced LLMs quickly outdated. Furthermore, patient data, including electronic health records (EHRs), diagnostic reports, and medical histories, is highly personalized and unique to each individual. Relying solely on an LLM’s pre-trained knowledge is insufficient for providing accurate and personalized healthcare recommendations.

Additionally, healthcare decisions often require integrating information from multiple sources, such as medical literature, clinical databases, and patient records. LLMs lack the ability to seamlessly access and synthesize data from these diverse and distributed sources. This limits their potential to provide comprehensive and well-informed insights for healthcare applications.

Overcoming these challenges is crucial for using the full potential of LLMs in the healthcare domain. Patients, healthcare providers, and researchers require intelligent agents that can provide up-to-date, personalized, and context-aware support, drawing from the latest medical knowledge and individual patient data.

Enter LLM function calling, a powerful capability that addresses these challenges by allowing LLMs to interact with external functions or APIs, enabling them to access and use additional data sources or computational capabilities beyond their pre-trained knowledge. By combining the language understanding and generation abilities of LLMs with external data sources and services, LLM function calling opens up a world of possibilities for intelligent healthcare agents.
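
In practice, the model does not run anything itself: it returns a structured request naming a function and its arguments, and the application executes that function and passes the result back to the model. The following minimal Python sketch shows only the application side of that exchange. The function `get_member_coverage`, its in-memory data, and the `{"name": ..., "arguments": ...}` request shape are hypothetical illustrations, not a specific provider’s API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-memory stand-in for an external data source (not a real API).
_COVERAGE: Dict[str, Dict[str, Any]] = {"M-1001": {"plan": "Gold", "deductible_met": True}}


def get_member_coverage(member_id: str) -> Dict[str, Any]:
    """Hypothetical external function that the LLM may ask the application to call."""
    return _COVERAGE.get(member_id, {"error": f"unknown member {member_id}"})


# Registry mapping the function names exposed to the model onto real callables.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {"get_member_coverage": get_member_coverage}


def execute_tool_call(tool_call_json: str) -> str:
    """Run a model-proposed call of the form {"name": ..., "arguments": {...}}.

    The application checks that the name is registered, runs the function, and
    returns the result as JSON so it can be sent back to the model.
    """
    call = json.loads(tool_call_json)
    func = TOOLS.get(call.get("name"))
    if func is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    try:
        return json.dumps(func(**call.get("arguments", {})))
    except TypeError as exc:  # the model supplied wrong or missing arguments
        return json.dumps({"error": str(exc)})


if __name__ == "__main__":
    request = '{"name": "get_member_coverage", "arguments": {"member_id": "M-1001"}}'
    print(execute_tool_call(request))  # prints {"plan": "Gold", "deductible_met": true}
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed.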

In this blog post, we explore how Mistral LLMs on Amazon Bedrock can address these challenges and enable the development of intelligent healthcare agents with LLM function calling capabilities, while maintaining robust data security and privacy through Amazon Bedrock Guardrails.

Healthcare agents equipped with LLM function calling can serve as intelligent assistants for various stakeholders, including patients, healthcare providers, and researchers. They can assist patients by answering medical questions, interpreting test results, and providing personalized health advice based on their medical history and current conditions. For healthcare providers, these agents can help with tasks such as summarizing patient records, suggesting potential diagnoses or treatment plans, and staying up to date with the latest medical research. Additionally, researchers can use LLM function calling to analyze vast amounts of scientific literature, identify patterns and insights, and accelerate discoveries in areas such as drug development or disease prevention.

Benefits of LLM function calling

LLM function calling offers several advantages for enterprise applications, including enhanced decision-making, improved efficiency, personalized experiences, and scalability. By combining the language understanding capabilities of LLMs with external data sources and computational resources, enterprises can make more informed, data-driven decisions, automate and streamline various tasks, provide tailored recommendations and experiences for individual users or customers, and handle large volumes of data while processing multiple requests concurrently.

Potential use cases for LLM function calling in the healthcare domain include patient triage, medical question answering, and personalized treatment recommendations. LLM-powered agents can assist in triaging patients by analyzing their symptoms, medical history, and risk factors, and providing initial assessments or recommendations for seeking appropriate care. Patients and healthcare providers can receive accurate and up-to-date answers to medical questions by using LLMs’ ability to understand natural language queries and access relevant medical knowledge from various data sources. Additionally, by integrating with electronic health records (EHRs) and clinical decision support systems, LLM function calling can provide personalized treatment recommendations tailored to individual patients’ medical histories, conditions, and preferences.

Amazon Bedrock supports a variety of foundation models. In this post, we explore how to perform function calling using Mistral on Amazon Bedrock. Mistral supports function calling, which allows agents to invoke external functions or APIs from within a conversation flow. This capability allows agents to retrieve data, perform calculations, or use external services to enhance their conversational abilities. Function calling in Mistral works through function call blocks: the application describes each available external function (its name, purpose, and parameters), the model responds with a block naming the function to invoke and its arguments, and the application runs the function and returns the response or output to the model.
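
As one illustration, the following sketch shows how a tool could be described to a Mistral model through the Amazon Bedrock Converse API (a `toolConfig` containing a `toolSpec` entry) and how the requested calls could be read from the response. It assumes a tool-capable Mistral model ID (`mistral.mistral-large-2407-v1:0` here; check which models and IDs are available in your account and Region) and a hypothetical `get_claim_status` tool. The request is built as a plain dictionary so it can be inspected without AWS credentials.

```python
from typing import Any, Dict, List

# Assumed model ID for a tool-capable Mistral model on Amazon Bedrock;
# confirm the exact ID available in your account and Region.
MODEL_ID = "mistral.mistral-large-2407-v1:0"

# Tool definition in the Converse API "toolSpec" shape.
# The tool name, description, and schema are hypothetical examples.
CLAIM_STATUS_TOOL: Dict[str, Any] = {
    "toolSpec": {
        "name": "get_claim_status",
        "description": "Look up the processing status of an insurance claim by claim ID.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {
                    "claim_id": {"type": "string", "description": "Claim identifier"}
                },
                "required": ["claim_id"],
            }
        },
    }
}


def build_converse_request(user_text: str) -> Dict[str, Any]:
    """Build keyword arguments for bedrock-runtime converse() with one tool attached."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "toolConfig": {"tools": [CLAIM_STATUS_TOOL]},
    }


def extract_tool_uses(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the toolUse blocks from a converse() response.

    The list is empty when the model answered directly instead of requesting a tool.
    """
    if response.get("stopReason") != "tool_use":
        return []
    content = response["output"]["message"]["content"]
    return [block["toolUse"] for block in content if "toolUse" in block]


# Usage (requires AWS credentials and model access):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   response = client.converse(**build_converse_request("What is the status of claim C-42?"))
#   for tool_use in extract_tool_uses(response):
#       print(tool_use["name"], tool_use["input"])
```

Each returned `toolUse` block carries a `toolUseId`, which the application echoes back in its `toolResult` so the model can match results to requests.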

Solution overview

LLM function calling typically involves integrating an LLM with an external API or function that provides access to additional data sources or computational capabilities. The LLM acts as an interface, processing natural language inputs and generating responses based on its pre-trained knowledge and the information received from the external functions or APIs. The architecture typically consists of the LLM, a function or API integration layer, and external data sources and services.

Healthcare agents can integrate LLMs and call external functions or APIs through a series of steps: natural language input processing, self-correction, chain of thought, function or API calling through an integration layer, data integration and processing, and persona adoption. The agent receives natural language input, processes it through the LLM, calls relevant external functions or APIs if additional data or computations are required, combines the LLM’s output with the external data or results, and provides a comprehensive response to the user.
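
The request-and-response cycle described above (call the model, run any requested functions, feed the results back, and repeat until the model answers in text) can be sketched as a short loop. This is a minimal sketch that assumes Converse-style message and response dictionaries; `call_model` is a hypothetical injected callable that would wrap `converse()` in production, which keeps the loop testable without AWS. It covers only the function calling and data integration steps, not self-correction or persona prompts.

```python
from typing import Any, Callable, Dict, List

# A model callable takes the running message list and returns a Converse-style response.
ModelFn = Callable[[List[Dict[str, Any]]], Dict[str, Any]]


def run_agent_turn(
    user_text: str,
    call_model: ModelFn,
    tools: Dict[str, Callable[..., Dict[str, Any]]],
    max_steps: int = 5,
) -> str:
    """Alternate between the model and tool calls until the model replies in text."""
    messages: List[Dict[str, Any]] = [{"role": "user", "content": [{"text": user_text}]}]
    for _ in range(max_steps):
        response = call_model(messages)
        message = response["output"]["message"]
        messages.append(message)  # keep the assistant turn in the conversation history
        tool_uses = [block["toolUse"] for block in message["content"] if "toolUse" in block]
        if response.get("stopReason") != "tool_use" or not tool_uses:
            # Final answer: join the text blocks of the assistant message.
            return "".join(block.get("text", "") for block in message["content"])
        results = []
        for use in tool_uses:
            func = tools.get(use["name"])
            if func is None:
                payload, status = {"error": f"unknown tool {use['name']}"}, "error"
            else:
                payload, status = func(**use["input"]), "success"
            results.append(
                {"toolResult": {"toolUseId": use["toolUseId"],
                                "content": [{"json": payload}],
                                "status": status}}
            )
        # Tool results are returned to the model as the next user turn.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("agent did not produce a final answer within max_steps")
```

Capping the loop with `max_steps` guards against a model that keeps requesting tools without ever producing an answer.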

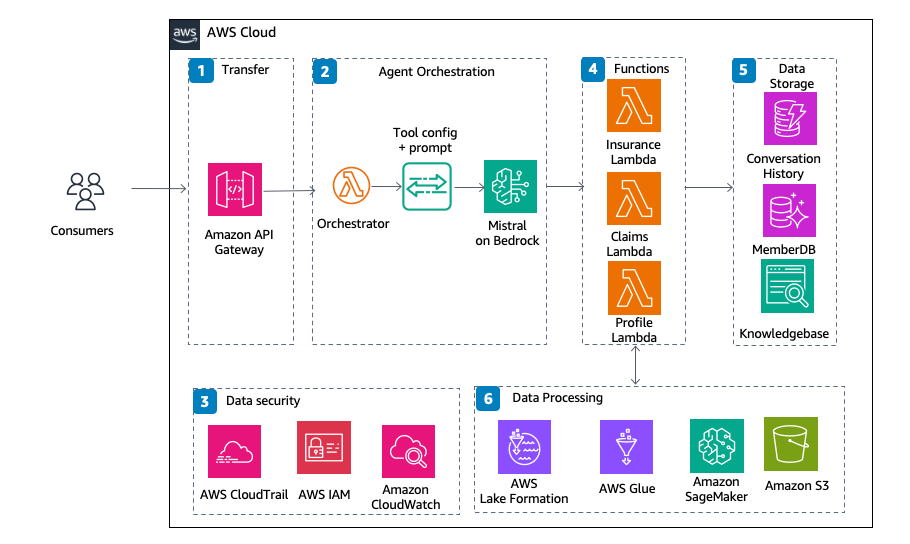

The architecture for the Healthcare Agent is shown in the preceding figure and is as follows:

- Clients interact with the system through Amazon API Gateway.

- An AWS Lambda orchestrator, together with tool configuration and prompts, handles orchestration and invokes the Mistral model on Amazon Bedrock.

- Agent function calling allows agents to invoke Lambda functions to retrieve data, perform computations, or use external services.

- Functions such as insurance, claims, and pre-filled Lambda functions handle specific tasks.

- Data is stored in a conversation history, a member database (MemberDB) stores member information, and the knowledge base holds static documents used by the agent.

- AWS CloudTrail, AWS Identity and Access Management (IAM), and Amazon CloudWatch handle data security through auditing, access control, and monitoring.

- AWS Glue, Amazon SageMaker, and Amazon Simple Storage Service (Amazon S3) facilitate data processing.
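
On the function side of this architecture, each task-specific Lambda function can be a small dispatcher over a handful of operations. The sketch below assumes a hypothetical event shape (`{"tool": ..., "input": {...}}`) sent by the orchestrator and replaces MemberDB with an in-memory dictionary so it runs locally; the tool names, fields, and sample member are illustrative only.

```python
from typing import Any, Callable, Dict

# Hypothetical stand-in for MemberDB; a real deployment would query a managed
# database instead of this dictionary.
MEMBER_DB: Dict[str, Dict[str, Any]] = {
    "M-1001": {"name": "Jane Doe", "plan": "Gold", "claims": {"C-42": "approved"}},
}


def get_member_plan(member_id: str) -> Dict[str, Any]:
    """Return the member's plan, or an error the model can relay to the user."""
    member = MEMBER_DB.get(member_id)
    return {"plan": member["plan"]} if member else {"error": "member not found"}


def get_claim_status(member_id: str, claim_id: str) -> Dict[str, Any]:
    """Return the status of one claim belonging to the member."""
    status = MEMBER_DB.get(member_id, {}).get("claims", {}).get(claim_id)
    return {"claim_id": claim_id, "status": status} if status else {"error": "claim not found"}


HANDLERS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_member_plan": get_member_plan,
    "get_claim_status": get_claim_status,
}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point invoked by the orchestrator with {"tool": name, "input": {...}}."""
    handler = HANDLERS.get(event.get("tool", ""))
    if handler is None:
        return {"error": f"unsupported tool {event.get('tool')!r}"}
    try:
        return handler(**event.get("input", {}))
    except TypeError as exc:  # wrong or missing arguments proposed by the model
        return {"error": str(exc)}
```

The orchestrator could call this function with boto3’s `lambda_client.invoke(FunctionName=..., Payload=json.dumps(event))` and pass the decoded payload back to the model as the tool result. Returning errors as data rather than raising them lets the model see what went wrong and try again.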

Sample code that uses function calling with the Mistral LLM can be found at mistral-on-aws.

Security and privacy considerations

Data privacy and security are of utmost importance in the healthcare sector because of the sensitive nature of protected health information (PHI) and the potential consequences of data breaches or unauthorized access. Compliance with regulations such as HIPAA and GDPR is crucial for healthcare organizations handling patient data. To maintain robust data protection and regulatory compliance, healthcare organizations can use Amazon Bedrock Guardrails, a comprehensive set of security and privacy controls provided by Amazon Web Services (AWS).

Amazon Bedrock Guardrails offers a multi-layered approach to data protection, including encryption at rest and in transit, access controls, audit logging, ground truth validation, and incident response mechanisms. It also provides advanced security features such as data residency controls, which allow organizations to specify the geographic regions where their data can be stored and processed, maintaining compliance with local data privacy laws.

When using LLM function calling in the healthcare domain, it’s essential to implement robust security measures and follow best practices for handling sensitive patient information. Amazon Bedrock Guardrails can play a crucial role in this regard by helping to provide a secure foundation for deploying and operating healthcare applications and services that use LLM capabilities.
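
With the Converse API, a guardrail is attached per request through the `guardrailConfig` parameter, and Amazon Bedrock signals a blocked input or output with the stop reason `guardrail_intervened`. The sketch below assumes a guardrail has already been created (for example, with sensitive-information filters for PII); the identifier and version passed in are placeholders, and the helper names are hypothetical.

```python
from typing import Any, Dict


def with_guardrail(request: Dict[str, Any], guardrail_id: str, guardrail_version: str) -> Dict[str, Any]:
    """Return a copy of converse() keyword arguments with a Bedrock guardrail attached."""
    guarded = dict(request)  # shallow copy so the caller's request is left unchanged
    guarded["guardrailConfig"] = {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
        "trace": "enabled",  # include guardrail assessment details in the response
    }
    return guarded


def guardrail_blocked(response: Dict[str, Any]) -> bool:
    """True when Bedrock reports that the guardrail intervened on the input or output."""
    return response.get("stopReason") == "guardrail_intervened"


# Usage (requires AWS credentials, model access, and an existing guardrail):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   request = {"modelId": "<model-id>",
#              "messages": [{"role": "user", "content": [{"text": "..."}]}]}
#   response = client.converse(**with_guardrail(request, "<guardrail-id>", "1"))
#   if guardrail_blocked(response):
#       ...  # return a safe fallback message instead of the model output
```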

Some key security measures enabled by Amazon Bedrock Guardrails are:

- Data encryption: Patient data processed by LLM functions can be encrypted at rest and in transit, making sure that sensitive information remains secure even in the event of unauthorized access or data breaches.

- Access controls: Amazon Bedrock Guardrails enables granular access controls, allowing healthcare organizations to define and enforce strict permissions for who can access, modify, or process patient data through LLM functions.

- Secure data storage: Patient data can be stored in secure, encrypted storage services such as Amazon S3 or Amazon Elastic File System (Amazon EFS), making sure that sensitive information remains protected even when at rest.

- Anonymization and pseudonymization: Healthcare organizations can use Amazon Bedrock Guardrails to implement data anonymization and pseudonymization techniques, making sure that patient data used for training or testing LLMs doesn’t contain personally identifiable information (PII).

- Audit logging and monitoring: Comprehensive audit logging and monitoring capabilities provided by Amazon Bedrock Guardrails enable healthcare organizations to track and monitor all access to and use of patient data through LLM functions, enabling timely detection of and response to potential security incidents.

- Regular security audits and assessments: Amazon Bedrock Guardrails facilitates regular security audits and assessments, making sure that the healthcare organization’s data protection measures remain up to date and effective in the face of evolving security threats and regulatory requirements.
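
As a concrete illustration of pseudonymization, the sketch below replaces a direct identifier with a keyed HMAC-SHA256 token and drops other identifying fields before a record reaches the model or the logs. This is a generic technique built on the Python standard library, not an Amazon Bedrock Guardrails API; the field names are hypothetical, and in practice the key would be kept in a secrets store. Pseudonymized data can still be re-identifiable when combined with other fields, so it complements the other controls listed above rather than replacing them.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable


def pseudonymize(value: str, key: bytes) -> str:
    """Map an identifier to a stable token with HMAC-SHA256.

    The same value and key always yield the same token, so records can still be
    joined, but the token cannot be reversed; keep a separate, access-controlled
    lookup table if tokens must be mapped back to members.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "anon-" + digest[:16]


def prepare_record_for_llm(
    record: Dict[str, Any],
    key: bytes,
    drop: Iterable[str] = ("name", "ssn", "address"),  # hypothetical field names
    pseudo: Iterable[str] = ("member_id",),
) -> Dict[str, Any]:
    """Return a copy of `record` with direct identifiers removed or pseudonymized."""
    drop_set, pseudo_set = set(drop), set(pseudo)
    cleaned: Dict[str, Any] = {}
    for field, value in record.items():
        if field in drop_set:
            continue  # remove direct identifiers entirely
        cleaned[field] = pseudonymize(str(value), key) if field in pseudo_set else value
    return cleaned
```

Using a keyed HMAC rather than a plain hash prevents anyone without the key from rebuilding tokens by hashing guessed member IDs.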

By using Amazon Bedrock Guardrails, healthcare organizations can confidently deploy LLM function calling in their applications and services, maintaining robust data security, privacy protection, and regulatory compliance while enabling the transformative benefits of AI-powered healthcare assistants.

Case studies and real-world examples

3M Health Information Systems is collaborating with AWS to accelerate AI innovation in clinical documentation by using AWS machine learning (ML) services, compute power, and LLM capabilities. This collaboration aims to enhance 3M’s natural language processing (NLP) and ambient clinical voice technologies, enabling intelligent healthcare agents to capture and document patient encounters more efficiently and accurately. These agents, powered by LLMs, can understand and process natural language inputs from healthcare providers, such as spoken notes or queries, and use LLM function calling to access and integrate relevant medical data from EHRs, knowledge bases, and other data sources. By combining 3M’s domain expertise with AWS ML and LLM capabilities, the companies can improve clinical documentation workflows, reduce administrative burdens for healthcare providers, and ultimately enhance patient care through more accurate and comprehensive documentation.

GE Healthcare developed Edison, a secure intelligence solution running on AWS, to ingest and analyze data from medical devices and hospital information systems. This solution uses AWS analytics, ML, and Internet of Things (IoT) services to generate insights and analytics that can be delivered through intelligent healthcare agents powered by LLMs. These agents, equipped with LLM function calling capabilities, can seamlessly access and integrate the insights and analytics generated by Edison, enabling them to assist healthcare providers in improving operational efficiency, enhancing patient outcomes, and supporting the development of new smart medical devices. By using LLM function calling to retrieve and process relevant data from Edison, the agents can provide healthcare providers with data-driven recommendations and personalized support, ultimately enabling better patient care and more effective healthcare delivery.

Future trends and developments

Future developments in LLM function calling for healthcare might include more advanced natural language processing capabilities, such as improved context understanding, multi-turn conversational abilities, and better handling of ambiguity and nuance in medical language. Additionally, the integration of LLMs with other AI technologies, such as computer vision and speech recognition, could enable multimodal interactions and analysis of various medical data formats.

Emerging technologies such as multimodal models, which can process and generate text, images, and other data formats simultaneously, could enhance LLM function calling in healthcare by enabling more comprehensive analysis and visualization of medical data. Personalized language models, trained on individual patient data, could provide even more tailored and accurate responses. Federated learning techniques, which allow model training on decentralized data while preserving privacy, could address data-sharing challenges in healthcare.
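
To make the federated learning idea concrete: in federated averaging (FedAvg), each participating organization trains on its own data and shares only model parameters, and a coordinator combines them weighted by each participant’s share of the training examples, w = Σ_k (n_k / n) · w_k. The sketch below shows only that aggregation step on plain lists of floats; it is a simplified illustration, not a production federated learning framework.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]
) -> List[float]:
    """Combine per-participant parameter vectors, weighting each by its dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per parameter vector")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total dataset size must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all parameter vectors must have the same length")
        share = size / total  # this participant's fraction of all training examples
        for i, value in enumerate(weights):
            averaged[i] += share * value
    return averaged
```

For example, `federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])` weights the second participant three times as heavily as the first and returns `[2.5, 3.5]`. Only these parameter vectors leave each organization; the underlying patient records stay local.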

These trends and emerging technologies could shape the future of healthcare agents by making them more intelligent, adaptive, and personalized. Agents could seamlessly integrate multimodal data, such as medical images and lab reports, into their analysis and recommendations. They could also continuously learn and adapt to individual patients’ preferences and health conditions, providing truly personalized care. Additionally, federated learning could enable collaborative model development while maintaining data privacy, fostering innovation and knowledge sharing across healthcare organizations.

Conclusion

LLM function calling has the potential to revolutionize the healthcare industry by enabling intelligent agents that can understand natural language, access and integrate various data sources, and provide personalized recommendations and insights. By combining the language understanding capabilities of LLMs with external data sources and computational resources, healthcare organizations can enhance decision-making, improve operational efficiency, and deliver superior patient experiences. However, addressing data privacy and security concerns is crucial for the successful adoption of this technology in the healthcare domain.

As the healthcare industry continues to embrace digital transformation, we encourage readers to explore and experiment with LLM function calling in their respective domains. By using this technology, healthcare organizations can unlock new possibilities for improving patient care, advancing medical research, and streamlining operations. With a focus on innovation, collaboration, and responsible implementation, the healthcare industry can harness the power of LLM function calling to create a more efficient, personalized, and data-driven future. AWS can help organizations use LLM function calling and build intelligent healthcare assistants through its AI/ML services, including Amazon Bedrock, Amazon Lex, and Lambda, while maintaining robust security and compliance using Amazon Bedrock Guardrails. To learn more, see AWS for Healthcare & Life Sciences.

About the Authors

Laks Sundararajan is a seasoned Enterprise Architect helping companies reset, transform, and modernize their IT, digital, cloud, data, and insight strategies. A proven leader with significant expertise in Generative AI, Digital, Cloud, and Data/Analytics Transformation, Laks is a Sr. Solutions Architect with Healthcare and Life Sciences (HCLS).

Subha Venugopal is a Senior Solutions Architect at AWS with over 15 years of experience in the technology and healthcare sectors. Specializing in digital transformation, platform modernization, and AI/ML, she leads AWS Healthcare and Life Sciences initiatives. Subha is dedicated to enabling equitable healthcare access and is passionate about mentoring the next generation of professionals.