Unify structured data in Amazon Aurora and unstructured data in Amazon S3 for insights using Amazon Q

In today's data-intensive business landscape, organizations face the challenge of extracting valuable insights from diverse data sources scattered across their infrastructure. Whether it's structured data in databases or unstructured content in document repositories, enterprises often struggle to efficiently query and use this wealth of information.

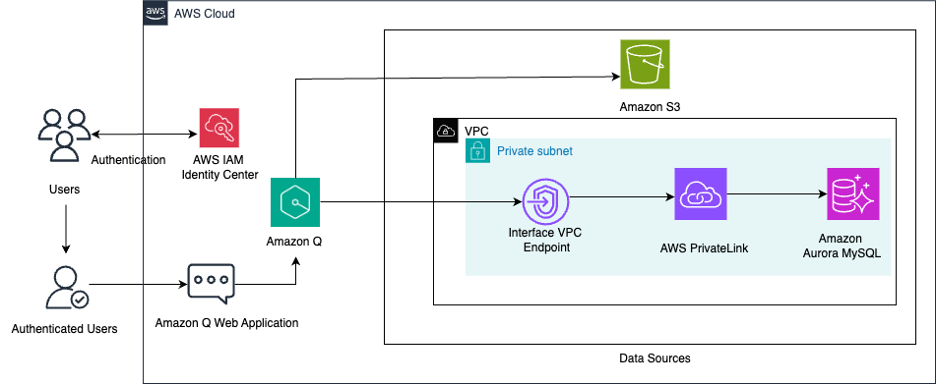

In this post, we explore how you can use Amazon Q Business, the AWS generative AI-powered assistant, to build a centralized knowledge base for your organization, unifying structured and unstructured datasets from different sources to accelerate decision-making and drive productivity. The solution combines data from an Amazon Aurora MySQL-Compatible Edition database and data stored in an Amazon Simple Storage Service (Amazon S3) bucket.

Solution overview

Amazon Q Business is a fully managed, generative AI-powered assistant that helps enterprises unlock the value of their data and knowledge. The key to using the full potential of Amazon Q lies in its ability to seamlessly integrate and query multiple data sources, from structured databases to unstructured content stores. In this solution, we use Amazon Q to build a comprehensive knowledge base that combines sales-related data from an Aurora MySQL database and sales documents stored in an S3 bucket. Aurora MySQL-Compatible is a fully managed, MySQL-compatible, relational database engine that combines the speed and reliability of high-end commercial databases with the simplicity and cost-effectiveness of open-source databases. Amazon S3 is an object storage service that offers industry-leading scalability, data availability, security, and performance.

This custom knowledge base that connects these diverse data sources enables Amazon Q to seamlessly respond to a wide range of sales-related questions using the chat interface. The following diagram illustrates the solution architecture.

Prerequisites

For this walkthrough, you should have the following prerequisites:

Set up your VPC

Setting up a VPC provides a secure, isolated network environment for hosting the data sources that Amazon Q Business will access and index. In this post, we use an Aurora MySQL database in a private subnet, and Amazon Q Business accesses the private DB instance in a secure manner using an interface VPC endpoint.

Complete the following steps:

- Choose an AWS Region that Amazon Q supports (for this post, we use the us-east-1 Region).

- Create a VPC or use an existing VPC with at least two subnets. These subnets must be in two different Availability Zones in the Region where you want to deploy your DB instance.

- Refer to Steps 1 and 2 in Configuring Amazon VPC support for Amazon Q Business connectors to configure your VPC so that you have a private subnet to host an Aurora MySQL database along with a security group for your database.

- Additionally, create a public subnet that will host an EC2 bastion server, which we create in the next steps.

- Create an interface VPC endpoint for Aurora powered by AWS PrivateLink in the VPC you created. For instructions, refer to Access an AWS service using an interface VPC endpoint.

- Specify the private subnet where the Aurora MySQL database resides along with the database security group you created.

Each interface endpoint is represented by one or more elastic network interfaces in your subnets, which Amazon Q Business then uses to connect to the private database.
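The endpoint creation described in the last two steps can also be scripted. The following is a minimal sketch, assuming the AWS SDK for Python (boto3) is installed and credentials are configured. The resource IDs are placeholders, the helper names are ours, and the service name `com.amazonaws.<region>.rds` is our assumption for the Amazon RDS interface endpoint, so confirm it on the VPC console before relying on it.

```python
def build_endpoint_request(vpc_id, private_subnet_ids, db_security_group_id,
                           region="us-east-1"):
    """Build keyword arguments for the EC2 CreateVpcEndpoint API call."""
    if not private_subnet_ids:
        raise ValueError("at least one private subnet is required")
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        # Assumed service name for the Amazon RDS interface endpoint.
        "ServiceName": f"com.amazonaws.{region}.rds",
        "SubnetIds": list(private_subnet_ids),
        "SecurityGroupIds": [db_security_group_id],
    }


def create_endpoint(request, region="us-east-1"):
    """Send the request to AWS and return the new endpoint ID."""
    import boto3  # imported lazily so the builder works without the SDK

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.create_vpc_endpoint(**request)
    return response["VpcEndpoint"]["VpcEndpointId"]


if __name__ == "__main__":
    # Placeholder IDs; replace them with the values from your own VPC.
    request = build_endpoint_request(
        "vpc-EXAMPLE", ["subnet-EXAMPLE"], "sg-EXAMPLE"
    )
    print(request)
```

Keeping the request builder separate from the AWS call makes it possible to review the parameters before anything is created.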

Set up an Aurora MySQL database

Complete the following steps to create an Aurora MySQL database to host the structured sales data:

- On the Amazon RDS console, choose Databases in the navigation pane.

- Choose Create database.

- Select Aurora, then Aurora (MySQL compatible).

- For Templates, choose Production or Dev/test.

- Under Settings, enter a name for your database cluster identifier. For example, q-aurora-mysql-source.

- For Credentials settings, choose Self-managed, give the admin user a password, and keep the rest of the parameters as default.

- Under Connectivity, for Virtual private cloud (VPC), choose the VPC that you created.

- For DB subnet group, create a new subnet group or choose an existing one. Keep the rest of the parameters as default.

- For Publicly accessible, choose No.

- Under VPC security group (firewall), choose Existing and choose the existing security group that you created for the Aurora MySQL DB instance.

- Leave the remaining parameters as default and create the database.

Create an EC2 bastion host to connect to the private Aurora MySQL DB instance

In this post, you connect to the private DB instance from the MySQL Workbench client on your local machine through an EC2 bastion host. Launch the EC2 instance in the public subnet of the VPC you configured. The security group attached to this EC2 bastion host instance should be configured to allow SSH traffic (port 22) from your local machine's IP address. To facilitate the connection between the EC2 bastion host and the Aurora MySQL database, the security group for the Aurora MySQL database should have an inbound rule to allow MySQL traffic (port 3306) from the security group of the EC2 bastion host. Conversely, the security group for the EC2 bastion host should have an outbound rule to allow traffic to the security group of the Aurora MySQL database on port 3306. Refer to Controlling access with security groups for more details.
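The three security group rules described above can be expressed as EC2 `IpPermissions` entries. The following sketch only builds the rule dictionaries. The helper names, the sample IP address (taken from a documentation-only range), and the security group IDs in the comments are illustrative assumptions, not values from this walkthrough.

```python
import ipaddress

SSH_PORT = 22
MYSQL_PORT = 3306


def ssh_rule_from_workstation(local_ip):
    """Inbound rule for the bastion: SSH from a single IPv4 address."""
    address = ipaddress.ip_address(local_ip)  # raises ValueError if invalid
    if address.version != 4:
        raise ValueError("this sketch only handles IPv4 addresses")
    return {
        "IpProtocol": "tcp",
        "FromPort": SSH_PORT,
        "ToPort": SSH_PORT,
        "IpRanges": [{"CidrIp": f"{address}/32",
                      "Description": "SSH from local workstation"}],
    }


def mysql_rule_for_group(peer_group_id):
    """MySQL rule that references another security group instead of a CIDR."""
    return {
        "IpProtocol": "tcp",
        "FromPort": MYSQL_PORT,
        "ToPort": MYSQL_PORT,
        "UserIdGroupPairs": [{"GroupId": peer_group_id}],
    }


if __name__ == "__main__":
    print(ssh_rule_from_workstation("203.0.113.10"))
    print(mysql_rule_for_group("sg-BASTION-EXAMPLE"))
    # With boto3, the rules could then be applied along these lines:
    #   ec2.authorize_security_group_ingress(
    #       GroupId=bastion_sg, IpPermissions=[ssh_rule_from_workstation(ip)])
    #   ec2.authorize_security_group_ingress(
    #       GroupId=db_sg, IpPermissions=[mysql_rule_for_group(bastion_sg)])
    #   ec2.authorize_security_group_egress(
    #       GroupId=bastion_sg, IpPermissions=[mysql_rule_for_group(db_sg)])
```

Referencing the bastion's security group, rather than its IP address, keeps the database rule valid if the bastion instance is replaced.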

Configure IAM Identity Center

An Amazon Q Business application requires you to use IAM Identity Center to manage user access. IAM Identity Center is a single place where you can assign your workforce users, also known as workforce identities, to provide consistent access to multiple AWS accounts and applications. In this post, we use IAM Identity Center as the SAML 2.0-aligned identity provider (IdP). Make sure you have enabled an IAM Identity Center instance, provisioned at least one user, and provided each user with a valid email address. The Amazon Q Business application needs to be in the same Region as the IAM Identity Center instance. For more information on enabling users in IAM Identity Center, see Add users to your Identity Center directory.

Create an S3 bucket

Create an S3 bucket in the us-east-1 Region with the default settings and create a folder with a name of your choice inside the bucket.
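If you prefer to script this step, the following sketch shows one way to do it with a boto3 S3 client passed in by the caller. The function name and the bucket and folder names are hypothetical. Note that Amazon S3 has no real folders; the console represents a folder as a zero-byte object whose key ends with a slash, which is what the sketch creates.

```python
def create_bucket_with_folder(s3_client, bucket_name, folder_name):
    """Create a bucket with default settings plus an empty folder marker."""
    # In us-east-1, CreateBucket is called without a LocationConstraint.
    s3_client.create_bucket(Bucket=bucket_name)
    # Normalize the name so the key always ends with exactly one slash.
    folder_key = folder_name.strip("/") + "/"
    s3_client.put_object(Bucket=bucket_name, Key=folder_key)
    return folder_key


if __name__ == "__main__":
    # Example wiring (requires boto3 and AWS credentials), left commented out
    # so the sketch does not create resources by accident:
    #   import boto3
    #   s3 = boto3.client("s3", region_name="us-east-1")
    #   create_bucket_with_folder(s3, "my-example-sales-bucket", "sales-docs")
    pass
```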

Create and load sample data

In this post, we use two sample datasets: a total sales dataset CSV file and a sales target document in PDF format. The total sales dataset contains information about orders placed by customers located in various geographical locations, through different sales channels. The sales document contains information about sales targets for the year for each of the sales channels. Complete the steps in the following sections to load both datasets.

Aurora MySQL database

In the Amazon Q Business application, you create two indexes for the same Aurora MySQL table: one on the total sales dataset and another on an aggregated view of the total sales data, to cater to the different types of queries. Complete the following steps:

- Securely connect to your private Aurora MySQL database using an SSH tunnel through an EC2 bastion host.

This enables you to manage and interact with your database resources directly from your local MySQL Workbench client.

- Create the database and tables using the following commands on the local MySQL Workbench client:

- Download the sample file csv in your local environment.

- Use the following code to insert sample data in your MySQL client:

If you encounter the error LOAD DATA LOCAL INFILE file request rejected due to restrictions on access when running the statements in MySQL Workbench 8.0, you might need to edit the connection. On the Connection tab, go to the Advanced sub-tab, and in the Others field, add the line OPT_LOCAL_INFILE=1 and start a new query tab after testing the connection.

- Verify the data load by running a select statement:

This should return 7,991 rows.

The following screenshot shows the database table schema and the sample data in the table.
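For the first step in the preceding list, the SSH tunnel through the bastion host can also be opened with the OpenSSH client instead of the MySQL Workbench connection dialog. The sketch below only assembles the command. The host names and key path are placeholders, and `ec2-user` is an assumed login name that depends on the AMI you launched.

```python
import shlex


def build_tunnel_command(bastion_host, db_endpoint, key_path,
                         bastion_user="ec2-user",
                         local_port=3306, db_port=3306):
    """Return the argv list for an SSH local port forward via the bastion."""
    return [
        "ssh",
        "-i", key_path,  # private key for the bastion host
        "-N",            # forward ports only, do not run a remote command
        "-L", f"{local_port}:{db_endpoint}:{db_port}",
        f"{bastion_user}@{bastion_host}",
    ]


if __name__ == "__main__":
    command = build_tunnel_command(
        bastion_host="bastion.example.com",
        db_endpoint="mydb.cluster-EXAMPLE.us-east-1.rds.amazonaws.com",
        key_path="~/.ssh/bastion-key.pem",
    )
    # Print a copy-and-paste version; subprocess.run(command) would start it.
    print(shlex.join(command))
```

While the tunnel is open, a local MySQL client can connect to 127.0.0.1 on the chosen local port as if it were the private database endpoint.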

Amazon S3 bucket

Download the sample file 2020_Sales_Target.pdf in your local environment and upload it to the S3 bucket you created. This sales target document contains information about the sales target for four sales channels and looks like the following screenshot.

Create an Amazon Q application

Complete the following steps to create an Amazon Q application:

- On the Amazon Q console, choose Applications in the navigation pane.

- Choose Create application.

- Provide the following details:

  - In the Application details section, for Application name, enter a name for the application (for example, sales_analyzer).

  - In the Service access section, for Choose a method to authorize Amazon Q, select Create and use a new service role.

  - Leave all other default options and choose Create.

- On the Select retriever page, you configure the retriever. The retriever is an index that will be used by Amazon Q to fetch data in real time.

- For Retrievers, select Use native retriever.

- For Index provisioning, select Starter.

- For Number of units, use the default value of 1. Each unit can support up to 20,000 documents. For a database, each database row is considered a document.

- Choose Next.
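To check that one unit is enough, you can work through the capacity arithmetic from the preceding steps. The following sketch uses only figures stated in this post (20,000 documents per unit, 7,991 table rows, one PDF). The function name is ours, and the additional documents produced by the aggregated view are not counted because their number depends on your data.

```python
import math

DOCUMENTS_PER_UNIT = 20_000  # capacity of one index unit, as stated above


def index_units_needed(document_count, per_unit=DOCUMENTS_PER_UNIT):
    """Return how many index units are needed for the given document count."""
    if document_count < 0:
        raise ValueError("document_count must not be negative")
    # An index always has at least one unit, even when it is empty.
    return max(1, math.ceil(document_count / per_unit))


if __name__ == "__main__":
    row_documents = 7_991  # each row of the sales table counts as a document
    pdf_documents = 1      # the sales target PDF stored in Amazon S3
    print(index_units_needed(row_documents + pdf_documents))  # prints 1
```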

Configure Amazon Q to connect to Aurora MySQL-Compatible

Complete the following steps to configure Amazon Q to connect to Aurora MySQL-Compatible:

- On the Connect data sources page, under Data sources, choose the Aurora (MySQL) data source.

- Choose Next.

- In the Name and description section, configure the following parameters:

  - For Data source name, enter a name (for example, aurora_mysql_sales).

  - For Description, enter a description.

- In the Source section, configure the following parameters:

  - For Host, enter the database endpoint (for example, <databasename>.<ID>.<region>.rds.amazonaws.com). You can obtain the endpoint on the Amazon RDS console for the instance on the Connectivity & security tab.

  - For Port, enter the Amazon RDS port for MySQL: 3306.

  - For Instance, enter the database name (for example, sales).

  - Select Enable SSL Certificate location.

- For Authentication, choose Create a new secret with a name of your choice.

- Provide the user name and password for your MySQL database to create the secret.

- In the Configure VPC and security group section, choose the VPC and subnets where your Aurora MySQL database is located, and choose the default VPC security group.

- For IAM role, choose Create a new service role.

- For Sync scope, under SQL query, enter the following query:

This select statement returns a primary key column, a document title column, and a text column that serves as your document body for Amazon Q to answer questions. Make sure you don't put ; at the end of the query.

- For Primary key column, enter order_number.

- For Title column, enter sales_channel.

- For Body column, enter sales_details.

- Under Sync run schedule, for Frequency, choose Run on demand.

- Keep all other parameters as default and choose Add data source.

This process may take a few minutes to complete. After the aurora_mysql_sales data source is added, you will be redirected to the Connect data sources page.
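To make the shape of the sync query concrete, the following sketch runs a query of that kind against an in-memory SQLite database. It is not the query from this walkthrough: the `sales_orders` table, its columns other than `order_number` and `sales_channel`, and the sample rows are all hypothetical. SQLite concatenates strings with `||`, whereas in Aurora MySQL you would typically use `CONCAT()`.

```python
import sqlite3

# One row per order: a primary key, a title, and a sentence-like body.
# There is deliberately no trailing semicolon, as the connector requires.
SYNC_QUERY = """
SELECT order_number,
       sales_channel,
       'Order ' || order_number || ' was placed through the '
           || sales_channel || ' channel on ' || order_date
           || ' for a total of ' || total_sales AS sales_details
FROM sales_orders
"""


def run_demo():
    """Load two hypothetical orders and return the documents to be indexed."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sales_orders ("
        "order_number TEXT PRIMARY KEY, sales_channel TEXT, "
        "order_date TEXT, total_sales INTEGER)"
    )
    conn.executemany(
        "INSERT INTO sales_orders VALUES (?, ?, ?, ?)",
        [("SO-0001", "In-Store", "2020-01-15", 1200),
         ("SO-0002", "Online", "2020-02-03", 830)],
    )
    rows = conn.execute(SYNC_QUERY).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for primary_key, title, body in run_demo():
        print(primary_key, "|", title, "|", body)
```

Turning each row into a readable sentence gives the assistant natural-language text to retrieve, rather than bare column values.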

- Repeat the steps to add another Aurora MySQL data source, called aggregated_sales, for the same database but with the following details in the Sync scope section. This data source will be used by Amazon Q for answering questions on aggregated sales.

  - Use the following SQL query:

  - For Primary key column, enter scoy_id.

  - For Title column, enter sales_channel.

  - For Body column, enter sales_aggregates.

After adding the aggregated_sales data source, you will be redirected to the Connect data sources page again.
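The aggregated data source follows the same three-column pattern, but each document summarizes a group of rows. The sketch below illustrates the idea with SQLite. The table, the `order_year` column, the sample rows, and the way `scoy_id` is built (sales channel plus year) are our assumptions, because the post does not define them. As before, MySQL would use `CONCAT()` rather than `||`.

```python
import sqlite3

# One document per sales channel and year, with a synthetic primary key.
# The query has no trailing semicolon.
AGGREGATE_QUERY = """
SELECT sales_channel || '_' || order_year AS scoy_id,
       sales_channel,
       'In ' || order_year || ', the ' || sales_channel
           || ' channel recorded ' || COUNT(*) || ' orders totaling '
           || SUM(total_sales) AS sales_aggregates
FROM sales_orders
GROUP BY sales_channel, order_year
"""


def run_aggregate_demo():
    """Load three hypothetical orders and return the aggregated documents."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sales_orders ("
        "order_number TEXT PRIMARY KEY, sales_channel TEXT, "
        "order_year INTEGER, total_sales INTEGER)"
    )
    conn.executemany(
        "INSERT INTO sales_orders VALUES (?, ?, ?, ?)",
        [("SO-0001", "In-Store", 2020, 100),
         ("SO-0002", "In-Store", 2020, 250),
         ("SO-0003", "Online", 2020, 75)],
    )
    rows = conn.execute(AGGREGATE_QUERY).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for scoy_id, title, body in run_aggregate_demo():
        print(scoy_id, "|", title, "|", body)
```

Pre-aggregating in SQL matters because the assistant retrieves a handful of documents per question and cannot reliably sum thousands of row-level documents on its own.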

Configure Amazon Q to connect to Amazon S3

Complete the following steps to configure Amazon Q to connect to Amazon S3:

- On the Connect data sources page, under Data sources, choose Amazon S3.

- Under Name and description, enter a data source name (for example, s3_sales_targets) and a description.

- Under Configure VPC and security group settings, choose No VPC.

- For IAM role, choose Create a new service role.

- Under Sync scope, for the data source location, enter the S3 bucket name containing the sales target PDF document.

- Leave all other parameters as default.

- Under Sync run schedule, for Frequency, choose Run on demand.

- Choose Add data source.

- On the Connect data sources page, choose Next.

- In the Update groups and users section, choose Add users and groups.

- Choose the user as entered in IAM Identity Center and choose Assign.

- After you add the user, you can choose the Amazon Q Business subscription to assign to the user. For this post, we choose Q Business Lite.

- Under Web experience service access, select Create and use a new service role and enter a service role name.

- Choose Create application.

After a few minutes, the application will be created and you will be taken to the Applications page on the Amazon Q Business console.

Sync the data sources

Choose the name of your application and navigate to the Data sources section. For each of the three data sources, select the data source and choose Sync now. It will take several minutes to complete. After the sources have synced, you should see the Last sync status show as Completed.
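The same sync can be started programmatically. The following sketch assumes a boto3 `qbusiness` client is passed in and uses its `list_data_sources` and `start_data_source_sync_job` operations. The function name is ours, and the application and index IDs are placeholders you would copy from the console.

```python
def start_sync_for_all_sources(qbusiness, application_id, index_id):
    """Start a sync job for every data source attached to the index.

    Returns a dict that maps each data source display name to the
    execution ID of the sync job that was started for it.
    """
    executions = {}
    next_token = None
    while True:
        kwargs = {"applicationId": application_id, "indexId": index_id}
        if next_token:
            kwargs["nextToken"] = next_token
        page = qbusiness.list_data_sources(**kwargs)
        for source in page.get("dataSources", []):
            job = qbusiness.start_data_source_sync_job(
                applicationId=application_id,
                indexId=index_id,
                dataSourceId=source["dataSourceId"],
            )
            executions[source["displayName"]] = job["executionId"]
        next_token = page.get("nextToken")
        if not next_token:
            return executions


if __name__ == "__main__":
    # Example wiring (requires boto3 and AWS credentials), left commented out:
    #   import boto3
    #   client = boto3.client("qbusiness", region_name="us-east-1")
    #   print(start_sync_for_all_sources(client, "APPLICATION_ID", "INDEX_ID"))
    pass
```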

Customize and interact with the Amazon Q application

At this point, you have created an Amazon Q application, synced the data sources, and deployed the web experience. You can customize your web experience to make it more intuitive to your application users.

- On the application details page, choose Customize web experience.

- For this post, we have customized the Title, Subtitle, and Welcome message fields for our assistant.

- After you have completed your customizations for the web experience, go back to the application details page and choose the web experience URL.

- Sign in with the IAM Identity Center user name and password you created earlier to start the conversation with the assistant.

Now you can test the application by asking different questions, as shown in the following screenshot. You can observe in the following question that the channel names were fetched from the Amazon S3 sales target PDF.

The following screenshots show more example interactions.

The answer in the preceding example was derived from the two sources: the S3 bucket and the Aurora database. You can verify the output by cross-referencing the PDF, which has a target of $12 million for the in-store sales channel in 2020. The following SQL shows the actual sales achieved in 2020 for the same channel:

As seen from the sales target PDF data, the 2020 sales target for the distributor sales channel was $7 million.

The following SQL in the Aurora MySQL database shows the actual sales achieved in 2020 for the same channel:

The following screenshots show additional questions.

You can verify the preceding answers with the following SQL:

Clean up

To avoid incurring future charges, clean up any resources you created as part of this solution, including the Amazon Q Business application:

- On the Amazon Q Business console, choose Applications in the navigation pane, select the application you created, and on the Actions menu, choose Delete.

- Delete the AWS Identity and Access Management (IAM) roles created for the application and data retriever. You can identify the IAM roles used by the Amazon Q Business application and data retriever by inspecting the associated configuration using the AWS console or AWS Command Line Interface (AWS CLI).

- Delete the IAM Identity Center instance you created for this walkthrough.

- Empty the bucket you created after which delete the bucket.

- Delete the Aurora MySQL instance and Aurora cluster.

- Shut down the EC2 bastion host instance.

- Delete the VPC and related components (the NAT gateway and interface VPC endpoint).

Conclusion

In this post, we demonstrated how organizations can use Amazon Q to build a unified knowledge base that integrates structured data from an Aurora MySQL database and unstructured data from an S3 bucket. By connecting these disparate data sources, Amazon Q enables you to seamlessly query information from two data sources and gain valuable insights that drive better decision-making.

We encourage you to try this solution and share your experience in the comments. Additionally, you can explore the many other data sources that Amazon Q Business can seamlessly integrate with, empowering you to build robust and insightful applications.

About the Authors

Monjumi Sarma is a Technical Account Manager at Amazon Web Services. She helps customers architect modern, scalable, and cost-effective solutions on AWS, which gives them an accelerated path towards modernization initiatives. She has experience across analytics, big data, ETL, cloud operations, and cloud infrastructure management.


Akchhaya Sharma is a Sr. Data Engineer at Amazon Ads. He builds and manages data-driven solutions for recommendation systems, working together with a diverse and talented team of scientists, engineers, and product managers. He has experience across analytics, big data, and ETL.
