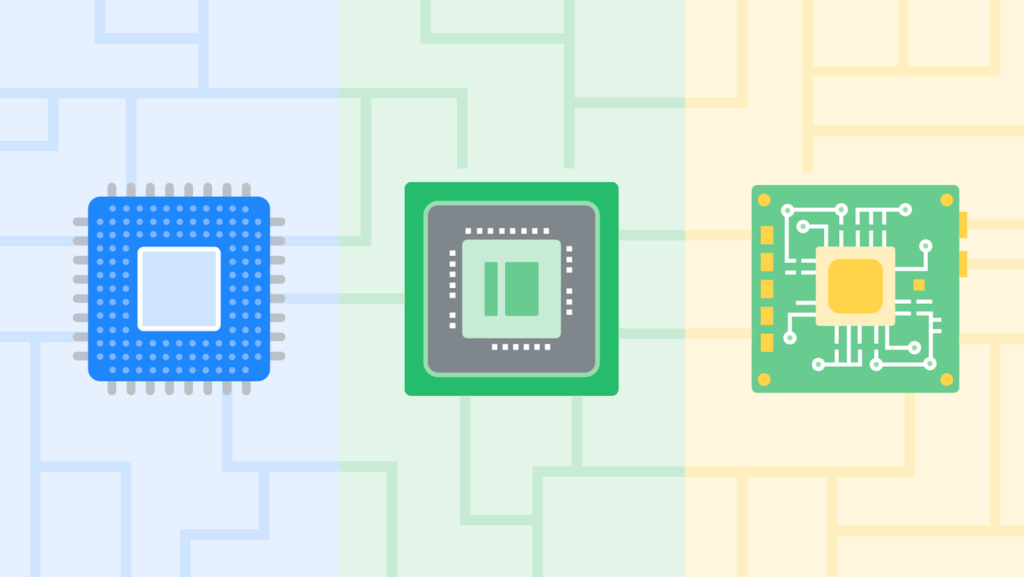

What’s the difference between CPUs, GPUs and TPUs?

Back at I/O in May, we announced Trillium, the sixth generation of our very own custom-designed chip known as the Tensor Processing Unit, or TPU. Today, we announced that it’s now available to Google Cloud customers in preview. TPUs are what power the AI that makes your Google devices and apps as helpful as possible, and Trillium is the most powerful and sustainable TPU yet.

But what exactly is a TPU? And what makes Trillium “custom”? To really understand what makes Trillium so special, it’s important to learn not only about TPUs, but also about other types of compute processors (CPUs and GPUs) and what makes them different. As a product manager who works on AI infrastructure at Google Cloud, Chelsie Czop knows exactly how to break it all down. “I work across multiple teams to make sure our platforms are as efficient as possible for our customers who are building AI products,” she says. And what makes a lot of Google’s AI products possible, Chelsie says, are Google’s TPUs.

Let’s start with the basics! What are CPUs, GPUs and TPUs?

These are all chips that work as processors for compute tasks. Think of your brain as a computer that can do things like reading a book or doing a math problem. Each of those activities is similar to a compute task. So when you use your phone to take a picture, send a text or open an application, your phone’s brain, or processor, is doing those compute tasks.

What do the different acronyms stand for?

Even though CPUs, GPUs and TPUs are all processors, they’re progressively more specialized. CPU stands for Central Processing Unit. These are general-purpose chips that can handle a diverse range of tasks. Similar to your brain, some tasks may take longer if the CPU isn’t specialized in that area.

Then there’s the GPU, or Graphics Processing Unit. GPUs have become the workhorse of accelerated compute tasks, from graphic rendering to AI workloads. They’re what’s known as a type of ASIC, or application-specific integrated circuit. Integrated circuits are generally made using silicon, so you might hear people refer to chips as “silicon”; they’re the same thing (and yes, that’s where the term “Silicon Valley” comes from!). In short, ASICs are designed for a single, specific purpose.

The TPU, or Tensor Processing Unit, is Google’s own ASIC. We designed TPUs from the ground up to run AI-based compute tasks, making them even more specialized than CPUs and GPUs. TPUs have been at the heart of some of Google’s most popular AI services, including Search, YouTube and DeepMind’s large language models.

Got it, so all of these chips are what make our devices work. Where would I find CPUs, GPUs and TPUs?

CPUs and GPUs are inside very familiar items you probably use every day: You’ll find CPUs in nearly every smartphone, and they’re in personal computing devices like laptops, too. A GPU you’ll find in high-end gaming systems or some desktop devices. TPUs you’ll only find in Google data centers: warehouse-style buildings full of racks and racks of TPUs, humming along 24/7 to keep Google’s, and our Cloud customers’, AI services running worldwide.

What made Google start thinking about creating TPUs?

CPUs were invented in the late 1950s, and GPUs came around in the late ’90s. And then here at Google, we started thinking about TPUs about 10 years ago. Our speech recognition services were getting much better in quality, and we realized that if every user started “talking” to Google for just three minutes a day, we would need to double the number of computers in our data centers. We knew we needed something that was a lot more efficient than the off-the-shelf hardware that was available at the time, and we knew we were going to need a lot more processing power out of each chip. So, we built our own!

And that “T” stands for Tensor, right? Why?

Yep, a “tensor” is the generic name for the data structures used for machine learning. Basically, there’s a bunch of math happening under the hood to make AI tasks possible. With our latest TPU, Trillium, we’ve increased the number of calculations that can happen: Trillium has 4.7x peak compute performance per chip compared to the prior generation, TPU v5e.
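To make the idea of a tensor concrete, here is a minimal sketch in plain Python. It treats tensors as nested lists and shows the kind of multiply-and-add arithmetic that dominates machine learning workloads. The helper names `shape` and `matmul` are hypothetical and chosen for illustration; real frameworks use optimized array types rather than lists, and this is not how a TPU is programmed.

```python
# Illustrative sketch: tensors as nested Python lists.
# `shape` and `matmul` are hypothetical helpers, not part of any TPU API.

def shape(tensor):
    """Return the dimensions of a rectangular nested list, e.g. (3, 2)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix with plain loops."""
    m, k, n = len(a), len(b), len(b[0])
    assert len(a[0]) == k, "inner dimensions must match"
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]

scalar = 3.0                                      # rank 0: a single number
vector = [1.0, 2.0, 3.0]                          # rank 1: a list of numbers
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]     # rank 2: a grid of numbers

inputs = [[1.0, 0.0, 2.0]]                        # shape (1, 3)
outputs = matmul(inputs, matrix)                  # shape (1, 2)

print(shape(scalar), shape(vector), shape(matrix))
print(outputs)
```

A machine learning model repeats this kind of matrix multiplication an enormous number of times on much larger tensors, which is the work an AI-specialized chip is built to speed up.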

What does that mean, exactly?

It basically means that Trillium is able to work on all the calculations required to run that complex math 4.7 times faster than the last version. Not only does Trillium work faster, it can also handle larger, more complicated workloads.
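As a rough back-of-the-envelope sketch, the 4.7x figure can be turned into a best-case time estimate. This assumes a job limited purely by peak compute, which is an idealization: real workloads also depend on memory, networking and software, so actual speedups will vary. The function name and the 47-minute example are hypothetical.

```python
# Best-case estimate only: assumes the job is limited purely by peak compute.
PEAK_COMPUTE_RATIO = 4.7  # Trillium vs. TPU v5e, per chip (figure from the article)

def best_case_time(previous_time, ratio=PEAK_COMPUTE_RATIO):
    """Time the same job would take if it sped up by the full peak ratio."""
    return previous_time / ratio

previous_minutes = 47.0  # hypothetical job length on the prior generation
estimate = best_case_time(previous_minutes)
print(f"{previous_minutes:.0f} min before, about {estimate:.0f} min in the best case")
```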

Is there anything else that makes it an improvement over our last-gen TPU?

Another thing that’s better about Trillium is that it’s our most sustainable TPU yet. In fact, it’s 67% more energy-efficient than our last TPU. As the demand for AI continues to soar, the industry needs to scale infrastructure sustainably. Trillium essentially uses less power to do the same work.
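One way to read the 67% figure is sketched below. It assumes "67% more energy-efficient" means 1.67 times as much work per unit of energy; the article does not define the metric, so treat this interpretation and the function name as assumptions.

```python
# Assumption: "67% more energy-efficient" = 1.67x the work per unit of energy.
EFFICIENCY_GAIN = 0.67  # figure from the article

def relative_energy(gain=EFFICIENCY_GAIN):
    """Fraction of the old energy needed to do the same amount of work."""
    return 1.0 / (1.0 + gain)

fraction = relative_energy()
savings = 1.0 - fraction
print(f"Same work at about {fraction:.0%} of the energy (about {savings:.0%} less)")
```

Under that reading, the same job needs roughly 60% of the energy it did before, so the saving is about 40% rather than 67%.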

Now that customers are starting to use it, what kind of impact do you think Trillium will have?

We’re already seeing some pretty incredible advancements powered by Trillium! We have customers using it in technologies that analyze RNA for various diseases, turn written text into videos at incredible speeds and more. And that’s just from our very initial round of users. Now that Trillium’s in preview, we can’t wait to see what people can do with it.