MaskGCT: A New Open State-of-the-Art Text-to-Speech Model

In recent years, text-to-speech (TTS) technology has made significant strides, yet numerous challenges remain. Autoregressive (AR) systems, while offering diverse prosody, tend to suffer from robustness issues and slow inference. Non-autoregressive (NAR) models, on the other hand, require explicit alignment between text and speech during training, which can lead to unnatural results. The new Masked Generative Codec Transformer (MaskGCT) addresses these issues by eliminating the need for explicit text-speech alignment and phone-level duration prediction. This approach aims to simplify the pipeline while maintaining or even improving the quality and expressiveness of generated speech.

MaskGCT is a new open-source, state-of-the-art TTS model available on Hugging Face. It brings several notable features to the table, such as zero-shot voice cloning and emotional TTS, and it can synthesize speech in both English and Chinese. The model was trained on an extensive dataset of 100,000 hours of in-the-wild speech data, enabling long-form and variable-speed synthesis. Notably, MaskGCT features a fully non-autoregressive architecture. This means the model does not generate tokens one at a time from left to right; it predicts many tokens in parallel, which leads to faster inference and a simpler synthesis process. With a two-stage approach, MaskGCT first predicts semantic tokens from text and subsequently generates acoustic tokens conditioned on those semantic tokens.
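The two-stage flow can be pictured with a small sketch. This is not MaskGCT's code: the function names, the 50 Hz token rate, the vocabulary size, and the number of codebooks are all hypothetical placeholders, and both stages return dummy tokens. The only point it illustrates is that the total output length is chosen up front (which is one way speed can be controlled) rather than being predicted per phone.

```python
from typing import List, Tuple

FRAME_RATE_HZ = 50   # assumed token rate, for illustration only
VOCAB_SIZE = 1024    # assumed codebook size, for illustration only


def target_length(duration_s: float, speed: float = 1.0) -> int:
    """Number of token frames for a requested duration and speaking speed."""
    if duration_s <= 0 or speed <= 0:
        raise ValueError("duration_s and speed must be positive")
    return max(1, round(duration_s / speed * FRAME_RATE_HZ))


def text_to_semantic(text: str, n_frames: int) -> List[int]:
    """Stage 1 placeholder: returns n_frames dummy semantic token ids."""
    return [(len(text) + i) % VOCAB_SIZE for i in range(n_frames)]


def semantic_to_acoustic(semantic: List[int], n_codebooks: int = 4) -> List[List[int]]:
    """Stage 2 placeholder: one list of dummy acoustic token ids per frame."""
    return [[(tok + k) % VOCAB_SIZE for k in range(n_codebooks)] for tok in semantic]


def synthesize(text: str, duration_s: float, speed: float = 1.0) -> Tuple[List[int], List[List[int]]]:
    """Run both placeholder stages for a fixed, caller-chosen length."""
    n_frames = target_length(duration_s, speed)
    semantic = text_to_semantic(text, n_frames)
    acoustic = semantic_to_acoustic(semantic)
    return semantic, acoustic
```

Under these assumptions, `synthesize("hello", 2.0, speed=2.0)` produces half as many frames as the same call with `speed=1.0`, because the caller sets the length and no per-phone duration is involved.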

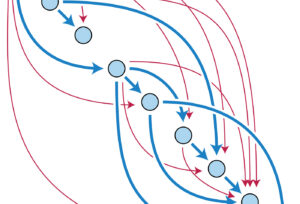

MaskGCT uses a two-stage framework that follows a “mask-and-predict” paradigm. In the first stage, the model predicts semantic tokens based on the input text. The semantic tokens used as targets are derived from a speech self-supervised learning (SSL) model. In the second stage, the model predicts acoustic tokens conditioned on the previously generated semantic tokens. This architecture allows MaskGCT to bypass text-speech alignment and phoneme-level duration prediction entirely, distinguishing it from earlier NAR models. Moreover, it employs a Vector Quantized Variational Autoencoder (VQ-VAE) to quantize the speech representations, a choice intended to minimize information loss. The architecture is highly flexible, allowing the generation of speech with controllable speed and duration, and it supports applications like cross-lingual dubbing, voice conversion, and emotion control, all in a zero-shot setting.
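To make the “mask-and-predict” idea concrete, here is a minimal sketch of iterative parallel decoding. It assumes a confidence-based unmasking rule with a cosine schedule, as popularized by MaskGIT-style decoders; the article does not specify MaskGCT's exact schedule, and `toy_predict` is a hypothetical stand-in that returns random tokens and confidences instead of calling a real model.

```python
import math
import random

MASK = -1  # sentinel id for a position that is still masked


def toy_predict(tokens, vocab_size, rng):
    """Hypothetical model stand-in: a (token, confidence) guess for every position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def mask_and_predict(length, vocab_size=16, steps=4, seed=0):
    """Start fully masked, then commit the most confident guesses step by step."""
    rng = random.Random(seed)
    tokens = [MASK] * length
    for step in range(1, steps + 1):
        preds = toy_predict(tokens, vocab_size, rng)
        # Cosine schedule: how many positions should still be masked after this step.
        if step == steps:
            n_masked_after = 0
        else:
            n_masked_after = math.floor(length * math.cos(math.pi / 2 * step / steps))
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        n_to_fill = max(0, len(masked) - n_masked_after)
        # Commit the highest-confidence masked positions; the rest stay masked.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:n_to_fill]:
            tokens[i] = preds[i][0]
    return tokens
```

The number of model calls equals `steps`, independent of the sequence length, which is the source of the speed advantage over token-by-token autoregressive decoding.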

MaskGCT represents a significant step forward in TTS technology thanks to its simplified pipeline, non-autoregressive approach, and robust performance across multiple languages and emotional contexts. Its training on 100,000 hours of speech data, covering diverse speakers and contexts, gives it considerable versatility and naturalness in generated speech. Experimental results reported by the authors indicate that MaskGCT achieves human-level naturalness and intelligibility, outperforming other state-of-the-art TTS models on key metrics. For example, MaskGCT achieved better scores in speaker similarity (SIM-O) and word error rate (WER) than other TTS models such as VALL-E, VoiceBox, and NaturalSpeech 3. These metrics, alongside its high-quality prosody and flexibility, make MaskGCT a strong tool for applications that require both precision and expressiveness in speech synthesis.
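For readers unfamiliar with the two metrics, the sketch below shows how they are commonly computed. WER is the word-level edit distance between a reference transcript and a recognizer's transcript of the synthesized audio, divided by the number of reference words (lower is better). SIM-O is typically reported as the cosine similarity between speaker embeddings of the generated speech and the original prompt (higher is better). The embedding vectors here are plain lists supplied by the caller; which speaker-verification model produces them is outside the scope of this sketch.

```python
import math
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    prev = list(range(len(hyp) + 1))  # distances for the empty reference prefix
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1] / len(ref)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)
```

For instance, comparing the reference "the cat sat on the mat" with the hypothesis "the cat sat on mat" gives one deletion over six reference words.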

MaskGCT pushes the boundaries of what is possible in text-to-speech technology. By removing the dependencies on explicit text-speech alignment and duration prediction and instead using a fully non-autoregressive, masked generative approach, MaskGCT achieves a high level of naturalness, quality, and efficiency. Its ability to handle zero-shot voice cloning, emotional context, and bilingual synthesis makes it well suited to a range of applications, including AI assistants, dubbing, and accessibility tools. With its open availability on platforms like Hugging Face, MaskGCT is not only advancing the field of TTS but also making cutting-edge technology more accessible to developers and researchers worldwide.

Check out the Paper and the Model on Hugging Face. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.