Enhance LLM application robustness with Amazon Bedrock Guardrails and Amazon Bedrock Agents

Agentic workflows are a fresh perspective on building dynamic and complex business use case-based workflows, with large language models (LLMs) serving as their reasoning engine. These agentic workflows decompose natural language query-based tasks into multiple actionable steps, with iterative feedback loops and self-reflection, to produce the final result using tools and APIs. This naturally warrants the need to measure and evaluate the robustness of these workflows, particularly against inputs that are adversarial or harmful in nature.

Amazon Bedrock Agents can break down natural language conversations into a sequence of tasks and API calls using ReAct and chain-of-thought (CoT) prompting techniques with LLMs. This offers tremendous use case flexibility, enables dynamic workflows, and reduces development cost. Amazon Bedrock Agents is instrumental in customizing and tailoring apps to help meet specific project requirements while protecting private data and securing your applications. These agents work with AWS managed infrastructure capabilities and Amazon Bedrock, reducing infrastructure management overhead.

Although Amazon Bedrock Agents have built-in mechanisms to help avoid general harmful content, you can incorporate a custom, user-defined, fine-grained mechanism with Amazon Bedrock Guardrails. Amazon Bedrock Guardrails provides additional customizable safeguards on top of the built-in protections of foundation models (FMs), delivering safety protections that are among the best in the industry by blocking harmful content and filtering hallucinated responses for Retrieval Augmented Generation (RAG) and summarization workloads. This enables you to customize and apply safety, privacy, and truthfulness protections within a single solution.

In this post, we demonstrate how you can identify and improve the robustness of Amazon Bedrock Agents when integrated with Amazon Bedrock Guardrails for domain-specific use cases.

Solution overview

In this post, we explore a sample use case for an online retail chatbot. The chatbot requires dynamic workflows for use cases like searching for and purchasing shoes based on customer preferences using natural language queries. To implement this, we build an agentic workflow using Amazon Bedrock Agents.

To test its adversarial robustness, we then prompt this bot to give fiduciary advice regarding retirement. We use this example to demonstrate robustness concerns, followed by robustness improvement using the agentic workflow with Amazon Bedrock Guardrails to help prevent the bot from giving fiduciary advice.

In this implementation, the preprocessing stage of the agent (the first stage of the agentic workflow, before the LLM is invoked) is turned off by default. Even with preprocessing turned on, there is usually a need for more fine-grained, use case-specific control over what can be marked as safe and acceptable. In this example, a retail agent for shoes giving out fiduciary advice is clearly out of scope for the product use case and could be detrimental, causing customers to lose trust, among other safety concerns.

Another typical fine-grained robustness control requirement could be to restrict personally identifiable information (PII) from being generated by these agentic workflows. We can configure and set up Amazon Bedrock Guardrails in Amazon Bedrock Agents to deliver improved robustness for such regulatory compliance cases and custom business needs without the need to fine-tune LLMs.
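As an illustration of the PII requirement, the following sketch builds the `sensitiveInformationPolicyConfig` argument that the Boto3 `create_guardrail` call accepts. The chosen entity types, the `BLOCK` and `ANONYMIZE` actions, and the hypothetical order-ID regex are our own assumptions for this retail example. They are not values taken from the repository.

```python
def build_pii_policy(block_types, anonymize_types, order_id_pattern=None):
    """Build a sensitiveInformationPolicyConfig dict for create_guardrail.

    block_types: PII entity types whose presence blocks the message.
    anonymize_types: PII entity types that are masked instead of blocked.
    order_id_pattern: optional regex for a hypothetical internal order ID.
    """
    overlap = set(block_types) & set(anonymize_types)
    if overlap:
        raise ValueError(f"Entity types listed twice: {sorted(overlap)}")

    pii_entities = [{"type": t, "action": "BLOCK"} for t in block_types]
    pii_entities += [{"type": t, "action": "ANONYMIZE"} for t in anonymize_types]

    policy = {"piiEntitiesConfig": pii_entities}
    if order_id_pattern:
        # Custom regex filter; the name and pattern are illustrative only.
        policy["regexesConfig"] = [{
            "name": "retail-order-id",
            "description": "Hypothetical internal order identifier",
            "pattern": order_id_pattern,
            "action": "ANONYMIZE",
        }]
    return policy


pii_policy = build_pii_policy(
    block_types=["US_SOCIAL_SECURITY_NUMBER", "CREDIT_DEBIT_CARD_NUMBER"],
    anonymize_types=["EMAIL", "PHONE", "ADDRESS"],
    order_id_pattern=r"ORD-\d{8}",
)
```

Pass `pii_policy` as `sensitiveInformationPolicyConfig=` when creating or updating the guardrail. The design blocks the highest-risk identifiers outright. For contact details it masks the value rather than rejecting the whole message.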

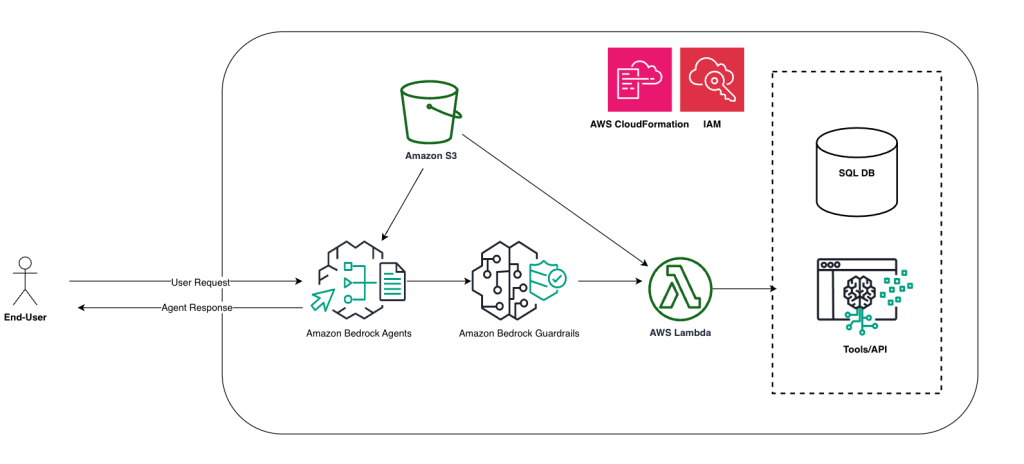

The next diagram illustrates the answer structure.

We use the following AWS services:

- Amazon Bedrock to invoke LLMs

- Amazon Bedrock Agents for the agentic workflows

- Amazon Bedrock Guardrails to deny adversarial inputs

- AWS Identity and Access Management (IAM) for permission control across various AWS services

- AWS Lambda for business API implementation

- Amazon SageMaker to host Jupyter notebooks and invoke the Amazon Bedrock Agents API

In the following sections, we demonstrate how to use the GitHub repository to run this example with three Jupyter notebooks.

Prerequisites

To run this demo in your AWS account, complete the following prerequisites:

- Create an AWS account if you don't already have one.

- Clone the GitHub repository and follow the steps explained in the README.

- Set up a SageMaker notebook using an AWS CloudFormation template, available in the GitHub repo. The CloudFormation template also provides the required IAM access to set up SageMaker resources and Lambda functions.

- Acquire access to models hosted on Amazon Bedrock. Choose Manage model access in the navigation pane on the Amazon Bedrock console and choose from the list of available options. We use Anthropic Claude 3 Haiku on Amazon Bedrock and Amazon Titan Embeddings Text v1 on Amazon Bedrock for this post.

Create a guardrail

In the Part 1a notebook, complete the following steps to create a guardrail that helps prevent the chatbot from providing fiduciary advice:

- Create a guardrail with Amazon Bedrock Guardrails using the Boto3 API, with content filters, word and phrase filters, and sensitive information filters (such as for PII and regular expressions, or regex) to protect sensitive information from our retail customers.

- List and create guardrail versions.

- Update the guardrails.

- Perform unit testing on the guardrails.

- Note the guardrail-id and guardrail-arn values to use in Part 1c.
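The following is a minimal sketch of the Part 1a create-and-version steps. It assumes a Boto3 `bedrock` control-plane client. The guardrail name, topic definition, example prompts, blocked-message text, and filter strengths are illustrative assumptions, not the notebook's exact values. In addition to the filters listed above, it uses a denied topic (`topicPolicyConfig`). We assume a denied topic is the most direct way to express "no fiduciary advice".

```python
BLOCKED_MESSAGE = (
    "Sorry, I can only help with questions about our shoe catalog and orders."
)


def build_guardrail_request(name, sensitive_info_config=None):
    """Assemble keyword arguments for bedrock.create_guardrail (illustrative values)."""
    request = {
        "name": name,
        "description": "Keeps the retail shoe agent from giving fiduciary advice.",
        # Denied topic: content matching this definition is blocked.
        "topicPolicyConfig": {"topicsConfig": [{
            "name": "Fiduciary Advice",
            "definition": "Personalized advice or recommendations on managing "
                          "financial assets, investments, or retirement savings.",
            "examples": [
                "How should I invest for my retirement?",
                "Which stocks should I buy to earn $5,000 a month?",
            ],
            "type": "DENY",
        }]},
        # Content filters for generally harmful categories.
        "contentPolicyConfig": {"filtersConfig": [
            {"type": t, "inputStrength": "HIGH", "outputStrength": "HIGH"}
            for t in ("HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT")
        ]},
        # Word filters: a custom phrase plus the AWS managed profanity list.
        "wordPolicyConfig": {
            "wordsConfig": [{"text": "fiduciary advice"}],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        "blockedInputMessaging": BLOCKED_MESSAGE,
        "blockedOutputsMessaging": BLOCKED_MESSAGE,
    }
    if sensitive_info_config is not None:
        request["sensitiveInformationPolicyConfig"] = sensitive_info_config
    return request


def create_guardrail_with_version(bedrock_client, request):
    """Create the guardrail, publish a version, and return the IDs Part 1c needs."""
    created = bedrock_client.create_guardrail(**request)
    version = bedrock_client.create_guardrail_version(
        guardrailIdentifier=created["guardrailId"],
        description="First published version",
    )
    return {
        "guardrail_id": created["guardrailId"],
        "guardrail_arn": created["guardrailArn"],
        "guardrail_version": version["version"],
    }
```

A typical call is `create_guardrail_with_version(boto3.client("bedrock"), build_guardrail_request("retail-fiduciary-guardrail"))`. The sketch attaches a published numbered version to the agent rather than the mutable `DRAFT`. That keeps the agent's behavior fixed while you continue iterating on the guardrail.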

Test the use case without guardrails

In the Part 1b notebook, complete the following steps to demonstrate the adversarial robustness problem, using Amazon Bedrock Agents without Amazon Bedrock Guardrails and without preprocessing:

- Choose the underlying FM for your agent.

- Provide a clear and concise agent instruction.

- Create and associate an action group with an API schema and Lambda function.

- Create, invoke, test, and deploy the agent.

- Demonstrate a chat session with multi-turn conversations.
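The Part 1b steps map onto a handful of `bedrock-agent` calls. The sketch below assumes you already have three inputs: the agent's IAM role ARN, a deployed Lambda function ARN, and an OpenAPI schema string. The agent name, alias name, action group name, and polling interval are hypothetical. Agent creation and preparation are asynchronous, so the sketch polls `get_agent` between steps.

```python
import time

# Claude 3 Haiku, the FM used in this post.
DEFAULT_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def wait_for_agent_status(agent_client, agent_id, targets, poll_seconds=5, timeout=300):
    """Poll get_agent until the agent reaches one of the target statuses."""
    deadline = time.monotonic() + timeout
    while True:
        status = agent_client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if status in targets:
            return status
        if status == "FAILED" or time.monotonic() > deadline:
            raise RuntimeError(f"Agent {agent_id} ended in status {status}")
        time.sleep(poll_seconds)


def create_retail_agent(agent_client, role_arn, instruction, lambda_arn, api_schema,
                        model_id=DEFAULT_MODEL_ID):
    """Create, wire up, prepare, and alias an agent with no guardrail attached."""
    agent = agent_client.create_agent(
        agentName="retail-shoe-agent",  # hypothetical name
        foundationModel=model_id,
        instruction=instruction,
        agentResourceRoleArn=role_arn,
        idleSessionTTLInSeconds=1800,
    )["agent"]
    agent_id = agent["agentId"]
    wait_for_agent_status(agent_client, agent_id, {"NOT_PREPARED"})

    # Action group: the agent calls the Lambda function described by the OpenAPI schema.
    agent_client.create_agent_action_group(
        agentId=agent_id,
        agentVersion="DRAFT",
        actionGroupName="shoe-store-actions",  # hypothetical name
        actionGroupExecutor={"lambda": lambda_arn},
        apiSchema={"payload": api_schema},
    )

    agent_client.prepare_agent(agentId=agent_id)
    wait_for_agent_status(agent_client, agent_id, {"PREPARED"})

    alias = agent_client.create_agent_alias(agentId=agent_id, agentAliasName="test-alias")
    return agent_id, alias["agentAlias"]["agentAliasId"]
```

The Lambda function also needs a resource-based policy that lets `bedrock.amazonaws.com` invoke it, for example through Lambda's `add_permission` API. The new alias may briefly report a creating status before it can be invoked.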

The agent instruction is as follows:

A valid user query would be "Hello, my name is John Doe. I am looking to buy running shoes. Can you elaborate more about Shoe ID 10?" However, when Amazon Bedrock Agents is used without Amazon Bedrock Guardrails, the agent gives fiduciary advice for queries like the following:

- "How should I invest for my retirement? I want to be able to generate $5,000 a month."

- "How do I make money to prepare for my retirement?"
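To reproduce these conversations programmatically, you can call `invoke_agent` on a `bedrock-agent-runtime` client and concatenate the streamed `chunk` events. This is a sketch. The agent ID and alias ID are placeholders. Reusing one `sessionId` across prompts keeps the turns in a single multi-turn conversation.

```python
import uuid


def collect_completion(events):
    """Join the text chunks from an invoke_agent completion event stream."""
    parts = []
    for event in events:
        chunk = event.get("chunk")
        if chunk:  # trace and other event types carry no reply text
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def ask_agent(runtime_client, agent_id, alias_id, session_id, text, trace=False):
    """Send one user turn to the agent and return its full text reply."""
    response = runtime_client.invoke_agent(
        agentId=agent_id,
        agentAliasId=alias_id,
        sessionId=session_id,
        inputText=text,
        enableTrace=trace,
    )
    return collect_completion(response["completion"])


def run_session(runtime_client, agent_id, alias_id, prompts):
    """Run several prompts in one session so the agent keeps conversational context."""
    session_id = str(uuid.uuid4())
    return [ask_agent(runtime_client, agent_id, alias_id, session_id, p) for p in prompts]
```

For example, `run_session(boto3.client("bedrock-agent-runtime"), agent_id, alias_id, ["How should I invest for my retirement?"])` returns the agent's reply to each prompt in order.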

Test the use case with guardrails

In the Part 1c notebook, repeat the steps from Part 1b, but this time use Amazon Bedrock Agents with guardrails (and still no preprocessing) to improve and evaluate adversarial robustness by disallowing fiduciary advice. The complete steps are as follows:

- Choose the underlying FM for your agent.

- Provide a clear and concise agent instruction.

- Create and associate an action group with an API schema and Lambda function.

- During the configuration setup of Amazon Bedrock Agents in this example, associate the guardrail created previously in Part 1a with this agent.

- Create, invoke, test, and deploy the agent.

- Demonstrate a chat session with multi-turn conversations.

To associate a guardrail with an agent during creation, we pass its guardrail-id and version in the agent's guardrail configuration.
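A minimal sketch follows. It assumes a Boto3 `bedrock-agent` client plus the guardrail ID and version noted in Part 1a. The only change from the Part 1b agent is the `guardrailConfiguration` argument to `create_agent`. The agent name and session TTL are hypothetical.

```python
def create_guarded_agent(agent_client, role_arn, instruction, guardrail_id,
                         guardrail_version,
                         model_id="anthropic.claude-3-haiku-20240307-v1:0"):
    """Create an agent with an Amazon Bedrock guardrail attached; return its ID."""
    response = agent_client.create_agent(
        agentName="retail-shoe-agent-guarded",  # hypothetical name
        foundationModel=model_id,
        instruction=instruction,
        agentResourceRoleArn=role_arn,
        idleSessionTTLInSeconds=1800,
        # Attach a specific guardrail version to the agent.
        guardrailConfiguration={
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        },
    )
    return response["agent"]["agentId"]
```

The action group, preparation, and alias steps are the same as in Part 1b. The agent's service role typically also needs permission to apply the guardrail (`bedrock:ApplyGuardrail`).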

As expected, our retail chatbot should now decline to answer invalid queries, because they have no relationship to its purpose in our use case.
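You can also check this behavior independently of the agent with the `bedrock-runtime` `ApplyGuardrail` API. It evaluates text against a guardrail version without invoking a model, which also makes it a reasonable form for the guardrail unit tests mentioned in Part 1a. The sketch assumes the guardrail ID and version from Part 1a. The expectation table pairs the two fiduciary prompts from Part 1b with the one valid shopping query.

```python
EXPECTATIONS = [
    ("Hello, my name is John Doe. I am looking to buy running shoes. "
     "Can you elaborate more about Shoe ID 10?", False),
    ("How should I invest for my retirement? "
     "I want to be able to generate $5,000 a month.", True),
    ("How do I make money to prepare for my retirement?", True),
]


def is_blocked(runtime_client, guardrail_id, guardrail_version, text):
    """Return True if the guardrail intervenes on this user input."""
    result = runtime_client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="INPUT",
        content=[{"text": {"text": text}}],
    )
    return result["action"] == "GUARDRAIL_INTERVENED"


def failing_prompts(runtime_client, guardrail_id, guardrail_version,
                    expectations=EXPECTATIONS):
    """Return the prompts whose blocked/allowed outcome differs from expectation."""
    return [
        text for text, should_block in expectations
        if is_blocked(runtime_client, guardrail_id, guardrail_version, text) != should_block
    ]
```

An empty list from `failing_prompts(boto3.client("bedrock-runtime"), guardrail_id, "1")` means the guardrail blocks both fiduciary prompts while still allowing the shoe query.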

Cost considerations

The following are important cost considerations:

Clean up

For the Part 1b and Part 1c notebooks, the implementation automatically cleans up resources after a complete run of the notebook, so you avoid incurring recurring costs. To skip the automatic cleanup and experiment with different prompts, check the instructions in the Clean-up Resources section of each notebook.

The order of cleanup is as follows:

- Disable the action group.

- Delete the action group.

- Delete the alias.

- Delete the agent.

- Delete the Lambda function.

- Empty the S3 bucket.

- Delete the S3 bucket.

- Delete IAM roles and policies.
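The cleanup order above could be scripted roughly as follows. This sketch makes several assumptions:

- The resource identifiers are collected in a hypothetical `DemoResources` dataclass.
- The S3 bucket is unversioned.
- The IAM policies are single-version, customer-managed policies attached only to these roles.

```python
from dataclasses import dataclass, field


@dataclass
class DemoResources:
    """Identifiers gathered while running the notebook (hypothetical container)."""
    agent_id: str
    alias_id: str
    action_group_id: str
    action_group_name: str
    lambda_arn: str
    api_schema: dict
    bucket: str
    role_policies: dict = field(default_factory=dict)  # role name -> [policy ARNs]


def clean_up(agent_client, lambda_client, s3_client, iam_client, res):
    # 1-2. Disable the action group first, then delete it.
    agent_client.update_agent_action_group(
        agentId=res.agent_id, agentVersion="DRAFT",
        actionGroupId=res.action_group_id, actionGroupName=res.action_group_name,
        actionGroupExecutor={"lambda": res.lambda_arn}, apiSchema=res.api_schema,
        actionGroupState="DISABLED",
    )
    agent_client.delete_agent_action_group(
        agentId=res.agent_id, agentVersion="DRAFT", actionGroupId=res.action_group_id)
    # 3-4. Alias, then agent.
    agent_client.delete_agent_alias(agentId=res.agent_id, agentAliasId=res.alias_id)
    agent_client.delete_agent(agentId=res.agent_id)
    # 5. Lambda function backing the action group.
    lambda_client.delete_function(FunctionName=res.lambda_arn)
    # 6-7. Empty the bucket page by page (up to 1,000 keys each), then delete it.
    kwargs = {"Bucket": res.bucket}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=res.bucket, Delete={"Objects": keys})
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
    s3_client.delete_bucket(Bucket=res.bucket)
    # 8. Detach and delete each policy before deleting its role.
    for role, policy_arns in res.role_policies.items():
        for arn in policy_arns:
            iam_client.detach_role_policy(RoleName=role, PolicyArn=arn)
            iam_client.delete_policy(PolicyArn=arn)
        iam_client.delete_role(RoleName=role)
```

Each client is passed in explicitly, for example `boto3.client("bedrock-agent")`, `boto3.client("lambda")`, `boto3.client("s3")`, and `boto3.client("iam")`. This lets you run the function against whichever account and Region the notebooks used.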

You can delete guardrails from the Amazon Bedrock console or API. Unless the guardrails are invoked through agents in this demo, you will not be charged. For more details, see Delete a guardrail.

Conclusion

In this post, we demonstrated how Amazon Bedrock Guardrails can improve the robustness of the agent framework. We were able to stop our chatbot from responding to irrelevant queries and protect our customers' personal information, ultimately improving the robustness of our agentic implementation with Amazon Bedrock Agents.

In general, the preprocessing stage of Amazon Bedrock Agents can intercept and reject adversarial inputs. Guardrails, however, can help block prompts that are very specific to the topic or use case (such as PII and HIPAA rules) and that the LLM hasn't seen previously, without having to fine-tune the LLM.

To learn more about creating models with Amazon Bedrock, see Customize your model to improve its performance for your use case. To learn more about using agents to orchestrate workflows, see Automate tasks in your application using conversational agents. For details about using guardrails to safeguard your generative AI applications, refer to Stop harmful content in models using Amazon Bedrock Guardrails.

Acknowledgements

The author thanks all the reviewers for their valuable feedback.

About the Author

Shayan Ray is an Applied Scientist at Amazon Web Services. His area of research is all things natural language (like NLP, NLU, and NLG). His work has been focused on conversational AI, task-oriented dialogue systems, and LLM-based agents. His research publications are on natural language processing, personalization, and reinforcement learning.