A Crash Course of Planning for Perception Engineers in Autonomous Driving | by Patrick Langechuan Liu | Jun, 2024

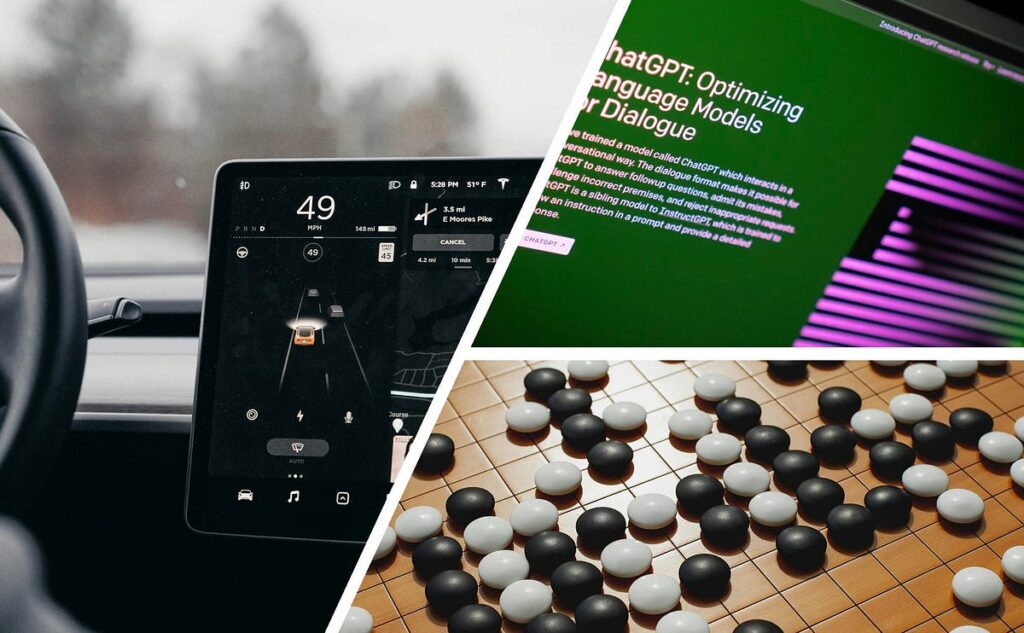

A classical modular autonomous driving system typically consists of perception, prediction, planning, and control. Until around 2023, AI (artificial intelligence) or ML (machine learning) primarily enhanced perception in most mass-production autonomous driving systems, with its influence diminishing in downstream components. In stark contrast to the low integration of AI in the planning stack, end-to-end perception systems (such as the BEV, or bird’s-eye-view, perception pipeline) have been deployed in mass-production vehicles.

There are several reasons for this. A classical stack based on a human-crafted framework is more explainable and can be iterated faster to fix field test issues (within hours) compared to machine learning-driven solutions (which can take days or weeks). However, it doesn’t make sense to let readily available human driving data sit idle. Moreover, increasing computing power is more scalable than expanding the engineering team.

Fortunately, there has been a strong trend in both academia and industry to change this situation. First, downstream modules are becoming increasingly data-driven and may also be integrated via different interfaces, such as the one proposed in CVPR 2023’s best paper, UniAD. Moreover, driven by the ever-growing wave of Generative AI, a single unified vision-language-action (VLA) model shows great potential for handling complex robotics tasks (RT-2 in academia, TeslaBot and 1X in industry) and autonomous driving (GAIA-1, DriveVLM in academia, and Wayve AI driver, Tesla FSD in industry). This brings the toolsets of AI and data-driven development from the perception stack to the planning stack.

This blog post aims to introduce the problem settings, existing methodologies, and challenges of the planning stack, in the form of a crash course for perception engineers. As a perception engineer, I finally had some time over the past couple of weeks to systematically learn the classical planning stack, and I would like to share what I learned. I will also share my thoughts on how AI can help from the perspective of an AI practitioner.

The intended audience for this post is AI practitioners who work in the field of autonomous driving, in particular, perception engineers.

The article is a bit long (11,100 words), and the table of contents below will most likely help those who want to do quick ctrl+F searches with the keywords.

Table of Contents (ToC)

Why learn planning?

What is planning?

The problem formulation

The Glossary of Planning

Behavior Planning

Frenet vs Cartesian systems

Classical tools: the troika of planning

Searching

Sampling

Optimization

Industry practices of planning

Path-speed decoupled planning

Joint spatiotemporal planning

Decision making

What and why?

MDP and POMDP

Value iteration and Policy iteration

AlphaGo and MCTS: when nets meet trees

MPDM (and successors) in autonomous driving

Industry practices of decision making

Trees

No trees

Self-Reflections

Why NN in planning?

What about e2e NN planners?

Can we do without prediction?

Can we do with just nets but no trees?

Can we use LLMs to make decisions?

The trend of evolution

This brings us to an interesting question: why learn planning, especially the classical stack, in the era of AI?

From a problem-solving perspective, understanding your customers’ challenges better will enable you, as a perception engineer, to serve your downstream customers more effectively, even if your main focus remains on perception work.

Machine learning is a tool, not a solution. The most efficient way to solve problems is to combine new tools with domain knowledge, especially those with solid mathematical formulations. Domain knowledge-inspired learning methods are likely to be more data-efficient. As planning transitions from rule-based to ML-based systems, even with early prototypes and products of end-to-end systems hitting the road, there is a need for engineers who can deeply understand both the fundamentals of planning and machine learning. Despite these changes, classical and learning methods will likely continue to coexist for a considerable period, perhaps shifting from an 8:2 to a 2:8 ratio. It’s almost essential for engineers working in this field to understand both worlds.

From a value-driven development perspective, understanding the limitations of classical methods is crucial. This insight allows you to effectively utilize new ML tools to design a system that addresses current issues and delivers immediate impact.

Additionally, planning is a critical part of all autonomous agents, not just in autonomous driving. Understanding what planning is and how it works will enable more ML talents to work on this exciting topic and contribute to the development of truly autonomous agents, whether they’re cars or other forms of automation.

The problem formulation

As the “brain” of autonomous vehicles, the planning system is crucial for the safe and efficient driving of vehicles. The goal of the planner is to generate trajectories that are safe, comfortable, and efficiently progressing towards the goal. In other words, safety, comfort, and efficiency are the three key objectives for planning.

As input to the planning systems, all perception outputs are required, including static road structures, dynamic road agents, free space generated by occupancy networks, and traffic wait conditions. The planning system must also ensure vehicle comfort by monitoring acceleration and jerk for smooth trajectories, while considering interaction and traffic courtesy.

The planning systems generate trajectories in the format of a sequence of waypoints for the ego vehicle’s low-level controller to track. Specifically, these waypoints represent the future positions of the ego vehicle at a series of fixed time stamps. For example, each point might be 0.4 seconds apart, covering an 8-second planning horizon, resulting in a total of 20 waypoints.
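To make the waypoint format concrete, here is a minimal Python sketch of a planner output with the numbers from the example above (0.4 s spacing, 8 s horizon). The `Waypoint` class, the function names, and the choice to place the first waypoint at t = 0.4 s rather than t = 0 are assumptions made for illustration, not the interface of any particular stack.

```python
from dataclasses import dataclass


@dataclass
class Waypoint:
    """One planned ego position at a fixed future time stamp (hypothetical type)."""
    t: float  # time offset from "now", in seconds
    x: float  # position in meters
    y: float


def waypoint_times(horizon_s: float = 8.0, dt_s: float = 0.4) -> list:
    """Fixed time stamps dt, 2*dt, ..., horizon (assumes no waypoint at t = 0)."""
    n = round(horizon_s / dt_s)
    return [round((i + 1) * dt_s, 6) for i in range(n)]


def constant_velocity_trajectory(vx: float, vy: float,
                                 horizon_s: float = 8.0, dt_s: float = 0.4) -> list:
    """Toy stand-in for a planner: the ego simply keeps its current velocity."""
    return [Waypoint(t, vx * t, vy * t) for t in waypoint_times(horizon_s, dt_s)]


trajectory = constant_velocity_trajectory(vx=10.0, vy=0.0)
```

A real planner would fill the positions from its search, sampling, or optimization result; only the fixed time grid is the point here.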

A classical planning stack roughly consists of global route planning, local behavior planning, and local trajectory planning. Global route planning provides a road-level path from the start point to the end point on a global map. Local behavior planning decides on a semantic driving action type (e.g., car following, nudging, side passing, yielding, and overtaking) for the next several seconds. Based on the decided behavior type from the behavior planning module, local trajectory planning generates a short-term trajectory. The global route planning is typically provided by a map service once navigation is set and is beyond the scope of this post. We’ll focus on behavior planning and trajectory planning from now on.

Behavior planning and trajectory generation can work explicitly in tandem or be combined into a single process. In explicit methods, behavior planning and trajectory generation are distinct processes operating within a hierarchical framework, working at different frequencies, with behavior planning at 1–5 Hz and trajectory planning at 10–20 Hz. Although this hierarchy is highly efficient most of the time, adapting it to different scenarios may require significant modifications and fine-tuning. More advanced planning systems combine the two into a single optimization problem. This approach ensures feasibility and optimality without any compromise.

The Glossary of Planning

You might have noticed that the terminology used in the above section and the image don’t completely match. There is no standard terminology that everyone uses. Across both academia and industry, it’s not uncommon for engineers to use different names to refer to the same concept and the same name to refer to different concepts. This indicates that planning in autonomous driving is still under active development and has not fully converged.

Here, I list the notation used in this post and briefly explain other notions present in the literature.

- Planning: A top-level concept, parallel to control, that generates trajectory waypoints. Together, planning and control are referred to as PnC (planning and control).

- Control: A top-level concept that takes in trajectory waypoints and generates high-frequency steering, throttle, and brake commands for actuators to execute. Control is relatively well-established compared to other areas and is beyond the scope of this post, despite the common notion of PnC.

- Prediction: A top-level concept that predicts the future trajectories of traffic agents other than the ego vehicle. Prediction can be considered a lightweight planner for other agents and is also called motion prediction.

- Behavior Planning: A module that produces high-level semantic actions (e.g., lane change, overtake) and typically generates a coarse trajectory. It is also known as task planning or decision making, particularly in the context of interactions.

- Motion Planning: A module that takes in semantic actions and produces smooth, feasible trajectory waypoints for the duration of the planning horizon for control to execute. It is also called trajectory planning.

- Trajectory Planning: Another term for motion planning.

- Decision Making: Behavior planning with a focus on interactions. Without ego-agent interaction, it’s simply called behavior planning. It is also known as tactical decision making.

- Route Planning: Finds the preferred route over road networks, also known as mission planning.

- Model-Based Approach: In planning, this refers to manually crafted frameworks used in the classical planning stack, as opposed to neural network models. Model-based methods contrast with learning-based methods.

- Multimodality: In the context of planning, this typically refers to multiple intentions. This contrasts with multimodality in the context of multimodal sensor inputs to perception or multimodal large language models (such as VLM or VLA).

- Reference Line: A local (several hundred meters) and coarse path based on global routing information and the current state of the ego vehicle.

- Frenet Coordinates: A coordinate system based on a reference line. Frenet simplifies a curvy path in Cartesian coordinates to a straight tunnel model. See below for a more detailed introduction.

- Trajectory: A 3D spatiotemporal curve, in the form of (x, y, t) in Cartesian coordinates or (s, l, t) in Frenet coordinates. A trajectory is composed of both path and speed.

- Path: A 2D spatial curve, in the form of (x, y) in Cartesian coordinates or (s, l) in Frenet coordinates.

- Semantic Action: A high-level abstraction of action (e.g., car following, nudge, side pass, yield, overtake) with clear human intention. Also called intention, policy, maneuver, or primitive motion.

- Action: A term with no fixed meaning. It can refer to the output of control (high-frequency steering, throttle, and brake commands for actuators to execute) or the output of planning (trajectory waypoints). Semantic action refers to the output of behavior planning.

Different literature may use various notations and concepts. Here are some examples:

These variations illustrate the diversity in terminology and the evolving nature of the field.

Behavior Planning

As a machine learning engineer, you may notice that the behavior planning module is a heavily manually crafted intermediate module. There is no consensus on the exact form and content of its output. Concretely, the output of behavior planning can be a reference path or object labeling on ego maneuvers (e.g., pass from the left or right-hand side, pass or yield). The term “semantic action” has no strict definition and no fixed methods.

The decoupling of behavior planning and motion planning increases efficiency in solving the extremely high-dimensional action space of autonomous vehicles. The actions of an autonomous vehicle need to be reasoned at typically 10 Hz or more (time resolution in waypoints), and most of these actions are relatively straightforward, like going straight. After decoupling, the behavior planning layer only needs to reason about future scenarios at a relatively coarse resolution, while the motion planning layer operates in the local solution space based on the decision made by behavior planning. Another benefit of behavior planning is converting non-convex optimization to convex optimization, which we’ll discuss further below.

Frenet vs Cartesian systems

The Frenet coordinate system is a widely adopted system that deserves its own introduction section. The Frenet frame simplifies trajectory planning by independently managing lateral and longitudinal movements relative to a reference path. The s coordinate represents longitudinal displacement (distance along the road), while the l (or d) coordinate represents lateral displacement (side position relative to the reference path).

Frenet simplifies a curvy path in Cartesian coordinates to a straight tunnel model. This transformation converts non-linear road boundary constraints on curvy roads into linear ones, significantly simplifying the subsequent optimization problems. Additionally, humans perceive longitudinal and lateral movements differently, and the Frenet frame allows for separate and more flexible optimization of these movements.
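As a rough illustration of the Cartesian-to-Frenet mapping, the sketch below projects a point onto a reference line given as a polyline and returns (s, l). The function name, the polyline representation, and the sign convention (l > 0 to the left of the driving direction) are assumptions for this example; production systems usually use a smooth reference line with curvature information rather than straight segments.

```python
import math


def cartesian_to_frenet(ref_line, x, y):
    """Project (x, y) onto a polyline reference line and return (s, l).

    ref_line: list of (x, y) vertices ordered along the driving direction.
    s: arc length along the polyline to the closest point.
    l: signed lateral offset, positive to the left of the driving direction.
    """
    best = None      # (distance, s, l) of the closest projection found so far
    s_start = 0.0    # arc length at the start of the current segment
    for (x0, y0), (x1, y1) in zip(ref_line, ref_line[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len = math.hypot(dx, dy)
        if seg_len == 0.0:
            continue
        # Fraction along the segment of the closest point, clamped to [0, 1].
        u = ((x - x0) * dx + (y - y0) * dy) / (seg_len ** 2)
        u = min(1.0, max(0.0, u))
        px, py = x0 + u * dx, y0 + u * dy
        dist = math.hypot(x - px, y - py)
        # The sign of the 2D cross product tells left (+) from right (-).
        cross = dx * (y - py) - dy * (x - px)
        l = math.copysign(dist, cross) if dist > 0.0 else 0.0
        if best is None or dist < best[0]:
            best = (dist, s_start + u * seg_len, l)
        s_start += seg_len
    if best is None:
        raise ValueError("reference line needs at least one non-degenerate segment")
    return best[1], best[2]


# An L-shaped reference line: 10 m east, then 10 m north.
ref = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
s1, l1 = cartesian_to_frenet(ref, 5.0, 2.0)    # point to the left of the first leg
s2, l2 = cartesian_to_frenet(ref, 12.0, 5.0)   # point to the right of the second leg
```

Near the corner of this polyline the projection is ambiguous, which is a small-scale version of the high-curvature problem with Frenet frames.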

The Frenet coordinate system requires a clean, structured road graph with low curvature lanes. In practice, it’s preferred for structured roads with small curvature, such as highways or city expressways. However, the issues with the Frenet coordinate system are amplified with increasing reference line curvature, so it should be used cautiously on structured roads with high curvature, like city intersections with guide lines.

For unstructured roads, such as ports, mining areas, parking lots, or intersections without guidelines, the more flexible Cartesian coordinate system is recommended. The Cartesian system is better suited for these environments because it can handle higher curvature and less structured scenarios more effectively.

Planning in autonomous driving involves computing a trajectory from an initial high-dimensional state (including position, time, velocity, acceleration, and jerk) to a target subspace, ensuring all constraints are satisfied. Searching, sampling, and optimization are the three most widely used tools for planning.

Searching

Classical graph-search methods are popular in planning and are used in route/mission planning on structured roads or directly in motion planning to find the best path in unstructured environments (such as parking or urban intersections, especially mapless scenarios). There is a clear evolution path, from Dijkstra’s algorithm to A* (A-star), and further to hybrid A*.

Dijkstra’s algorithm explores all possible paths to find the shortest one, making it a blind (uninformed) search algorithm. It is a systematic method that guarantees the optimal path, but it is inefficient to deploy. As shown in the chart below, it explores almost all directions. Essentially, Dijkstra’s algorithm is a breadth-first search (BFS) weighted by movement costs. To improve efficiency, we can use information about the location of the target to trim down the search space.

The A* algorithm uses heuristics to prioritize paths that appear to be leading closer to the goal, making it more efficient. It combines the cost so far (Dijkstra) with the cost to go (heuristics, essentially greedy best-first). A* only guarantees the shortest path if the heuristic is admissible and consistent. If the heuristic is poor, A* can perform worse than the Dijkstra baseline and may degenerate into a greedy best-first search.
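The relationship between the two algorithms can be sketched in a few lines of Python: the same priority-queue search becomes Dijkstra when the heuristic is zero and A* when a Manhattan-distance heuristic is added. The 4-connected unit-cost grid, the function name `grid_search`, and the tie-breaking rule (prefer nodes closer to the goal) are assumptions made for this toy example.

```python
import heapq


def grid_search(grid, start, goal, use_heuristic=True):
    """Shortest path length on a 4-connected grid of 0 (free) / 1 (blocked) cells.

    use_heuristic=True  -> A* with the Manhattan distance (admissible and
                           consistent for unit-cost 4-connected moves).
    use_heuristic=False -> zero heuristic, i.e. plain Dijkstra.
    Returns (cost, expanded_nodes); cost is None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        if not use_heuristic:
            return 0
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Heap entries are (f = g + h, h, g, cell); ties in f prefer smaller h.
    open_heap = [(h(start), h(start), 0, start)]
    best_g = {start: 0}
    closed = set()
    expanded = 0
    while open_heap:
        _, _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        closed.add(cell)
        expanded += 1
        if cell == goal:
            return g, expanded
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1  # cost so far
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    heapq.heappush(open_heap, (ng + h(nxt), h(nxt), ng, nxt))
    return None, expanded


empty = [[0] * 5 for _ in range(5)]
astar_cost, astar_expanded = grid_search(empty, (0, 0), (4, 4), use_heuristic=True)
dijkstra_cost, dijkstra_expanded = grid_search(empty, (0, 0), (4, 4), use_heuristic=False)
```

Both runs return the same path cost; the difference shows up in how many nodes each variant expands before reaching the goal.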

In the specific application of autonomous driving, the hybrid A* algorithm further improves A* by considering vehicle kinematics. A path found by A* may not satisfy kinematic constraints and therefore cannot be tracked accurately (e.g., the steering angle is typically within 40 degrees). While A* operates in grid space for both state and action, hybrid A* separates them, maintaining the state in the grid but allowing continuous action according to kinematics.
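The split between continuous motion and grid bookkeeping can be sketched as follows. This is only the node-expansion step, not a full hybrid A* planner: each child state comes from forward-simulating a kinematic bicycle model with a simple Euler step, and the grid cell is used only to detect near-duplicate states. The wheelbase, step length, resolutions, and function names are illustrative assumptions; the 40-degree steering limit is the figure mentioned above.

```python
import math


def expand_node(x, y, yaw, step=1.0, wheelbase=2.8, max_steer_deg=40.0, n_steer=5):
    """Children of a state under a kinematic bicycle model (forward motion only).

    Returns a list of (x, y, yaw, steer) tuples with continuous coordinates,
    one per sampled steering angle in [-max_steer_deg, +max_steer_deg].
    """
    children = []
    for i in range(n_steer):
        steer_deg = -max_steer_deg + i * 2.0 * max_steer_deg / (n_steer - 1)
        steer = math.radians(steer_deg)
        # Euler step: move along the current heading, then update the heading.
        new_x = x + step * math.cos(yaw)
        new_y = y + step * math.sin(yaw)
        new_yaw = yaw + step * math.tan(steer) / wheelbase
        children.append((new_x, new_y, new_yaw, steer))
    return children


def grid_cell(x, y, yaw, xy_res=0.5, yaw_res_deg=15.0):
    """Discrete cell used only for pruning; the state itself stays continuous."""
    yaw_wrapped = yaw % (2.0 * math.pi)
    return (math.floor(x / xy_res),
            math.floor(y / xy_res),
            int(yaw_wrapped // math.radians(yaw_res_deg)))


children = expand_node(0.0, 0.0, 0.0)
cells = [grid_cell(cx, cy, cyaw) for cx, cy, cyaw, _ in children]
```

A full planner would wrap this expansion in the usual A* loop, keep at most one continuous state per cell, and add reverse motion and collision checks.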

Analytical expansion (shot to goal) is another key innovation proposed by hybrid A*. A natural enhancement to A* is to connect the most recently explored nodes to the goal using a non-colliding straight line. If this is possible, we have found the solution. In hybrid A*, this straight line is replaced by Dubins and Reeds-Shepp (RS) curves, which comply with vehicle kinematics. This early stopping method strikes a balance between optimality and feasibility by focusing more on feasibility for the further side.

Hybrid A* is used heavily in parking scenarios and mapless urban intersections. Here is a very nice video showcasing how it works in a parking scenario.

Sampling

Another popular method of planning is sampling. The well-known Monte Carlo method is a random sampling method. In essence, sampling involves selecting many candidates randomly or according to a prior, and then selecting the best one according to a defined cost. For sampling-based methods, the fast evaluation of many options is critical, as it directly affects the real-time performance of the autonomous driving system.

Large Language Models (LLMs) essentially provide samples, and there needs to be an evaluator with a defined cost that aligns with human preferences. This evaluation process ensures that the selected output meets the desired criteria and quality standards.

Sampling can happen in a parameterized solution space if we already know the analytical solution to a given problem or subproblem. For example, typically we want to minimize the time integral of the square of jerk (the third derivative of position p(t), indicated by the triple dots over p, where one dot represents one order of derivative with respect to time), among other criteria.
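Written out, the jerk cost described above takes the following form. The symbol J for the cost and T for the end time of the maneuver are notation chosen here for illustration.

$$
J = \int_{0}^{T} \dddot{p}(t)^{2} \, dt
$$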

It can be mathematically proven that quintic (fifth order) polynomials provide the jerk-optimal connection between two states in a position-velocity-acceleration space, even when additional cost terms are considered. By sampling in this parameter space of quintic polynomials, we can find the one with the minimal cost to get the approximate solution. The cost takes into account factors such as speed, acceleration, jerk limit, and collision checks. This approach essentially solves the optimization problem through sampling.
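A minimal sketch of this idea for one dimension (say, the lateral offset l) is shown below: each candidate is a quintic polynomial connecting the current state to a sampled end offset over a sampled duration, and the cheapest candidate wins. The cost here combines only the jerk integral, the duration, and the deviation from a target offset; the weights, the function names, and the omission of speed, acceleration, and collision checks are simplifying assumptions.

```python
def quintic_coeffs(p0, v0, a0, p1, v1, a1, T):
    """Coefficients c0..c5 of p(t) = sum(c_i * t**i) matching both end states."""
    c0, c1, c2 = p0, v0, 0.5 * a0
    # Residuals that the three highest-order terms must account for at t = T.
    dp = p1 - (p0 + v0 * T + 0.5 * a0 * T ** 2)
    dv = v1 - (v0 + a0 * T)
    da = a1 - a0
    c3 = (10.0 * dp - 4.0 * dv * T + 0.5 * da * T ** 2) / T ** 3
    c4 = (-15.0 * dp + 7.0 * dv * T - da * T ** 2) / T ** 4
    c5 = (6.0 * dp - 3.0 * dv * T + 0.5 * da * T ** 2) / T ** 5
    return [c0, c1, c2, c3, c4, c5]


def jerk_cost(c, T):
    """Closed-form integral of jerk squared over [0, T] for a quintic."""
    a, b, k = 6.0 * c[3], 24.0 * c[4], 60.0 * c[5]  # jerk(t) = a + b*t + k*t**2
    return (a * a * T + a * b * T ** 2 + (b * b + 2.0 * a * k) * T ** 3 / 3.0
            + b * k * T ** 4 / 2.0 + k * k * T ** 5 / 5.0)


def sample_best_lateral(l0, dl0, ddl0, target_l, end_offsets, durations,
                        w_jerk=1.0, w_time=1.0, w_offset=10.0):
    """Evaluate every (end offset, duration) sample; return (cost, l1, T, coeffs)."""
    best = None
    for l1 in end_offsets:
        for T in durations:
            c = quintic_coeffs(l0, dl0, ddl0, l1, 0.0, 0.0, T)
            cost = (w_jerk * jerk_cost(c, T) + w_time * T
                    + w_offset * (l1 - target_l) ** 2)
            if best is None or cost < best[0]:
                best = (cost, l1, T, c)
    return best


# Ego starts 0.5 m left of the reference line and wants to return to it.
best = sample_best_lateral(0.5, 0.0, 0.0, target_l=0.0,
                           end_offsets=[-0.5, 0.0, 0.5], durations=[2.0, 4.0, 6.0])
```

Because every candidate is evaluated independently, the two nested loops are the part that parallelizes easily on real hardware.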

Sampling-based methods have inspired numerous ML papers, including CoverNet, Lift-Splat-Shoot, NMP, and MP3. These methods replace mathematically sound quintic polynomials with human driving behavior, utilizing a large database. The evaluation of trajectories can be easily parallelized, which further supports the use of sampling-based methods. This approach effectively leverages a vast amount of expert demonstrations to mimic human-like driving behavior, while avoiding random sampling of acceleration and steering profiles.

Optimization

Optimization finds the best solution to a problem by maximizing or minimizing a specific objective function under given constraints. In neural network training, a similar principle is followed using gradient descent and backpropagation to adjust the network’s weights. However, in optimization tasks outside of neural networks, models are usually less complex, and more effective methods than gradient descent are often employed. For example, while gradient descent can be applied to Quadratic Programming, it is generally not the most efficient method.

In autonomous driving, the planning cost to optimize typically considers dynamic objects for obstacle avoidance, static road structures for following lanes, navigation information to ensure the correct route, and ego status to evaluate smoothness.

Optimization can be categorized into convex and non-convex types. The key distinction is that in a convex optimization scenario, any local optimum is also the global optimum. This characteristic makes the result unaffected by the initial solution to the optimization problem. For non-convex optimization, the initial solution matters a lot, as illustrated in the chart below.

Since planning involves highly non-convex optimization with many local optima, it heavily depends on the initial solution. Additionally, convex optimization typically runs much faster and is therefore preferred for onboard real-time applications such as autonomous driving. A typical approach is to use convex optimization in conjunction with other methods to outline a convex solution space first. This is the mathematical foundation behind separating behavior planning and motion planning, where finding a good initial solution is the role of behavior planning.

Take obstacle avoidance as a concrete example, which typically introduces non-convex problems. If we know the nudging direction, then it becomes a convex optimization problem, with the obstacle position acting as a lower or upper bound constraint for the optimization problem. If we don’t know the nudging direction, we need to decide first which direction to nudge, making the problem a convex one for motion planning to solve. This nudging direction decision falls under behavior planning.
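The effect of the nudging decision on the constraints can be shown with a small sketch in Frenet coordinates. Once the direction is fixed, the obstacle only tightens either the lower or the upper bound on l at the stations it occupies, so the feasible set at each station stays a single interval. The function name, the rectangular obstacle format, the convention that l is positive to the left, and the omission of vehicle width and safety margins are all assumptions of this example.

```python
def lateral_bounds(stations, lane_half_width, obstacle, nudge):
    """Per-station (lower, upper) bounds on the lateral offset l.

    obstacle: (s_min, s_max, l_min, l_max) footprint in Frenet coordinates.
    nudge: "left" or "right", the decision handed down by behavior planning.
    """
    if nudge not in ("left", "right"):
        raise ValueError("nudge must be 'left' or 'right'")
    s_min, s_max, l_min, l_max = obstacle
    bounds = []
    for s in stations:
        lower, upper = -lane_half_width, lane_half_width
        if s_min <= s <= s_max:
            if nudge == "left":
                # Pass on the left: stay above the obstacle's left edge.
                lower = max(lower, l_max)
            else:
                # Pass on the right: stay below the obstacle's right edge.
                upper = min(upper, l_min)
        bounds.append((lower, upper))
    return bounds


stations = [0.0, 5.0, 10.0]
obstacle = (4.0, 6.0, -0.5, 0.5)
left_bounds = lateral_bounds(stations, 1.75, obstacle, "left")
right_bounds = lateral_bounds(stations, 1.75, obstacle, "right")
```

Without the decision, the feasible set at s = 5 would be the union of two disjoint intervals, which is exactly what makes the original problem non-convex.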

Of course, we can do direct optimization of non-convex optimization problems with tools such as projected gradient descent, alternating minimization, particle swarm optimization (PSO), and genetic algorithms. However, this is beyond the scope of this post.

How do we make such decisions? We can use the aforementioned search or sampling methods to address non-convex problems. Sampling-based methods scatter many options across the parameter space, effectively handling non-convex issues similarly to searching.

You may also question why deciding which direction to nudge from is enough to guarantee the problem space is convex. To explain this, we need to discuss topology. In path space, similar feasible paths can transform continuously into each other without obstacle interference. These similar paths, grouped as “homotopy classes” in the formal language of topology, can all be explored using a single initial solution homotopic to them. All these paths form a driving corridor, illustrated as the red or green shaded area in the image above. For a 3D spatiotemporal case, please refer to the QCraft tech blog.

We can utilize the Generalized Voronoi diagram to enumerate all homotopy classes, which roughly corresponds to the different decision paths available to us. However, this topic delves into advanced mathematical concepts that are beyond the scope of this blog post.

The key to solving optimization problems efficiently lies in the capabilities of the optimization solver. Typically, a solver requires approximately 10 milliseconds to plan a trajectory. If we can boost this efficiency by tenfold, it can significantly impact algorithm design. This exact improvement was highlighted during Tesla AI Day 2022. A similar enhancement has occurred in perception systems, transitioning from 2D perception to Bird’s Eye View (BEV) as available computing power scaled up tenfold. With a more efficient optimizer, more options can be calculated and evaluated, thereby reducing the importance of the decision-making process. However, engineering an efficient optimization solver demands substantial engineering resources.

Every time compute scales up by 10x, algorithms will evolve to the next generation.

-- The unverified law of algorithm evolution

A key differentiator in various planning systems is whether they are spatiotemporally decoupled. Concretely, spatiotemporally decoupled methods plan in spatial dimensions first to generate a path, and then plan the speed profile along this path. This approach is also known as path-speed decoupling.

Path-speed decoupling is also referred to as lateral-longitudinal (lat-long) decoupling, where lateral (lat) planning corresponds to path planning and longitudinal (long) planning corresponds to speed planning. This terminology seems to originate from the Frenet coordinate system introduced earlier in this post.

Decoupled solutions are easier to implement and can solve about 95% of issues. In contrast, coupled solutions have a higher theoretical performance ceiling but are more challenging to implement. They involve more parameters to tune and require a more principled approach to parameter tuning.

Path-speed decoupled planning

We can take Baidu Apollo EM planner as an example of a system that uses path-speed decoupled planning.

The EM planner significantly reduces computational complexity by transforming a three-dimensional station-lateral-speed problem into two two-dimensional problems: station-lateral and station-speed. At the core of Apollo’s EM planner is an iterative Expectation-Maximization (EM) step, consisting of path optimization and speed optimization. Each step is divided into an E-step (projection and formulation in a 2D state space) and an M-step (optimization in the 2D state space). The E-step involves projecting the 3D problem into either a Frenet SL frame or an ST speed tracking frame.

The M-step (maximization step) in both path and speed optimization involves solving non-convex optimization problems. For path optimization, this means deciding whether to nudge an object on the left or right side, while for speed optimization, it involves deciding whether to overtake or yield to a dynamic object crossing the path. The Apollo EM planner addresses these non-convex optimization challenges using a two-step process: Dynamic Programming (DP) followed by Quadratic Programming (QP).

DP uses a sampling or searching algorithm to generate a rough initial solution, effectively pruning the non-convex space into a convex space. QP then takes the coarse DP results as input and optimizes them within the convex space provided by DP. In essence, DP focuses on feasibility, and QP refines the solution to achieve optimality within the convex constraints.
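To give a feel for the DP half of this two-step process, here is a toy dynamic program over a station-lateral lattice. It is a sketch under simplifying assumptions, not Apollo's implementation: nodes are sampled lateral offsets at evenly spaced stations, colliding nodes are given as a set, and the cost is a hand-picked mix of offset from the reference line and lateral change between stations. The function and parameter names are hypothetical.

```python
def dp_coarse_path(n_stations, lateral_samples, blocked, start_l=0.0,
                   w_offset=1.0, w_change=1.0):
    """Coarse path over a lattice: one lateral sample per station index.

    blocked: set of (station_index, lateral_value) nodes that collide.
    Returns a list of lateral offsets, or None if every path is blocked.
    """
    INF = float("inf")
    n_lat = len(lateral_samples)

    def node_cost(i, l):
        return INF if (i, l) in blocked else w_offset * abs(l)

    # cost[i][j]: cheapest cost to reach lateral_samples[j] at station i.
    cost = [[0.0 if l == start_l else INF for l in lateral_samples]]
    parent = []
    for i in range(1, n_stations):
        row, back = [], []
        for l in lateral_samples:
            # Cheapest predecessor, including a penalty for lateral change.
            best_j = min(range(n_lat),
                         key=lambda j: cost[i - 1][j]
                         + w_change * abs(l - lateral_samples[j]))
            reach = cost[i - 1][best_j] + w_change * abs(l - lateral_samples[best_j])
            row.append(reach + node_cost(i, l))
            back.append(best_j)
        cost.append(row)
        parent.append(back)

    j = min(range(n_lat), key=lambda k: cost[-1][k])
    if cost[-1][j] == INF:
        return None
    # Backtrack from the cheapest end node to the start.
    path = [lateral_samples[j]]
    for i in range(n_stations - 1, 0, -1):
        j = parent[i - 1][j]
        path.append(lateral_samples[j])
    return path[::-1]


# An obstacle blocks the center and the right side at station index 2.
coarse = dp_coarse_path(5, [-1.0, 0.0, 1.0], blocked={(2, 0.0), (2, -1.0)})
```

The coarse result commits to passing on the left; a QP step would then smooth the path inside the corridor implied by that choice.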

In our defined terminology, Path DP corresponds to lateral BP, Path QP to lateral MP, Speed DP to longitudinal BP, and Speed QP to longitudinal MP. Thus, the process involves conducting BP (Behavior Planning) followed by MP (Motion Planning) in both the path and speed steps.

Joint spatiotemporal planning

Although decoupled planning can solve 95% of cases in autonomous driving, the remaining 5% involve challenging dynamic interactions where a decoupled solution often results in suboptimal trajectories. In these complex scenarios, demonstrating intelligence is crucial, making it a very hot topic in the field.

For example, in narrow-space passing, the optimal behavior might be to either decelerate to yield or accelerate to pass. Such behaviors are not achievable within the decoupled solution space and require joint optimization. Joint optimization allows for a more integrated approach, considering both path and speed simultaneously to handle intricate dynamic interactions effectively.

However, there are significant challenges in joint spatiotemporal planning. Firstly, solving the non-convex problem directly in a higher-dimensional state space is more difficult and time-consuming than using a decoupled solution. Secondly, considering interactions in spatiotemporal joint planning is even more complex. We’ll cover this topic in more detail later when we discuss decision making.

Here we introduce two solving methods: brute force search and constructing a spatiotemporal corridor for optimization.

Brute force search occurs directly in 3D spatiotemporal space (2D in space and 1D in time), and can be performed in either XYT (Cartesian) or SLT (Frenet) coordinates. We’ll take SLT as an example. SLT space is long and flat, similar to an energy bar. It is elongated in the L dimension and flat in the ST face. For brute force search, we can use hybrid A-star, with the cost being a combination of progress cost and cost to go. During optimization, we must conform to search constraints that prevent reversing in both the s and t dimensions.

Another method is constructing a spatiotemporal corridor, essentially a curve with the footprint of a car winding through a 3D spatiotemporal state space (SLT, for example). The SSC (spatiotemporal semantic corridor, RAL 2019) encodes requirements given by semantic elements into a semantic corridor, generating a safe trajectory accordingly. The semantic corridor consists of a series of mutually connected collision-free cubes with dynamical constraints posed by the semantic elements in the spatiotemporal domain. Within each cube, it becomes a convex optimization problem that can be solved using Quadratic Programming (QP).

SSC still requires a BP (Behavior Planning) module to provide a coarse driving trajectory. Complex semantic elements of the environment are projected into the spatiotemporal domain with respect to the reference lane. EPSILON (TRO 2021) showcases a system where SSC serves as the motion planner working in tandem with a behavior planner. In the next section, we’ll discuss behavior planning, especially focusing on interaction. In this context, behavior planning is usually referred to as decision making.

What and why?

Determination making in autonomous driving is basically habits planning, however with a concentrate on interplay with different site visitors brokers. The belief is that different brokers are principally rational and can reply to our habits in a predictable method, which we will describe as “noisily rational.”

Individuals might query the need of resolution making when superior planning instruments can be found. Nonetheless, two key elements — uncertainty and interplay — introduce a probabilistic nature to the atmosphere, primarily as a result of presence of dynamic objects. Interplay is essentially the most difficult a part of autonomous driving, distinguishing it from normal robotics. Autonomous automobiles should not solely navigate but in addition anticipate and react to the habits of different brokers, making strong decision-making important for security and effectivity.

In a deterministic (purely geometric) world with out interplay, resolution making can be pointless, and planning by means of looking, sampling, and optimization would suffice. Brute power looking within the 3D XYT area may function a normal answer.

In most classical autonomous driving stacks, a prediction-then-plan method is adopted, assuming zero-order interplay between the ego car and different automobiles. This method treats prediction outputs as deterministic, requiring the ego car to react accordingly. This results in overly conservative habits, exemplified by the “freezing robotic” drawback. In such instances, prediction fills all the spatiotemporal area, stopping actions like lane adjustments in crowded circumstances — one thing people handle extra successfully.

To handle stochastic strategies, the Markov Decision Process (MDP) or Partially Observable Markov Decision Process (POMDP) framework is essential. These approaches shift the focus from geometry to probability, addressing chaotic uncertainty. By assuming that traffic agents behave rationally, or at least noisily rationally, decision making can help create a safe driving corridor in the otherwise chaotic spatiotemporal space.

Among the three overarching goals of planning (safety, comfort, and efficiency), decision making primarily enhances efficiency. Conservative actions can maximize safety and comfort, but effective negotiation with other road agents, achievable through decision making, is essential for optimal efficiency. Effective decision making also displays intelligence.

MDP and POMDP

We will first introduce Markov Decision Processes (MDP) and Partially Observable Markov Decision Processes (POMDP), followed by their systematic solutions, such as value iteration and policy iteration.

A Markov Process (MP) is a type of stochastic process that deals with dynamic random phenomena, unlike static probability. In a Markov Process, the future state depends only on the current state, so the current state alone is sufficient for prediction. For autonomous driving, the relevant state may include only the last second of data, expanding the state space to allow for a shorter history window.

A Markov Decision Process (MDP) extends a Markov Process to include decision making by introducing actions. MDPs model decision making where outcomes are partly random and partly controlled by the decision maker or agent. An MDP can be modeled with five factors:

- State (S): The state of the environment.

- Action (A): The actions the agent can take to affect the environment.

- Reward (R): The reward the environment provides to the agent as a result of the action.

- Transition probability (P): The probability of transitioning from the old state to a new state upon the agent’s action.

- Gamma (γ): A discount factor for future rewards.

This is also the common framework used by reinforcement learning (RL), which is essentially an MDP. The goal of MDP or RL is to maximize the cumulative reward received in the long run. This requires the agent to make good decisions given a state from the environment, according to a policy.

A policy, π, is a mapping from each state, s ∈ S, and action, a ∈ A(s), to the probability π(a|s) of taking action a when in state s. MDP or RL studies the problem of how to derive the optimal policy.

A Partially Observable Markov Decision Process (POMDP) adds an extra layer of complexity by recognizing that states cannot be directly observed but are instead inferred through observations. In a POMDP, the agent maintains a belief, a probability distribution over possible states, to estimate the state of the environment. Autonomous driving scenarios are better represented by POMDPs due to their inherent uncertainties and the partial observability of the environment. An MDP can be considered a special case of a POMDP where the observation perfectly reveals the state.
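To make the idea of a belief concrete, here is a minimal sketch of a single Bayes update over a discrete hidden state. The intent labels, the prior, and the observation likelihoods are all made-up numbers for illustration, and the sketch assumes the hidden state does not change between observations (a full POMDP belief update would also apply the transition model).

```python
def update_belief(belief, likelihood):
    """One Bayes step: posterior(s) is proportional to P(observation | s) * prior(s)."""
    unnormalized = {s: belief[s] * likelihood[s] for s in belief}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the current belief")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical hidden state: will the other vehicle yield at the intersection?
prior = {"yield": 0.5, "not_yield": 0.5}
# Assumed observation model: probability of seeing the vehicle decelerate
# under each intent (illustrative values only).
p_decelerating = {"yield": 0.8, "not_yield": 0.2}

posterior = update_belief(prior, p_decelerating)
```

After observing deceleration, the belief shifts toward "yield"; the planner acts on this distribution rather than on a single assumed state.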

POMDPs can actively collect information, leading to actions that gather necessary data, demonstrating the intelligent behavior of these models. This capability is particularly valuable in scenarios like waiting at intersections, where gathering information about other vehicles’ intentions and the state of the traffic light is crucial for making safe and efficient decisions.

Value iteration and policy iteration are systematic methods for solving MDP or POMDP problems. While these methods are not commonly used in real-world applications due to their complexity, understanding them provides insight into exact solutions and how they can be simplified in practice, such as using MCTS in AlphaGo or MPDM in autonomous driving.

To find the best policy in an MDP, we must assess the potential or expected reward from a state, or more specifically, from an action taken in that state. This expected reward includes not just the immediate reward but also all future rewards, formally known as the return or cumulative discounted reward. (For a deeper understanding, refer to “Reinforcement Learning: An Introduction,” often considered the definitive guide on the subject.)
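As a small illustration of the return, the sketch below computes the cumulative discounted reward of one finite reward sequence. The function name and the sample rewards are illustrative assumptions, not something taken from the original post.

```python
def discounted_return(rewards, gamma):
    """Return r_0 + gamma * r_1 + gamma^2 * r_2 + ... for a finite reward sequence."""
    total = 0.0
    # Accumulate from the last reward backwards so each step applies one discount.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


example_return = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
```

With a discount of 0.5, later rewards count for progressively less, which is why the three unit rewards above sum to less than 3.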

The value function (V) characterizes the quality of a state as the expected return from that state. The action-value function (Q) assesses the quality of actions for a given state. Both functions are defined according to a given policy. The Bellman Optimality Equation states that an optimal policy will choose the action that maximizes the immediate reward plus the expected future rewards from the resulting new states. In simple terms, the Bellman Optimality Equation advises considering both the immediate reward and the future consequences of an action. For example, when switching jobs, consider not only the immediate pay raise (R) but also the future value of the new state (S’) that the new position offers.
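For reference, these relationships can be written out in standard textbook notation. Here R(s, a, s') denotes the reward for moving from s to s' under action a and P(s' | s, a) the transition probability; this particular notation is an assumption of this sketch, not the post’s own.

```latex
% Bellman (expectation) equation for a fixed policy \pi
V^{\pi}(s) = \sum_{a} \pi(a \mid s) \sum_{s'} P(s' \mid s, a)
             \left[ R(s, a, s') + \gamma V^{\pi}(s') \right]

% Bellman Optimality Equation for the state-value function
V^{*}(s) = \max_{a} \sum_{s'} P(s' \mid s, a)
           \left[ R(s, a, s') + \gamma V^{*}(s') \right]

% Bellman Optimality Equation for the action-value function
Q^{*}(s, a) = \sum_{s'} P(s' \mid s, a)
              \left[ R(s, a, s') + \gamma \max_{a'} Q^{*}(s', a') \right]
```

The only difference between the first two lines is that the policy-weighted sum over actions is replaced by a max.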

It is relatively straightforward to extract the optimal policy from the Bellman Optimality Equation once the optimal value function is available. But how do we find this optimal value function? This is where value iteration comes to the rescue.

Value iteration finds the best policy by repeatedly updating the value of each state until it stabilizes. This process is derived by turning the Bellman Optimality Equation into an update rule. Essentially, we use the optimal future picture to guide the iteration toward it. In plain language, “fake it until you make it!”

Value iteration is guaranteed to converge for finite state spaces, regardless of the initial values assigned to the states (for a detailed proof, please refer to the Bible of RL). If the discount factor gamma is set to 0, meaning we only consider immediate rewards, value iteration will converge after just one iteration. A smaller gamma leads to faster convergence because the horizon of consideration is shorter, though it may not always be the best option for solving concrete problems. Balancing the discount factor is a key aspect of engineering practice.
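The update rule can be sketched on a toy problem. The MDP below (a four-cell corridor with a goal at the right end, deterministic moves, and a reward of 1 for reaching the goal) is entirely made up for illustration; only the backup itself follows the Bellman Optimality Equation.

```python
# Toy MDP (illustrative assumption): cells 0..3, cell 3 is a terminal goal.
N_STATES = 4
GOAL = 3
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Deterministic transition model: returns (next_state, reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def value_iteration(gamma=0.9, tol=1e-9):
    values = [0.0] * N_STATES  # every state starts at zero
    while True:
        new_values = list(values)
        for s in range(N_STATES):
            if s == GOAL:
                continue  # terminal state keeps value 0
            # Bellman optimality backup: best immediate reward plus discounted value.
            new_values[s] = max(
                reward + gamma * values[next_state]
                for next_state, reward in (step(s, a) for a in ACTIONS)
            )
        delta = max(abs(n - o) for n, o in zip(new_values, values))
        values = new_values
        if delta < tol:
            return values


def greedy_policy(values, gamma=0.9):
    """Extract the policy that acts greedily with respect to the converged values."""
    def q(s, a):
        next_state, reward = step(s, a)
        return reward + gamma * values[next_state]
    return {s: max(ACTIONS, key=lambda a: q(s, a)) for s in range(N_STATES) if s != GOAL}


optimal_values = value_iteration()
optimal_policy = greedy_policy(optimal_values)
```

In this toy setup the value of the cell next to the goal is set first, and each further sweep pushes that information one cell further away, which is the propagation effect the text describes.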

One might ask how this works if all states are initialized to zero. The immediate reward in the Bellman Equation is crucial for bringing in additional information and breaking the initial symmetry. Think about the states that immediately lead to the goal state; their value propagates through the state space like a virus. In plain language, it is about making small wins, frequently.

However, value iteration also suffers from inefficiency. It requires taking the optimal action at each iteration by considering all possible actions, similar to Dijkstra’s algorithm. While it demonstrates feasibility as a basic approach, it is typically not practical for real-world applications.

Policy iteration improves on this by taking actions according to the current policy and updating it based on the Bellman Equation (not the Bellman Optimality Equation). Policy iteration decouples policy evaluation from policy improvement, making it a much faster solution. Each step is taken based on a given policy instead of exploring all possible actions to find the one that maximizes the objective. Although each iteration of policy iteration can be more computationally intensive due to the policy evaluation step, it generally results in faster convergence overall.
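The split between evaluation and improvement can be sketched as follows. The four-cell corridor MDP, the deliberately poor starting policy, and all function names are assumptions made for this example; it shows the structure of the algorithm rather than its speed advantage, which only appears on larger problems.

```python
# Toy MDP (illustrative assumption): cells 0..3, cell 3 is a terminal goal.
N_STATES, GOAL, ACTIONS = 4, 3, (-1, +1)


def step(state, action):
    """Deterministic transition model: returns (next_state, reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    return next_state, (1.0 if next_state == GOAL else 0.0)


def evaluate_policy(policy, gamma, tol=1e-9):
    """Policy evaluation: apply the Bellman (expectation) backup for a fixed policy."""
    values = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s == GOAL:
                continue
            next_state, reward = step(s, policy[s])  # only the policy's action
            new_value = reward + gamma * values[next_state]
            delta = max(delta, abs(new_value - values[s]))
            values[s] = new_value
        if delta < tol:
            return values


def improve_policy(values, gamma):
    """Policy improvement: act greedily with respect to the evaluated values."""
    def q(s, a):
        next_state, reward = step(s, a)
        return reward + gamma * values[next_state]
    return {s: max(ACTIONS, key=lambda a: q(s, a)) for s in range(N_STATES) if s != GOAL}


def policy_iteration(gamma=0.9):
    policy = {s: -1 for s in range(N_STATES) if s != GOAL}  # start by always moving left
    while True:
        values = evaluate_policy(policy, gamma)
        new_policy = improve_policy(values, gamma)
        if new_policy == policy:  # stable policy: evaluation and improvement agree
            return policy, values
        policy = new_policy


final_policy, final_values = policy_iteration()
```

Note that `evaluate_policy` never takes a max over actions; the max appears only in the improvement step.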

In simple terms, if you can only fully evaluate the consequence of one action, it is better to use your own judgment and do your best with the information currently available.

AlphaGo and MCTS: when nets meet trees

We have all heard the incredible story of AlphaGo beating the best human player in 2016. AlphaGo formulates the gameplay of Go as an MDP and solves it with Monte Carlo Tree Search (MCTS). But why not use value iteration or policy iteration?

Value iteration and policy iteration are systematic, iterative methods that solve MDP problems. However, even the improved policy iteration still requires time-consuming operations to update the value of every state. A standard 19×19 Go board has roughly 2e170 possible states. This vast number of states makes it intractable to solve with traditional value iteration or policy iteration techniques.

AlphaGo and its successors use a Monte Carlo tree search (MCTS) algorithm to find their moves, guided by a value network and a policy network, trained on both human and computer play. Let’s take a look at vanilla MCTS first.

Monte Carlo Tree Search (MCTS) is a method for policy estimation that focuses on decision making from the current state. One iteration involves a four-step process: selection, expansion, simulation (or evaluation), and backup.

- Selection: The algorithm follows the most promising path based on previous simulations until it reaches a leaf node, a position not yet fully explored.

- Expansion: One or more child nodes are added to represent possible next moves from the leaf node.

- Simulation (Evaluation): The algorithm plays out a random game from the new node until the end, known as a “rollout.” This assesses the potential outcome from the expanded node by simulating random moves until a terminal state is reached.

- Backup: The algorithm updates the values of the nodes on the path taken based on the game’s outcome. If the outcome is a win, the value of the nodes increases; if it is a loss, the value decreases. This process propagates the result of the rollout back up the tree, refining the policy based on simulated outcomes.

After a given number of iterations, MCTS provides the percentage frequency with which immediate actions were selected from the root during simulations. During inference, the action with the most visits is selected. Here is an interactive illustration of MCTS with the game of tic-tac-toe for simplicity.
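The four steps can be sketched in a few dozen lines. The example below uses a tiny Nim-style game (take 1 to 3 stones, whoever takes the last stone wins) instead of tic-tac-toe to keep it short, and uses the common UCT selection rule with an exploration constant of 1.4. The game, the constant, and the class and function names are all assumptions of this sketch.

```python
import math
import random


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones          # stones left for the player about to move
        self.parent = parent
        self.move = move              # move that led into this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0               # wins credited to the player who made `move`


def uct_child(node, c=1.4):
    """Selection rule: exploit the observed win rate, explore rarely visited children."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Random playout; True if the player to move at the start ends up winning."""
    turn = 0
    while stones > 0:
        stones -= rng.choice([m for m in (1, 2, 3) if m <= stones])
        if stones == 0:
            return turn == 0
        turn ^= 1
    return False  # no stones left: the previous mover already won


def mcts(root_stones, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(root_stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded and not terminal.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one child for an untried move.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: the player who moved into `node` wins if the player to move loses.
        mover_wins = not rollout(node.stones, rng)
        # 4. Backup: walk to the root, flipping the perspective at every level.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_wins else 0.0
            mover_wins = not mover_wins
            node = node.parent
    # Inference: pick the most visited move at the root.
    return max(root.children, key=lambda ch: ch.visits).move


best_move = mcts(5)
```

In this game the known winning strategy is to leave the opponent a multiple of four stones, which gives an easy sanity check for the search result.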

MCTS in AlphaGo is enhanced by two neural networks. The value network evaluates the winning rate from a given state (board configuration). The policy network evaluates the action distribution for all possible moves. These neural networks improve MCTS by reducing the effective depth and breadth of the search tree. The policy network helps in sampling actions, focusing the search on promising moves, while the value network provides a more accurate evaluation of positions, reducing the need for extensive rollouts. This combination allows AlphaGo to perform efficient and effective searches in the vast state space of Go.

In the expansion step, the policy network samples the most likely positions, effectively pruning the breadth of the search space. In the evaluation step, the value network provides an instinctive scoring of the position, while a faster, lightweight policy network performs rollouts until the game ends to collect rewards. MCTS then uses a weighted sum of the two evaluations to make the final assessment.
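The weighted sum can be sketched in one line. The AlphaGo paper mixes the value-network estimate and the rollout outcome with a parameter λ and reports that an even mix (λ = 0.5) worked best; the function and argument names below are illustrative, not taken from any released code.

```python
def leaf_value(value_net_estimate, rollout_outcome, mixing=0.5):
    """Blend the value network's estimate with the fast-rollout result.

    mixing = 0 trusts only the value network, mixing = 1 only the rollout.
    """
    return (1.0 - mixing) * value_net_estimate + mixing * rollout_outcome


# Hypothetical leaf: the value network predicts 0.6, the rollout ended in a win (1.0).
blended = leaf_value(0.6, 1.0)
```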

Note that a single evaluation of the value network approaches the accuracy of Monte Carlo rollouts using the RL policy network but with 15,000 times less computation. This mirrors the fast-slow system design, akin to intuition versus reasoning, or System 1 versus System 2 as described by Nobel laureate Daniel Kahneman. Similar designs can be observed in more recent works, such as DriveVLM.

To be exact, AlphaGo incorporates two slow-fast systems at different levels. At the macro level, the policy network selects moves while the faster rollout policy network evaluates these moves. At the micro level, the faster rollout policy network can be approximated by a value network that directly predicts the winning rate of board positions.

What can we learn from AlphaGo for autonomous driving? AlphaGo demonstrates the importance of extracting an excellent policy using a robust world model (simulation). Similarly, autonomous driving requires a highly accurate simulation to effectively leverage algorithms similar to those used by AlphaGo. This approach underscores the value of combining strong policy networks with detailed, precise simulations to enhance decision making and optimize performance in complex, dynamic environments.

In the game of Go, all states are immediately available to both players, making it a perfect information game where observation equals state. This allows the game to be characterized as an MDP. In contrast, autonomous driving is a POMDP, as the states can only be estimated through observation.

POMDPs connect perception and planning in a principled way. The typical solution for a POMDP is similar to that for an MDP, with a limited lookahead. However, the main challenges lie in the curse of dimensionality (explosion in state space) and the complex interactions with other agents. To make real-time progress tractable, domain-specific assumptions are typically made to simplify the POMDP problem.

MPDM (and the two follow-ups, and the white paper) is one pioneering study in this direction. MPDM reduces the POMDP to a closed-loop forward simulation of a finite, discrete set of semantic-level policies, rather than evaluating every possible control input for every vehicle. This approach addresses the curse of dimensionality by focusing on a manageable number of meaningful policies, allowing for effective real-time decision making in autonomous driving scenarios.

The assumptions of MPDM are twofold. First, much of the decision making by human drivers involves discrete high-level semantic actions (e.g., slowing, accelerating, lane-changing, stopping). These actions are referred to as policies in this context. The second implicit assumption concerns other agents: other vehicles will make reasonably safe decisions. Once a vehicle’s policy is decided, its action (trajectory) is determined.

MPDM first selects one policy for the ego vehicle from many options (hence the “multi-policy” in its name) and selects one policy for each nearby agent based on their respective predictions. It then performs forward simulation (similar to a fast rollout in MCTS). The best interaction scenario after evaluation is then passed on to motion planning, such as the Spatiotemporal Semantic Corridor (SSC) mentioned in the joint spatiotemporal planning section.
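The overall loop can be sketched in one dimension. This is not the MPDM authors’ implementation: the policy sets, the constant-acceleration dynamics, the gap-based braking reaction, the cost terms, and every number below are assumptions chosen only to show the structure (enumerate ego policies, pair each with weighted agent policies, forward-simulate in closed loop, pick the lowest expected cost).

```python
# Hypothetical semantic policies, each reduced to a constant acceleration (m/s^2).
EGO_POLICIES = {"accelerate": 1.0, "keep_speed": 0.0, "slow_down": -2.0}
# Hypothetical lead-vehicle policies: name -> (acceleration, assumed probability).
AGENT_POLICIES = {"keep_speed": (0.0, 0.7), "brake": (-3.0, 0.3)}


def simulate(ego_accel, agent_accel, horizon=8.0, dt=0.5):
    """Closed-loop forward simulation of the ego behind one lead vehicle."""
    ego_pos, ego_speed = 0.0, 15.0
    agent_pos, agent_speed = 30.0, 12.0
    min_gap = agent_pos - ego_pos
    for _ in range(int(horizon / dt)):
        gap = agent_pos - ego_pos
        # Closed loop: the ego reacts to the simulated gap at every step
        # (a crude stand-in for a proper car-following model).
        accel = -4.0 if gap < 15.0 else ego_accel
        ego_speed = max(0.0, ego_speed + accel * dt)
        agent_speed = max(0.0, agent_speed + agent_accel * dt)
        ego_pos += ego_speed * dt
        agent_pos += agent_speed * dt
        min_gap = min(min_gap, agent_pos - ego_pos)
    return ego_pos, min_gap


def scenario_cost(progress, min_gap):
    """Illustrative cost: large penalty for near-collision, reward for progress."""
    collision_penalty = 1000.0 if min_gap <= 2.0 else 0.0
    return collision_penalty - progress


def select_policy():
    expected_cost = {}
    for name, ego_accel in EGO_POLICIES.items():
        # Expected cost over the assumed distribution of agent policies.
        expected_cost[name] = sum(
            prob * scenario_cost(*simulate(ego_accel, agent_accel))
            for agent_accel, prob in AGENT_POLICIES.values()
        )
    best = min(expected_cost, key=expected_cost.get)
    return best, expected_cost


best_policy, costs = select_policy()
```

The tree here is one layer deep: each ego policy is held for the whole horizon, which is the limitation that later work such as EUDM relaxes.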

MPDM enables intelligent and human-like behavior, such as actively cutting into dense traffic flow even when no sufficient gap is present. This is not possible with a predict-then-plan pipeline, which does not explicitly consider interactions. The prediction module in MPDM is tightly integrated with the behavior planning model through forward simulation.

MPDM assumes a single policy throughout the decision horizon (10 seconds). Essentially, MPDM adopts an MCTS approach that is one layer deep and super wide, considering all possible agent predictions. This leaves room for improvement, inspiring many follow-up works such as EUDM, EPSILON, and MARC. For example, EUDM considers more flexible ego policies and assigns a policy tree with a depth of four, with each policy covering a duration of 2 seconds over an 8-second decision horizon. To compensate for the extra computation induced by the increased tree depth, EUDM performs more efficient width pruning by guided branching, identifying critical scenarios and key vehicles. This approach explores a more balanced policy tree.

The forward simulation in MPDM and EUDM uses very simplistic driver models (Intelligent Driver Model, or IDM, for longitudinal simulation, and Pure Pursuit, or PP, for lateral simulation). MPDM points out that high-fidelity realism matters less than the closed-loop nature itself, as long as policy-level decisions are not affected by low-level action execution inaccuracies.
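Both driver models are compact enough to write down. The sketch below uses the standard textbook forms of IDM and Pure Pursuit; the default parameter values (desired speed, time headway, wheelbase, and so on) are illustrative assumptions and not the settings used in MPDM or EUDM. Clamping the dynamic part of the desired gap at zero is a common implementation variant.

```python
import math


def idm_acceleration(v, v_lead, gap, v_desired=30.0, time_headway=1.5,
                     min_gap=2.0, a_max=1.0, b_comf=2.0, delta=4.0):
    """Intelligent Driver Model: longitudinal acceleration when following a leader.

    v, v_lead in m/s; gap is the bumper-to-bumper distance in m (must be > 0).
    """
    closing_speed = v - v_lead
    dynamic_part = v * time_headway + v * closing_speed / (2.0 * math.sqrt(a_max * b_comf))
    desired_gap = min_gap + max(0.0, dynamic_part)
    return a_max * (1.0 - (v / v_desired) ** delta - (desired_gap / gap) ** 2)


def pure_pursuit_steering(alpha, lookahead, wheelbase=2.8):
    """Pure Pursuit: steering angle (rad) toward a lookahead point on the path.

    alpha is the angle between the vehicle heading and the line to the lookahead
    point (rad); lookahead is the distance to that point (m).
    """
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


# Hypothetical snapshot: ego at 20 m/s, leader at 15 m/s, 25 m ahead.
accel = idm_acceleration(v=20.0, v_lead=15.0, gap=25.0)
steer = pure_pursuit_steering(alpha=0.1, lookahead=10.0)
```

In a forward simulation, each simulated vehicle would call these once per time step, with the policy (for example lane keeping versus lane changing) deciding which leader and which path the models track.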

Contingency planning in the context of autonomous driving involves generating multiple potential trajectories to account for various possible future scenarios. A key motivating example is that experienced drivers anticipate multiple future scenarios and always keep a safe backup plan. This anticipatory approach leads to a smoother driving experience, even when vehicles perform sudden cut-ins into the ego lane.

A critical aspect of contingency planning is deferring the decision bifurcation point. This means delaying the point at which different potential trajectories diverge, allowing the ego vehicle more time to gather information and respond to different outcomes. By doing so, the vehicle can make more informed decisions, resulting in smoother and more confident driving behaviors, similar to those of an experienced driver.

MARC also combines behavior planning and motion planning. This extends the notion and utility of forward simulation. In other words, MPDM and EUDM still use a policy tree for high-level behavior planning and rely on other motion planning pipelines such as semantic spatiotemporal corridors (SSC), because ego motion in the policy tree is still characterized by heavily quantized behavior buckets. MARC extends this by keeping the quantized behavior for agents other than ego but using more refined motion planning directly in the forward rollout. In a way it is a hybrid approach, where hybrid carries a similar meaning to that in hybrid A*, a mix of discrete and continuous.

One possible drawback of MPDM and all its follow-up works is their reliance on simple policies designed for highway-like structured environments, such as lane keeping and lane changing. This reliance may limit the capability of forward simulation to handle complex interactions. To address this, following the example of MPDM, the key to making POMDPs more effective is to simplify the action and state space through the growth of a high-level policy tree. It might be possible to create a more flexible policy tree, for example, by enumerating spatiotemporal relative position tags to all relative objects and then performing guided branching.

Decision making remains a hot topic in current research. Even classical optimization methods have not been fully explored yet. Machine learning methods could shine and have a disruptive impact, especially with the advent of Large Language Models (LLMs), empowered by techniques like Chain of Thought (CoT) or Monte Carlo Tree Search (MCTS).

Trees

Trees are systematic ways to perform decision making. Tesla AI Day 2021 and 2022 showcased their decision-making capabilities, heavily influenced by AlphaGo and the subsequent MuZero, to address highly complex interactions.

At a high level, Tesla’s approach follows behavior planning (decision making) followed by motion planning. It searches for a convex corridor first and then feeds it into continuous optimization, using spatiotemporal joint planning. This approach effectively addresses scenarios such as narrow passing, a typical bottleneck for path-speed decoupled planning.

Tesla also adopts a hybrid system that combines data-driven and physics-based checks. Starting with defined goals, Tesla’s system generates seed trajectories and evaluates key scenarios. It then branches out to create more scenario variants, such as asserting or yielding to a traffic agent. Such an interaction search over the policy tree is showcased in the 2021 and 2022 presentations.

One highlight of Tesla’s use of machine learning is the acceleration of tree search via trajectory optimization. For each node, Tesla uses physics-based optimization and a neural planner, achieving a 10 ms vs. 100 µs time frame, which results in a 10x to 100x improvement. The neural network is trained with expert demonstrations and offline optimizers.

Trajectory scoring is performed by combining classical physics-based checks (such as collision checks and comfort analysis) with neural network evaluators that predict intervention likelihood and rate human-likeness. This scoring helps prune the search space, focusing computation on the most promising outcomes.

While many argue that machine learning should be applied to high-level decision making, Tesla uses ML fundamentally to accelerate optimization and, consequently, tree search.

The Monte Carlo Tree Search (MCTS) method seems to be an ultimate tool for decision making. Interestingly, those studying Large Language Models (LLMs) are trying to incorporate MCTS into LLMs, while those working on autonomous driving are attempting to replace MCTS with LLMs.

As of roughly two years ago, Tesla’s technology followed this approach. However, since March 2024, Tesla’s Full Self-Driving (FSD) has switched to a more end-to-end approach, significantly different from their earlier methods.

We can still consider interactions without implicitly growing trees. Ad-hoc logic can be implemented to perform one-order interaction between prediction and planning. Even one-order interaction can already generate good behavior, as demonstrated by TuSimple. MPDM, in its original form, is essentially one-order interaction, but executed in a more principled and extendable way.

TuSimple has also demonstrated the capability to perform contingency planning, similar to the approach proposed in MARC (though MARC can also accommodate a customized risk preference).

After learning the basic building blocks of classical planning systems, including behavior planning, motion planning, and the principled way to handle interaction through decision making, I have been reflecting on potential bottlenecks in the system and how machine learning (ML) and neural networks (NN) may help. I am documenting my thought process here for future reference and for others who may have similar questions. Note that the information in this section may contain personal biases and speculations.

Let’s look at the problem from three different perspectives: in the existing modular pipeline, as an end-to-end (e2e) NN planner, or as an e2e autonomous driving system.

Going back to the drawing board, let’s review the problem formulation of a planning system in autonomous driving. The goal is to obtain a trajectory that ensures safety, comfort, and efficiency in a highly uncertain and interactive environment, all while adhering to real-time engineering constraints onboard the vehicle. These factors are summarized as goals, environments, and constraints in the chart below.

Uncertainty in autonomous driving can refer to uncertainty in perception (observation) and in predicting long-term agent behaviors into the future. Planning systems must also handle the uncertainty in future trajectory predictions of other agents. As discussed earlier, a principled decision-making system is an effective way to manage this.

Additionally, a typically overlooked aspect is that planning must tolerate uncertain, imperfect, and sometimes incomplete perception results, especially in the current age of vision-centric and HD map-less driving. Having a Standard Definition (SD) map onboard as a prior helps alleviate this uncertainty, but it still poses significant challenges to a heavily handcrafted planner system. This perception uncertainty was considered a solved problem by Level 4 (L4) autonomous driving companies through the heavy use of Lidar and HD maps. However, it has resurfaced as the industry moves toward mass production autonomous driving solutions without these two crutches. An NN planner is more robust and can handle largely imperfect and incomplete perception results, which is key to mass production vision-centric and HD-mapless Advanced Driver Assistance Systems (ADAS).

Interaction should be treated with a principled decision-making system such as Monte Carlo Tree Search (MCTS) or a simplified version of MPDM. The main challenge is dealing with the curse of dimensionality (combinatorial explosion) by growing a balanced policy tree with smart pruning through domain knowledge of autonomous driving. MPDM and its variants, in both academia and industry (e.g., Tesla), provide good examples of how to grow this tree in a balanced way.

NNs can also enhance the real-time performance of planners by speeding up motion planning optimization. This can shift the compute load from CPU to GPU, achieving orders of magnitude speedup. A tenfold increase in optimization speed can fundamentally impact high-level algorithm design, such as MCTS.

Trajectories also need to be more human-like. Human likeness and takeover predictors can be trained with the vast amount of human driving data available. It is more scalable to increase the compute pool than to maintain a growing army of engineering talent.

An end-to-end (e2e) neural network (NN) planner still constitutes a modular autonomous driving (AD) design, accepting structured perception results (and potentially latent features) as its input. This approach combines prediction, decision, and planning into a single network. Companies such as DeepRoute (2022) and Huawei (2024) claim to utilize this method. Note that relevant raw sensor inputs, such as navigation and ego vehicle information, are omitted here.

This e2e planner can be further developed into an end-to-end autonomous driving system that combines both perception and planning. This is what Wayve’s LINGO-2 (2024) and Tesla’s FSDv12 (2024) claim to achieve.

The benefits of this approach are twofold. First, it addresses perception issues. There are many aspects of driving that we cannot easily model explicitly with commonly used perception interfaces. For example, it is quite challenging to handcraft a driving system to nudge around a puddle of water or slow down for dips or potholes. While passing intermediate perception features might help, it may not fundamentally resolve the issue.

Additionally, emergent behavior will likely help resolve corner cases more systematically. The intelligent handling of edge cases, such as the examples above, may result from the emergent behavior of large models.

My speculation is that, in its ultimate form, the end-to-end (e2e) driver would be a large vision and action-native multimodal model enhanced by Monte Carlo Tree Search (MCTS), assuming no computational constraints.

A world model in autonomous driving, as of the 2024 consensus, is typically a multimodal model covering at least vision and action modes (or a VA model). While language can be beneficial for accelerating training, adding controllability, and providing explainability, it is not essential. In its fully developed form, a world model would be a VLA (vision-language-action) model.

There are at least two approaches to developing a world model:

- Video-Native Model: Train a model to predict future video frames, conditioned on or outputting accompanying actions, as demonstrated by models like GAIA-1.

- Multimodality Adaptors: Start with a pretrained Large Language Model (LLM) and add multimodality adaptors, as seen in models like Lingo-2, RT2, or ApolloFM. These multimodal LLMs are not native to vision or action but require significantly fewer training resources.

A world model can produce a policy itself through the action output, allowing it to drive the vehicle directly. Alternatively, MCTS can query the world model and use its policy outputs to guide the search. This World Model-MCTS approach, while much more computationally intensive, could have a higher ceiling in handling corner cases due to its explicit reasoning logic.

Can we do with out prediction?

Most current motion prediction modules represent the future trajectories of agents other than the ego vehicle as one or multiple discrete trajectories. It remains a question whether this prediction-planning interface is sufficient or necessary.

In a classical modular pipeline, prediction is still needed. However, a predict-then-plan pipeline definitely caps the upper limit of autonomous driving systems, as discussed in the decision-making section. A more critical question is how to integrate this prediction module more effectively into the overall autonomous driving stack. Prediction should aid decision making, and a queryable prediction module within an overall decision-making framework, such as MPDM and its variants, is preferred. There are no severe issues with concrete trajectory predictions as long as they are integrated correctly, such as through policy tree rollouts.

Another issue with prediction is that open-loop Key Performance Indicators (KPIs), such as Average Displacement Error (ADE) and Final Displacement Error (FDE), are not effective metrics as they fail to reflect the impact on planning. Instead, metrics like recall and precision at the intent level should be considered.
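For readers less familiar with these two metrics, here is a minimal sketch of how they are commonly computed for a single predicted trajectory against ground truth. The function name and the sample points are made up for illustration.

```python
import math


def displacement_errors(predicted, ground_truth):
    """Return (ADE, FDE) for two equal-length lists of (x, y) waypoints.

    ADE averages the point-wise Euclidean error over the horizon;
    FDE is the error at the final waypoint only.
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    distances = [
        math.hypot(px - gx, py - gy)
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]
    return sum(distances) / len(distances), distances[-1]


# Hypothetical case: the prediction goes straight while the agent drifts sideways.
predicted_path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
actual_path = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
ade, fde = displacement_errors(predicted_path, actual_path)
```

Both numbers are purely geometric: a small lateral error that changes whether the agent cuts in and a harmless one of the same size score identically, which is the limitation the text points out.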

In an end-to-end system, an explicit prediction module may not be necessary, but implicit supervision, along with other domain knowledge from a classical stack, can definitely help or at least boost the data efficiency of the learning system. Evaluating the prediction behavior, whether explicit or implicit, will also be helpful in debugging such an e2e system.

Conclusions first. For an assistant, neural networks (nets) can achieve very high, even superhuman performance. For agents, I believe that using a tree structure is still beneficial (though not necessarily a must).

First of all, trees can boost nets. Trees enhance the performance of a given network, whether it is NN-based or not. In AlphaGo, even with a policy network trained via supervised learning and reinforcement learning, the overall performance was still inferior to the MCTS-based AlphaGo, which integrates the policy network as one component.

Second, nets can distill trees. In AlphaGo, MCTS used both a value network and the reward from a fast rollout policy network to evaluate a node (state or board position) in the tree. The AlphaGo paper also mentioned that while a value function alone could be used, combining the results of the two yielded the best results. The value network essentially distilled the knowledge from the policy rollout by directly learning the state-value pair. This is akin to how humans distill the logical thinking of the slow System 2 into the fast, intuitive responses of System 1. Daniel Kahneman, in his book “Thinking, Fast and Slow,” describes how a chess master can quickly recognize patterns and make rapid decisions after years of practice, whereas a novice would require significant effort to achieve similar results. Similarly, the value network in AlphaGo was trained to provide a fast evaluation of a given board position.

Recent papers explore the upper limits of this fast system with neural networks. The “chess without search” paper demonstrates that with sufficient data (prepared through tree search using a conventional algorithm), it’s possible to achieve grandmaster-level proficiency. There is a clear “scaling law” related to data size and model size, indicating that as the amount of data and the complexity of the model increase, so does the proficiency of the system.

So here we are with a power duo: trees boost nets, and nets distill trees. This positive feedback loop is essentially what AlphaZero uses to bootstrap itself to reach superhuman performance in multiple games.
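
The loop can be illustrated with a deliberately tiny toy, which is not AlphaZero itself. Everything here is an assumption made for illustration: a lookup table stands in for the net, a one-ply negamax search stands in for MCTS, and the game is a simple stone-taking game (take 1 to 3 stones, whoever takes the last stone wins).

```python
def search(n, depth, table, max_take=3):
    """Depth-limited negamax; value is from the view of the player to move.

    The "tree" looks ahead `depth` plies, then falls back on the table
    (the "net") for a fast, possibly wrong estimate.
    """
    if n == 0:
        return -1.0  # no stones left: the previous player took the last one
    if depth == 0:
        return table[n]
    return max(-search(n - m, depth - 1, table, max_take)
               for m in range(1, min(max_take, n) + 1))

def distill(table, depth=1):
    """Nets distill trees: relabel every state with the search result."""
    return [search(n, depth, table) for n in range(len(table))]

def accuracy(table):
    """Fraction of states matching the known solution of this toy game
    (the player to move loses exactly when n is a multiple of 4)."""
    truth = [-1.0 if n % 4 == 0 else 1.0 for n in range(len(table))]
    return sum(t == v for t, v in zip(truth, table)) / len(table)

table = [0.0] * 13  # untrained "net": no opinion about any position
history = []
for _ in range(12):
    table = distill(table)          # search on top of the current table
    history.append(accuracy(table))  # the table improves round after round
```

Each round, the search is right about positions the table alone still gets wrong (trees boost nets), and writing the search results back into the table makes the next round’s search start from a better estimate (nets distill trees). In a real system the table would be a neural network trained on the search targets, and the search would be MCTS over self-play games.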

The same principles apply to the development of large language models (LLMs). For games, since we have clearly defined rewards as wins or losses, we can use forward rollout to determine the value of a certain action or state. For LLMs, the rewards are not as clear-cut as in the game of Go, so we rely on human preferences to rate the models via reinforcement learning from human feedback (RLHF). However, with models like ChatGPT already trained, we can use supervised fine-tuning (SFT), which is essentially imitation learning, to distill smaller yet still powerful models without RLHF.

Returning to the original question, nets can achieve extremely high performance with large quantities of high-quality data. This could be good enough for an assistant, depending on the tolerance for errors, but it may not be sufficient for an autonomous agent. For systems targeting driving assistance (ADAS), nets via imitation learning may be good enough.

Trees can significantly boost the performance of nets with an explicit reasoning loop, making them perhaps more suitable for fully autonomous agents. The extent of the tree or reasoning loop depends on the return on investment of engineering resources. For example, even one order of interaction can provide substantial benefits, as demonstrated in TuSimple AI Day.

From the summary below of the hottest representatives of AI systems, we can see that LLMs are not designed to perform decision-making. In essence, LLMs are trained to complete documents, and even SFT-aligned LLM assistants treat dialogues as a special type of document (completing a dialogue record).

I don’t fully agree with recent claims that LLMs are slow systems (System 2). They are unnecessarily slow in inference due to hardware constraints, but in their vanilla form, LLMs are fast systems as they cannot perform counterfactual checks. Prompting techniques such as Chain of Thought (CoT) or Tree of Thoughts (ToT) are actually simplified forms of MCTS, making LLMs function more like slower systems.

There is extensive research trying to integrate full-blown MCTS with LLMs. Specifically, LLM-MCTS (NeurIPS 2023) treats the LLM as a commonsense “world model” and uses LLM-induced policy actions as a heuristic to guide the search. LLM-MCTS outperforms both MCTS alone and policies induced by LLMs by a wide margin for complex, novel tasks. The highly speculated Q-star from OpenAI seems to follow the same approach of boosting LLMs with MCTS, as the name suggests.

Below is a rough evolution of the planning stack in autonomous driving. It is rough because the listed solutions are not necessarily more advanced than the ones above them, and their debut may not follow the exact chronological order. Nonetheless, we can observe general trends. Note that the listed representative solutions from the industry are based on my interpretation of various press releases and could be subject to error.

One trend is the movement towards a more end-to-end design with more modules consolidated into one. We see the stack evolve from path-speed decoupled planning to joint spatiotemporal planning, and from a predict-then-plan system to a joint prediction and planning system. Another trend is the increasing incorporation of machine learning-based components, especially in the last three stages. These two trends converge towards an end-to-end NN planner (without perception) or even an end-to-end NN driver (with perception).

- ML as a Tool: Machine learning is a tool, not a standalone solution. It can assist with planning even in current modular designs.

- Full Formulation: Start with a full problem formulation, then make reasonable assumptions to balance performance and resources. This helps create a clear path for a future-proof system design and allows for improvements as resources increase. Recall the transition from the POMDP formulation to engineering solutions like AlphaGo’s MCTS and MPDM.

- Adapting Algorithms: Theoretically beautiful algorithms (e.g., Dijkstra and Value Iteration) are great for understanding concepts but need adaptation for practical engineering (Value Iteration is to MCTS as Dijkstra’s algorithm is to Hybrid A-star).

- Deterministic vs. Stochastic: Planning excels in resolving deterministic (not necessarily static) scenes. Decision-making in stochastic scenes is the most challenging task toward full autonomy.

- Contingency Planning: This can help merge multiple futures into a common action. It’s beneficial to be aggressive to the degree that you can always resort to a backup plan.

- End-to-end Models: Whether an end-to-end model can solve full autonomy remains unclear. It may still need classical methods like MCTS. Neural networks can handle assistants, while trees can manage agents.

- End-To-End Planning of Autonomous Driving in Industry and Academia: 2022–2023, arXiv 2024

- BEVGPT: Generative Pre-trained Large Model for Autonomous Driving Prediction, Decision-Making, and Planning, AAAI 2024

- Towards A General-Purpose Motion Planning for Autonomous Vehicles Using Fluid Dynamics

- TuSimple AI Day, in Chinese with English subtitles on Bilibili, 2023/07

- Tech blog on joint spatiotemporal planning by Qcraft, in Chinese on Zhihu, 2022/08

- A review of the entire autonomous driving stack, in Chinese on Zhihu, 2018/12

- Tesla AI Day Planning, in Chinese on Zhihu, 2022/10

- Technical blog on ApolloFM, in Chinese by Tsinghua AIR, 2024

- Optimal Trajectory Generation for Dynamic Street Scenarios in a Frenet Frame, ICRA 2010

- MP3: A Unified Model to Map, Perceive, Predict and Plan, CVPR 2021

- NMP: End-to-end Interpretable Neural Motion Planner, CVPR 2019 oral

- Lift, Splat, Shoot: Encoding Images From Arbitrary Camera Rigs by Implicitly Unprojecting to 3D, ECCV 2020

- CoverNet: Multimodal Behavior Prediction using Trajectory Sets, CVPR 2020

- Baidu Apollo EM Motion Planner, Baidu, 2018

- AlphaGo: Mastering the game of Go with deep neural networks and tree search, Nature 2016

- AlphaZero: A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play, Science 2017

- MuZero: Mastering Atari, Go, chess and shogi by planning with a learned model, Nature 2020

- ToT: Tree of Thoughts: Deliberate Problem Solving with Large Language Models, NeurIPS 2023 Oral

- CoT: Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, NeurIPS 2022

- LLM-MCTS: Large Language Models as Commonsense Knowledge for Large-Scale Task Planning, NeurIPS 2023

- MPDM: Multipolicy decision-making in dynamic, uncertain environments for autonomous driving, ICRA 2015

- MPDM2: Multipolicy Decision-Making for Autonomous Driving via Changepoint-based Behavior Prediction, RSS 2015

- MPDM3: Multipolicy decision-making for autonomous driving via changepoint-based behavior prediction: Theory and experiment, RSS 2017

- EUDM: Efficient Uncertainty-aware Decision-making for Automated Driving Using Guided Branching, ICRA 2020

- MARC: Multipolicy and Risk-aware Contingency Planning for Autonomous Driving, RAL 2023

- EPSILON: An Efficient Planning System for Automated Vehicles in Highly Interactive Environments, TRO 2021