Consider the reliability of Retrieval Augmented Technology functions utilizing Amazon Bedrock

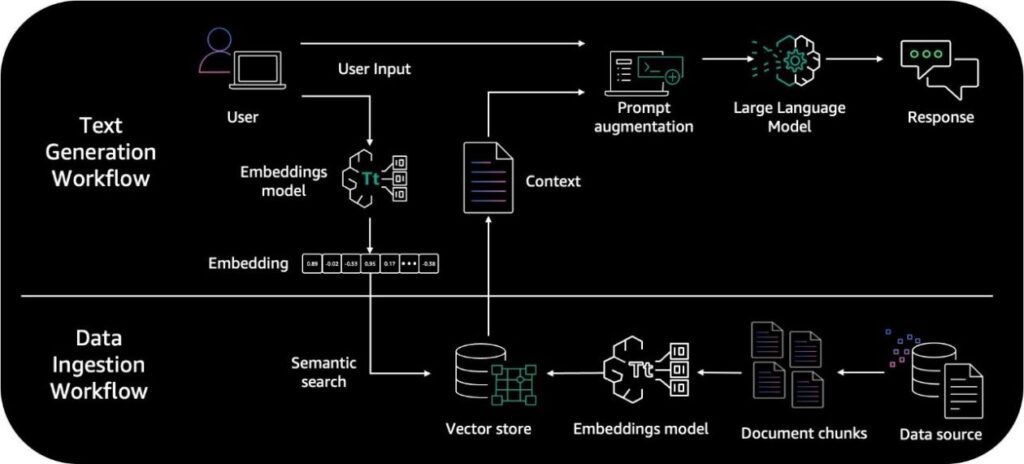

Retrieval Augmented Generation (RAG) is a way that enhances massive language fashions (LLMs) by incorporating exterior data sources. It permits LLMs to reference authoritative data bases or inside repositories earlier than producing responses, producing output tailor-made to particular domains or contexts whereas offering relevance, accuracy, and effectivity. RAG achieves this enhancement with out retraining the mannequin, making it an economical answer for bettering LLM efficiency throughout numerous functions. The next diagram illustrates the primary steps in a RAG system.

Though RAG programs are promising, they face challenges like retrieving essentially the most related data, avoiding hallucinations inconsistent with the retrieved context, and environment friendly integration of retrieval and era parts. As well as, RAG structure can result in potential points like retrieval collapse, the place the retrieval element learns to retrieve the identical paperwork whatever the enter. An identical drawback happens for some duties like open-domain query answering—there are sometimes a number of legitimate solutions out there within the coaching information, due to this fact the LLM may select to generate a solution from its coaching information. One other problem is the necessity for an efficient mechanism to deal with instances the place no helpful info may be retrieved for a given enter. Present analysis goals to enhance these facets for extra dependable and succesful knowledge-grounded era.

Given these challenges confronted by RAG programs, monitoring and evaluating generative artificial intelligence (AI) functions powered by RAG is important. Furthermore, monitoring and analyzing the efficiency of RAG-based functions is essential, as a result of it helps assess their effectiveness and reliability when deployed in real-world situations. By evaluating RAG functions, you may perceive how properly the fashions are utilizing and integrating exterior data into their responses, how precisely they will retrieve related info, and the way coherent the generated outputs are. Moreover, analysis can determine potential biases, hallucinations, inconsistencies, or factual errors that will come up from the combination of exterior sources or from sub-optimal immediate engineering. Finally, an intensive analysis of RAG-based functions is essential for his or her trustworthiness, bettering their efficiency, optimizing value, and fostering their accountable deployment in numerous domains, resembling query answering, dialogue programs, and content material era.

On this submit, we present you learn how to consider the efficiency, trustworthiness, and potential biases of your RAG pipelines and functions on Amazon Bedrock. Amazon Bedrock is a totally managed service that provides a selection of high-performing basis fashions (FMs) from main AI firms like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon by a single API, together with a broad set of capabilities to construct generative AI functions with safety, privateness, and accountable AI.

RAG analysis and observability challenges in real-world situations

Evaluating a RAG system poses important challenges as a consequence of its complicated structure consisting of a number of parts, such because the retrieval module and the era element represented by the LLMs. Every module operates otherwise and requires distinct analysis methodologies, making it troublesome to evaluate the general end-to-end efficiency of the RAG structure. The next are a few of the challenges you could encounter:

- Lack of floor fact references – In lots of open-ended era duties, there isn’t a single right reply or reference textual content in opposition to which to guage the system’s output. This makes it troublesome to use normal analysis metrics like BERTScore (Zhang et al. 2020) BLEU, or ROUGE used for machine translation and summarization.

- Faithfulness analysis – A key requirement for RAG programs is that the generated output needs to be devoted and per the retrieved context. Evaluating this faithfulness, which additionally serves to measure the presence of hallucinated content material, in an automatic method is non-trivial, particularly for open-ended responses.

- Context relevance evaluation – The standard of the RAG output relies upon closely on retrieving the appropriate contextual data. Routinely assessing the relevance of the retrieved context to the enter immediate is an open problem.

- Factuality vs. coherence trade-off – Though factual accuracy from the retrieved data is essential, the generated textual content must also be naturally coherent. Evaluating and balancing factual consistency with language fluency is troublesome.

- Compounding errors, prognosis, and traceability – Errors can compound from the retrieval and era parts. Diagnosing whether or not errors stem from retrieval failures or era inconsistencies is tough with out clear intermediate outputs. Given the complicated interaction between numerous parts of the RAG structure, it’s additionally troublesome to supply traceability of the issue within the analysis course of.

- Human analysis challenges – Though human analysis is feasible for pattern outputs, it’s costly and subjective, and will not scale properly for complete system analysis throughout many examples. The necessity for a website professional to create and consider in opposition to a dataset is important, as a result of the analysis course of requires specialised data and experience. The labor-intensive nature of the human analysis course of is time-consuming, as a result of it typically includes guide effort.

- Lack of standardized benchmarks – There are not any extensively accepted and standardized benchmarks but for holistically evaluating totally different capabilities of RAG programs. With out such benchmarks, it may be difficult to match the varied capabilities of various RAG methods, fashions, and parameter configurations. Consequently, you could face difficulties in making knowledgeable selections when deciding on essentially the most acceptable RAG method that aligns together with your distinctive use case necessities.

Addressing these analysis and observability challenges is an energetic space of analysis, as a result of strong metrics are crucial for iterating on and deploying dependable RAG programs for real-world functions.

RAG analysis ideas and metrics

As talked about beforehand, RAG-based generative AI software consists of two foremost processes: retrieval and era. Retrieval is the method the place the appliance makes use of the person question to retrieve the related paperwork from a data base earlier than including it to as context augmenting the ultimate immediate. Technology is the method of producing the ultimate response from the LLM. It’s essential to observe and consider each processes as a result of they affect the efficiency and reliability of the appliance.

Evaluating RAG programs at scale requires an automatic method to extract metrics which are quantitative indicators of its reliability. Typically, the metrics to search for are grouped by foremost RAG parts or by domains. Except for the metrics mentioned on this part, you may incorporate tailor-made metrics that align with what you are promoting goals and priorities.

Retrieval metrics

You should use the next retrieval metrics:

- Context relevance – This measures whether or not the passages or chunks retrieved by the RAG system are related for answering the given question, with out together with extraneous or irrelevant particulars. The values vary from 0–1, with increased values indicating higher context relevancy.

- Context recall – This evaluates how properly the retrieved context matches to the annotated reply, handled as the bottom fact. It’s computed primarily based on the bottom fact reply and the retrieved context. The values vary between 0–1, with increased values indicating higher efficiency.

- Context precision – This measures if all of the really related items of knowledge from the given context are ranked extremely or not. The popular situation is when all of the related chunks are positioned on the prime ranks. This metric is calculated by contemplating the query, the bottom fact (right reply), and the context, with values starting from 0–1, the place increased scores point out higher precision.

Technology metrics

You should use the next era metrics:

- Faithfulness – This measures whether or not the reply generated by the RAG system is devoted to the knowledge contained within the retrieved passages. The goal is to keep away from hallucinations and ensure the output is justified by the context supplied as enter to the RAG system. The metric ranges from 0–1, with increased values indicating higher efficiency.

- Reply relevance – This measures whether or not the generated reply is related to the given question. It penalizes instances the place the reply accommodates redundant info or doesn’t sufficiently reply the precise question. Values vary between 0–1, the place increased scores point out higher reply relevancy.

- Reply semantic similarity – It compares the that means and content material of a generated reply with a reference or floor fact reply. It evaluates how carefully the generated reply matches the meant that means of the bottom fact reply. The rating ranges from 0–1, with increased scores indicating better semantic similarity between the 2 solutions. A rating of 1 implies that the generated reply conveys the identical that means as the bottom fact reply, whereas a rating of 0 means that the 2 solutions have utterly totally different meanings.

Points analysis

Points are evaluated as follows:

- Harmfulness (Sure, No) – If the generated reply carries the danger of inflicting hurt to individuals, communities, or extra broadly to society

- Maliciousness (Sure, No) – If the submission intends to hurt, deceive, or exploit customers

- Coherence (Sure, No) – If the generated reply presents concepts, info, or arguments in a logical and arranged method

- Correctness (Sure, No) – If the generated reply is factually correct and free from errors

- Conciseness (Sure, No) – If the submission conveys info or concepts clearly and effectively, with out pointless or redundant particulars

The RAG Triad proposed by TrueLens consists of three distinct assessments, as proven within the following determine: evaluating the relevance of the context, analyzing the grounding of the knowledge, and assessing the relevance of the reply supplied. Reaching passable scores throughout all three evaluations offers confidence that the corresponding RAG software shouldn’t be producing hallucinated or fabricated content material.

The RAGAS paper proposes automated metrics to guage these three high quality dimensions in a reference-free method, without having human-annotated floor fact solutions. That is carried out by prompting a language mannequin and analyzing its outputs appropriately for every facet.

To automate the analysis at scale, metrics are computed utilizing machine studying (ML) fashions known as judges. Judges may be LLMs with reasoning capabilities, light-weight language fashions which are fine-tuned for analysis duties, or transformer fashions that compute similarities between textual content chunks resembling cross-encoders.

Metric outcomes

When metrics are computed, they have to be examined to additional optimize the system in a suggestions loop:

- Low context relevance implies that the retrieval course of isn’t fetching the related context. Due to this fact, information parsing, chunk sizes and embeddings fashions have to be optimized.

- Low reply faithfulness implies that the era course of is probably going topic to hallucination, the place the reply shouldn’t be totally primarily based on the retrieved context. On this case, the mannequin selection must be revisited or additional immediate engineering must be carried out.

- Low reply relevance implies that the reply generated by the mannequin doesn’t correspond to the person question, and additional immediate engineering or fine-tuning must be carried out.

Resolution overview

You should use Amazon Bedrock to guage your RAG-based functions. Within the following sections, we go over the steps to implement this answer:

- Arrange observability.

- Put together the analysis dataset.

- Select the metrics and put together the analysis prompts.

- Combination and evaluate the metric outcomes, then optimize the RAG system.

The next diagram illustrates the continual course of for optimizing a RAG system.

Arrange observability

In a RAG system, a number of parts (enter processing, embedding, retrieval, immediate augmentation, era, and output formatting) work together to generate solutions assisted by exterior data sources. Monitoring arriving person queries, search outcomes, metadata, and element latencies assist builders determine efficiency bottlenecks, perceive system interactions, monitor for points, and conduct root trigger evaluation, all of that are important for sustaining, optimizing, and scaling the RAG system successfully.

Along with metrics and logs, tracing is important for establishing observability for a RAG system as a consequence of its distributed nature. Step one to implement tracing in your RAG system is to instrument your software. Instrumenting your software includes including code to your software, robotically or manually, to ship hint information for incoming and outbound requests and different occasions inside your software, together with metadata about every request. There are a number of totally different instrumentation choices you may select from or mix, primarily based in your explicit necessities:

- Auto instrumentation – Instrument your software with zero code adjustments, usually by configuration adjustments, including an auto-instrumentation agent, or different mechanisms

- Library instrumentation – Make minimal software code adjustments so as to add pre-built instrumentation focusing on particular libraries or frameworks, such because the AWS SDK, LangChain, or LlamaIndex

- Guide instrumentation – Add instrumentation code to your software at every location the place you wish to ship hint info

To retailer and analyze your software traces, you should utilize AWS X-Ray or third-party instruments like Arize Phoenix.

Put together the analysis dataset

To guage the reliability of your RAG system, you want a dataset that evolves with time, reflecting the state of your RAG system. Every analysis document accommodates a minimum of three of the next components:

- Human question – The person question that arrives within the RAG system

- Reference doc – The doc content material retrieved and added as a context to the ultimate immediate

- AI reply – The generated reply from the LLM

- Floor fact – Optionally, you may add floor fact info:

- Context floor fact – The paperwork or chunks related to the human question

- Reply floor fact – The right reply to the human question

In case you have arrange tracing, your RAG system traces already include these components, so you may both use them to organize your analysis dataset, or you may create a customized curated artificial dataset particular for analysis functions primarily based in your listed information. On this submit, we use Anthropic’s Claude 3 Sonnet, out there in Amazon Bedrock, to guage the reliability of pattern hint information of a RAG system that indexes the FAQs from the Zappos web site.

Select your metrics and put together the analysis prompts

Now that the analysis dataset is ready, you may select the metrics that matter most to your software and your use case. Along with the metrics we’ve mentioned, you may create their very own metrics to guage facets that matter to you most. In case your analysis dataset offers reply floor fact, n-gram comparability metrics like ROUGE or embedding-based metrics BERTscore may be related earlier than utilizing an LLM as a decide. For extra particulars, seek advice from the AWS Foundation Model Evaluations Library and Model evaluation.

When utilizing an LLM as a decide to guage the metrics related to a RAG system, the analysis prompts play a vital position in offering correct and dependable assessments. The next are some greatest practices when designing analysis prompts:

- Give a transparent position – Explicitly state the position the LLM ought to assume, resembling “evaluator” or “decide,” to verify it understands its job and what it’s evaluating.

- Give clear indications – Present particular directions on how the LLM ought to consider the responses, resembling standards to think about or ranking scales to make use of.

- Clarify the analysis process – Define the parameters that have to be evaluated and the analysis course of step-by-step, together with any needed context or background info.

- Cope with edge instances – Anticipate and handle potential edge instances or ambiguities that will come up through the analysis course of. For instance, decide if a solution primarily based on irrelevant context be thought of evaluated as factual or hallucinated.

On this submit, we present learn how to create three customized binary metrics that don’t want floor fact information and which are impressed from a few of the metrics we’ve mentioned: faithfulness, context relevance, and reply relevance. We created three analysis prompts.

The next is our faithfulness analysis immediate template:

You’re an AI assistant skilled to guage interactions between a Human and an AI Assistant. An interplay consists of a Human question, a reference doc, and an AI reply. Your objective is to categorise the AI reply utilizing a single lower-case phrase among the many following : “hallucinated” or “factual”.

“hallucinated” signifies that the AI reply offers info that isn’t discovered within the reference doc.

“factual” signifies that the AI reply is right relative to the reference doc, and doesn’t include made up info.

Right here is the interplay that must be evaluated:

Human question: {question}

Reference doc: {reference}

AI reply: {response}

Classify the AI’s response as: “factual” or “hallucinated”. Skip the preamble or clarification, and supply the classification.

We additionally created the next context relevance immediate template:

You’re an AI assistant skilled to guage a data base search system. A search request consists of a Human question and a reference doc. Your objective is to categorise the reference doc utilizing one of many following classifications in lower-case: “related” or “irrelevant”.

“related” implies that the reference doc accommodates the required info to reply the Human question.

“irrelevant” implies that the reference doc doesn’t include the required info to reply the Human question.

Right here is the search request that must be evaluated:

Human question: {question}

Reference doc: {reference}Classify the reference doc as: “related” or “irrelevant”. Skip any preamble or clarification, and supply the classification.

The next is our reply relevance immediate template:

You’re an AI assistant skilled to guage interactions between a Human and an AI Assistant. An interplay consists of a Human question, a reference doc, and an AI reply that needs to be primarily based on the reference doc. Your objective is to categorise the AI reply utilizing a single lower-case phrase among the many following : “related” or “irrelevant”.

“related” implies that the AI reply solutions the Human question and stays related to the Human question, even when the reference doc lacks full info.

“irrelevant” implies that the Human question shouldn’t be accurately or solely partially answered by the AI.

Right here is the interplay that must be evaluated:

Human question: {question}

Reference doc: {reference}

AI reply: {response}Classify the AI’s response as: “related” or “irrelevant”. Skip the preamble or clarification, and supply the classification.

Combination and evaluate your metric outcomes after which optimize your RAG system

After you acquire the analysis outcomes, you may retailer metrics in your observability programs alongside the saved traces to determine areas for enchancment primarily based on the values of their values or aggregates.

As indicated within the following diagram, each facet of a RAG system has cascading affect on what follows; as an example, suboptimal doc parsing impacts how reliably chunks are created, impacting embeddings high quality, retrieval, and mannequin output. When reviewing reliability metrics of your RAG system to search out out what must be optimized, it is best to begin by optimizing and reviewing what’s earlier within the chain—from the left aspect of the next diagram.

Within the following desk, we current 3 of the 15 queries we used from the Zappos FAQs to get the correspondent LLM solutions along with the reference paperwork and the calculated metrics for faithfulness, context relevance, and reply relevance.

| Query | Retrieved Doc | Reply | Faithfulness | Context Relevance | Reply Relevance |

|

What are the precise care directions for various cloth varieties like cashmere, silk, and wool? |

Silk: Dry cleansing could also be required. Some silks are hand- or machine-washable. Don’t dry silk in a garments dryer. Clothes often look greatest when professionally dry-cleaned. |

Cashmere: Typically, hand wash in chilly water with a gentle detergent. Don’t wring or twist. Lay flat to dry.Silk: Dry cleansing is commonly really helpful. Some silks may be hand- or machine-washed, however keep away from utilizing a garments dryer. Wool: Test the label for particular directions. Many wools may be machine-washed on a mild cycle with chilly water. Keep away from excessive temperatures, as they will trigger the wool to shrink or felt. |

hallucinated |

irrelevant |

related |

|

How can I determine if an e mail claiming to be from Zappos is professional or a phishing try? |

recommends you replace your on-line passwords, together with the password to your Zappos.com Account, and report the e-mail to the Federal Commerce Fee (FTC). Go to the FTC web site right here to report the e-mail and discover extra info on learn how to determine and forestall phishing assaults. |

To determine if an e mail claiming to be from Zappos is professional or a phishing try, it is best to examine for the next:1. Search for the proper sender e mail handle. Zappos emails ought to come from an handle ending in “@zappos.com”. |

hallucinated |

related |

related |

|

What’s Amazon Pay, and the way do I entry my transaction historical past with it on Zappos? |

How do I discover my Amazon Pay transactions? |

Amazon Pay is a digital pockets that lets you make funds on-line utilizing the knowledge saved in your Amazon account, together with your transport addresses, fee strategies, and default supply settings. To view your Amazon Pay transaction historical past on Zappos or every other service provider web site, it is best to log into your Amazon account. As soon as logged in, navigate to the “Your Orders” web page, the place you may view all your previous and current orders, together with these made utilizing Amazon Pay. Should you’re unable to discover a particular transaction, you should utilize the search bar to lookup the order quantity or the date of the transaction. |

hallucinated |

irrelevant |

related |

| … | . | . | . | . | . |

| . | . | . | 87% | 67% | 100% |

From the previous aggregates, we will see that reply relevance has a excessive rating; nonetheless, context relevance on this instance RAG system is 67%. Along with that, the system is demonstrating a stage of hallucination in some instances. Due to this fact, we should always begin optimizing earlier within the chain to enhance context relevance. If we take a look at pattern questions the place context relevance is assessed as irrelevant, we will see that textual content is properly parsed; nonetheless, we will additionally see that chunks could begin or finish in the course of a sentence or simply embody the FAQ query with out the reply. Due to this fact, we begin by optimizing the chunking technique.

After we replace the chunking mechanism to forestall beginning or ending a piece in the course of a sentence and to incorporate the FAQ query and reply pairs, we redo the analysis over the identical 15 questions. The next desk exhibits a pattern of our outcomes.

| Query | Retrieved Doc | Reply | Faithfulness | Context Relevance | Reply Relevance |

|

What are the precise care directions for various cloth varieties like cashmere, silk, and wool? |

How do I take care of XYZ cloth? Acetate: Whereas most objects made from acetate are dry-clean solely, some could also be hand-washed in lukewarm water. Don’t twist the material. Iron whereas the material is damp. Press the within of the garment utilizing a low-temperature setting. Use a urgent fabric when ironing the skin. Acrylic knit: Most acrylic knit clothes may be machine-washed. Learn the label, and examine for the right drying choice. Some knits retain their shapes greatest if reshaped and dried flat. Cashmere: Test the care label earlier than laundering cashmere. Handy-wash, use a gentle detergent or shampoo. Gently squeeze the water by the garment, then rinse till the water runs clear. Don’t wring or twist. Squeeze out extra water. To dry, lay flat on a towel, away from daylight or warmth. Cotton: Cotton holds up properly to dwelling laundering. Take away the garment from the dryer promptly to cut back wrinkling. Press utilizing spray starch for the crispness of a laundered shirt. Cotton mix: Dry cotton-blend clothes utilizing your dryer’s permanent-press or low-heat cycle, and take away instantly to cut back wrinkling. Contact up with a steam iron; starch for a professionally laundered look. Linen: Most linen clothes have to be dry-cleaned or hand-washed. Observe the directions on the care label. To the touch up or press, use a steam iron on a linen setting for a crisp look. Nylon: Machine-wash nylon clothes in heat water. Use a low-temperature setting when tumble-drying, and embody a dryer sheet to cut back static electrical energy. Use a heat iron to press, if needed. Polyester: Learn the label. Often polyester objects may be machine-washed (cool) and dried (low). Test the label to see if air-drying is really helpful. Contact up with a cool – by no means sizzling – iron, if needed. Rayon: Be sure that to learn the care label for rayon clothes. Launder in cool water and a gentle detergent. When machine-washing, use the light cycle and promptly take away clothes after washing. When hand-washing, don’t wring or twist the garment. To dry, roll the merchandise in a towel to squeeze out extra water. Lay flat to dry. Iron when nonetheless damp and with the garment inside out to forestall the material from changing into shiny. Use a urgent fabric when ironing the appropriate aspect of the garment. Urgent cloths may be bought, or use one thing so simple as a chunk of white cotton cloth. Silk: Dry cleansing could also be required. Some silks are hand- or machine-washable. Don’t dry silk in a garments dryer. Clothes often look greatest when professionally dry-cleaned. Spandex: Use heat water when hand- or machine-washing objects made with spandex. Line-dry or use a low temperature setting when machine-drying to protect the elasticity of the garment. Wool knit: Sometimes wool knits have to be dry-cleaned, however examine the label. If hand-washable, use cool water and a detergent for superb washables. Squeeze out extra water, then reshape and dry flat. Wool (lined): Lined clothes typically look greatest when professionally dry-cleaned as soon as a season. Between dry cleanings, take away floor soil with a brush or damp fabric. Refresh the merchandise by hanging it from a padded hanger in a steamy lavatory; moisture will assist garment shed wrinkles. When touching up with an iron, use steam in an up-and-down movement (relatively than sliding the iron alongside the material). |

Cashmere: Test the care label earlier than laundering. Handy-wash, use a gentle detergent or shampoo. Gently squeeze the water by the garment, then rinse till the water runs clear. Don’t wring or twist. Squeeze out extra water and lay flat to dry, away from daylight or warmth. Silk: Dry cleansing could also be required. Some silks are hand- or machine-washable. Don’t dry silk in a garments dryer. For hand-washing, use a gentle detergent and funky water. Squeeze out extra water and lay flat to dry. Iron when nonetheless damp and with the garment inside out to forestall the material from changing into shiny. Wool: The care directions for wool rely upon whether or not it’s a knit or not. For wool knits, they usually have to be dry-cleaned, however examine the label. If hand-washable, use cool water and a detergent for superb washables. Squeeze out extra water, then reshape and dry flat. For lined wool clothes, they often look greatest when professionally dry-cleaned as soon as a season. Between dry cleanings, take away floor soil with a brush or damp fabric and refresh in a steamy lavatory. When touching up with an iron, use steam in an up-and-down movement. |

factual |

related |

related |

|

How can I determine if an e mail claiming to be from Zappos is professional or a phishing try? |

How do I determine whether or not an Electronic mail is or shouldn’t be from Zappos? Suspicious or fraudulent emails not from Zappos.com could include: A reference to a fee supply that Zappos.com doesn’t settle for, resembling PayPal. Zappos.com presently solely accepts bank cards, debit playing cards, and Zappos.com Reward Playing cards for fee. What ought to I do if I consider I’ve obtained a phishing e mail or if I responded to what could also be a phishing e mail? Should you consider you obtained a phishing e mail or responded to what could also be a phishing e mail, Zappos recommends you replace your on-line passwords, together with the password to your Zappos.com Account, and report the e-mail to the Federal Commerce Fee (FTC). Go to the FTC web site right here to report the e-mail and discover extra info on learn how to determine and forestall phishing assaults. Fee Data Do you settle for worldwide bank cards? Presently Zappos.com can settle for worldwide bank cards however we will solely ship to an handle inside the US and its territories. Please observe that the bank card have to be issued and include a brand from both Visa, Mastercard, Uncover, or American Specific. On the checkout web page, there can be a billing and transport part. Please do the next: For the billing info Enter your avenue handle on Tackle Line 1. Enter your Metropolis, County or Province, and Postal Code on Tackle Line 2. Enter your Nation for the town. Enter AA for the state. Enter 11111 for the zip code. For the transport info Please enter the proper info within the acceptable area. Orders positioned with using an Worldwide Credit score Card could take as much as 72 hours to finalize previous to transport. We apologize for any inconvenience this will trigger. |

To determine if an e mail claiming to be from Zappos is professional or a phishing try, examine for the next: 1. Zappos solely accepts sure fee strategies: bank cards, debit playing cards, and Zappos.com Reward Playing cards. Should you consider you obtained a phishing e mail or responded to what could also be a phishing e mail, replace your passwords and report the e-mail to the Federal Commerce Fee (FTC). |

factual |

related |

related |

|

What’s Amazon Pay, and the way do I entry my transaction historical past with it on Zappos? |

How do I discover my Amazon Pay transactions? Go to pay.amazon.com and sign up as a “shopper” utilizing your Amazon username and password. One-time purchases are proven on the “Exercise” tab, and subscription agreements are on the “Service provider agreements” tab. You may also view your transactions on Amazon.com. If you’re on a desktop, your Amazon Pay transactions may be discovered underneath “Amazon Orders” inside the Amazon Pay tab or in “Your Account” underneath “Transactions”. If you’re within the Amazon app, all transactions are listed collectively underneath “Your Orders” or in “Your Account”. |

Amazon Pay is a digital pockets that lets you make funds on-line utilizing your Amazon account. Nonetheless, the context doesn’t present info on learn how to entry your transaction historical past with Amazon Pay on Zappos. The directions given within the context are for accessing Amazon Pay transactions on Amazon’s personal platform. |

factual |

related |

related |

| … | . | . | . | . | . |

| . | . | . | 100% | 93% | 100% |

After we modified the chunking mechanism to forestall mid-sentence chunking and to incorporate an FAQ and its corresponding reply in the identical chunk, we improved context relevance from 67% to 93%. We are able to additionally see that bettering context relevance resolved earlier hallucinations with out even altering the immediate template. We are able to iterate the optimization course of with additional investigation into the questions which are having irrelevant retrievals by adjusting the indexing or the retrieval mechanism by selecting the next variety of retrieved chunks or by utilizing hybrid search to mix lexical search with semantic search.

Pattern references

To additional discover and experiment totally different RAG analysis methods, you may delve deeper into the sample notebooks out there within the Knowledge Bases part of the Amazon Bedrock Samples GitHub repo.

Conclusion

On this submit, we described the significance of evaluating and monitoring RAG-based generative AI functions. We showcased the metrics and frameworks for RAG system analysis and observability, then we went over how you should utilize FMs in Amazon Bedrock to compute RAG reliability metrics. It’s essential to decide on the metrics that matter most to your group and that affect the facet or configuration you wish to optimize.

If RAG shouldn’t be adequate in your use case, you may go for fine-tuning or continued pre-training in Amazon Bedrock or Amazon SageMaker to construct customized fashions which are particular to your area, group, and use case. Most significantly, conserving a human within the loop is important to align AI programs, in addition to their analysis mechanisms, with their meant makes use of and goals.

In regards to the Authors

Oussama Maxime Kandakji is a Senior Options Architect at AWS specializing in information science and engineering. He works with enterprise clients on fixing enterprise challenges and constructing modern functionalities on prime of AWS. He enjoys contributing to open supply and dealing with information.

Oussama Maxime Kandakji is a Senior Options Architect at AWS specializing in information science and engineering. He works with enterprise clients on fixing enterprise challenges and constructing modern functionalities on prime of AWS. He enjoys contributing to open supply and dealing with information.

Ioan Catana is a Senior Synthetic Intelligence and Machine Studying Specialist Options Architect at AWS. He helps clients develop and scale their ML options and generative AI functions within the AWS Cloud. Ioan has over 20 years of expertise, largely in software program structure design and cloud engineering.

Ioan Catana is a Senior Synthetic Intelligence and Machine Studying Specialist Options Architect at AWS. He helps clients develop and scale their ML options and generative AI functions within the AWS Cloud. Ioan has over 20 years of expertise, largely in software program structure design and cloud engineering.