REST: A Stress-Testing Framework for Evaluating Multi-Downside Reasoning in Massive Reasoning Fashions

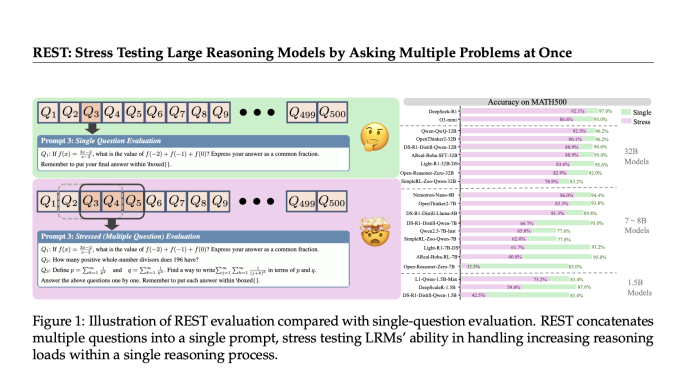

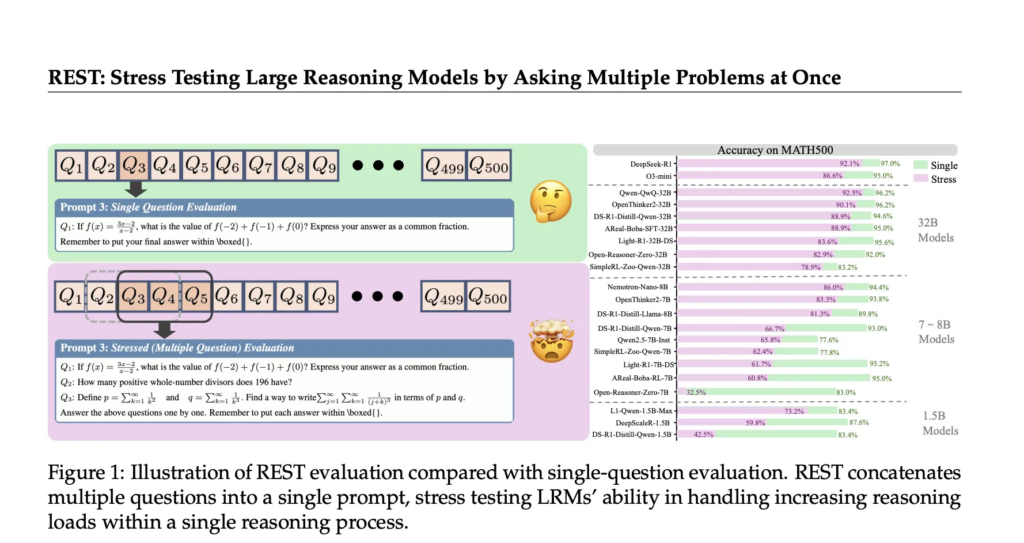

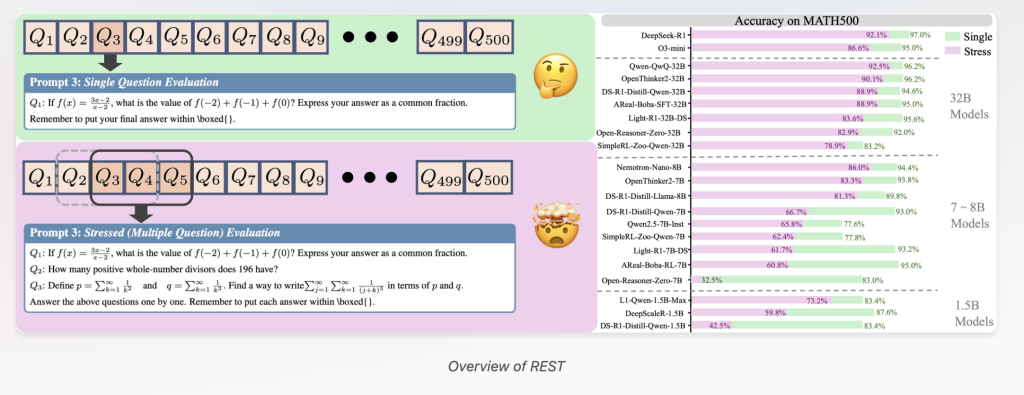

Massive Reasoning Fashions (LRMs) have quickly superior, exhibiting spectacular efficiency in advanced problem-solving duties throughout domains like arithmetic, coding, and scientific reasoning. Nonetheless, present analysis approaches primarily deal with single-question testing, which reveals vital limitations. This text introduces REST (Reasoning Analysis by means of Simultaneous Testing) — a novel multi-problem stress-testing framework designed to push LRMs past remoted problem-solving and higher replicate their real-world multi-context reasoning capabilities.

Why Present Analysis Benchmarks Fall Brief for Massive Reasoning Fashions

Most present benchmarks, reminiscent of GSM8K and MATH, consider LRMs by asking one query at a time. Whereas efficient for preliminary mannequin improvement, this remoted query strategy faces two crucial drawbacks:

- Lowering Discriminative Energy: Many state-of-the-art LRMs now obtain near-perfect scores on well-liked benchmarks (e.g., DeepSeek-R1 reaching 97% accuracy on MATH500). These saturated outcomes make it more and more tough to differentiate true mannequin enhancements, forcing the costly, steady creation of tougher datasets to distinguish capabilities.

- Lack of Actual-World Multi-Context Analysis: Actual-world purposes — like instructional tutoring, technical assist, or multitasking AI assistants — require reasoning throughout a number of, probably interfering questions concurrently. Single-question testing doesn’t seize these dynamic, multi-problem challenges that replicate true cognitive load and reasoning robustness.

Introducing REST: Stress-Testing LRMs with A number of Issues at As soon as

To deal with these challenges, researchers from Tsinghua College, OpenDataLab, Shanghai AI Laboratory, and Renmin College developed REST, a easy but highly effective analysis methodology that concurrently assessments LRMs on a number of questions bundled right into a single immediate.

- Multi-Query Benchmark Reconstruction: REST repurposes present benchmarks by concatenating a number of questions into one immediate, adjusting the stress degree parameter that controls what number of questions are offered concurrently.

- Complete Analysis: REST evaluates crucial reasoning competencies past primary problem-solving — together with contextual precedence allocation, cross-problem interference resistance, and dynamic cognitive load administration.

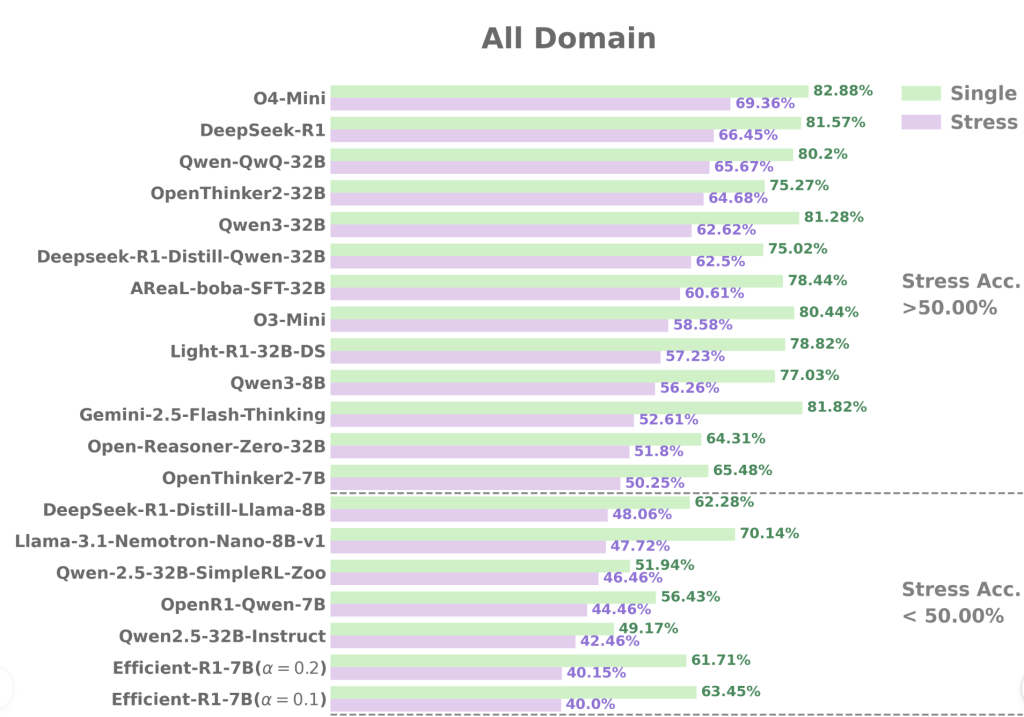

- Extensive Applicability: The framework is validated on 34 superior LRMs starting from 1.5 billion to 671 billion parameters, examined on 7 various benchmarks throughout various problem ranges (from easy GSM8K to difficult AIME and GPQA).

REST Reveals Key Insights About LRM Reasoning Talents

The REST analysis uncovers a number of groundbreaking findings:

1. Important Efficiency Degradation Beneath Multi-Downside Stress

Even state-of-the-art LRMs like DeepSeek-R1 present notable accuracy drops when dealing with a number of questions collectively. For instance, DeepSeek-R1’s accuracy on difficult benchmarks like AIME24 falls by practically 30% beneath REST in comparison with remoted query testing. This contradicts prior assumptions that giant language fashions are inherently able to effortlessly multitasking throughout issues.

2. Enhanced Discriminative Energy Amongst Comparable Fashions

REST dramatically amplifies the variations between fashions with near-identical single-question scores. On MATH500, as an illustration:

- R1-7B and R1-32B obtain shut single-question accuracies of 93% and 94.6%, respectively.

- Beneath REST, R1-7B’s accuracy plummets to 66.75% whereas R1-32B maintains a excessive 88.97%, revealing a stark 22% efficiency hole.

Equally, amongst same-sized fashions like AReaL-boba-RL-7B and OpenThinker2-7B, REST captures vital variations in multi-problem dealing with skills that single-question evaluations masks.

3. Publish-Coaching Strategies Might Not Assure Strong Multi-Downside Reasoning

Fashions fine-tuned with reinforcement studying or supervised tuning on single-problem reasoning usually fail to protect their benefits in REST’s multi-question setting. This requires rethinking coaching methods to optimize reasoning robustness beneath lifelike multi-context situations.

4. “Long2Short” Coaching Enhances Efficiency Beneath Stress

Fashions skilled with “long2short” methods — which encourage concise and environment friendly reasoning chains — preserve increased accuracy beneath REST. This means a promising avenue for designing fashions higher suited to simultaneous multi-problem reasoning.

How REST Stimulates Reasonable Reasoning Challenges

By rising the cognitive load on LRMs by means of simultaneous drawback presentation, REST simulates real-world calls for the place reasoning programs should dynamically prioritize, keep away from overthinking one drawback, and resist interference from concurrent duties.

REST additionally systematically analyzes error sorts, revealing widespread failure modes reminiscent of:

- Query Omission: Ignoring later questions in a multi-question immediate.

- Abstract Errors: Incorrectly summarizing solutions throughout issues.

- Reasoning Errors: Logical or calculation errors inside the reasoning course of.

These nuanced insights are largely invisible in single-question assessments.

Sensible Analysis Setup and Benchmark Protection

- REST evaluated 34 LRMs spanning sizes from 1.5B to 671B parameters.

- Benchmarks examined embody:

- Easy: GSM8K

- Medium: MATH500, AMC23

- Difficult: AIME24, AIME25, GPQA Diamond, LiveCodeBench

- Mannequin era parameters are set based on official pointers, with output token limits of 32K for reasoning fashions.

- Utilizing the standardized OpenCompass toolkit ensures constant, reproducible outcomes.

Conclusion: REST as a Future-Proof, Reasonable LRM Analysis Paradigm

REST constitutes a major leap ahead in evaluating giant reasoning fashions by:

- Addressing Benchmark Saturation: Revitalizes present datasets with out costly full replacements.

- Reflecting Actual-World Multi-Activity Calls for: Checks fashions beneath lifelike, excessive cognitive load situations.

- Guiding Mannequin Growth: Highlights the significance of coaching strategies like Long2Short to mitigate overthinking and encourage adaptive reasoning focus.

In sum, REST paves the best way for extra dependable, sturdy, and application-relevant benchmarking of next-generation reasoning AI programs.

Take a look at the Paper, Project Page and Code. All credit score for this analysis goes to the researchers of this mission. SUBSCRIBE NOW to our AI E-newsletter

Sajjad Ansari is a ultimate yr undergraduate from IIT Kharagpur. As a Tech fanatic, he delves into the sensible purposes of AI with a deal with understanding the influence of AI applied sciences and their real-world implications. He goals to articulate advanced AI ideas in a transparent and accessible method.