Human-AI Collaboration in Bodily Duties – Machine Studying Weblog | ML@CMU

TL;DR: At SmashLab, we’re creating an clever assistant that makes use of the sensors in a smartwatch to assist bodily duties comparable to cooking and DIY. This weblog put up explores how we use much less intrusive scene understanding—in comparison with cameras—to allow useful, context-aware interactions for job execution of their each day lives.

Desirous about AI assistants for duties past simply the digital world? Every single day, we carry out many duties, together with cooking, crafting, and medical self-care (just like the COVID-19 self-test equipment), which contain a collection of discrete steps. Precisely executing all of the steps may be tough; once we strive a brand new recipe, for instance, we would have questions at any step and may make errors by skipping necessary steps or doing them within the flawed order.

This venture, Procedural Interplay from Sensing Module (PrISM), goals to assist customers in executing these sorts of duties by way of dialogue-based interactions. Through the use of sensors comparable to a digicam, wearable gadgets like a smartwatch, and privacy-preserving ambient sensors like a Doppler Radar, an assistant can infer the consumer’s context (what they’re doing inside the job) and supply contextually located assist.

To realize human-like help, we should contemplate many issues: how does the agent perceive the consumer’s context? How ought to it reply to consumer’s spontaneous questions? When ought to it determine to intervene proactively? And most significantly, how do each human customers and AI assistants evolve collectively by way of on a regular basis interactions?

Whereas completely different sensing platforms (e.g., cameras, LiDAR, Doppler Radars, and many others.) can be utilized in our framework, we concentrate on a smartwatch-based assistant within the following. The smartwatch is chosen for its ubiquity, minimal privateness considerations in comparison with camera-based techniques, and functionality for monitoring a consumer throughout numerous each day actions.

Monitoring Consumer Actions with Multimodal Sensing

Human Exercise Recognition (HAR) is a method to determine consumer exercise contexts from sensors. For instance, a smartwatch has movement and audio sensors to detect completely different each day actions comparable to hand washing and chopping greens [1]. Nonetheless, out of the field, state-of-the-art HAR struggles from noisy knowledge and less-expressive actions which might be usually a part of each day life duties.

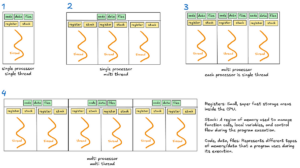

PrISM-Tracker (IMWUT’22) [2] improves monitoring by including state transition data, that’s, how customers transition from one step to a different and the way lengthy they normally spend at every step. The tracker makes use of an prolonged model of the Viterbi algorithm [3] to stabilize the frame-by-frame HAR prediction.

As proven within the above determine, PrISM-Tracker improves the accuracy of frame-by-frame monitoring. Nonetheless, the general accuracy is round 50-60%, highlighting the problem of utilizing only a smartwatch to exactly monitor the process state on the body degree. Nonetheless, we are able to develop useful interactions out of this imperfect sensing.

Responding to Consumer Ambiguous Queries

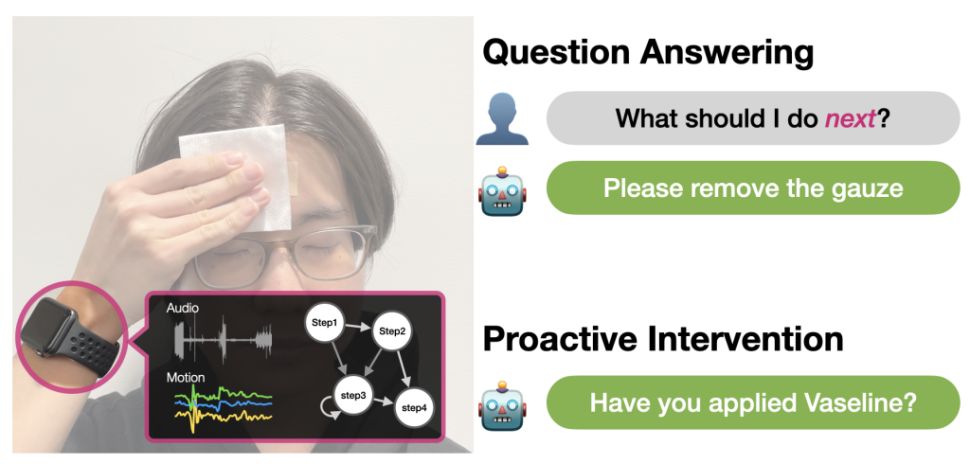

Voice assistants (like Siri and Amazon Alexa), able to answering consumer queries throughout numerous bodily duties, have proven promise in guiding customers by way of advanced procedures. Nonetheless, customers usually discover it difficult to articulate their queries exactly, particularly when unfamiliar with the particular vocabulary. Our PrISM-Q&A (IMWUT’24) [4] can resolve such points with context derived from PrISM-Tracker.

When a query is posed, sensed contextual data is equipped to Giant Language Fashions (LLMs) as a part of the immediate context used to generate a response, even within the case of inherently imprecise questions like “What ought to I do subsequent with this?” and “Did I miss any step?” Our research demonstrated improved accuracy in query answering and most well-liked consumer expertise in comparison with current voice assistants in a number of duties: cooking, latte-making, and skincare.

As a result of PrISM-Tracker could make errors, the output of PrISM-Q&A can also be incorrect. Thus, if the assistant makes use of the context data, the assistant first characterizes its present understanding of the context within the response to keep away from complicated the consumer, as an illustration, “In case you are washing your arms, then the subsequent step is slicing vegetables.” This manner, it tries to assist customers determine the error and shortly appropriate it interactively to get the specified reply.

Intervening with Customers Proactively to Stop Errors

Subsequent, we prolonged the assistant’s functionality by incorporating proactive intervention to stop errors. Technical challenges embody noise in sensing knowledge and uncertainties in consumer conduct, particularly since customers are allowed flexibility within the order of steps to finish duties. To deal with these challenges, PrISM-Observer (UIST’24) [5] employs a stochastic mannequin to attempt to account for uncertainties and decide the optimum timing for delivering reminders in actual time.

Crucially, the assistant doesn’t impose a inflexible, predefined step-by-step sequence; as a substitute, it displays consumer conduct and intervenes proactively when needed. This method balances consumer autonomy and proactive steering, enabling people to carry out important duties safely and precisely.

Future Instructions

Our assistant system has simply been rolled out, and loads of future work continues to be on the horizon.

Minimizing the info assortment effort

To coach the underlying human exercise recognition mannequin on the smartwatch and construct a transition graph, we presently conduct 10 to twenty classes of the duty, every annotated with step labels. Using a zero-shot multimodal exercise recognition mannequin and refining step granularity are important for scaling the assistant to deal with numerous each day duties.

Co-adaptation of the consumer and AI assistant

As future work, we’re excited to deploy our assistants in healthcare settings to assist on a regular basis look after post-operative pores and skin most cancers sufferers and people with dementia.

Mackay [6] launched the concept of a human-computer partnership, the place people and clever brokers collaborate to outperform both working alone. Additionally, reciprocal co-adaptation [7] refers to the place each the consumer and the system adapt to and have an effect on the others’ conduct to attain sure targets. Impressed by these concepts, we’re actively exploring methods to fine-tune our assistant by way of interactions after deployment. This helps the assistant enhance context understanding and discover a snug management stability by exploring the mixed-initiative interplay design [8].

Conclusion

There are various open questions in terms of perfecting assistants for bodily duties. Understanding consumer context precisely throughout these duties is especially difficult on account of components like sensor noise. By way of our PrISM venture, we purpose to beat these challenges by designing interventions and growing human-AI collaboration methods. Our objective is to create useful and dependable interactions, even within the face of imperfect sensing.

Our code and datasets can be found on GitHub. We’re actively working on this thrilling analysis discipline. In case you are , please contact Riku Arakawa (HCII Ph.D. scholar).

Acknowledgments

The writer thanks each collaborator within the venture. The event of the PrISM assistant for well being functions is in collaboration with College Hospitals of Cleveland Division of Dermatology and Fraunhofer Portugal AICOS.

References

[1] Mollyn, V., Ahuja, Ok., Verma, D., Harrison, C., & Goel, M. (2022). SAMoSA: Sensing actions with movement and subsampled audio. Proceedings of the ACM on Interactive, Cellular, Wearable and Ubiquitous Applied sciences, 6(3), 1-19.

[2] Arakawa, R., Yakura, H., Mollyn, V., Nie, S., Russell, E., DeMeo, D. P., … & Goel, M. (2023). Prism-tracker: A framework for multimodal process monitoring utilizing wearable sensors and state transition data with user-driven dealing with of errors and uncertainty. Proceedings of the ACM on Interactive, Cellular, Wearable and Ubiquitous Applied sciences, 6(4), 1-27.

[3] Forney, G. D. (1973). The viterbi algorithm. Proceedings of the IEEE, 61(3), 268-278.

[4] Arakawa, R., Lehman, JF. & Goel, M. (2024) “Prism-q&a: Step-aware voice assistant on a smartwatch enabled by multimodal process monitoring and enormous language fashions.” Proceedings of the ACM on Interactive, Cellular, Wearable and Ubiquitous Applied sciences, 8(4), 1-26.

[5] Arakawa, R., Yakura, H., & Goel, M. (2024, October). PrISM-Observer: Intervention agent to assist customers carry out on a regular basis procedures sensed utilizing a smartwatch. In Proceedings of the thirty seventh Annual ACM Symposium on Consumer Interface Software program and Know-how (pp. 1-16).

[6] Mackay, W. E. (2023, November). Creating human-computer partnerships. In Worldwide Convention on Pc-Human Interplay Analysis and Purposes (pp. 3-17). Cham: Springer Nature Switzerland.

[7] Beaudouin-Lafon, M., Bødker, S., & Mackay, W. E. (2021). Generative theories of interplay. ACM Transactions on Pc-Human Interplay (TOCHI), 28(6), 1-54.

[8] Allen, J. E., Guinn, C. I., & Horvtz, E. (1999). Blended-initiative interplay. IEEE Clever Techniques and their Purposes, 14(5), 14-23.