ScribeAgent: Nice-Tuning Open-Supply LLMs for Enhanced Internet Navigation – Machine Studying Weblog | ML@CMU

TL;DR: LLM net brokers are designed to foretell a sequence of actions to finish a user-specified job. Most current brokers are constructed on high of general-purpose, proprietary fashions like GPT-4 and rely closely on immediate engineering. We reveal that fine-tuning open-source LLMs utilizing a big set of high-quality, real- world workflow knowledge can enhance efficiency whereas utilizing a smaller LLM spine, which may scale back serving prices.

As massive language fashions (LLMs) proceed to advance, a pivotal query arises when making use of them to specialised duties: ought to we fine-tune the mannequin or depend on prompting with in-context examples? Whereas prompting is easy and extensively adopted, our current work demonstrates that fine-tuning with in-domain knowledge can considerably improve efficiency over prompting in net navigation. On this weblog submit, we’ll introduce the paper “ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data“, the place we present fine-tuning a 7B open-source LLM utilizing large-scale, high-quality, real-world net workflow knowledge can surpass closed-source fashions reminiscent of GPT-4 and o1-preview on net navigation duties. This end result underscores the immense potential of specialised fine-tuning in tackling complicated reasoning duties.

Background: LLM Internet Brokers and the Want for Nice-Tuning

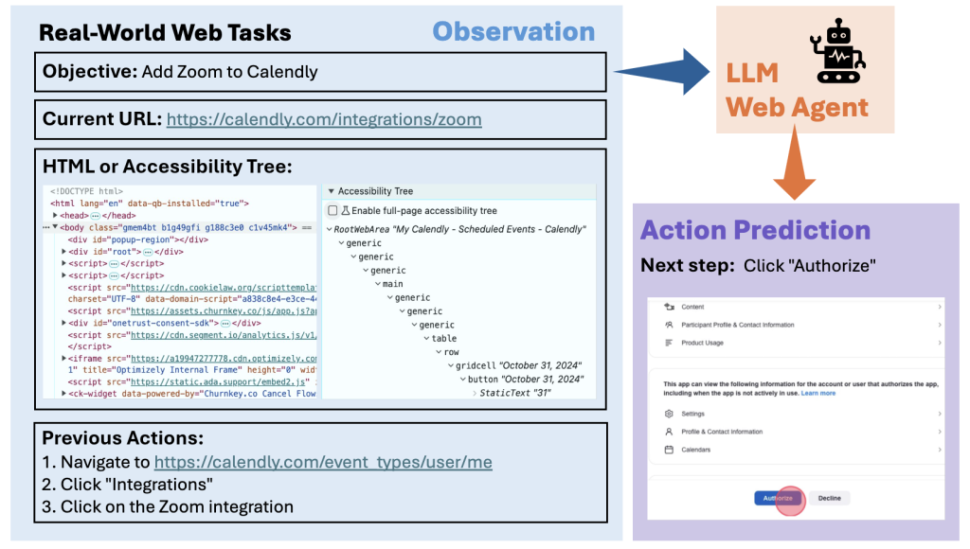

LLM-powered automated brokers have emerged as a big analysis area, with “net brokers” being one well-liked route. These brokers can navigate web sites to unravel real-world duties. To take action, the person first defines a high-level goal. The agent then outputs step-by-step actions based mostly on the person’s aim, present remark, and interplay historical past. For text-only brokers, the remark sometimes contains the web site’s URL, the webpage itself, and presumably the accessibility tree utilized by assistive applied sciences (see the introduction determine). The agent can then carry out actions reminiscent of keyboard and mouse operations.

Present net brokers rely closely on prompting general-purpose, proprietary LLMs like GPT-4. To leverage LLMs for net navigation, earlier analysis explores numerous prompting strategies:

- Higher planning capacity: A number of research make use of superior search methods to allow brokers to plan forward and choose the optimum motion in the long run (e.g., SteP, Tree Search).

- Higher reasoning capacity: Strategies like self-feedback and iterative refinement enable brokers to enhance their very own actions iteratively (e.g., AdaPlanner, Bagel). Incorporating exterior evaluators supplies an extra layer of oversight (e.g., Agent Eval & Refine).

- Reminiscence utilization: By using reminiscence databases, brokers can retrieve previous trajectories to make use of as demonstrations for present duties. This helps brokers be taught from earlier interactions (e.g., AWM, Synapse).

Whereas these approaches are efficient, the ensuing brokers carry out considerably under human ranges on commonplace benchmarks, reminiscent of Mind2Web and WebArena. This happens due to the next challenges:

- Lack of web-specific data: Basic-purpose LLMs should not particularly educated to interpret web-specific languages like HTML.

- Restricted planning and exploration capacity: LLMs should not developed to carry out sequential reasoning over an extended horizon, the place the agent should keep in mind previous actions, perceive the evolving state of the atmosphere, carry out lively exploration, and plan a number of steps forward to realize a aim.

- Sensible constraints: Reliance on proprietary fashions can result in elevated prices and dependency on a single supplier. Actual-time net interplay can require a considerable amount of API calls. Any adjustments within the supplier’s service phrases, pricing, or availability can have an effect on the agent’s performance.

Nice-tuning open-source LLMs affords an interesting approach to deal with these challenges (Determine 1). Nonetheless, fine-tuning comes with its personal set of necessary questions. For instance, how can we acquire adequate domain-specific datasets to coach the mannequin successfully? How ought to we formulate the enter prompts and outputs to align with the pre-trained mannequin and the online navigation duties? Which fashions ought to we fine-tune? Addressing these questions is essential to unlocking the total potential of open-source LLMs for net navigation.

Introducing ScribeAgent: Nice-Tuning with In-Area Information

ScribeAgent is developed by adapting open-source LLMs for net navigation by fine-tuning on in-domain knowledge as a substitute of prompting-based strategies. We introduce two key facets to make fine-tuning profitable: (1) Developing a large-scale, high-quality dataset and (2) fine-tuning LLMs to leverage this knowledge.

Step 1: Crafting a Giant-Scale, Excessive-High quality Dataset

We collaborated with Scribe, an AI workflow documentation software program that streamlines the creation of step-by-step guides for web-based duties. Scribe permits customers to report their net interactions through a browser extension, changing them into well-annotated directions for particular enterprise wants. See Determine 2 for an instance scribe.

This collaboration supplied entry to an enormous database of real-world, high-quality net workflows annotated by precise customers. These workflows cowl a wide range of net domains, together with social platforms like Fb and LinkedIn; procuring websites like Amazon and Shopify; productiveness instruments like Notion and Calendly; and lots of others. Every workflow contains a high-level person goal and a sequence of steps to realize the duty. Every step incorporates (1) the present net web page’s URL, (2) uncooked HTML, (3) a pure language description of the motion carried out, (4) the kind of motion, like click on or kind, and (5) the HTML component that’s the goal of the motion.

The uncooked HTML knowledge of real-world web sites could be exceedingly lengthy, usually starting from 10K to 100K tokens, surpassing the context window of most open-source LLMs. To make the info manageable for fine-tuning, we applied a pruning algorithm that retains important construction and content material whereas eliminating redundant components. Lastly, we reformat the dataset right into a next-step prediction job: The enter consists of the person goal, the present net web page’s URL, the processed HTML, and the earlier actions. The agent is predicted to generate the following motion based mostly on the enter. We spotlight the next traits for the ensuing dataset:

- Scale: Covers over 250 domains and 10,000 subdomains.

- Process size: Common 11 steps per job.

- Coaching tokens: Roughly 6 billion.

This dataset’s scale and high quality are unparalleled in prior net agent analysis.

Step 2: Nice-Tuning Open-Supply LLMs

After acquiring the dataset, we confronted two essential selections: which mannequin to fine-tune and find out how to fine-tune it. To probe into these questions, we leverage the dataset and carry out a sequence of ablation research:

- LLM spine: Mistral, Qwen, LLaMA

- Mannequin dimension: small (<10B parameters), medium (10–30B parameters), massive (>30B parameters)

- Context window: 32K tokens vs. 65K tokens

- Nice-tuning methodology: Full fine-tuning vs. LoRA

We fine-tuned every mannequin variant on the identical coaching dataset and evaluated their efficiency on a check set. The detailed outcomes can be found in our paper and Determine 3, however the important thing takeaways are:

- The Qwen household considerably outperformed Mistral and LLaMA fashions, each earlier than and after fine-tuning.

- Growing the mannequin dimension and context window size constantly led to improved efficiency.

- Whereas full fine-tuning has a slight efficiency achieve over parameter-efficient fine-tuning, it requires way more GPU, reminiscence, and time. Then again, LoRA decreased computational necessities with out compromising efficiency.

Primarily based on the ablation research outcomes, we develop two variations of ScribeAgent by fine-tuning open-source LLMs utilizing LoRA:

- ScribeAgent-Small: Primarily based on Qwen2 Instruct 7B; cost-effective and environment friendly for inference.

- ScribeAgent-Giant: Primarily based on Qwen2.5 Instruct 32B; superior efficiency in inside and exterior evaluations.

Empirical Outcomes: Nice-Tuned Fashions Surpass GPT-4-Primarily based Brokers

We evaluated ScribeAgent on three datasets: our proprietary check set, derived from the real-world workflows we collected; the text-based Mind2Web benchmark; and the interactive WebArena.

On our proprietary dataset, we noticed that ScribeAgent considerably outperforms proprietary fashions like GPT-4o, GPT-4o mini, o1-mini, and o1-preview, showcasing the advantages of specialised fine-tuning over general-purpose LLMs (Determine 4). Notably, ScribeAgent-Small has solely 7B parameters and ScribeAgent-Giant has 32B parameters, neither requiring extra scaling throughout inference. In distinction, these proprietary baselines are sometimes bigger and demand extra computational sources at inference time, making ScribeAgent a better option by way of accuracy, latency, and value. As well as, whereas the non-fine-tuned Qwen2 mannequin performs extraordinarily poorly, fine-tuning it with our dataset boosts its efficiency by almost sixfold, highlighting the significance of domain-specific knowledge.

As for Mind2Web, we adopted the benchmark setup and examined our brokers in two settings: multi-stage QA and direct era. The multi-stage QA setting leverages a pretrained element-ranking mannequin to filter out extra possible candidate components from the total HTML and ask the agent to pick out one choice from the candidate record. The direct era setting is way more difficult and requires the agent to immediately generate an motion based mostly on the total HTML. To judge ScribeAgent’s generalization efficiency, we didn’t fine-tune it on the Mind2Web coaching knowledge, so the analysis is zero-shot.

Our outcomes spotlight that, for multi-stage analysis, ScribeAgent-Giant achieves the very best general zero-shot efficiency. Its component accuracy and step success price metrics are additionally aggressive with the best-fine-tuned baseline, HTML-T5-XL, on cross-website and cross-domain duties. Within the direct era setting, ScribeAgent-Giant outperforms all current baselines, with step success charges 2-3 instances increased than these achieved by the fine-tuned Flan-T5.

The first failure instances of our fashions end result from the distribution mismatch between our coaching knowledge and the artificial Mind2Web knowledge. As an illustration, our agent would possibly predict one other component with equivalent perform however completely different from the bottom fact. It additionally decomposes typing actions right into a click on adopted by a typing motion, whereas Mind2Web expects a single kind. These points could be addressed by bettering the analysis process. After resolving these issues, we noticed a mean of 8% improve in job success price and component accuracy for ScribeAgent.

Analysis on WebArena is extra sophisticated. First, WebArena expects actions specified within the accessibility tree format, whereas ScribeAgent outputs actions in HTML format. Second, the interactive nature of WebArena requires the agent to resolve when to terminate the duty. To handle these challenges, we developed a multi-agent system that leverages GPT-4o for motion translation and job completeness analysis.

In comparison with current text-only brokers, ScribeAgent augmented with GPT-4o achieved the very best job success price throughout 4 of 5 domains in WebArena and improved the earlier greatest complete success price by 7.3% (Determine 6). In domains extra aligned with our coaching knowledge, reminiscent of Reddit and GitLab, ScribeAgent demonstrated stronger generalization capabilities and better success charges. We refer the readers to our paper for extra experiment particulars on all three benchmarks.

Conclusion

In abstract, ScribeAgent demonstrates that fine-tuning open-source LLMs with high-quality, in-domain knowledge can outperform even probably the most superior prompting strategies. Whereas our outcomes are promising, there are limitations to contemplate. ScribeAgent was developed primarily to showcase the effectiveness of fine-tuning and doesn’t incorporate exterior reasoning and planning modules; integrating these strategies may additional enhance its efficiency. Moreover, increasing ScribeAgent’s capabilities to deal with multi-modal inputs, reminiscent of screenshots, could make it extra versatile and strong in real-world net environments.

To be taught extra about ScribeAgent and discover our detailed findings, we invite you to learn our full paper. The venture’s progress, together with future enhancements and updates, could be adopted on our GitHub repository. Keep tuned for upcoming mannequin releases!