Build generative AI applications on Amazon Bedrock with the AWS SDK for Python (Boto3)

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. With Amazon Bedrock, you can experiment with and evaluate top FMs for your use case, privately customize them with your data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and build agents that execute tasks using your enterprise systems and data sources. Because Amazon Bedrock is serverless, you don’t have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you are already familiar with.

In this post, we demonstrate how to use Amazon Bedrock with the AWS SDK for Python (Boto3) to programmatically incorporate FMs.

Solution overview

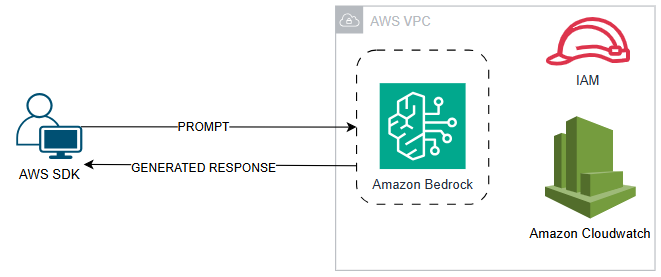

The answer makes use of an AWS SDK for Python script with options that invoke Anthropic’s Claude 3 Sonnet on Amazon Bedrock. By utilizing this FM, it generates an output utilizing a immediate as enter. The next diagram illustrates the answer structure.

Prerequisites

Before you invoke the Amazon Bedrock API, make sure you have the following:

Deploy the solution

After you complete the prerequisites, you can start using Amazon Bedrock. Begin scripting with the following steps:

- Import the required libraries:

- Set up the Boto3 client to use the Amazon Bedrock runtime and specify the AWS Region:

- Define the model to invoke using its model ID. In this example, we use Anthropic’s Claude 3 Sonnet on Amazon Bedrock:

- Assign a prompt, which is the message used to interact with the FM at invocation:
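The four steps above can be sketched as follows. This is a minimal sketch, not the post’s original code: the us-east-1 Region and the model ID `anthropic.claude-3-sonnet-20240229-v1:0` are assumptions (check which model IDs are enabled for your account and Region in the Amazon Bedrock console), and `make_bedrock_runtime_client` is a hypothetical helper. Boto3 is imported inside the helper so the constants can be reused even where the SDK isn’t installed.

```python
import json  # used later to serialize the request payload


# Steps 1 and 2: create a client for the Amazon Bedrock *runtime* service,
# which serves inference calls such as invoke_model.
def make_bedrock_runtime_client(region_name: str = "us-east-1"):
    """Return a Boto3 "bedrock-runtime" client (hypothetical helper).

    The default Region is an assumption; use one where the model is enabled.
    """
    import boto3  # AWS SDK for Python (pip install boto3)

    return boto3.client(service_name="bedrock-runtime", region_name=region_name)


# Step 3: model ID for Anthropic's Claude 3 Sonnet on Amazon Bedrock (assumed).
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# Step 4: the prompt sent to the model at invocation time.
PROMPT = "Hello, how are you?"
```

Note that `bedrock-runtime` is a different service name from `bedrock`: the latter is the control-plane client for listing and managing models, not for invoking them.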

Prompt engineering techniques can improve FM performance and the quality of the results.

Before invoking the Amazon Bedrock model, we need to define a payload, which acts as a set of instructions and information guiding the model’s generation process. The payload structure varies depending on the chosen model; in this example, we use Anthropic’s Claude 3 Sonnet on Amazon Bedrock. Think of the payload as the blueprint for the model: it provides the necessary context and parameters to generate the desired text based on your specific prompt. Let’s break down the key elements within this payload:

- anthropic_version – This specifies the version of the Anthropic Messages API request format that Amazon Bedrock expects (for Claude models on Amazon Bedrock, bedrock-2023-05-31).

- max_tokens – This sets a limit on the total number of tokens the model can generate in its response. Tokens are the smallest meaningful units of text (words, punctuation, subwords) processed and generated by large language models (LLMs).

- temperature – This parameter controls the level of randomness in the generated text. Higher values lead to more creative and potentially unexpected outputs, and lower values promote more conservative and consistent results.

- top_k – This defines the number of most probable candidate tokens considered at each step of the generation process.

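- top_p – This sets the cumulative probability threshold for nucleus sampling: at each step, the model samples only from the smallest set of most likely tokens whose probabilities add up to top_p. Lower values restrict choices to the most likely tokens, whereas higher values allow for more diverse and potentially surprising choices.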

- messages – This is an array containing the individual messages for the model to process. Each message has the following elements:

  - role – This defines the sender’s role within the message (user for the prompt you provide).

  - content – This array holds the prompt text itself, represented as an object of type "text".
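The payload elements described above can be assembled with a small builder function. This is a sketch under stated assumptions: `build_claude_payload` is a hypothetical helper, and the default `max_tokens` and `temperature` values are illustrative rather than tuned recommendations. `top_k` and `top_p` are only added when you pass them, so the model’s own defaults apply otherwise.

```python
import json
from typing import Optional


def build_claude_payload(
    prompt: str,
    max_tokens: int = 512,
    temperature: float = 0.5,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
) -> dict:
    """Build a Claude Messages API request body for Amazon Bedrock (sketch)."""
    payload = {
        # Request-format version expected for Anthropic models on Amazon Bedrock
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,    # upper bound on generated tokens
        "temperature": temperature,  # higher values give more random sampling
        "messages": [
            {
                "role": "user",  # the prompt is sent as a user turn
                "content": [{"type": "text", "text": prompt}],
            }
        ],
    }
    if top_k is not None:
        payload["top_k"] = top_k  # sample only among the k most likely tokens
    if top_p is not None:
        payload["top_p"] = top_p  # nucleus-sampling cumulative probability cutoff
    return payload


payload = build_claude_payload("Hello, how are you?", top_k=250)
body = json.dumps(payload)  # invoke_model takes the body as a JSON string or bytes
```

Anthropic generally suggests adjusting either temperature or top_p rather than both, which is one reason the sketch leaves top_p unset by default.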

- Define the payload as follows:

- You have set the parameters and chosen the FM you want to interact with. Now send a request to Amazon Bedrock, providing the model ID and the payload that you defined:

- After the request is processed, you can display the text generated by the model:
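Sending the request and displaying the result can be sketched as a single function. This is a minimal sketch, assuming a Boto3 `bedrock-runtime` client; `invoke_claude` is a hypothetical name. The client is passed in as a parameter so the function can be exercised offline with any object that has the same `invoke_model` method.

```python
import json


def invoke_claude(client, model_id: str, payload: dict) -> str:
    """Send the payload to Amazon Bedrock and return the generated text."""
    response = client.invoke_model(
        modelId=model_id,
        body=json.dumps(payload),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a streaming object: read it once, then parse the JSON.
    result = json.loads(response["body"].read())
    # Claude returns a list of content blocks; concatenate the text blocks.
    return "".join(
        block["text"] for block in result["content"] if block["type"] == "text"
    )
```

With a real client, usage would look like `print(invoke_claude(client, "anthropic.claude-3-sonnet-20240229-v1:0", payload))`, where the model ID is the same assumption as in the earlier steps.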

Let’s look at our complete script:
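A minimal end-to-end sketch of such a script follows. It assumes the us-east-1 Region, the model ID `anthropic.claude-3-sonnet-20240229-v1:0`, and illustrative `max_tokens` and `temperature` values; the function names `build_payload`, `generate`, and `main` and the file name `invoke_bedrock.py` are hypothetical. The prompt is read from the command line, and AWS errors surface as `botocore.exceptions.ClientError`.

```python
"""Invoke Anthropic's Claude 3 Sonnet on Amazon Bedrock with Boto3 (sketch).

Usage: python invoke_bedrock.py "Hello, how are you?"
"""
import json
import sys

# Assumptions: adjust the Region and model ID to match your account.
REGION = "us-east-1"
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_payload(prompt: str) -> dict:
    """Claude Messages API body; the sampling values are illustrative."""
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 512,
        "temperature": 0.5,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }


def generate(client, prompt: str) -> str:
    """Invoke the model and return the text blocks of its reply, concatenated."""
    response = client.invoke_model(
        modelId=MODEL_ID, body=json.dumps(build_payload(prompt))
    )
    result = json.loads(response["body"].read())
    return "".join(b["text"] for b in result["content"] if b["type"] == "text")


def main(prompt: str) -> None:
    # Imported here so the helpers above can be used without the SDK installed.
    import boto3
    from botocore.exceptions import ClientError

    client = boto3.client(service_name="bedrock-runtime", region_name=REGION)
    try:
        print(generate(client, prompt))
    except ClientError as err:
        # For example, AccessDeniedException if model access hasn't been granted.
        sys.exit(f"Amazon Bedrock request failed: {err}")


if __name__ == "__main__":
    if len(sys.argv) > 1:
        main(" ".join(sys.argv[1:]))
    else:
        print(__doc__)
```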

Invoking the model with the prompt “Hello, how are you?” yields the result shown in the following screenshot.

![]()

Clean up

When you’re done using Amazon Bedrock, clean up temporary resources such as IAM users and Amazon CloudWatch logs to avoid unnecessary charges. Costs depend on usage frequency, the chosen model’s pricing, and resource usage while the script runs. See Amazon Bedrock Pricing for pricing details and for cost-optimization strategies such as selecting appropriate models, optimizing prompts, and monitoring usage.
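One way to monitor usage is to read the token counts that Claude returns in the `usage` field of each response body (`input_tokens` and `output_tokens`) and turn them into a cost estimate. This is a sketch, assuming on-demand per-token pricing; `estimate_invocation_cost` is a hypothetical helper, and the prices are parameters you must take from the Amazon Bedrock Pricing page for your model and Region, not values stated here.

```python
def estimate_invocation_cost(
    usage: dict, input_price_per_1k: float, output_price_per_1k: float
) -> float:
    """Estimate the on-demand cost of one invocation from its token usage.

    `usage` is the "usage" object of a Claude response body, for example
    {"input_tokens": 14, "output_tokens": 40}. Prices are per 1,000 tokens.
    """
    input_cost = usage["input_tokens"] / 1000 * input_price_per_1k
    output_cost = usage["output_tokens"] / 1000 * output_price_per_1k
    return input_cost + output_cost
```

After parsing a response with `result = json.loads(response["body"].read())`, you could log `estimate_invocation_cost(result["usage"], input_price, output_price)` alongside each call. Output tokens are typically priced higher than input tokens, so `max_tokens` is a direct cost lever.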

Conclusion

In this post, we demonstrated how to programmatically interact with Amazon Bedrock FMs using Boto3. We explored invoking a specific FM and processing the generated text, showcasing how developers can use these models in their applications for a variety of use cases, such as:

- Text generation – Generate creative content like poems, scripts, musical pieces, or even code in different programming languages

- Code completion – Enhance developer productivity by suggesting relevant code snippets based on existing code or prompts

- Data summarization – Extract key insights and generate concise summaries from large datasets

- Conversational AI – Develop chatbots and virtual assistants that can engage in natural language conversations
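For the conversational AI use case, note that each `invoke_model` call is stateless: the model only sees what is in the `messages` array, so a chatbot resends the whole history, including earlier model replies as `assistant` turns. The following is a minimal sketch; `add_turn` is a hypothetical helper that keeps the history starting with a user turn and alternating between `user` and `assistant`, the conventional shape for Claude conversations.

```python
def add_turn(messages: list, role: str, text: str) -> list:
    """Append one turn to a Claude `messages` array, enforcing alternation."""
    # A conversation starts with a user turn; after that, roles alternate.
    if not messages or messages[-1]["role"] == "assistant":
        expected = "user"
    else:
        expected = "assistant"
    if role != expected:
        raise ValueError(f"expected a {expected!r} turn, got {role!r}")
    messages.append({"role": role, "content": [{"type": "text", "text": text}]})
    return messages


history = []
add_turn(history, "user", "Hello, how are you?")
add_turn(history, "assistant", "<text returned by the previous invocation>")
add_turn(history, "user", "Can you summarize that in one sentence?")
# `history` now becomes the "messages" field of the next request payload.
```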

Stay curious and explore how generative AI can transform various industries. Try the different models and APIs and compare the outputs each model produces. Find the model that suits your use case, and use this script as a base to create agents and integrations in your solution.

About the Author

Merlin Naidoo is a Senior Technical Account Manager at AWS with over 15 years of experience in digital transformation and innovative technical solutions. His passion is connecting with people from all backgrounds and using technology to create meaningful opportunities that empower everyone. When he’s not immersed in the world of tech, you can find him participating in active sports.