Natural Language Generation Inside Out: Teaching Machines to Write Like Humans


Image by Editor | Midjourney

Natural language generation (NLG) is a fascinating area of artificial intelligence (AI), or more specifically of natural language processing (NLP), aimed at enabling machines to produce human-like text that drives human-machine communication for problem-solving. This article explores what NLG is, how it works, and how the field has evolved in recent years, while underscoring its importance in several applications.

Understanding Natural Language Generation

AI and computer systems in general do not operate on human language but on numerical representations of data. NLG therefore involves transforming the data being processed into human-readable text. Common use cases of NLG include automated report writing, chatbots, question answering, and personalized content creation.
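To make the idea of turning data into text concrete, here is a minimal sketch of the simplest, template-based style of NLG applied to automated report writing. It assumes a hypothetical sales record with `region`, `month`, `sales`, and `previous_sales` fields. Modern neural approaches learn this data-to-text mapping from examples instead of hard-coding rules like these.

```python
# A minimal, rule-based (template) sketch of data-to-text NLG.
# The record fields and template wording are hypothetical examples.

def generate_report(record: dict) -> str:
    """Turn a structured sales record into a human-readable sentence."""
    change = record["sales"] - record["previous_sales"]
    # Choose the wording from the sign of the change (a simple hand-written rule).
    if change > 0:
        trend = f"increased by {change} units"
    elif change < 0:
        trend = f"decreased by {-change} units"
    else:
        trend = "remained unchanged"
    return (f"In {record['month']}, sales in the {record['region']} region "
            f"{trend}, reaching a total of {record['sales']} units.")


if __name__ == "__main__":
    data = {"region": "North", "month": "March", "sales": 1200, "previous_sales": 1000}
    print(generate_report(data))
    # In March, sales in the North region increased by 200 units, reaching a total of 1200 units.
```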

To better understand how NLG works, it also helps to understand its relationship with natural language understanding (NLU). NLG focuses on producing language, whereas NLU focuses on interpreting and understanding it. NLU therefore performs the reverse of the transformation that occurs in NLG: human language inputs such as text must be encoded into numerical (usually vector) representations that algorithms and models can analyze, interpret, and make sense of by discovering complex language patterns within the text.
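The following is a minimal sketch of the encoding step NLU relies on: mapping words to integer ids and then to a simple count vector. The whitespace tokenizer, the bag-of-words representation, and the function names are simplifying assumptions chosen for illustration. Real language models use learned subword tokenizers and dense embedding vectors instead.

```python
# A minimal sketch of turning text into numbers, the first step in NLU.
# The whitespace tokenizer and bag-of-words vector are simplifications.

def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign an integer id to every distinct lowercase word in the corpus."""
    vocab: dict[str, int] = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode_ids(text: str, vocab: dict[str, int]) -> list[int]:
    """Map each known word to its id (unknown words are skipped)."""
    return [vocab[w] for w in text.lower().split() if w in vocab]


def bag_of_words(text: str, vocab: dict[str, int]) -> list[int]:
    """Return a count vector with one dimension per vocabulary word."""
    vector = [0] * len(vocab)
    for idx in encode_ids(text, vocab):
        vector[idx] += 1
    return vector


if __name__ == "__main__":
    vocab = build_vocab(["the cat sat", "the dog ran"])
    print(vocab)                               # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3, 'ran': 4}
    print(encode_ids("The dog sat", vocab))    # [0, 3, 2]
    print(bag_of_words("the the cat", vocab))  # [2, 1, 0, 0, 0]
```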

At its core, NLG can be understood as a recipe. Just as a chef combines ingredients in a specific order to create a dish, NLG systems assemble elements of language based on input data, such as a prompt and contextual information.

Recent NLG approaches, such as the transformer architecture described later, integrate the information in their inputs by incorporating NLU steps into the early stages of NLG. Generating a response typically requires understanding an input request or prompt formulated by the user. Once this understanding step has been applied and the pieces of the language jigsaw have been meaningfully assembled, an NLG system generates an output response word by word. In other words, the output is not produced all at once but one word after another. The broader language generation problem is thus decomposed into a sequence of simpler problems, namely next-word prediction, which is solved iteratively and sequentially.
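The word-by-word process described above can be sketched as a loop. Each iteration solves one next-word prediction step and feeds the chosen word back in as context. The hand-written bigram score table is a hypothetical stand-in for a trained model, and it looks only at the last word. A real LLM conditions on the entire preceding context.

```python
# A minimal sketch of autoregressive (word-by-word) generation.
# The hand-written bigram table is a hypothetical toy standing in for a
# trained model: it scores candidate next words given only the last word.

NEXT_WORD_SCORES = {
    "<start>": {"machines": 0.6, "people": 0.4},
    "machines": {"can": 0.7, "write": 0.3},
    "can": {"write": 0.8, "read": 0.2},
    "write": {"text": 0.9, "<end>": 0.1},
    "text": {"<end>": 1.0},
}


def predict_next(context: list[str]) -> str:
    """Solve one next-word prediction step: pick the best-scoring word."""
    candidates = NEXT_WORD_SCORES.get(context[-1], {"<end>": 1.0})
    return max(candidates, key=candidates.get)


def generate(max_words: int = 10) -> str:
    """Build the output one word at a time, feeding each prediction back in."""
    context = ["<start>"]
    for _ in range(max_words):
        word = predict_next(context)
        if word == "<end>":  # the model signals that the response is complete
            break
        context.append(word)
    return " ".join(context[1:])


if __name__ == "__main__":
    print(generate())  # machines can write text
```

The `max_words` cap is a common safeguard so that generation stops even if the model never predicts the end marker.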

At a low architectural level, the next-word prediction problem is formulated as a classification task. A classical machine learning classifier can be trained to sort images of animals into species, or bank customers into eligible or not eligible for a loan. In the same way, an NLG model includes a classification layer at its final stage. This layer estimates, for each word in its vocabulary (in practice, usually tokens, which may be whole words or word fragments), the probability that it is the next word to generate as part of the message. In the simplest (greedy) decoding strategy, the highest-probability word is returned as the actual next word. Other strategies sample from this probability distribution instead.
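Here is a minimal sketch of that final classification step. Raw scores (logits) for each vocabulary word are converted into probabilities with the softmax function, and greedy decoding picks the most likely word. The four-word vocabulary and the logit values are made-up numbers for illustration, not outputs of any real model.

```python
import math

# A minimal sketch of next-word prediction as classification:
# softmax turns one raw score (logit) per vocabulary word into probabilities,
# and greedy decoding returns the most probable word.
# The vocabulary and logits are made-up illustrative values.

def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_next_word(vocab: list[str], logits: list[float]) -> tuple[str, float]:
    """Return the highest-probability word and its probability."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]


if __name__ == "__main__":
    vocab = ["cat", "dog", "loan", "text"]
    logits = [1.0, 0.5, -2.0, 3.0]
    word, p = predict_next_word(vocab, logits)
    print(word, round(p, 3))  # text 0.817
```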

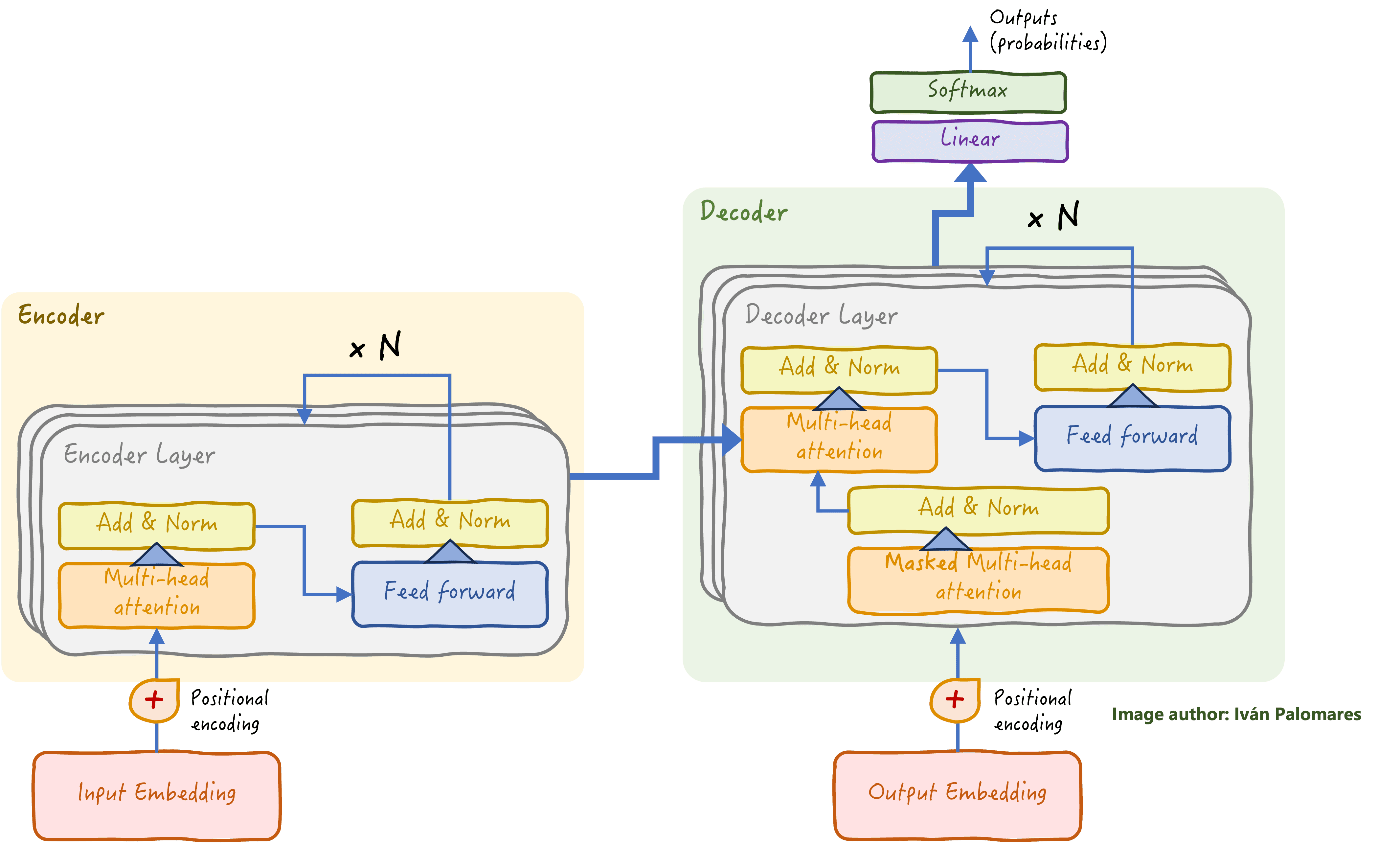

The transformer architecture, originally proposed as an encoder-decoder model, is the foundation of modern large language models (LLMs), which excel at NLG tasks. Many of today's LLMs, such as GPT-style models, keep only the decoder stack. The final stage of the decoder stack (top-right corner of the diagram) is a classification layer trained to predict the next word to generate.

Classical transformer architecture: the encoder stack focuses on understanding the input, whereas the decoder stack uses the insight gained to generate a response word by word.
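Below is a minimal sketch of the classification layer at the top of the decoder stack. A linear layer projects the decoder's final hidden state onto one score (logit) per vocabulary word. The three-word vocabulary, the weight matrix `W`, the bias `B`, and the hidden state are tiny hypothetical values. In a real model these parameters are learned and the vocabulary holds tens of thousands of tokens.

```python
# A minimal sketch of a decoder's output (classification) head: a linear layer
# projects the final hidden state onto one score (logit) per vocabulary word.
# Vocabulary, weights, and hidden state are tiny hypothetical values.

VOCAB = ["hello", "world", "<end>"]

# Weight matrix of shape (vocab_size, d_model) and bias of shape (vocab_size,).
W = [[0.5, -0.2, 0.1],
     [0.3, 0.8, -0.5],
     [-0.4, 0.1, 0.2]]
B = [0.0, 0.1, -0.1]


def output_head(hidden: list[float]) -> list[float]:
    """Compute logits = W @ hidden + B, one logit per vocabulary word."""
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W, B)]


def greedy_next_word(hidden: list[float]) -> str:
    """Softmax preserves ordering, so the largest logit is also the most probable word."""
    logits = output_head(hidden)
    best = max(range(len(VOCAB)), key=lambda i: logits[i])
    return VOCAB[best]


if __name__ == "__main__":
    hidden_state = [0.2, 1.0, 0.4]  # pretend output of the last decoder block
    print(output_head(hidden_state))
    print(greedy_next_word(hidden_state))  # world
```

Skipping the softmax works here only because greedy decoding needs just the ranking of the scores. Sampling-based decoding needs the actual probabilities.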

Evolution of NLG Methods and Architectures

NLG has evolved considerably. The limited and rather static rule-based systems of NLP's early days have given way to sophisticated models such as transformers and LLMs, which can perform an impressive range of tasks covering not only human language generation but also code generation. The relatively recent introduction of retrieval-augmented generation (RAG) has further enhanced NLG capabilities. RAG mitigates some limitations of LLMs, such as hallucinations and outdated knowledge, by integrating external knowledge as additional context for the generation process. By retrieving relevant information in real time to supplement the user's input prompts, RAG helps produce more relevant and context-aware responses.
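The retrieval step of RAG can be sketched in a few lines. The system finds the stored documents most relevant to the user's question and prepends them to the prompt as extra context before the prompt is passed to a generator. The word-overlap scoring, the document list, and the prompt template are simplifying assumptions. Real RAG systems typically rank documents by embedding similarity using a vector index, and the final call to an LLM is omitted here.

```python
import re

# A minimal sketch of the retrieval-augmented generation (RAG) idea:
# retrieve the documents most relevant to a question and add them to the
# prompt as context. Word-overlap ranking is a simplification of the
# embedding-based retrieval real systems use; the documents are hypothetical.

DOCUMENTS = [
    "The transformer architecture was introduced in 2017.",
    "RAG supplements prompts with retrieved external knowledge.",
    "Chefs combine ingredients in a specific order.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    print(build_prompt("When was the transformer architecture introduced?", DOCUMENTS))
```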

Trends, Challenges, and Future Directions

The future of NLG looks promising, although it is not free of challenges such as:

- Ensuring accuracy in generated text: as models grow in sophistication and complexity, ensuring that they generate factually correct and contextually appropriate content remains a key priority for AI developers.

- Ethical considerations: issues such as bias in generated text and the potential for misuse, including illegal uses, must be tackled, which calls for more robust frameworks for responsible AI deployment.

- Cost of training and building models: the significant computational resources needed to train state-of-the-art NLG models, such as transformer-based ones, can be a barrier for many organizations and limit accessibility. Cloud providers and major AI firms are gradually bringing solutions to market that reduce this burden.

As AI technologies continue to advance, we can expect more sophisticated and intuitive NLG systems that further blur the boundaries between human and machine-driven communication.