How Druva used Amazon Bedrock to deal with basis mannequin complexity when constructing Dru, Druva’s backup AI copilot

This publish is co-written with David Gildea and Tom Nijs from Druva.

Druva permits cyber, information, and operational resilience for hundreds of enterprises, and is trusted by 60 of the Fortune 500. Clients use Druva Data Resiliency Cloud to simplify information safety, streamline information governance, and acquire information visibility and insights. Unbiased software program distributors (ISVs) like Druva are integrating AI assistants into their consumer functions to make software program extra accessible.

Dru, the Druva backup AI copilot, permits real-time interplay and customized responses, with customers partaking in a pure dialog with the software program. From discovering inconsistencies and errors throughout the surroundings to scheduling backup jobs and setting retention insurance policies, customers want solely ask and Dru responds. Dru can even advocate actions to enhance the surroundings, treatment backup failures, and determine alternatives to reinforce safety.

On this publish, we present how Druva approached pure language querying (NLQ)—asking questions in English and getting tabular information as solutions—utilizing Amazon Bedrock, the challenges they confronted, pattern prompts, and key learnings.

Use case overview

The next screenshot illustrates the Dru dialog interface.

In a single dialog interface, Dru offers the next:

- Interactive reporting with real-time insights – Customers can request information or custom-made reviews with out intensive looking out or navigating by a number of screens. Dru additionally suggests follow-up questions to reinforce consumer expertise.

- Clever responses and a direct conduit to Druva’s documentation – Customers can acquire in-depth data about product options and functionalities with out guide searches or watching coaching movies. Dru additionally suggests sources for additional studying.

- Assisted troubleshooting – Customers can request summaries of high failure causes and obtain urged corrective measures. Dru on the backend decodes log information, deciphers error codes, and invokes API calls to troubleshoot.

- Simplified admin operations, with elevated seamlessness and accessibility – Customers can carry out duties like creating a brand new backup coverage or triggering a backup, managed by Druva’s current role-based entry management (RBAC) mechanism.

- Personalized web site navigation by conversational instructions – Customers can instruct Dru to navigate to particular web site areas, eliminating the necessity for guide menu exploration. Dru additionally suggests follow-up actions to hurry up activity completion.

Challenges and key learnings

On this part, we talk about the challenges and key learnings of Druva’s journey.

Total orchestration

Initially, we adopted an AI agent method and relied on the inspiration mannequin (FM) to make plans and invoke instruments utilizing the reasoning and performing (ReAct) methodology to reply consumer questions. Nonetheless, we discovered the target too broad and sophisticated for the AI agent. The AI agent would take greater than 60 seconds to plan and reply to a consumer query. Generally it might even get caught in a thought-loop, and the general success charge wasn’t passable.

We determined to maneuver to the immediate chaining method utilizing a directed acyclic graph (DAG). This method allowed us to interrupt the issue down into a number of steps:

- Determine the API route.

- Generate and invoke personal API calls.

- Generate and run information transformation Python code.

Every step grew to become an unbiased stream, so our engineers might iteratively develop and consider the efficiency and pace till they labored nicely in isolation. The workflow additionally grew to become extra controllable by defining correct error paths.

Stream 1: Determine the API route

Out of the a whole lot of APIs that energy Druva merchandise, we wanted to match the precise API the applying must name to reply the consumer query. For instance, “Present me my backup failures for the previous 72 hours, grouped by server.” Having related names and synonyms in API routes make this retrieval drawback extra advanced.

Initially, we formulated this activity as a retrieval drawback. We tried completely different strategies, together with k-nearest neighbor (k-NN) search of vector embeddings, BM25 with synonyms, and a hybrid of each throughout fields together with API routes, descriptions, and hypothetical questions. We discovered that the only and most correct manner was to formulate it as a classification activity to the FM. We curated a small checklist of examples in question-API route pairs, which helped enhance the accuracy and make the output format extra constant.

Stream 2: Generate and invoke personal API calls

Subsequent, we API name with the proper parameters and invoke it. FM hallucination of parameters, significantly these with free-form JSON object, is among the main challenges in the entire workflow. For instance, the unsupported key server can seem within the generated parameter:

We tried completely different prompting strategies, corresponding to few-shot prompting and chain of thought (CoT), however the success charge was nonetheless unsatisfactory. To make API name technology and invocation extra strong, we separated this activity into two steps:

- First, we used an FM to generate parameters in a JSON dictionary as an alternative of a full API request headers and physique.

- Afterwards, we wrote a postprocessing perform to take away parameters that didn’t conform to the API schema.

This methodology supplied a profitable API invocation, on the expense of getting extra information than required for downstream processing.

Stream 3: Generate and run information transformation Python code

Subsequent, we took the response from the API name and reworked it to reply the consumer query. For instance, “Create a pandas dataframe and group it by server column.” Just like stream 2, FM hallucination is once more an impediment. Generated code can include syntax errors, corresponding to complicated PySpark features with Pandas features.

After making an attempt many alternative prompting strategies with out success, we regarded on the reflection sample, asking the FM to self-correct code in a loop. This improved the success charge on the expense of extra FM invocations, which have been slower and dearer. We discovered that though smaller fashions are sooner and more cost effective, at instances that they had inconsistent outcomes. Anthropic’s Claude 2.1 on Amazon Bedrock gave extra correct outcomes on the second strive.

Mannequin selections

Druva chosen Amazon Bedrock for a number of compelling causes, with safety and latency being crucial. A key issue on this choice was the seamless integration with Druva’s companies. Utilizing Amazon Bedrock aligned naturally with Druva’s current surroundings on AWS, sustaining a safe and environment friendly extension of their capabilities.

Moreover, certainly one of our major challenges in growing Dru concerned deciding on the optimum FMs for particular duties. Amazon Bedrock successfully addresses this problem with its intensive array of accessible FMs, every providing distinctive capabilities. This selection enabled Druva to conduct the fast and complete testing of assorted FMs and their parameters, facilitating the number of probably the most appropriate one. The method was streamlined as a result of Druva didn’t must delve into the complexities of working or managing these various FMs, because of the strong infrastructure supplied by Amazon Bedrock.

By the experiments, we discovered that completely different fashions carried out higher in particular duties. For instance, Meta Llama 2 carried out higher with code technology activity; Anthropic Claude Occasion was good in environment friendly and cost-effective dialog; whereas Anthropic Claude 2.1 was good in getting desired responses in retry flows.

These have been the newest fashions from Anthropic and Meta on the time of this writing.

Answer overview

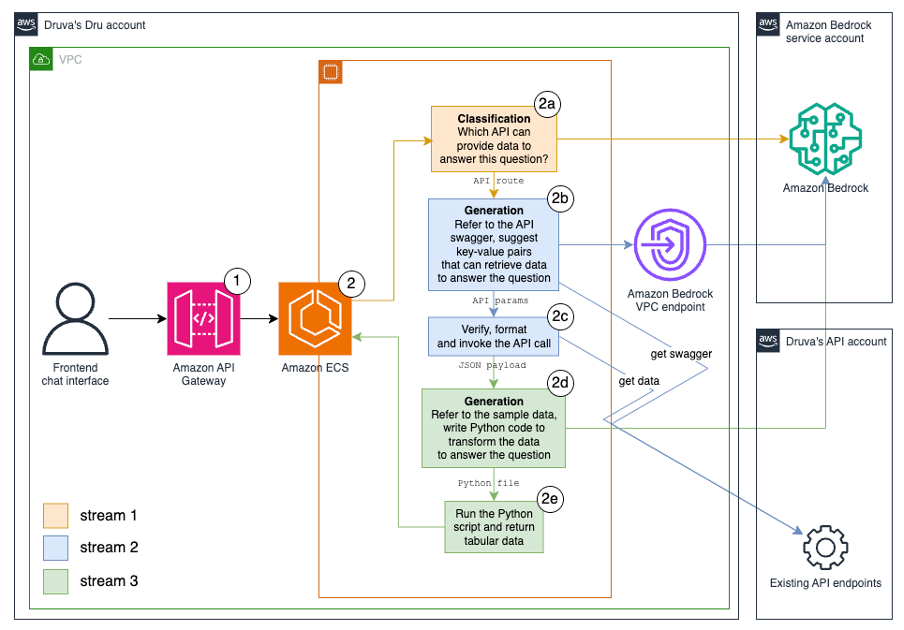

The next diagram reveals how the three streams work collectively as a single workflow to reply consumer questions with tabular information.

The next are the steps of the workflow:

- The authenticated consumer submits a query to Dru, for instance, “Present me my backup job failures for the final 72 hours,” as an API name.

- The request arrives on the microservice on our current Amazon Elastic Container Service (Amazon ECS) cluster. This course of consists of the next steps:

- A classification activity utilizing the FM offers the accessible API routes within the immediate and asks for the one which greatest matches with consumer query.

- An API parameters technology activity utilizing the FM will get the corresponding API swagger, then asks the FM to recommend key-value pairs to the API name that may retrieve information to reply the query.

- A customized Python perform verifies, codecs, and invokes the API name, then passes the information in JSON format to the subsequent step.

- A Python code technology activity utilizing the FM samples just a few data of information from the earlier step, then asks the FM to put in writing Python code to remodel the information to reply the query.

- A customized Python perform runs the Python code and returns the reply in tabular format.

To take care of consumer and system safety, we ensure in our design that:

- The FM can’t straight hook up with any Druva backend companies.

- The FM resides in a separate AWS account and digital personal cloud (VPC) from the backend companies.

- The FM can’t provoke actions independently.

- The FM can solely reply to questions despatched from Druva’s API.

- Regular buyer permissions apply to the API calls made by Dru.

- The decision to the API (Step 1) is barely attainable for authenticated consumer. The authentication part lives exterior the Dru resolution and is used throughout different inside options.

- To keep away from immediate injection, jailbreaking, and different malicious actions, a separate module checks for these earlier than the request reaches this service (Amazon API Gateway in Step 1).

For extra particulars, discuss with Druva’s Secret Sauce: Meet the Technology Behind Dru’s GenAI Magic.

Implementation particulars

On this part, we talk about Steps 2a–2e within the resolution workflow.

2a. Search for the API definition

This step makes use of an FM to carry out classification. It takes the consumer query and a full checklist of accessible API routes with significant names and descriptions because the enter, and responds The next is a pattern immediate:

2b. Generate the API name

This step makes use of an FM to generate API parameters. It first appears up the corresponding swagger for the API route (from Step 2a). Subsequent, it passes the swagger and the consumer query to an FM and responds with some key-value pairs to the API route that may retrieve related information. The next is a pattern immediate:

2c. Validate and invoke the API name

Within the earlier step, even with an try and floor responses with swagger, the FM can nonetheless hallucinate fallacious or nonexistent API parameters. This step makes use of a programmatic approach to confirm, format, and invoke the API name to get information. The next is the pseudo code:

2nd. Generate Python code to remodel information

This step makes use of an FM to generate Python code. It first samples just a few data of enter information to cut back enter tokens. Then it passes the pattern information and the consumer query to an FM and responds with a Python script that transforms information to reply the query. The next is a pattern immediate:

2e. Run the Python code

This step entails a Python script, which imports the generated Python bundle, runs the transformation, and returns the tabular information as the ultimate response. If an error happens, it should invoke the FM to attempt to right the code. When every little thing fails, it returns the enter information. The next is the pseudo code:

Conclusion

Utilizing Amazon Bedrock for the answer basis led to outstanding achievements in accuracy, as evidenced by the next metrics in our evaluations utilizing an inside dataset:

- Stream 1: Determine the API route – Achieved an ideal accuracy charge of 100%

- Stream 2: Generate and invoke personal API calls – Maintained this commonplace with a 100% accuracy charge

- Stream 3: Generate and run information transformation Python code – Attained a extremely commendable accuracy of 90%

These outcomes should not simply numbers; they’re a testomony to the robustness and effectivity of the Amazon Bedrock based mostly resolution. With such excessive ranges of accuracy, Druva is now poised to confidently broaden their horizons. Our subsequent aim is to increase this resolution to embody a wider vary of APIs throughout Druva merchandise. The subsequent enlargement will likely be scaling up utilization and considerably enrich the expertise of Druva clients. By integrating extra APIs, Druva will provide a extra seamless, responsive, and contextual interplay with Druva merchandise, additional enhancing the worth delivered to Druva customers.

To be taught extra about Druva’s AI options, go to the Dru solution page, the place you’ll be able to see a few of these capabilities in motion by recorded demos. Go to the AWS Machine Learning blog to see how different clients are utilizing Amazon Bedrock to resolve their enterprise issues.

In regards to the Authors

David Gildea is the VP of Product for Generative AI at Druva. With over 20 years of expertise in cloud automation and rising applied sciences, David has led transformative initiatives in information administration and cloud infrastructure. Because the founder and former CEO of CloudRanger, he pioneered revolutionary options to optimize cloud operations, later resulting in its acquisition by Druva. Presently, David leads the Labs workforce within the Workplace of the CTO, spearheading R&D into generative AI initiatives throughout the group, together with initiatives like Dru Copilot, Dru Examine, and Amazon Q. His experience spans technical analysis, industrial planning, and product growth, making him a outstanding determine within the area of cloud expertise and generative AI.

David Gildea is the VP of Product for Generative AI at Druva. With over 20 years of expertise in cloud automation and rising applied sciences, David has led transformative initiatives in information administration and cloud infrastructure. Because the founder and former CEO of CloudRanger, he pioneered revolutionary options to optimize cloud operations, later resulting in its acquisition by Druva. Presently, David leads the Labs workforce within the Workplace of the CTO, spearheading R&D into generative AI initiatives throughout the group, together with initiatives like Dru Copilot, Dru Examine, and Amazon Q. His experience spans technical analysis, industrial planning, and product growth, making him a outstanding determine within the area of cloud expertise and generative AI.

Tom Nijs is an skilled backend and AI engineer at Druva, keen about each studying and sharing data. With a concentrate on optimizing programs and utilizing AI, he’s devoted to serving to groups and builders convey revolutionary options to life.

Tom Nijs is an skilled backend and AI engineer at Druva, keen about each studying and sharing data. With a concentrate on optimizing programs and utilizing AI, he’s devoted to serving to groups and builders convey revolutionary options to life.

Corvus Lee is a Senior GenAI Labs Options Architect at AWS. He’s keen about designing and growing prototypes that use generative AI to resolve buyer issues. He additionally retains up with the newest developments in generative AI and retrieval strategies by making use of them to real-world eventualities.

Corvus Lee is a Senior GenAI Labs Options Architect at AWS. He’s keen about designing and growing prototypes that use generative AI to resolve buyer issues. He additionally retains up with the newest developments in generative AI and retrieval strategies by making use of them to real-world eventualities.

Fahad Ahmed is a Senior Options Architect at AWS and assists monetary companies clients. He has over 17 years of expertise constructing and designing software program functions. He lately discovered a brand new ardour of creating AI companies accessible to the plenty.

Fahad Ahmed is a Senior Options Architect at AWS and assists monetary companies clients. He has over 17 years of expertise constructing and designing software program functions. He lately discovered a brand new ardour of creating AI companies accessible to the plenty.