Fine-Tuning GPT-4o – KDnuggets

Image by Author

GPT-4o is great for general tasks, but it can struggle with specific use cases. To address this, we can first refine the prompts for better output. If that does not work, we can try function calling. If issues persist, we can use a RAG pipeline to gather additional context from documents.

In most cases, fine-tuning GPT-4o is considered the last resort because of the high cost, longer training time, and expertise required. However, if all of the solutions above have been tried and the goal is to change style and tone and improve accuracy for a specific use case, then fine-tuning the GPT-4o model on a custom dataset is an option.

In this tutorial, we will learn how to set up the OpenAI Python API, load and process data, upload the processed dataset to the cloud, fine-tune the GPT-4o model on the dataset, and access the fine-tuned model.

Setting Up

We will use the Legal Text Classification dataset from Kaggle to fine-tune the GPT-4o model. The first thing we need to do is download the CSV file and load it using Pandas. After that, we will drop the minor classes from the dataset, keeping only the top 5 legal text labels. It is important to understand the data by performing some data analysis before we begin the fine-tuning process.

```python
import pandas as pd

df = pd.read_csv("legal_text_classification.csv", index_col=0)

# Data cleaning: drop the minor classes, keeping the top 5 labels
minor_classes = ["discussed", "distinguished", "affirmed", "approved", "related"]
df = df[~df.case_outcome.isin(minor_classes)]

df.head()
```
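Instead of hard-coding the five minor labels, the top five could be derived from label frequencies. The sketch below is a stdlib-only illustration of that idea on hypothetical toy rows (not the Kaggle file); `keep_top_k_labels` is a made-up helper name, and in Pandas the same idea would use `value_counts()`.

```python
from collections import Counter


def keep_top_k_labels(rows, label_key, k=5):
    """Keep only rows whose label is among the k most frequent labels (hypothetical helper)."""
    counts = Counter(row[label_key] for row in rows)
    top_labels = {label for label, _ in counts.most_common(k)}
    return [row for row in rows if row[label_key] in top_labels]


# Hypothetical toy rows standing in for the CSV; only the label column matters here
rows = (
    [{"case_outcome": "cited"}] * 6
    + [{"case_outcome": "referred to"}] * 4
    + [{"case_outcome": "applied"}] * 3
    + [{"case_outcome": "followed"}] * 3
    + [{"case_outcome": "considered"}] * 2
    + [{"case_outcome": "related"}] * 1
)

filtered = keep_top_k_labels(rows, "case_outcome", k=5)
print(len(filtered), sorted({r["case_outcome"] for r in filtered}))
```

One design caveat: if two labels tie at the cutoff, `Counter.most_common` keeps the one encountered first, so an explicit list (as in the tutorial) is more predictable.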

The dataset contains the columns case_outcome, case_title, and case_text. All of these columns will be used to build our prompts for model training.

Next, we will install the OpenAI Python package.

```
%%capture
%pip install openai
```

We will now load the OpenAI API key from an environment variable and use it to initialize the OpenAI client for the chat completion call.

To generate the response, we will create a custom prompt. It contains system instructions telling the model what to do; in our case, to classify legal text into the known categories. We then build the user message from the case title and case text, and end with an assistant message ("Case Outcome:") intended to steer the model toward a single label.
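Because the same three-message prompt is used for this exploratory call, for every training example, and again at inference time, it can help to build it in one place so training and inference prompts never drift apart. The sketch below does that with a hypothetical helper, `build_messages`; the prompt strings are copied from the tutorial, including its wording, and the helper itself is an illustration rather than part of the OpenAI library.

```python
SYSTEM_TEMPLATE = (
    "Classify the following legal text based on the outcomes of the case. "
    "Please categorize it in to {categories}."
)


def build_messages(case_title, case_text, categories, case_outcome=None):
    """Build chat messages in the tutorial's prompt style (hypothetical helper).

    With case_outcome=None the assistant turn is the bare "Case Outcome:" cue used
    at inference time; otherwise it carries the training label.
    """
    if case_outcome is None:
        assistant = "Case Outcome:"
    else:
        assistant = f"Case Outcome: {case_outcome}"
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(categories=categories)},
        {"role": "user", "content": f"Case Title: {case_title} \n\nCase Text: {case_text}"},
        {"role": "assistant", "content": assistant},
    ]


msgs = build_messages("A v B", "Some text", ["cited", "applied"])
for m in msgs:
    print(m["role"], "->", m["content"][:60])
```

The returned list could be passed as `messages=` to `client.chat.completions.create`, or wrapped as `{"messages": ...}` for a JSONL training line.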

```python
import os

from IPython.display import Markdown, display
from openai import OpenAI

# The remaining labels become the allowed categories in the prompt
categories = df.case_outcome.unique().tolist()

openai_api_key = os.environ["OPENAI_API_KEY"]
client = OpenAI(api_key=openai_api_key)

response = client.chat.completions.create(
    model="gpt-4o-2024-08-06",
    messages=[
        {
            "role": "system",
            "content": f"Classify the following legal text based on the outcomes of the case. Please categorize it in to {categories}.",
        },
        {
            "role": "user",
            # .iloc selects the first row by position, regardless of the index labels
            "content": f"Case Title: {df.case_title.iloc[0]} \n\nCase Text: {df.case_text.iloc[0]}",
        },
        {"role": "assistant", "content": "Case Outcome:"},
    ],
)

display(Markdown(response.choices[0].message.content))
```

The response is accurate, but the label is buried in a full sentence. We only want the model to return “cited” instead of a sentence.

```
The case "Alpine Hardwood (Aust) Pty Ltd v Hardys Pty Ltd (No 2) 2002 FCA 224; (2002) 190 ALR 121" is cited in the given text.
```
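Before fine-tuning, a cheap stopgap is to map a free-text answer like the one above back onto a known label. The sketch below uses a hypothetical helper, `extract_label`, that returns the category whose first whole-word match appears earliest in the text; it is a post-processing heuristic, not something the tutorial or the OpenAI API provides, and it can be fooled if a case title happens to contain a label word.

```python
import re


def extract_label(text, categories):
    """Return the category matched earliest (as whole words) in text, or None (hypothetical helper)."""
    lowered = text.lower()
    best, best_pos = None, len(text) + 1
    for label in categories:
        match = re.search(r"\b" + re.escape(label.lower()) + r"\b", lowered)
        if match and match.start() < best_pos:
            best, best_pos = label, match.start()
    return best


cats = ["cited", "referred to", "applied", "followed", "considered"]
answer = (
    'The case "Alpine Hardwood (Aust) Pty Ltd v Hardys Pty Ltd (No 2) 2002 FCA 224; '
    '(2002) 190 ALR 121" is cited in the given text.'
)
print(extract_label(answer, cats))
```

Fine-tuning, as done below, removes the need for this kind of parsing by teaching the model to emit the label directly.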

Creating the Dataset

We will shuffle the dataset and keep only 200 samples. We could train the model on the full dataset, but it would cost more and take longer to train.

After that, the dataset will be split into training and validation sets.
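The shuffle-sample-split step can be illustrated without Pandas or scikit-learn. The sketch below is a simplified stdlib analogue (not a reimplementation of `train_test_split`): `shuffle_and_split` is a hypothetical helper that shuffles with a fixed seed, keeps the first 200 items, and holds out 20% for validation.

```python
import random


def shuffle_and_split(rows, n_samples=200, test_size=0.2, seed=42):
    """Shuffle rows, keep the first n_samples, and split into (train, validation) lists."""
    rng = random.Random(seed)  # seeded so the split is reproducible
    shuffled = rows[:]  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    subset = shuffled[:n_samples]
    n_val = round(len(subset) * test_size)
    return subset[n_val:], subset[:n_val]


train, val = shuffle_and_split(list(range(1000)))
print(len(train), len(val))
```

With 200 samples and a 20% hold-out this gives 160 training and 40 validation examples. Note that the tutorial's `df.sample(frac=1)` call has no `random_state`, so its 200-row subset changes between runs; passing one would make it reproducible.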

Next, we write a function that uses the same prompt style to turn each row into a list of messages and then saves the dataset as a JSONL file. The prompt style is the same as the one we used earlier.

We will convert the training and validation datasets into JSONL format and save them in the local directory.

```python
import json

from sklearn.model_selection import train_test_split

# Shuffle the dataset and select the top 200 rows
data_cleaned = df.sample(frac=1).reset_index(drop=True).head(200)

# Split the data into training and validation sets (80% train, 20% validation)
train_data, validation_data = train_test_split(
    data_cleaned, test_size=0.2, random_state=42
)


def save_to_jsonl(data, output_file_path):
    jsonl_data = []
    for index, row in data.iterrows():
        jsonl_data.append(
            {
                "messages": [
                    {
                        "role": "system",
                        "content": f"Classify the following legal text based on the outcomes of the case. Please categorize it in to {categories}.",
                    },
                    {
                        "role": "user",
                        "content": f"Case Title: {row['case_title']} \n\nCase Text: {row['case_text']}",
                    },
                    {
                        "role": "assistant",
                        "content": f"Case Outcome: {row['case_outcome']}",
                    },
                ]
            }
        )

    # Save to JSONL format: one JSON object per line
    with open(output_file_path, "w") as f:
        for item in jsonl_data:
            f.write(json.dumps(item) + "\n")


# Save the training and validation sets to separate JSONL files
train_output_file_path = "legal_text_classification_train.jsonl"
validation_output_file_path = "legal_text_classification_validation.jsonl"

save_to_jsonl(train_data, train_output_file_path)
save_to_jsonl(validation_data, validation_output_file_path)

print(f"Training dataset saved to {train_output_file_path}")
print(f"Validation dataset saved to {validation_output_file_path}")
```

Output:

```
Training dataset saved to legal_text_classification_train.jsonl
Validation dataset saved to legal_text_classification_validation.jsonl
```

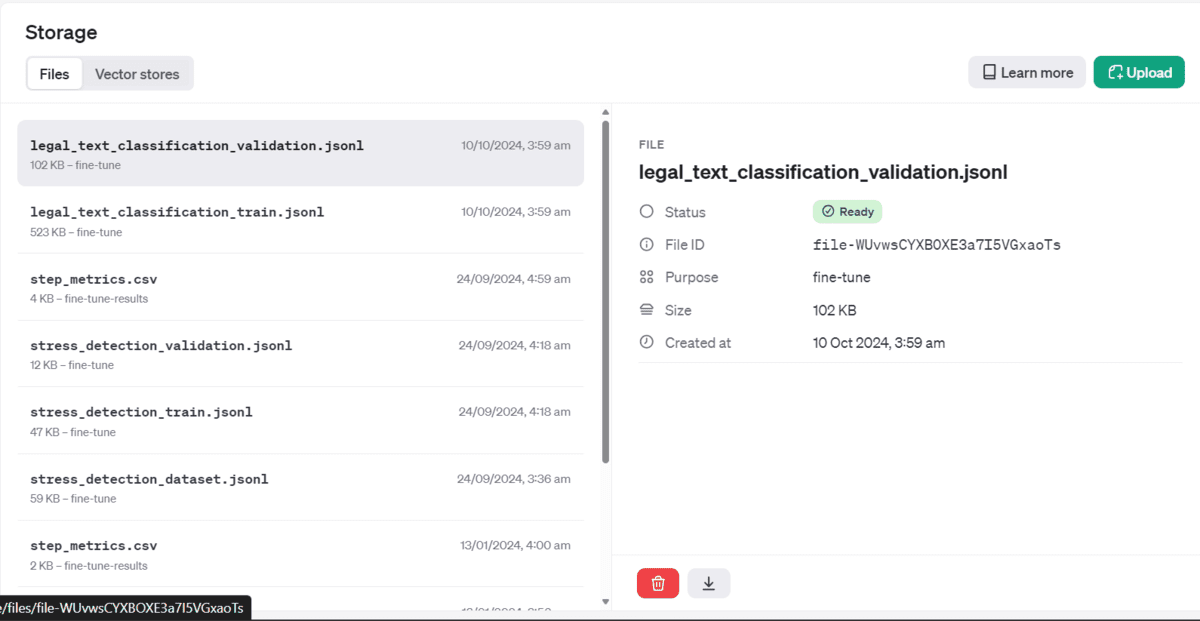
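Before uploading, it can be worth reading the JSONL files back and checking that every line parses and has the expected chat structure. The sketch below is a hypothetical local check, `validate_chat_jsonl`, that only tests the shape used in this tutorial (a non-empty `messages` list, known roles, ending with an assistant turn); it is not OpenAI's own validator, which may enforce additional rules.

```python
import json
import os
import tempfile

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_chat_jsonl(path):
    """Return (line_number, problem) tuples; an empty list means every line passed."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((line_no, f"invalid JSON: {exc.msg}"))
                continue
            messages = record.get("messages") if isinstance(record, dict) else None
            if not isinstance(messages, list) or not messages:
                problems.append((line_no, "missing or empty 'messages' list"))
                continue
            roles = [m.get("role") if isinstance(m, dict) else None for m in messages]
            if any(r not in ALLOWED_ROLES for r in roles):
                problems.append((line_no, f"unexpected role in {roles}"))
            if roles[-1] != "assistant":
                problems.append((line_no, "last message is not from the assistant"))
    return problems


# Demo on a temporary file: one well-formed record, one with a bad role name
good = {"messages": [{"role": "system", "content": "..."},
                     {"role": "user", "content": "..."},
                     {"role": "assistant", "content": "Case Outcome: cited"}]}
bad = {"messages": [{"role": "bot", "content": "..."}]}
with tempfile.NamedTemporaryFile("w", suffix=".jsonl", delete=False) as tmp:
    tmp.write(json.dumps(good) + "\n")
    tmp.write(json.dumps(bad) + "\n")
problems = validate_chat_jsonl(tmp.name)
os.remove(tmp.name)
print(problems)
```

On the real files, `validate_chat_jsonl("legal_text_classification_train.jsonl")` should return an empty list.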

Uploading the Processed Dataset

We will use the `files` API (`client.files.create`) to upload the training and validation files to the OpenAI cloud. Why upload them? The fine-tuning process runs on OpenAI's servers, so the files must be uploaded there for the training job to access them.

```python
# Upload both JSONL files with purpose="fine-tune" so the fine-tuning job can read them
with open(train_output_file_path, "rb") as f:
    train_file = client.files.create(file=f, purpose="fine-tune")

with open(validation_output_file_path, "rb") as f:
    valid_file = client.files.create(file=f, purpose="fine-tune")

print(f"Training File Info: {train_file}")
print(f"Validation File Info: {valid_file}")
```

Output:

```
Training File Info: FileObject(id='file-fw39Ok3Uqq5nSnEFBO581lS4', bytes=535847, created_at=1728514772, filename='legal_text_classification_train.jsonl', object='file', purpose='fine-tune', status='processed', status_details=None)
Validation File Info: FileObject(id='file-WUvwsCYXBOXE3a7I5VGxaoTs', bytes=104550, created_at=1728514773, filename='legal_text_classification_validation.jsonl', object='file', purpose='fine-tune', status='processed', status_details=None)
```

If you open the Storage section of your OpenAI dashboard, you will see that your files have been uploaded and are ready to use.

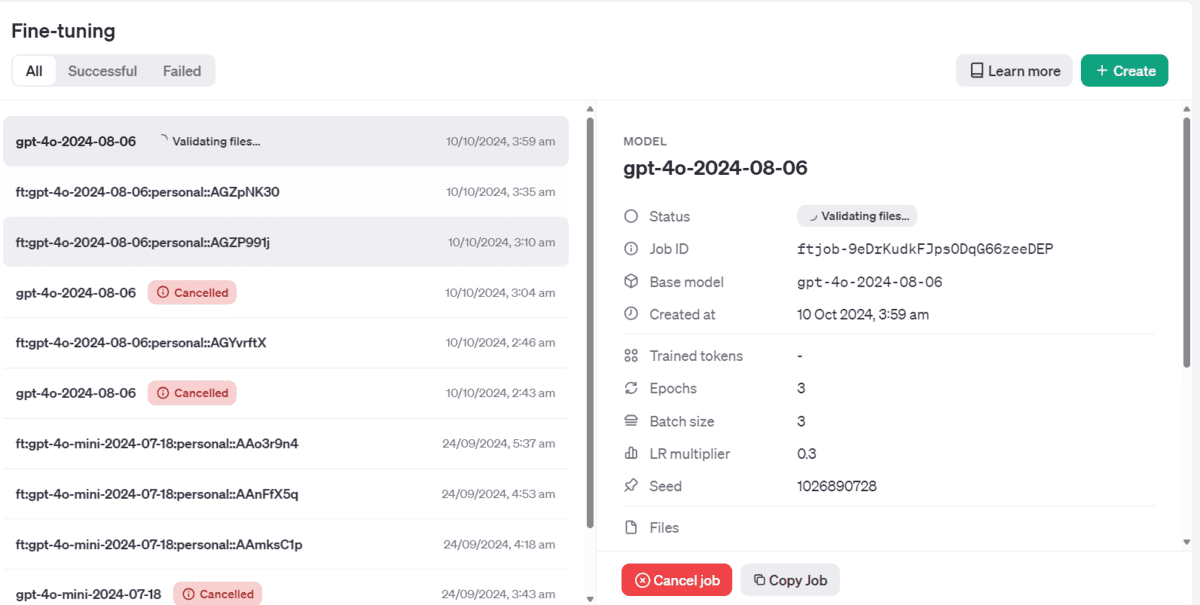

Starting the Fine-tuning Job

We will now create the fine-tuning job by passing the training and validation file IDs, the model name, and the hyperparameters to `client.fine_tuning.jobs.create`.
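To get a feel for what `n_epochs` and `batch_size` mean for this run, the arithmetic below estimates the number of optimizer steps, assuming each epoch is one full pass over the training examples in batches of `batch_size` with the last partial batch counted. This is a rough model for intuition; OpenAI's own step accounting may differ. The number of trained tokens, and therefore the cost, also grows roughly in proportion to `n_epochs`.

```python
import math


def estimate_training_steps(n_examples, batch_size, n_epochs):
    """Rough optimizer-step count: batches per epoch (partial batch included) times epochs."""
    steps_per_epoch = math.ceil(n_examples / batch_size)
    return steps_per_epoch * n_epochs


# 200 sampled rows with a 20% validation split leave 160 training examples
print(estimate_training_steps(160, batch_size=3, n_epochs=3))
```

Here that works out to ceil(160 / 3) = 54 steps per epoch, or 162 steps over 3 epochs.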

```python
job = client.fine_tuning.jobs.create(
    training_file=train_file.id,
    validation_file=valid_file.id,
    model="gpt-4o-2024-08-06",
    hyperparameters={
        "n_epochs": 3,
        "batch_size": 3,
        "learning_rate_multiplier": 0.3,
    },
)

job_id = job.id
status = job.status

print(f"Fine-tuning model with jobID: {job_id}.")
print(f"Training Response: {job}")
print(f"Training Status: {status}")
```

As soon as we run this code, the training job is started, and we can see the job status, job ID, and other metadata.

```
Fine-tuning model with jobID: ftjob-9eDrKudkFJps0DqG66zeeDEP.
Training Response: FineTuningJob(id='ftjob-9eDrKudkFJps0DqG66zeeDEP', created_at=1728514787, error=Error(code=None, message=None, param=None), fine_tuned_model=None, finished_at=None, hyperparameters=Hyperparameters(n_epochs=3, batch_size=3, learning_rate_multiplier=0.3), model='gpt-4o-2024-08-06', object='fine_tuning.job', organization_id='org-jLXWbL5JssIxj9KNgoFBK7Qi', result_files=[], seed=1026890728, status='validating_files', trained_tokens=None, training_file='file-fw39Ok3Uqq5nSnEFBO581lS4', validation_file='file-WUvwsCYXBOXE3a7I5VGxaoTs', estimated_finish=None, integrations=[], user_provided_suffix=None)
Training Status: validating_files
```

You can also view the job status on the OpenAI dashboard.

When the fine-tuning job is finished, we will receive an email with all the information needed to use the fine-tuned model.
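Instead of waiting for the email, a script can poll the job until it finishes. The sketch below assumes the OpenAI client's `client.fine_tuning.jobs.retrieve(job_id)` call and the terminal statuses `succeeded`, `failed`, and `cancelled`; `wait_for_fine_tune` is a hypothetical helper name. So that it runs offline, the demo passes in a stand-in client built from `SimpleNamespace` rather than a real `OpenAI` object, and the model name it returns is made up.

```python
import time
from types import SimpleNamespace

TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tune(client, job_id, poll_seconds=30, sleep=time.sleep):
    """Poll a fine-tuning job until it reaches a terminal status, then return the job."""
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        print(f"{job_id}: {job.status}")
        if job.status in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)


# Offline demo: a stand-in client whose retrieve() walks through a few statuses
_statuses = iter(["validating_files", "running", "succeeded"])


def _fake_retrieve(job_id):
    status = next(_statuses)
    model = "ft:demo-model" if status == "succeeded" else None  # hypothetical name
    return SimpleNamespace(id=job_id, status=status, fine_tuned_model=model)


fake_client = SimpleNamespace(
    fine_tuning=SimpleNamespace(jobs=SimpleNamespace(retrieve=_fake_retrieve))
)
job = wait_for_fine_tune(fake_client, "ftjob-demo", sleep=lambda seconds: None)
print(job.fine_tuned_model)
```

With the real client this would be called as `job = wait_for_fine_tune(client, job_id)`, after which `job.fine_tuned_model` holds the model name once the status is `succeeded`.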

Accessing the Fine-tuned Model

To use the fine-tuned model, we first need its name. We can get it by listing the fine-tuning jobs, selecting the most recent one, and reading its fine-tuned model name.

```python
result = client.fine_tuning.jobs.list()

# Retrieve the fine-tuned model name from the most recent job
fine_tuned_model = result.data[0].fine_tuned_model
print(fine_tuned_model)
```

This is our fine-tuned model name (it is `None` until the job has succeeded). We can start using it by passing it directly to the chat completion call.

```
ft:gpt-4o-2024-08-06:personal::AGaF9lqH
```
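Taking `result.data[0]` assumes the newest job has already succeeded; if a newer job is still running or has failed, its `fine_tuned_model` is `None`. The sketch below, built around a hypothetical helper `latest_fine_tuned_model`, instead picks the newest job whose status is `succeeded`. The job records in the demo are made-up stand-ins with only the `status`, `fine_tuned_model`, and `created_at` attributes.

```python
from types import SimpleNamespace


def latest_fine_tuned_model(jobs):
    """Return fine_tuned_model of the newest succeeded job, or None if none has succeeded."""
    succeeded = [j for j in jobs if j.status == "succeeded" and j.fine_tuned_model]
    if not succeeded:
        return None
    newest = max(succeeded, key=lambda j: j.created_at)
    return newest.fine_tuned_model


# Hypothetical job records: the newest job is still running, so it has no model yet
jobs = [
    SimpleNamespace(status="running", fine_tuned_model=None, created_at=300),
    SimpleNamespace(status="succeeded", fine_tuned_model="ft:gpt-4o-2024-08-06:personal::NEWER", created_at=200),
    SimpleNamespace(status="succeeded", fine_tuned_model="ft:gpt-4o-2024-08-06:personal::OLDER", created_at=100),
]
print(latest_fine_tuned_model(jobs))
```

With the real client, `latest_fine_tuned_model(client.fine_tuning.jobs.list().data)` would apply the same rule, keeping in mind that `.data` holds only the first page of results.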

To generate a response, we will call the chat completion API with the fine-tuned model name and messages in the same style as the training dataset.

```python
completion = client.chat.completions.create(
    model=fine_tuned_model,
    messages=[
        {
            "role": "system",
            "content": f"Classify the following legal text based on the outcomes of the case. Please categorize it in to {categories}.",
        },
        {
            "role": "user",
            "content": f"Case Title: {df['case_title'].iloc[10]} \n\nCase Text: {df['case_text'].iloc[10]}",
        },
        {"role": "assistant", "content": "Case Outcome:"},
    ],
)

print(f"predicted: {completion.choices[0].message.content}")
print(f"actual: {df['case_outcome'].iloc[10]}")
```

As we can see, instead of a full sentence, the model now returns just the label, and it is the correct one.

```
predicted: cited
actual: cited
```

Let's try to classify the 101st sample in the dataset.

```python
completion = client.chat.completions.create(
    model=fine_tuned_model,
    messages=[
        {
            "role": "system",
            "content": f"Classify the following legal text based on the outcomes of the case. Please categorize it in to {categories}.",
        },
        {
            "role": "user",
            "content": f"Case Title: {df['case_title'].iloc[100]} \n\nCase Text: {df['case_text'].iloc[100]}",
        },
        {"role": "assistant", "content": "Case Outcome:"},
    ],
)

print(f"predicted: {completion.choices[0].message.content}")
print(f"actual: {df['case_outcome'].iloc[100]}")
```

```
predicted: considered
actual: considered
```

That's good. We have successfully fine-tuned our model. To further improve performance, I suggest fine-tuning the model on the full dataset and training it for at least 5 epochs.
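Two spot checks are encouraging but are not a measurement, and because they index rows of the full `df`, those rows may also have been among the 200 training samples. A fairer check is to run the fine-tuned model over the held-out `validation_data` and score the answers. The sketch below covers only the scoring half, assuming the predictions have already been collected as strings; `normalize_label` and `score` are hypothetical helpers, and the prediction list is made up for illustration.

```python
from collections import Counter


def normalize_label(text):
    """Strip an optional 'Case Outcome:' prefix and trailing periods, then lowercase."""
    text = text.strip()
    prefix = "case outcome:"
    if text.lower().startswith(prefix):
        text = text[len(prefix):]
    return text.strip().strip(".").lower()


def score(predictions, actuals):
    """Return (accuracy, Counter of (actual, predicted) pairs that disagree)."""
    if not actuals or len(predictions) != len(actuals):
        raise ValueError("need equal-length, non-empty predictions and actuals")
    errors = Counter()
    correct = 0
    for pred, actual in zip(predictions, actuals):
        p, a = normalize_label(pred), normalize_label(actual)
        if p == a:
            correct += 1
        else:
            errors[(a, p)] += 1
    return correct / len(actuals), errors


# Made-up predictions for illustration only
preds = ["cited", "Case Outcome: considered", "referred to", "applied"]
truth = ["cited", "considered", "followed", "applied"]
accuracy, errors = score(preds, truth)
print(accuracy, dict(errors))
```

The error counter shows which labels get confused with which, which is often more useful than the single accuracy figure when deciding whether more data or more epochs would help.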

Final Thoughts

Fine-tuning GPT-4o is straightforward and requires minimal effort and no hardware of your own: all you need to do is add a credit card to your OpenAI account and start using it. If you are not a Python programmer, you can use the OpenAI dashboard to upload the dataset, start the fine-tuning job, and try the model in the playground, so OpenAI effectively provides a low/no-code option.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.