How one can Use Steady Diffusion Successfully

From the immediate to the image, Steady Diffusion is a pipeline with many elements and parameters. All these elements working collectively creates the output. If a part behave in a different way, the output will change. Subsequently, a nasty setting can simply wreck your image. On this put up, you will note:

- How the completely different elements of the Steady Diffusion pipeline impacts your output

- How one can discover the perfect configuration that can assist you generate a top quality image

Kick-start your venture with my e-book Mastering Digital Art with Stable Diffusion. It gives self-study tutorials with working code.

Let’s get began.

How one can Use Steady Diffusion Successfully.

Photograph by Kam Idris. Some rights reserved.

Overview

This put up is in three elements; they’re:

- Significance of a Mannequin

- Deciding on a Sampler and Scheduler

- Measurement and the CFG Scale

Significance of a Mannequin

If there may be one part within the pipeline that has essentially the most affect, it have to be the mannequin. Within the Net UI, it’s known as the “checkpoint”, named after how we saved the mannequin once we skilled a deep studying mannequin.

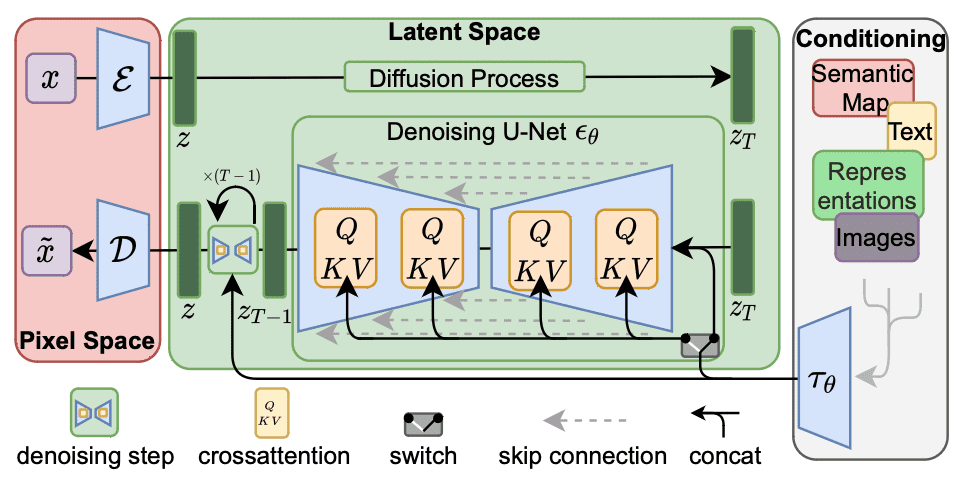

The Net UI helps a number of Steady Diffusion mannequin architectures. The commonest structure these days is the model 1.5 (SD 1.5). Certainly, all model 1.x share an identical structure (every mannequin has 860M parameters) however are skilled or fine-tuned beneath completely different methods.

Structure of Steady Diffusion 1.x. Determine from Rombach et al (2022)

There’s additionally Steady Diffusion 2.0 (SD 2.0), and its up to date model 2.1. This isn’t a “revision” from model 1.5, however a mannequin skilled from scratch. It makes use of a special textual content encoder (OpenCLIP as a substitute of CLIP); subsequently, they might perceive key phrases in a different way. One noticeable distinction is that OpenCLIP is aware of fewer names of celebrities and artists. Therefore, the immediate from Steady Diffusion 1.5 could also be out of date in 2.1. As a result of the encoder is completely different, SD2.x and SD1.x are incompatible, whereas they share an identical structure.

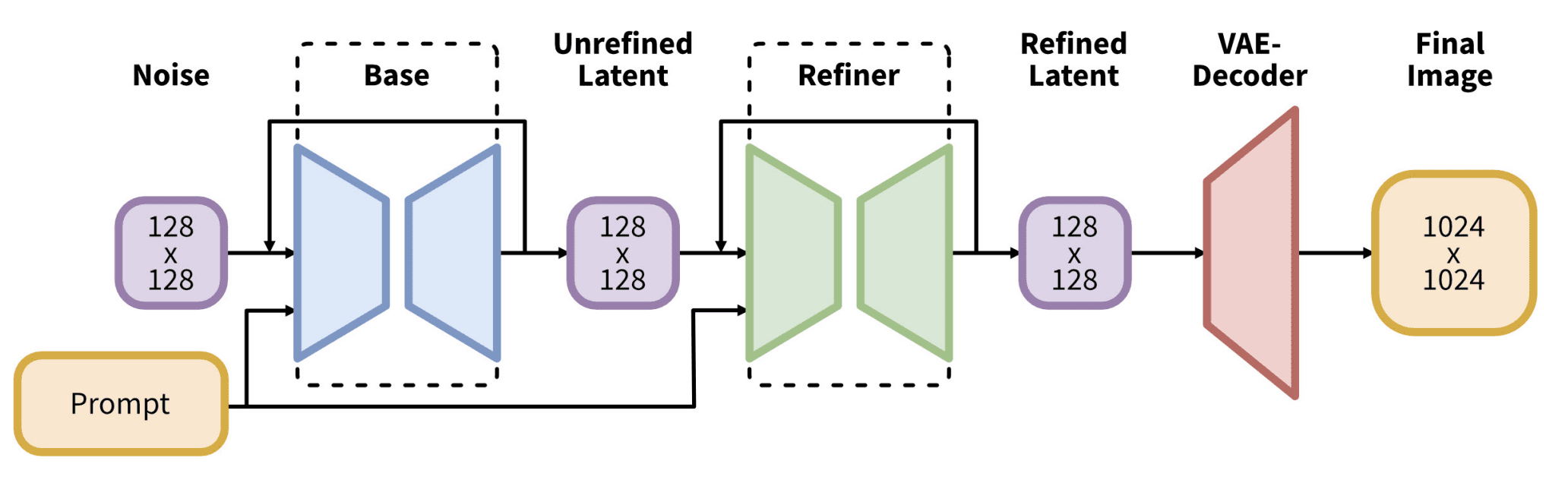

Subsequent comes the Steady Diffusion XL (SDXL). Whereas model 1.5 has a local decision of 512×512 and model 2.0 elevated it to 768×768, SDXL is at 1024×1024. You aren’t urged to make use of a vastly completely different dimension than their native decision. SDXL is a special structure, with a a lot bigger 6.6B parameters pipeline. Most notably, the fashions have two elements: the Base mannequin and the Refiner mannequin. They arrive in pairs, however you possibly can swap out considered one of them for a appropriate counterpart, or skip the refiner if you want. The textual content encoder used combines CLIP and OpenCLIP. Therefore, it ought to perceive your immediate higher than any older structure. Working SDXL is slower and requires way more reminiscence, however often in higher high quality.

Structure of SDXL. Determine from Podell et al (2023)

What issues to you is that you need to classify your fashions into three incompatible households: SD1.5, SD2.x, and SDXL. They behave in a different way along with your immediate. Additionally, you will discover that SD1.5 and SD2.x would want a detrimental immediate for a very good image, however it’s much less necessary in SDXL. In the event you’re utilizing SD2.x fashions, additionally, you will discover you could choose your refiner within the Net UI.

Photos generated with the immediate, ‘A quick meals restaurant in a desert with identify “Sandy Burger”’, utilizing SD 1.5 with completely different random seed. Notice that none of them spelled the identify accurately.

Photos generated with the immediate, ‘A quick meals restaurant in a desert with identify “Sandy Burger”’, utilizing SD 2.0 with completely different random seed. Notice that not all of them spelled the identify accurately.

Photos generated with the immediate, ‘A quick meals restaurant in a desert with identify “Sandy Burger”’, utilizing SDXL with completely different random seed. Notice that three of them spelled the identify accurately and just one letter is lacking on the final one.

One attribute of Steady Diffusion is that the unique fashions are much less succesful however adaptable. Subsequently, a whole lot of third-party fine-tuned fashions are produced. Most important are the fashions specializing in sure types, similar to Japanese anime, western cartoons, Pixar-style 2.5D graphics, or photorealistic photos.

You’ll find fashions on Civitai.com or Hugging Face Hub. Search with key phrases similar to “photorealistic” or “2D” and sorting by ranking would often assist.

Deciding on a Sampler and Scheduler

Picture diffusion is to start out with noise and replaces the noise strategically with pixels till the ultimate image is produced. It’s later discovered that this course of will be represented as a stochastic differential equation. Fixing the equation numerically is feasible, and there are completely different algorithms of various accuracy.

Essentially the most generally used sampler is Euler. It’s conventional however nonetheless helpful. Then, there’s a household of DPM samplers. Some new samplers, similar to UniPC and LCM, have been launched lately. Every sampler is an algorithm. It’s to run for a number of steps, and completely different parameters are utilized in every step. The parameters are set utilizing a scheduler, similar to Karras or exponential. Some samplers have an alternate “ancestral” mode, which provides randomness to every step. That is helpful if you would like extra artistic output. These samplers often bear a suffix “a” of their identify, similar to “Euler a” as a substitute of “Euler”. The non-ancestral samplers converge, i.e., they may stop altering the output after sure steps. Ancestral samplers would give a special output for those who enhance the step dimension.

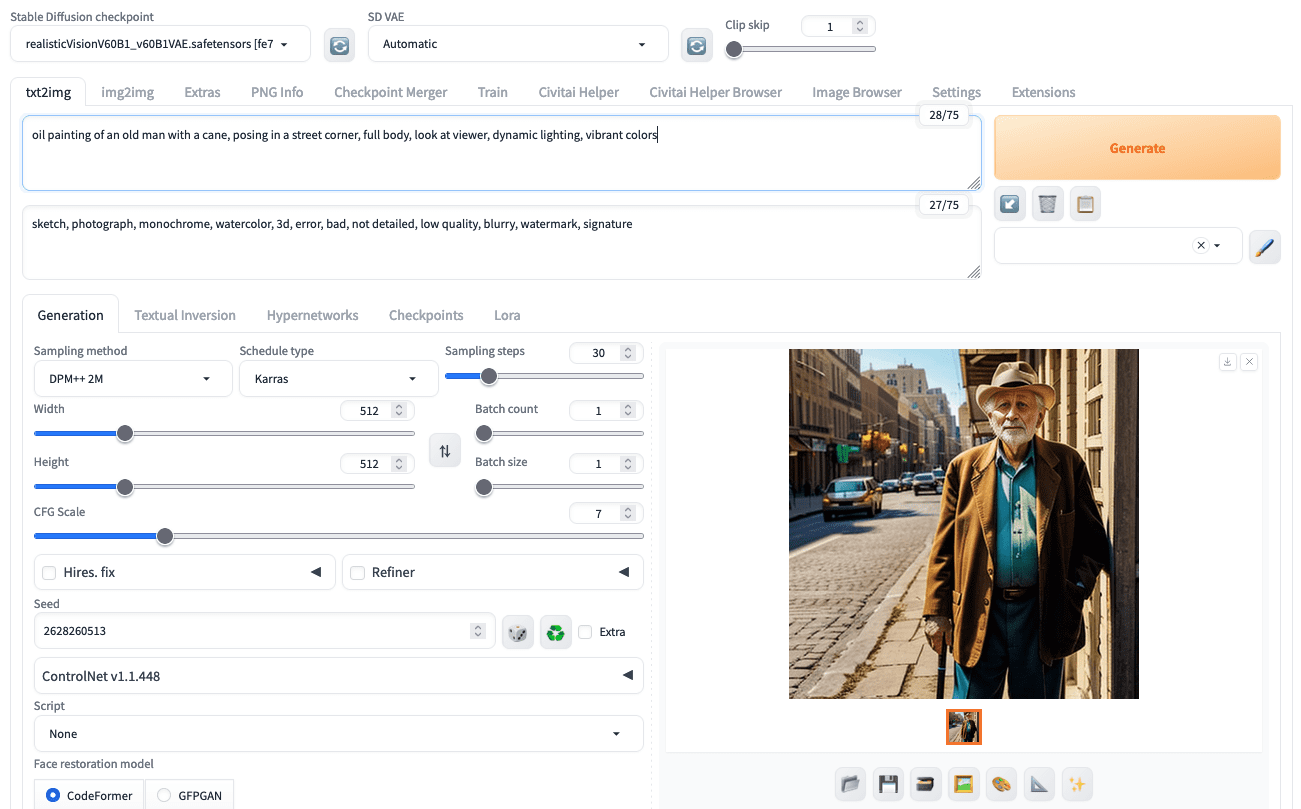

Deciding on sampler, scheduler, steps, and different parameters within the Steady Diffusion Net UI

As a person, you possibly can assume Karras is the scheduler for all instances. Nevertheless, the scheduler and step dimension would want some experimentation. Both Euler or DPM++2M needs to be chosen as a result of they steadiness high quality and pace finest. You can begin with a step dimension of round 20 to 30; the extra steps you select, the higher the output high quality by way of particulars and accuracy, however proportionally slower.

Measurement and CFG Scale

Recall that the picture diffusion course of begins from a loud image, regularly putting pixels conditioned by the immediate. How a lot the conditioning can affect the diffusion course of is managed by the parameter CFG scale (classifier-free steerage scale).

Sadly, the optimum worth of CFG scale depends upon the mannequin. Some fashions work finest with a CFG scale of 1 to 2, whereas others are optimized for 7 to 9. The default worth is 7.5 within the Net UI. However as a common rule, the upper the CFG scale, the stronger the output picture conforms to your immediate.

In case your CFG scale is just too low, the output picture will not be what you anticipated. Nevertheless, there may be one more reason you don’t get what you anticipated: The output dimension. For instance, for those who immediate for an image of a person standing, chances are you’ll get a headshot of a half-body shot as a substitute until you set the picture dimension to a top considerably larger than the width. The diffusion course of units the image composition within the early steps. It’s simpler to plan a standing man on a taller canvas.

Producing a half-body shot if offered a sq. canvas.

Producing a full physique shot with the identical immediate, similar seed, and solely the canvas dimension is modified.

Equally, for those who give an excessive amount of element to one thing that occupies a small a part of the picture, these particulars can be ignored as a result of there aren’t sufficient pixels to render these particulars. That’s the reason SDXL, for instance, is usually higher than SD 1.5 because you often use a bigger pixel dimension.

As a closing comment, producing photos utilizing picture diffusion fashions entails randomness. At all times begin with a batch of a number of photos to verify the unhealthy output will not be merely because of the random seed.

Additional Readings

This part gives extra sources on the subject if you wish to go deeper.

Abstract

On this put up, you realized about some refined particulars that impacts the picture era in Steady Diffusion. Particularly, you realized:

- The distinction between completely different variations of Steady Diffusion

- How the scheduler and sampler impacts the picture diffusion course of

- How the canvas dimension could have an effect on the output