Meta Presents Self-Taught Evaluators: A New AI Method That Aims to Improve Evaluators Without Human Annotations and Outperforms Commonly Used LLM Judges Such as GPT-4

Advancements in NLP have led to the development of large language models (LLMs) capable of performing complex language-related tasks with high accuracy. These advancements have opened up new possibilities in technology and communication, allowing for more natural and effective human-computer interactions.

A significant problem in NLP is the reliance on human annotations for model evaluation. Human-generated data is essential for training and validating models, but collecting it is both costly and time-consuming. Furthermore, as models improve, previously collected annotations may need to be updated, which reduces their usefulness for evaluating newer models. This creates a continuous need for fresh data and makes it hard to scale and sustain effective model evaluation. Addressing this problem is crucial for advancing NLP technologies and their applications.

Current methods for model evaluation typically involve collecting large amounts of human preference judgments over model responses. Other approaches use automated metrics for tasks with reference answers, or classifiers that output scores directly. However, these methods face limitations, especially for complex tasks where multiple valid responses are possible, such as creative writing or coding. The high variance in human judgments and the associated costs highlight the need for more efficient and scalable evaluation methods.

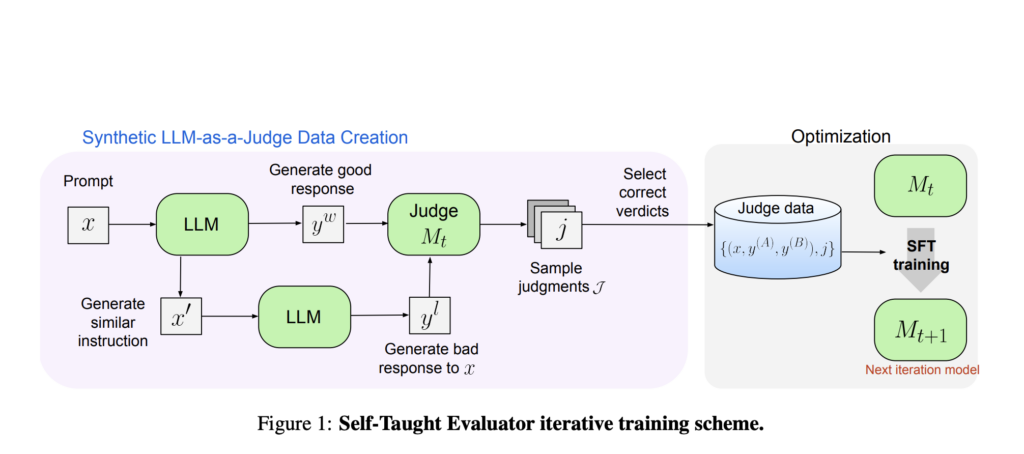

Researchers at Meta FAIR have introduced a novel approach called the “Self-Taught Evaluator.” This method eliminates the need for human annotations by training on synthetically generated data. The process begins with a seed model, which produces contrasting synthetic preference pairs. The model then evaluates these pairs and improves iteratively, using its own judgments to improve its performance in subsequent iterations. This approach leverages the model’s ability to both generate and evaluate data, significantly reducing dependency on human-generated annotations.

The proposed method involves several key steps. First, a baseline response is generated for a given instruction using a seed LLM. A modified version of the instruction is then created, prompting the LLM to generate a new response designed to be lower quality than the original. These paired responses form the basis for the training data. The model, acting as an LLM-as-a-Judge, generates reasoning traces and judgments for these pairs. This process is repeated iteratively, with the model gradually improving its judgment accuracy through self-generated and self-evaluated data, effectively creating a cycle of self-improvement.
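The steps described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' implementation: the `LLM` callable, the prompt wording, the `fine_tune` callback, and all function names are hypothetical. It also assumes that only judgments agreeing with the known synthetic label are kept as training examples, and it always places the preferred response first for simplicity.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]


@dataclass
class PreferencePair:
    instruction: str
    chosen: str    # response to the original instruction
    rejected: str  # response to a modified instruction, expected to be worse


@dataclass
class JudgedExample:
    pair: PreferencePair
    reasoning: str  # the judge's reasoning trace, ending in a verdict


def build_pair(instruction: str, llm: LLM) -> PreferencePair:
    """Create a synthetic preference pair from a single instruction."""
    chosen = llm(f"Respond to: {instruction}")
    # Assumed prompt wording: ask for a related but different instruction.
    modified = llm(f"Write a similar but different instruction to: {instruction}")
    rejected = llm(f"Respond to: {modified}")
    return PreferencePair(instruction, chosen, rejected)


def judge(pair: PreferencePair, llm: LLM) -> Tuple[str, Optional[str]]:
    """Ask the model for a reasoning trace and a verdict ('A', 'B', or None)."""
    output = llm(
        f"Instruction: {pair.instruction}\n"
        f"Response A: {pair.chosen}\n"
        f"Response B: {pair.rejected}\n"
        "Explain which response is better, then end with 'Verdict: A' or 'Verdict: B'."
    )
    if "Verdict:" not in output:
        return output, None
    verdict = output.rsplit("Verdict:", 1)[-1].strip()[:1]
    return output, verdict if verdict in ("A", "B") else None


def collect_training_data(instructions: List[str], llm: LLM) -> List[JudgedExample]:
    """Keep only judgments that match the known label (the chosen response is A)."""
    kept = []
    for instruction in instructions:
        pair = build_pair(instruction, llm)
        reasoning, verdict = judge(pair, llm)
        if verdict == "A":
            kept.append(JudgedExample(pair, reasoning))
    return kept


def run_iterations(
    instructions: List[str],
    llm: LLM,
    fine_tune: Callable[[LLM, List[JudgedExample]], LLM],
    n_iterations: int,
) -> LLM:
    """Repeat: judge the pairs, train on the kept judgments, use the new model."""
    for _ in range(n_iterations):
        examples = collect_training_data(instructions, llm)
        llm = fine_tune(llm, examples)  # hypothetical training step
    return llm
```

In a real pipeline the `fine_tune` callback would wrap an actual training run, and the order of the two responses would typically be varied so the judge cannot learn a position shortcut.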

The performance of the Self-Taught Evaluator was tested using the Llama-3-70B-Instruct model. The method improved the model’s accuracy on the RewardBench benchmark from 75.4 to 88.7, matching or surpassing the performance of models trained with human annotations. This significant improvement demonstrates the effectiveness of synthetic data in improving model evaluation. The researchers also ran several iterations, further refining the model’s capabilities. The final model achieved 88.3 accuracy with a single inference and 88.7 with majority voting, showing that the gains hold under both settings.
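Majority voting, as mentioned above, means sampling several judgments for the same pair and taking the most common verdict. The sketch below illustrates the general idea only. The `sample_verdict` callable, the default sample count, and the tie handling are assumptions, since the article does not give those details.

```python
from collections import Counter
from typing import Callable, Optional


def majority_vote(
    sample_verdict: Callable[[], Optional[str]],
    n_samples: int = 5,  # assumed value; the article does not state a sample count
) -> Optional[str]:
    """Sample n verdicts ('A' or 'B') and return the most frequent one.

    Unparseable samples (anything other than 'A' or 'B') are ignored.
    Returns None if there are no valid votes or the vote is tied.
    """
    samples = [sample_verdict() for _ in range(n_samples)]
    votes = [v for v in samples if v in ("A", "B")]
    if not votes:
        return None
    counts = Counter(votes)
    if counts["A"] == counts["B"]:
        return None
    return "A" if counts["A"] > counts["B"] else "B"
```

Using an odd number of samples reduces the chance of ties, although ties can still occur when some samples fail to parse.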

In conclusion, the Self-Taught Evaluator offers a scalable and efficient solution for NLP model evaluation. By leveraging synthetic data and iterative self-improvement, it addresses the challenges of relying on human annotations and keeps pace with the rapid advancements in language model development. The approach improves evaluator performance while reducing the dependency on human-generated data, paving the way for more autonomous and efficient NLP systems. The research team’s work at Meta FAIR marks a significant step forward in the quest for more advanced and autonomous evaluation methods in the field of NLP.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.