LLM experimentation at scale using Amazon SageMaker Pipelines and MLflow

Large language models (LLMs) have achieved remarkable success in various natural language processing (NLP) tasks, but they may not always generalize well to specific domains or tasks. You may need to customize an LLM to adapt it to your unique use case, improving its performance on your specific dataset or task. You can customize the model using prompt engineering, Retrieval Augmented Generation (RAG), or fine-tuning. You need to evaluate a customized LLM against the base LLM (or other models) to confirm that the customization process has improved the model's performance on your specific task or dataset.

In this post, we dive into LLM customization using fine-tuning. We explore the key considerations for successful experimentation and show how Amazon SageMaker with MLflow can simplify the process using Amazon SageMaker Pipelines.

LLM selection and fine-tuning journeys

When working with LLMs, customers often have different requirements. Some may want to evaluate and select the most suitable pre-trained foundation model (FM) for their use case. Others might need to fine-tune an existing model to adapt it to a specific task or domain. Let's explore two customer journeys:

- Fine-tuning an LLM for a specific task or domain adaptation – In this user journey, you need to customize an LLM for a specific task or domain data, which requires fine-tuning the model. The fine-tuning process may involve multiple experiments. Each experiment can require multiple iterations with different combinations of datasets, hyperparameters, prompts, and fine-tuning techniques, such as full fine-tuning or Parameter-Efficient Fine-Tuning (PEFT). Each iteration can be considered a run within an experiment.

Fine-tuning an LLM can be a complex workflow for data scientists and machine learning (ML) engineers to operationalize. To simplify this process, you can use Amazon SageMaker with MLflow and SageMaker Pipelines for fine-tuning and evaluation at scale. In this post, we describe the solution step by step and provide the source code in the accompanying GitHub repository.

Solution overview

Running hundreds of experiments, comparing the results, and keeping track of the ML lifecycle can become very complex. MLflow can help streamline the ML lifecycle, from data preparation to model deployment. By integrating MLflow into your LLM workflow, you can efficiently manage experiment tracking, model versioning, and deployment, which makes your work reproducible. With MLflow, you can track and compare the performance of multiple LLM experiments, identify the best-performing models, and deploy them to production environments with confidence.

With SageMaker Pipelines, you can create workflows that prepare data, fine-tune models, and evaluate model performance, writing simple Python code for each step.

You can now use SageMaker managed MLflow to run LLM fine-tuning and evaluation experiments at scale. Specifically:

- MLflow can manage the tracking of fine-tuning experiments, the comparison of evaluation results across runs, model versioning, deployment, and configuration (such as data and hyperparameters)

- SageMaker Pipelines can orchestrate multiple experiments based on the experiment configuration
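The sketch below is a rough illustration of how one experiment configuration can fan out into many runs. It expands a grid of hyperparameters into one run configuration per combination. The parameter names (`learning_rate`, `lora_r`, `epochs`), the grid values, and both helper functions are hypothetical and are not taken from the accompanying repository.

```python
import itertools
from typing import Any, Dict, List


def expand_grid(grid: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Return one run configuration per combination of grid values."""
    keys = sorted(grid)  # sorted keys make the run order deterministic
    return [dict(zip(keys, values)) for values in itertools.product(*(grid[k] for k in keys))]


def run_name(config: Dict[str, Any]) -> str:
    """Build a readable, deterministic MLflow run name from one configuration."""
    return "-".join(f"{key}={config[key]}" for key in sorted(config))


if __name__ == "__main__":
    # Hypothetical experiment configuration: 2 x 2 x 1 = 4 runs.
    grid = {"learning_rate": [1e-4, 2e-4], "lora_r": [8, 16], "epochs": [1]}
    for cfg in expand_grid(grid):
        print(run_name(cfg), cfg)
```

You could pass each resulting configuration to one pipeline execution, so that every combination appears as a separate run under the same MLflow experiment.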

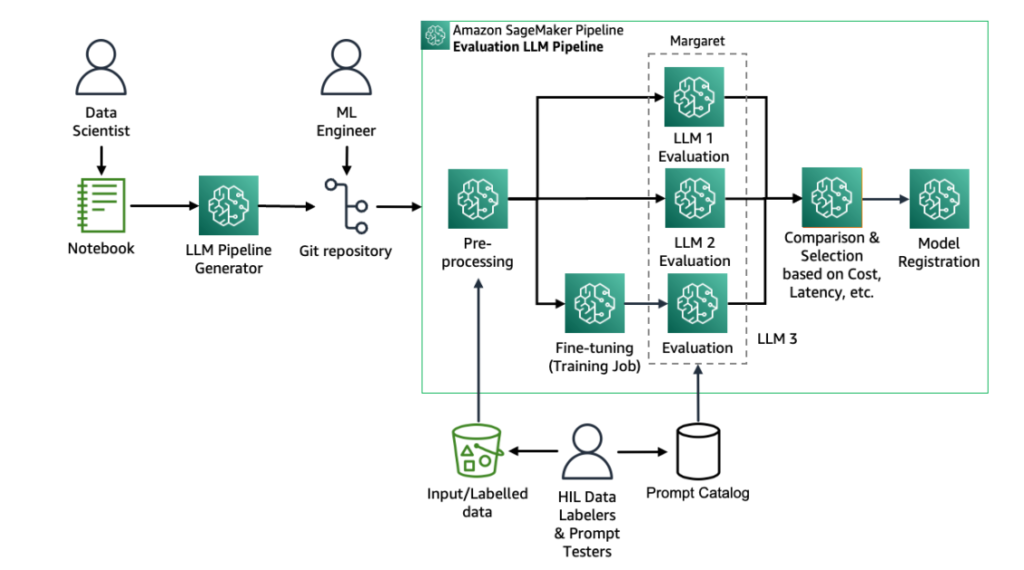

The next determine exhibits the overview of the answer.

Prerequisites

Before you begin, make sure you have the following prerequisites in place:

- Hugging Face login token – You need a Hugging Face login token to access the models and datasets used in this post. For instructions to generate a token, see User access tokens.

- SageMaker access with required IAM permissions – You need access to SageMaker with the necessary AWS Identity and Access Management (IAM) permissions to create and manage resources. Make sure you have the permissions required to create notebooks, deploy models, and perform the other tasks outlined in this post. To get started, see Quick setup to Amazon SageMaker. Follow this post to make sure you have the proper IAM role configured for MLflow.

Set up an MLflow tracking server

MLflow is directly integrated in Amazon SageMaker Studio. To create an MLflow tracking server to track experiments and runs, complete the following steps:

- On the SageMaker Studio console, choose MLflow under Applications in the navigation pane.

- For Name, enter an appropriate server name.

- For Artifact storage location (S3 URI), enter the location of an Amazon Simple Storage Service (Amazon S3) bucket.

- Choose Create.

The tracking server can take up to 20 minutes to initialize and become operational. When it's running, note its ARN to use in the llm_fine_tuning_experiments_mlflow.ipynb notebook. The ARN has the following format: arn:aws:sagemaker:<region>:<account_id>:mlflow-tracking-server/<tracking_server_name>
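A mistyped ARN only surfaces as an error when a pipeline step first tries to connect, so it can help to sanity-check the value up front. The following is a minimal sketch that assumes the format `arn:aws:sagemaker:<region>:<account_id>:mlflow-tracking-server/<tracking_server_name>`. The function name is hypothetical, and the character classes in the regular expression are our own assumption, so they may be stricter or looser than what SageMaker accepts.

```python
import re
from typing import Dict

# Assumed format: arn:aws:sagemaker:<region>:<account_id>:mlflow-tracking-server/<name>
_ARN_PATTERN = re.compile(
    r"^arn:(?P<partition>aws[a-z-]*):sagemaker:(?P<region>[a-z0-9-]+):"
    r"(?P<account>\d{12}):mlflow-tracking-server/(?P<name>[A-Za-z0-9-]+)$"
)


def parse_tracking_server_arn(arn: str) -> Dict[str, str]:
    """Split a tracking server ARN into its parts, raising ValueError if it is malformed."""
    match = _ARN_PATTERN.match(arn.strip())
    if match is None:
        raise ValueError(f"Not an MLflow tracking server ARN: {arn!r}")
    return match.groupdict()
```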

For the next steps, you can refer to the detailed description in this post, as well as the step-by-step instructions in the llm_fine_tuning_experiments_mlflow.ipynb notebook. You can launch the notebook in Amazon SageMaker Studio Classic or SageMaker JupyterLab.

Overview of SageMaker Pipelines for experimentation at scale

We use SageMaker Pipelines to orchestrate LLM fine-tuning and evaluation experiments. With SageMaker Pipelines, you can:

- Run multiple LLM experiment iterations simultaneously, reducing overall processing time and cost

- Scale up or down based on changing workload demands

- Monitor and visualize the performance of each experiment run with MLflow integration

- Invoke downstream workflows for further analysis, deployment, or model selection

MLflow integration with SageMaker Pipelines requires the tracking server ARN. You also need to add the mlflow and sagemaker-mlflow Python packages as dependencies in the pipeline setup. You can then use MLflow in any pipeline step: set the tracking URI to the server ARN, set the experiment, and run the step's code inside an MLflow run.
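The following is a minimal sketch of that pattern as a reusable wrapper. The function name and the injectable `mlflow_module` argument are hypothetical; the argument exists only so the wrapper can be exercised without a live server. It calls the real `mlflow.set_tracking_uri()`, `mlflow.set_experiment()`, and `mlflow.start_run()` APIs. We assume that the installed sagemaker-mlflow plugin is what lets the ARN work as a tracking URI.

```python
import importlib
from typing import Any, Callable, Optional


def run_step_with_mlflow(
    tracking_server_arn: str,
    experiment_name: str,
    run_name: str,
    step_body: Callable[[Any], None],
    mlflow_module: Optional[Any] = None,
) -> str:
    """Run step_body inside an MLflow run and return that run's ID.

    By default this imports the real mlflow package; sagemaker-mlflow must also be
    installed so the tracking server ARN can be used as the tracking URI.
    """
    mlflow = mlflow_module if mlflow_module is not None else importlib.import_module("mlflow")
    mlflow.set_tracking_uri(tracking_server_arn)
    mlflow.set_experiment(experiment_name)
    with mlflow.start_run(run_name=run_name) as run:
        step_body(run)  # the step's own logic, for example preprocessing
        return run.info.run_id
```

Returning the run ID lets a later step resume the same run with `mlflow.start_run(run_id=...)`, so that all steps of one iteration log to a single MLflow run.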

Log datasets with MLflow

With MLflow, you can log your dataset information alongside other key information, such as hyperparameters and model evaluation results. This enables tracking and reproducibility of experiments across runs, so you can make better-informed decisions about which models perform best on specific tasks or domains. By logging your datasets with MLflow, you can store metadata, such as dataset descriptions, version numbers, and data statistics, alongside your MLflow runs.

In the preprocessing step, you can log the training data and the evaluation data. In this example, we download the data from the Hugging Face dataset HuggingFaceH4/no_robots and use it for both fine-tuning and evaluation. First, set the MLflow tracking ARN and experiment name so that the data is logged to the right place. After you process the data and select the required number of rows, log the data using the MLflow log_input API.
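The following is a minimal sketch of this preprocessing step, not the repository's code. It assumes three things: each no_robots record has a `messages` list of `{"role", "content"}` dictionaries, the splits are named `train` and `test`, and a flat `text` column is a suitable training format. Check these against the dataset card. The helper names and the `### role:` prompt layout are hypothetical. `mlflow.data.from_pandas()` and `mlflow.log_input()` are real MLflow APIs, and heavy imports happen only when the logging function is called.

```python
from typing import Dict, List


def to_text(messages: List[Dict[str, str]]) -> str:
    """Flatten one chat-style conversation into a single training string (hypothetical layout)."""
    return "\n".join(f"### {m['role']}:\n{m['content']}" for m in messages)


def preprocess_and_log(tracking_server_arn: str, experiment_name: str, run_name: str, n_rows: int = 100) -> str:
    """Load no_robots, keep n_rows per split, log both splits to MLflow, and return the run ID."""
    import mlflow.data  # also binds the top-level mlflow name
    import pandas as pd
    from datasets import load_dataset

    mlflow.set_tracking_uri(tracking_server_arn)
    mlflow.set_experiment(experiment_name)
    dataset = load_dataset("HuggingFaceH4/no_robots")
    with mlflow.start_run(run_name=run_name) as run:
        # Split names are an assumption; check the dataset card.
        for split, context in (("train", "training"), ("test", "evaluation")):
            rows = dataset[split].select(range(n_rows))
            df = pd.DataFrame({"text": [to_text(r["messages"]) for r in rows]})
            mlflow.log_input(mlflow.data.from_pandas(df, name=f"no_robots-{split}"), context=context)
        return run.info.run_id
```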

Fine-tune a Llama model with LoRA and MLflow

To streamline fine-tuning an LLM with Low-Rank Adaptation (LoRA), you can use MLflow to track hyperparameters and save the resulting model. You can experiment with different LoRA parameters for training and log them along with other key metrics, such as training loss and evaluation metrics. Tracking your fine-tuning process this way helps you identify the most effective LoRA parameters for a given dataset and task.
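One way to keep the LoRA settings you train with and the settings you log from drifting apart is to build both from a single dictionary. The sketch below does that. The helper names, the default values, and the `q_proj`/`v_proj` target modules (a common choice for Llama attention layers) are assumptions, not the repository's configuration. `peft.LoraConfig` and `mlflow.log_params()` are real APIs. The `peft.` key prefix is there because MLflow refuses to re-log an existing parameter key with a different value, and the prefix avoids clashes with parameters that other integrations log.

```python
from typing import Any, Dict, List, Optional


def lora_hyperparameters(r: int = 8, lora_alpha: int = 16, lora_dropout: float = 0.05,
                         target_modules: Optional[List[str]] = None) -> Dict[str, Any]:
    """Keep LoRA settings in one dict so the same values configure PEFT and get logged."""
    return {
        "r": r,
        "lora_alpha": lora_alpha,
        "lora_dropout": lora_dropout,
        "target_modules": target_modules or ["q_proj", "v_proj"],  # assumed default
    }


def as_mlflow_params(hparams: Dict[str, Any], prefix: str = "peft.") -> Dict[str, str]:
    """Flatten values to strings, for example ['q_proj', 'v_proj'] -> 'q_proj,v_proj'."""
    return {
        prefix + key: ",".join(value) if isinstance(value, list) else str(value)
        for key, value in hparams.items()
    }


def build_and_log_lora_config(hparams: Dict[str, Any]):
    """Log the LoRA settings to the active MLflow run and return a matching LoraConfig."""
    import mlflow
    from peft import LoraConfig

    mlflow.log_params(as_mlflow_params(hparams))
    return LoraConfig(task_type="CAUSAL_LM", bias="none", **hparams)
```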

For this example, we use the PEFT library from Hugging Face to fine-tune a Llama 3 model. With this library, we can perform LoRA fine-tuning, which trains faster and needs less memory than full fine-tuning. LoRA can also work well with less training data.
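The memory savings follow from the LoRA parameterization. LoRA keeps a pretrained weight W of shape d × k frozen and trains a low-rank update BA, where B is d × r and A is r × k. Each adapted matrix therefore adds r(d + k) trainable parameters, instead of the d·k that full fine-tuning updates, and gradients and optimizer state are only kept for those parameters. The following arithmetic sketch uses a 4096 × 4096 matrix, the shape of a query projection in Llama 3 8B; the ranks are illustrative.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds for one d x k weight: B is d x r, A is r x k."""
    return r * (d + k)


def full_params(d: int, k: int) -> int:
    """Parameters that full fine-tuning updates for the same weight."""
    return d * k


if __name__ == "__main__":
    d = k = 4096  # for example, a square attention projection
    for r in (8, 16, 64):
        lora = lora_trainable_params(d, k, r)
        share = lora / full_params(d, k)
        print(f"r={r}: {lora:,} trainable vs {full_params(d, k):,} ({share:.2%})")
```

At r = 8, one such matrix trains 65,536 parameters instead of 16,777,216, which is about 0.39%.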

We use the HuggingFace class from the SageMaker SDK to create a training step in SageMaker Pipelines. The training itself is implemented in llama3_fine_tuning.py. As in the previous step, we need to set the MLflow tracking URI and reuse the same run_id.
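SageMaker passes estimator hyperparameters to the training entry point as `--key value` command-line arguments. A training script can therefore receive the tracking ARN and the run ID created during preprocessing, then resume that run with `mlflow.start_run(run_id=...)`. The following is a minimal sketch of that part of such a script. The argument names and defaults are hypothetical and not necessarily those used in llama3_fine_tuning.py, and the fine-tuning code itself is omitted.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the hyperparameters SageMaker passes to the entry point as --key value pairs."""
    parser = argparse.ArgumentParser(description="LoRA fine-tuning step (sketch)")
    parser.add_argument("--mlflow_arn", required=True)
    parser.add_argument("--run_id", required=True)  # run created by the preprocessing step
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--learning_rate", type=float, default=2e-4)
    return parser.parse_args(argv)


def main(argv: Optional[List[str]] = None) -> None:
    """Resume the MLflow run from preprocessing so all logs land in one run."""
    args = parse_args(argv)
    import mlflow  # needs mlflow and sagemaker-mlflow in the training container

    mlflow.set_tracking_uri(args.mlflow_arn)
    with mlflow.start_run(run_id=args.run_id):
        pass  # load the base model, apply LoRA, train, merge, and log the model here
```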

When you use the Trainer class from Transformers, you can specify where to report the training arguments. In our case, we want to log all the training arguments to MLflow.
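In Transformers, this is controlled by the `report_to` field of `TrainingArguments`. Setting it to `"mlflow"` enables the library's MLflow callback, which logs the training arguments as parameters and the training loss as metrics. As we understand the integration, the callback logs into the active MLflow run if one exists. The following is a minimal sketch; the batch size, gradient accumulation, and logging interval are illustrative values, not the repository's settings.

```python
from typing import Any, Dict


def training_kwargs(output_dir: str, epochs: int = 1, learning_rate: float = 2e-4) -> Dict[str, Any]:
    """Keyword arguments for transformers.TrainingArguments (illustrative values)."""
    return {
        "output_dir": output_dir,
        "num_train_epochs": epochs,
        "learning_rate": learning_rate,
        "per_device_train_batch_size": 4,
        "gradient_accumulation_steps": 2,
        "logging_steps": 10,
        "report_to": "mlflow",  # send training arguments and metrics to MLflow
    }


def make_training_arguments(output_dir: str, **overrides: Any):
    """Build TrainingArguments lazily so this module imports without transformers installed."""
    from transformers import TrainingArguments

    return TrainingArguments(**{**training_kwargs(output_dir), **overrides})
```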

When training is complete, merge the adapter weights into the base model so that you can save the full model.
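Merging folds the low-rank update into each adapted weight: W' = W + (lora_alpha / r) · BA, where lora_alpha / r is PEFT's default LoRA scaling. The following sketch first shows that arithmetic on tiny pure-Python matrices, then wraps the real PEFT call `PeftModel.from_pretrained(...).merge_and_unload()`. The function names, model ID, and directories are hypothetical placeholders, and a gated Llama 3 model also requires your Hugging Face token.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an m x n matrix and an n x p matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))] for i in range(len(a))]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, lora_alpha: float, r: int) -> Matrix:
    """Return W + (lora_alpha / r) * B @ A, the weight a merged LoRA layer ends up with."""
    delta = matmul(b, a)
    scale = lora_alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def merge_with_peft(base_model_id: str, adapter_dir: str, output_dir: str) -> None:
    """Load the base model, apply the trained adapter, merge it, and save the full weights."""
    from peft import PeftModel
    from transformers import AutoModelForCausalLM

    base = AutoModelForCausalLM.from_pretrained(base_model_id)
    merged = PeftModel.from_pretrained(base, adapter_dir).merge_and_unload()
    merged.save_pretrained(output_dir)
```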

You can log the merged model to MLflow with a model signature. The signature defines the expected format of the model's inputs and outputs, along with any additional parameters needed for inference.
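The following is a minimal sketch of logging the merged model with a signature. It uses the real `mlflow.models.infer_signature()` (whose `params` argument describes inference parameters) and `mlflow.transformers.log_model()`, assuming an MLflow 2.x release in which `artifact_path` is still the argument name. The example prompt, response, parameter values, and function name are hypothetical.

```python
from typing import Any, Dict

# Hypothetical example payloads; use samples that match your own prompt format.
EXAMPLE_INPUT = "What is the capital of the Netherlands?"
EXAMPLE_OUTPUT = "The capital of the Netherlands is Amsterdam."
INFERENCE_PARAMS: Dict[str, Any] = {"max_new_tokens": 256, "temperature": 0.1, "do_sample": True}


def log_merged_model(model: Any, tokenizer: Any, artifact_path: str = "model"):
    """Infer a signature (inputs, outputs, inference params) and log the model to the active run."""
    import mlflow
    from mlflow.models import infer_signature

    signature = infer_signature(EXAMPLE_INPUT, EXAMPLE_OUTPUT, params=INFERENCE_PARAMS)
    return mlflow.transformers.log_model(
        transformers_model={"model": model, "tokenizer": tokenizer},
        artifact_path=artifact_path,
        signature=signature,
        task="text-generation",
    )
```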

Evaluate the model

Model evaluation is the key step in selecting the optimal training arguments for fine-tuning the LLM on a given dataset. In this example, we use MLflow's built-in evaluation capability through the mlflow.evaluate() API. For question answering models, the default evaluator logs exact_match, token_count, toxicity, flesch_kincaid_grade_level, and ari_grade_level.

MLflow can load the model that was logged in the fine-tuning step. The base model is downloaded from Hugging Face, and the adapter weights are downloaded from the logged model.
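The following is a minimal sketch of this evaluation step. It resumes the existing run, loads the logged model through a `runs:/<run_id>/<artifact_path>` URI, and calls the real `mlflow.evaluate()` with `model_type="question-answering"`. The `exact_match` helper is our own illustration of what that metric measures, not MLflow's implementation. The `ground_truth` column name, the `model` artifact path, and the function names are assumptions. Set the tracking URI before calling `evaluate_run()`.

```python
from typing import Any, List


def exact_match(predictions: List[str], targets: List[str]) -> float:
    """Fraction of predictions identical to their targets (illustration only)."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    return sum(p == t for p, t in zip(predictions, targets)) / len(targets)


def model_uri(run_id: str, artifact_path: str = "model") -> str:
    """MLflow URI of a model logged under a run."""
    return f"runs:/{run_id}/{artifact_path}"


def evaluate_run(run_id: str, eval_df: Any):
    """Evaluate the logged model on eval_df and log the results into the same run."""
    import mlflow

    with mlflow.start_run(run_id=run_id):
        model = mlflow.pyfunc.load_model(model_uri(run_id))
        return mlflow.evaluate(
            model=model,
            data=eval_df,  # feature columns plus a ground_truth column (assumed names)
            targets="ground_truth",
            model_type="question-answering",
        )
```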

These evaluation results are logged in MLflow in the same run that logged the data processing and fine-tuning steps.

Create the pipeline

After you have the code ready for all the steps, you can create the pipeline.
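The following is a minimal sketch of creating or updating the pipeline with the real `sagemaker.workflow.pipeline.Pipeline` class and its `upsert(role_arn=...)` method. We believe `steps` can hold step objects, such as the training step, as well as the results of `@step`-decorated functions. The name-sanitizing helper is hypothetical, and its rule (letters, digits, and single hyphens) is our assumption about what SageMaker accepts in pipeline names.

```python
import re
from typing import Any, List, Optional


def pipeline_name_for(experiment_name: str, max_len: int = 256) -> str:
    """Turn an experiment name into a pipeline name using only letters, digits, and hyphens."""
    name = re.sub(r"[^A-Za-z0-9]+", "-", experiment_name).strip("-")
    return name[:max_len].rstrip("-") or "llm-fine-tuning"


def create_pipeline(experiment_name: str, steps: List[Any], role_arn: str, session: Optional[Any] = None):
    """Define the pipeline from already-built steps and create or update it in SageMaker."""
    from sagemaker.workflow.pipeline import Pipeline

    pipeline = Pipeline(name=pipeline_name_for(experiment_name), steps=steps, sagemaker_session=session)
    pipeline.upsert(role_arn=role_arn)
    return pipeline
```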

You can run the pipeline from the SageMaker Studio UI or programmatically from the notebook.
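The following is a minimal sketch of the programmatic route. It uses the real `Pipeline.start()` method, the execution object's `wait(delay=..., max_attempts=...)` and `describe()` methods, and the `PipelineExecutionStatus` field of the describe response. The helper name and the polling defaults (every 60 seconds for up to 120 attempts, roughly 2 hours) are hypothetical. We understand that `wait()` can raise an error if the execution fails.

```python
from typing import Any, Dict, Optional


def start_and_wait(pipeline: Any, parameters: Optional[Dict[str, Any]] = None,
                   delay: int = 60, max_attempts: int = 120) -> str:
    """Start a pipeline execution, block until it finishes, and return its final status."""
    execution = pipeline.start(parameters=parameters)
    execution.wait(delay=delay, max_attempts=max_attempts)  # polls every `delay` seconds
    return execution.describe()["PipelineExecutionStatus"]
```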

Compare experiment results

After you start the pipeline, you can track the experiment in MLflow. Each run logs details of the preprocessing, fine-tuning, and evaluation steps. The preprocessing step logs the training and evaluation data, and the fine-tuning step logs all training arguments and LoRA parameters. You can select these runs and compare their results to find the optimal training parameters and the best fine-tuned model.

You can open the MLflow UI from SageMaker Studio.

Then select the experiment to filter its runs, and select multiple runs to compare them.

When you compare runs, you can analyze the evaluation scores against the training arguments.
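You can also run the same comparison from the notebook. The real `mlflow.search_runs(experiment_names=[...])` call returns a pandas DataFrame with `run_id`, `params.*`, and `metrics.*` columns. The following sketch converts that DataFrame to records and picks the best run for one metric. The helper names are hypothetical. Treat any metric key you pass, such as `metrics.exact_match`, as a placeholder, and check the column names that `search_runs()` actually returns for your runs.

```python
from typing import Any, Dict, List


def _has_value(v: Any) -> bool:
    """True unless v is missing; v == v is False only for NaN."""
    return v is not None and v == v


def best_run(rows: List[Dict[str, Any]], metric_key: str, higher_is_better: bool = True) -> Dict[str, Any]:
    """Pick the run with the best value for metric_key, skipping runs that lack it."""
    scored = [r for r in rows if _has_value(r.get(metric_key))]
    if not scored:
        raise ValueError(f"No run has metric {metric_key!r}")
    pick = max if higher_is_better else min
    return pick(scored, key=lambda r: r[metric_key])


def fetch_runs(experiment_name: str) -> List[Dict[str, Any]]:
    """Load all runs of an experiment as dicts (requires the tracking URI to be set)."""
    import mlflow

    return mlflow.search_runs(experiment_names=[experiment_name]).to_dict("records")
```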

Register the model

After you analyze the evaluation results of the different fine-tuned models, you can select the best model and register it in MLflow. The model is then automatically synced with Amazon SageMaker Model Registry.
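The following is a minimal sketch of registering the chosen run's model from code. It uses the real `mlflow.register_model(model_uri, name)` call, which returns a `ModelVersion` whose `version` attribute holds the new version number. The helper name, the `model` artifact path, and the injectable `mlflow_module` argument (there only for testing) are hypothetical.

```python
from typing import Any, Optional


def register_model(run_id: str, model_name: str, artifact_path: str = "model",
                   mlflow_module: Optional[Any] = None) -> str:
    """Register the model logged under run_id and return the new version number as a string."""
    if mlflow_module is None:
        import mlflow as mlflow_module  # the tracking URI must already point at the server
    version = mlflow_module.register_model(f"runs:/{run_id}/{artifact_path}", model_name)
    return str(version.version)
```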

Deploy the model

You can deploy the model through the SageMaker console or the SageMaker SDK. You can pull the model artifact from MLflow and use the ModelBuilder class to deploy the model.
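The following is a minimal sketch of the SDK route with `ModelBuilder`. It assumes two things that you should verify against your SageMaker Python SDK version: that `ModelBuilder` reads the MLflow model location from the `MLFLOW_MODEL_PATH` key of `model_metadata`, and that it reads the tracking server from `MLFLOW_TRACKING_ARN`. The helper names, the sample payloads, and the `ml.g5.12xlarge` instance type are hypothetical choices.

```python
from typing import Any, Dict, Optional


def mlflow_model_metadata(model_path: str, tracking_server_arn: Optional[str] = None) -> Dict[str, str]:
    """Metadata pointing ModelBuilder at an MLflow model (key names are an assumption to verify)."""
    metadata = {"MLFLOW_MODEL_PATH": model_path}  # for example models:/<name>/<version>
    if tracking_server_arn:
        metadata["MLFLOW_TRACKING_ARN"] = tracking_server_arn
    return metadata


def deploy_from_mlflow(model_path: str, tracking_server_arn: str, role_arn: str,
                       sample_input: Any, sample_output: Any,
                       instance_type: str = "ml.g5.12xlarge"):
    """Build a SageMaker model from an MLflow artifact and deploy it to a real-time endpoint."""
    from sagemaker.serve.builder.model_builder import ModelBuilder
    from sagemaker.serve.builder.schema_builder import SchemaBuilder
    from sagemaker.serve.mode.function_pointers import Mode

    builder = ModelBuilder(
        mode=Mode.SAGEMAKER_ENDPOINT,
        schema_builder=SchemaBuilder(sample_input, sample_output),
        role_arn=role_arn,
        model_metadata=mlflow_model_metadata(model_path, tracking_server_arn),
    )
    model = builder.build()
    return model.deploy(initial_instance_count=1, instance_type=instance_type)
```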

Clean up

To avoid ongoing charges, delete the resources you created as part of this post:

- Delete the MLflow tracking server.

- Run the last cell in the notebook to delete the SageMaker pipeline.
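The cleanup can also be scripted. The following is a minimal sketch using the real `Pipeline.delete()` method, the boto3 SageMaker client's `delete_mlflow_tracking_server(TrackingServerName=...)` call, and, if you deployed an endpoint, `Predictor.delete_endpoint()`. The helper name and the optional `predictor` argument are hypothetical. Objects in your S3 artifact bucket are not removed by these calls.

```python
from typing import Any, Optional


def clean_up(pipeline: Any, sagemaker_client: Any, tracking_server_name: str,
             predictor: Optional[Any] = None) -> None:
    """Delete the endpoint (if any), the pipeline, and the MLflow tracking server."""
    if predictor is not None:
        predictor.delete_endpoint()  # stop paying for the real-time endpoint
    pipeline.delete()
    sagemaker_client.delete_mlflow_tracking_server(TrackingServerName=tracking_server_name)
```

For example, you could call `clean_up(pipeline, boto3.client("sagemaker"), "<tracking_server_name>", predictor)` with the objects created earlier in the notebook.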

Conclusion

In this post, we showed how to run LLM fine-tuning and evaluation experiments at scale using SageMaker Pipelines and MLflow. You can use managed MLflow from SageMaker to compare training parameters and evaluation results, select the best model, and deploy that model in SageMaker. We also provided sample code in a GitHub repository that shows the fine-tuning, evaluation, and deployment workflow for a Llama 3 model.

You can start using SageMaker with MLflow for traditional MLOps or to run LLM experimentation at scale.

About the Authors

Jagdeep Singh Soni is a Senior Partner Solutions Architect at AWS based in the Netherlands. He uses his passion for generative AI to help customers and partners build GenAI applications using AWS services. Jagdeep has 15 years of experience in innovation, experience engineering, digital transformation, cloud architecture, and ML applications.

Dr. Sokratis Kartakis is a Principal Machine Learning and Operations Specialist Solutions Architect for Amazon Web Services. Sokratis focuses on enabling enterprise customers to industrialize their ML and generative AI solutions by exploiting AWS services and shaping their operating model, such as MLOps/FMOps/LLMOps foundations, and their transformation roadmap using best development practices. He has spent over 15 years inventing, designing, leading, and implementing innovative end-to-end production-level ML and AI solutions in the domains of energy, retail, health, finance, motorsports, and more.

Kirit Thadaka is a Senior Product Manager at AWS focused on generative AI experimentation on Amazon SageMaker. Kirit has extensive experience working with customers to build scalable MLOps workflows that make them more efficient at bringing models to production.

Piyush Kadam is a Senior Product Manager for Amazon SageMaker, a fully managed service for generative AI builders. Piyush has extensive experience delivering products that help startups and enterprise customers harness the power of foundation models.
