Build a self-service digital assistant using Amazon Lex and Knowledge Bases for Amazon Bedrock

Organizations strive to implement efficient, scalable, cost-effective, and automated customer support solutions without compromising the customer experience. Generative artificial intelligence (AI)-powered chatbots play a crucial role in delivering human-like interactions by providing responses from a knowledge base without the involvement of live agents. These chatbots can efficiently handle generic inquiries, freeing up live agents to focus on more complex tasks.

Amazon Lex provides advanced conversational interfaces using voice and text channels. Its natural language understanding capabilities identify user intent more accurately and fulfill it faster.

Amazon Bedrock simplifies the process of developing and scaling generative AI applications powered by large language models (LLMs) and other foundation models (FMs). It offers access to a diverse range of FMs from leading providers such as Anthropic, AI21 Labs, Cohere, and Stability AI, as well as Amazon's proprietary Amazon Titan models. Additionally, Knowledge Bases for Amazon Bedrock empowers you to develop applications that harness Retrieval Augmented Generation (RAG), an approach in which retrieving relevant information from data sources enhances the model's ability to generate contextually appropriate and informed responses.
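QnAIntent calls the knowledge base for you, but it can help to see the underlying RAG call on its own, for example to check answer quality before wiring up Amazon Lex. The following minimal Python sketch assumes the boto3 `bedrock-agent-runtime` client and its `retrieve_and_generate` operation. The knowledge base ID and model ARN are placeholders you supply, and the client is passed in as an argument so the request shape can be inspected without AWS credentials.

```python
def build_rag_request(question: str, kb_id: str, model_arn: str) -> dict:
    """Build keyword arguments for bedrock-agent-runtime retrieve_and_generate."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,  # placeholder: your knowledge base ID
                "modelArn": model_arn,     # placeholder: ARN of the generation model
            },
        },
    }


def ask_knowledge_base(client, question: str, kb_id: str, model_arn: str) -> str:
    """Retrieve relevant chunks and generate a grounded answer in a single call.

    `client` is expected to be boto3.client("bedrock-agent-runtime") (assumption).
    """
    response = client.retrieve_and_generate(**build_rag_request(question, kb_id, model_arn))
    return response["output"]["text"]
```

For example, `ask_knowledge_base(boto3.client("bedrock-agent-runtime"), "What did the 2023 letter say about AWS?", "<kb-id>", "<model-arn>")` returns only the generated text. The full response also carries citations if you want to show sources.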

The generative AI capability of QnAIntent in Amazon Lex lets you securely connect FMs to company data for RAG. QnAIntent provides an interface to use enterprise data and FMs on Amazon Bedrock to generate relevant, accurate, and contextual responses. You can use QnAIntent with new or existing Amazon Lex bots to automate FAQs through text and voice channels, such as Amazon Connect.

With this capability, you no longer need to create variations of intents, sample utterances, slots, and prompts to predict and handle a wide range of FAQs. You can simply connect QnAIntent to company knowledge sources, and the bot can immediately handle questions using the allowed content.

In this post, we demonstrate how to build chatbots with QnAIntent that connect to a knowledge base in Amazon Bedrock (powered by Amazon OpenSearch Serverless as a vector database) and build rich, self-service conversational experiences for your customers.

Solution overview

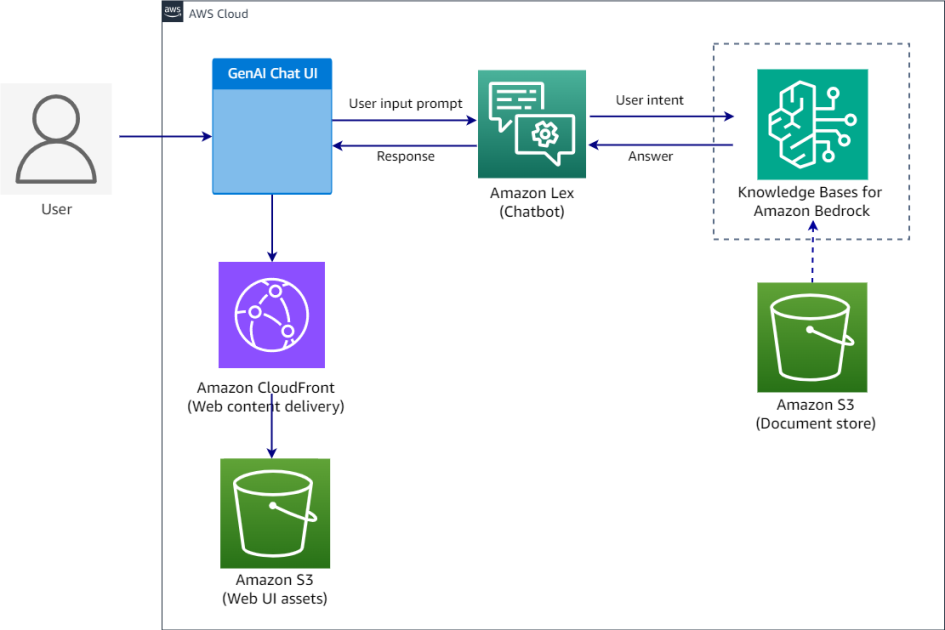

The solution uses Amazon Lex, Amazon Simple Storage Service (Amazon S3), and Amazon Bedrock in the following steps:

- Users interact with the chatbot through a prebuilt Amazon Lex web UI.

- Amazon Lex processes each user request to determine the user's intent through a process called intent recognition.

- Amazon Lex provides the built-in generative AI feature QnAIntent, which can be attached directly to a knowledge base to fulfill user requests.

- Knowledge Bases for Amazon Bedrock uses the Amazon Titan embeddings model to convert the user query to a vector and queries the knowledge base to find the chunks that are semantically similar to the user query. The user prompt is augmented with the results returned from the knowledge base as additional context and sent to the LLM to generate a response.

- The generated response is returned through QnAIntent and sent back to the user in the chat application through Amazon Lex.
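The retrieve-and-augment step can be illustrated with a self-contained toy in Python. This is not how Knowledge Bases for Amazon Bedrock is implemented: the hypothetical `embed` function is a bag-of-words stand-in for the Titan embeddings model, and `retrieve` and `augment_prompt` only mirror the idea of ranking chunks by cosine similarity and adding the best ones to the user prompt as context.

```python
from __future__ import annotations

import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for the Titan embeddings model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)
    return ranked[:k]


def augment_prompt(query: str, context: list[str]) -> str:
    """Add the retrieved chunks to the user question as additional context for the LLM."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Use only the following context to answer.\nContext:\n{joined}\n\nQuestion: {query}"
```

A real embedding model captures meaning rather than shared words, so "grow" and "grew" would score as related; the toy only matches identical tokens.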

The preceding diagram illustrates the solution architecture and workflow.

In the following sections, we look at the key components of the solution in more detail and walk through the high-level steps to implement it:

- Create a knowledge base in Amazon Bedrock backed by OpenSearch Serverless.

- Create an Amazon Lex bot.

- Create a new generative AI-powered intent in Amazon Lex using the built-in QnAIntent and point it to the knowledge base.

- Deploy the sample Amazon Lex web UI available in the GitHub repo. Use the provided AWS CloudFormation template in your preferred AWS Region and configure the bot.

Prerequisites

To implement this solution, you need the following:

- An AWS account with privileges to create AWS Identity and Access Management (IAM) roles and policies. For more information, see Overview of access management: Permissions and policies.

- Familiarity with AWS services such as Amazon S3, Amazon Lex, Amazon OpenSearch Service, and Amazon Bedrock.

- Access enabled for the Amazon Titan Embeddings G1 – Text model and Anthropic Claude 3 Haiku on Amazon Bedrock. For instructions, see Model access.

- A data source in Amazon S3. For this post, we use Amazon shareholder documents (Amazon Shareholder letters – 2023 & 2022) as a data source to hydrate the knowledge base.
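If you script this solution instead of using the console, the Amazon Bedrock and Amazon Lex APIs refer to these foundation models by ARN rather than by display name. The following small Python helper assumes the model IDs `amazon.titan-embed-text-v1` (Titan Embeddings G1 – Text) and `anthropic.claude-3-haiku-20240307-v1:0` (Claude 3 Haiku). Confirm the exact IDs on the Model access page for your Region, because they change as new versions are released.

```python
# Assumed model IDs; verify them on the Amazon Bedrock Model access page.
EMBEDDING_MODEL_ID = "amazon.titan-embed-text-v1"                 # Titan Embeddings G1 - Text
GENERATION_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"    # Claude 3 Haiku


def foundation_model_arn(region: str, model_id: str) -> str:
    """Build the ARN of an AWS-provided foundation model (these ARNs have no account ID)."""
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
```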

Create a knowledge base

To create a new knowledge base in Amazon Bedrock, complete the following steps. For more information, refer to Create a knowledge base.

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Choose Create knowledge base.

- On the Provide knowledge base details page, enter a knowledge base name, IAM permissions, and tags.

- Choose Next.

- For Data source name, Amazon Bedrock prepopulates an auto-generated data source name; however, you can change it to fit your requirements.

- Keep the data source location as the same AWS account and choose Browse S3.

- Select the S3 bucket where you uploaded the Amazon shareholder documents and choose Choose.

This populates the S3 URI, as shown in the following screenshot.

- Choose Next.

- Select the embedding model to vectorize the documents. For this post, we select Titan Embeddings G1 – Text v1.2.

- Select Quick create a new vector store to create a default vector store with OpenSearch Serverless.

- Choose Next.

- Review the configurations and create your knowledge base.

After the knowledge base is successfully created, you should see a knowledge base ID, which you need when creating the Amazon Lex bot.

- Choose Sync to index the documents.
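The console steps above can also be scripted. The following is a minimal Python sketch, assuming the boto3 `bedrock-agent` client and its `create_knowledge_base`, `get_knowledge_base`, `create_data_source`, `start_ingestion_job`, and `get_ingestion_job` operations. Unlike Quick create, the API expects an OpenSearch Serverless collection and vector index that already exist. The index field names, the data source naming scheme, and the polling intervals are hypothetical choices for illustration.

```python
from __future__ import annotations

import time

# Titan Embeddings G1 - Text; confirm the model ID for your Region.
EMBEDDING_MODEL_ARN = "arn:aws:bedrock:{region}::foundation-model/amazon.titan-embed-text-v1"


def _wait_until(fetch_status, done: set[str], poll_seconds: float) -> str:
    """Call fetch_status() until it returns one of the terminal statuses in `done`."""
    while True:
        status = fetch_status()
        if status in done:
            return status
        time.sleep(poll_seconds)


def create_kb_with_s3_source(client, *, name: str, role_arn: str, region: str,
                             collection_arn: str, index_name: str, bucket_arn: str,
                             poll_seconds: float = 5.0) -> tuple[str, str]:
    """Create a vector knowledge base on OpenSearch Serverless plus an S3 data source.

    `client` is expected to be boto3.client("bedrock-agent") (assumption).
    Returns (knowledge_base_id, data_source_id).
    """
    kb = client.create_knowledge_base(
        name=name,
        roleArn=role_arn,
        knowledgeBaseConfiguration={
            "type": "VECTOR",
            "vectorKnowledgeBaseConfiguration": {
                "embeddingModelArn": EMBEDDING_MODEL_ARN.format(region=region),
            },
        },
        storageConfiguration={
            "type": "OPENSEARCH_SERVERLESS",
            "opensearchServerlessConfiguration": {
                "collectionArn": collection_arn,
                "vectorIndexName": index_name,
                # Hypothetical field names: they must match your index mapping.
                "fieldMapping": {
                    "vectorField": "embedding",
                    "textField": "text",
                    "metadataField": "metadata",
                },
            },
        },
    )
    kb_id = kb["knowledgeBase"]["knowledgeBaseId"]
    # Creation is asynchronous, so wait for the knowledge base to become ACTIVE.
    status = _wait_until(
        lambda: client.get_knowledge_base(knowledgeBaseId=kb_id)["knowledgeBase"]["status"],
        {"ACTIVE", "FAILED"}, poll_seconds)
    if status != "ACTIVE":
        raise RuntimeError(f"knowledge base {kb_id} ended in status {status}")
    ds = client.create_data_source(
        knowledgeBaseId=kb_id,
        name=f"{name}-s3",
        dataSourceConfiguration={"type": "S3", "s3Configuration": {"bucketArn": bucket_arn}},
    )
    return kb_id, ds["dataSource"]["dataSourceId"]


def sync_data_source(client, kb_id: str, ds_id: str, poll_seconds: float = 10.0) -> str:
    """API counterpart of choosing Sync: start an ingestion job and wait for it to finish."""
    job = client.start_ingestion_job(knowledgeBaseId=kb_id, dataSourceId=ds_id)
    job_id = job["ingestionJob"]["ingestionJobId"]
    return _wait_until(
        lambda: client.get_ingestion_job(
            knowledgeBaseId=kb_id, dataSourceId=ds_id, ingestionJobId=job_id
        )["ingestionJob"]["status"],
        {"COMPLETE", "FAILED", "STOPPED"}, poll_seconds)
```

The knowledge base ID returned by `create_kb_with_s3_source` is the same ID the console displays, and it is what you enter later when configuring QnAIntent.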

Create an Amazon Lex bot

Complete the following steps to create your bot:

- On the Amazon Lex console, choose Bots in the navigation pane.

- Choose Create bot.

- For Creation method, select Create a blank bot.

- For Bot name, enter a name (for example, FAQBot).

- For Runtime role, select Create a new IAM role with basic Amazon Lex permissions to access other services on your behalf.

- Configure the remaining settings based on your requirements and choose Next.

- On the Add language to bot page, you can choose from the supported languages. For this post, we choose English (US).

- Choose Done.

After the bot is successfully created, you're redirected to create a new intent.

- Add utterances for the new intent and choose Save intent.
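The same bot can be created programmatically. The following minimal Python sketch assumes the boto3 `lexv2-models` client and its `create_bot`, `describe_bot`, `create_bot_locale`, `describe_bot_locale`, and `create_intent` operations. It expects an existing runtime role ARN, because the console's Create a new IAM role option has no single-call API equivalent. The intent name `GreetingIntent`, the default utterance, and the confidence threshold are hypothetical values.

```python
from __future__ import annotations

import time


def _wait_for_status(fetch_status, ready: set[str], poll_seconds: float) -> str:
    """Poll until the resource reaches a ready status; fail fast on Failed."""
    while True:
        status = fetch_status()
        if status in ready:
            return status
        if status == "Failed":
            raise RuntimeError("Amazon Lex reported status Failed")
        time.sleep(poll_seconds)


def create_faq_bot(client, role_arn: str, bot_name: str = "FAQBot",
                   utterances: tuple[str, ...] = ("Hello",), poll_seconds: float = 2.0) -> str:
    """Create a blank bot with an English (US) locale and one custom intent; return the bot ID.

    `client` is expected to be boto3.client("lexv2-models") (assumption).
    """
    bot_id = client.create_bot(
        botName=bot_name,
        roleArn=role_arn,
        dataPrivacy={"childDirected": False},
        idleSessionTTLInSeconds=300,
    )["botId"]
    _wait_for_status(lambda: client.describe_bot(botId=bot_id)["botStatus"],
                     {"Available"}, poll_seconds)

    # English (US), matching the language chosen in the console walkthrough.
    client.create_bot_locale(
        botId=bot_id, botVersion="DRAFT", localeId="en_US",
        nluIntentConfidenceThreshold=0.40,
    )
    _wait_for_status(
        lambda: client.describe_bot_locale(
            botId=bot_id, botVersion="DRAFT", localeId="en_US")["botLocaleStatus"],
        {"NotBuilt", "Built"}, poll_seconds)

    # The intent the console redirects you to; "GreetingIntent" is a hypothetical name.
    client.create_intent(
        intentName="GreetingIntent", botId=bot_id, botVersion="DRAFT", localeId="en_US",
        sampleUtterances=[{"utterance": u} for u in utterances],
    )
    return bot_id
```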

Add QnAIntent to your bot

Complete the following steps to add QnAIntent:

- On the Amazon Lex console, navigate to the intent you created.

- On the Add intent dropdown menu, choose Use built-in intent.

- For Built-in intent, choose AMAZON.QnAIntent – GenAI feature.

- For Intent name, enter a name (for example, QnABotIntent).

- Choose Add.

After you add the QnAIntent, you're redirected to configure the knowledge base.

- For Select model, choose Anthropic and Claude 3 Haiku.

- For Choose a knowledge store, select Knowledge base for Amazon Bedrock and enter your knowledge base ID.

- Choose Save intent.
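As a scripted alternative, the following minimal Python sketch assumes the boto3 `lexv2-models` `create_intent` operation with `parentIntentSignature="AMAZON.QnAIntent"` and a `qnAIntentConfiguration` block. The nested field names reflect our reading of the Amazon Lex V2 API, so check them against the current boto3 reference before relying on them. Where the console asks for the knowledge base ID, this configuration is assumed to take the knowledge base ARN, so a hypothetical helper builds one.

```python
def knowledge_base_arn(region: str, account_id: str, kb_id: str) -> str:
    """Build a knowledge base ARN from the ID shown on the Amazon Bedrock console."""
    return f"arn:aws:bedrock:{region}:{account_id}:knowledge-base/{kb_id}"


def add_qna_intent(client, bot_id: str, kb_arn: str, model_arn: str,
                   intent_name: str = "QnABotIntent", locale_id: str = "en_US") -> str:
    """Add AMAZON.QnAIntent to the DRAFT bot and point it at a Bedrock knowledge base.

    `client` is expected to be boto3.client("lexv2-models") (assumption).
    """
    response = client.create_intent(
        intentName=intent_name,
        parentIntentSignature="AMAZON.QnAIntent",  # the built-in generative AI intent
        botId=bot_id,
        botVersion="DRAFT",
        localeId=locale_id,
        qnAIntentConfiguration={
            "dataSourceConfiguration": {
                "bedrockKnowledgeStoreConfiguration": {"bedrockKnowledgeBaseArn": kb_arn},
            },
            # For example, the Claude 3 Haiku foundation model ARN.
            "bedrockModelConfiguration": {"modelArn": model_arn},
        },
    )
    return response["intentId"]
```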

- After you save the intent, choose Build to build the bot.

You should see a Successfully built message when the build is complete.


You can now test the bot on the Amazon Lex console.

- Choose Test to launch a draft version of your bot in a chat window within the console.

- Enter questions to get responses.
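The Build and Test steps also have API counterparts. The following minimal Python sketch assumes the boto3 `lexv2-models` operations `build_bot_locale` and `describe_bot_locale`, plus the `lexv2-runtime` operation `recognize_text`. It targets the built-in test alias `TSTALIASID`, which points at the DRAFT version that the console's Test window uses. The session ID is an arbitrary placeholder.

```python
from __future__ import annotations

import time


def build_draft_locale(models_client, bot_id: str, locale_id: str = "en_US",
                       poll_seconds: float = 5.0) -> str:
    """Counterpart of choosing Build: start a build and wait for Built or Failed.

    `models_client` is expected to be boto3.client("lexv2-models") (assumption).
    """
    models_client.build_bot_locale(botId=bot_id, botVersion="DRAFT", localeId=locale_id)
    while True:
        status = models_client.describe_bot_locale(
            botId=bot_id, botVersion="DRAFT", localeId=locale_id)["botLocaleStatus"]
        if status in ("Built", "Failed"):
            return status
        time.sleep(poll_seconds)


def ask_draft_bot(runtime_client, bot_id: str, question: str,
                  session_id: str = "console-test", locale_id: str = "en_US") -> list[str]:
    """Send one text turn to the draft bot, like typing into the console's Test window.

    `runtime_client` is expected to be boto3.client("lexv2-runtime") (assumption).
    """
    response = runtime_client.recognize_text(
        botId=bot_id, botAliasId="TSTALIASID", localeId=locale_id,
        sessionId=session_id, text=question,
    )
    # Keep only the message texts; QnAIntent answers arrive as ordinary messages.
    return [m["content"] for m in response.get("messages", []) if "content" in m]
```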

Deploy the Amazon Lex web UI

The Amazon Lex web UI is a prebuilt, fully featured web client for Amazon Lex chatbots. It eliminates the heavy lifting of recreating a chat UI from scratch. You can quickly deploy its features and minimize time to value for your chatbot-powered applications. Complete the following steps to deploy the UI:

- Follow the instructions in the GitHub repo.

- Before you deploy the CloudFormation template, update the LexV2BotId and LexV2BotAliasId values in the template based on the chatbot you created in your account.

- After the CloudFormation stack is deployed successfully, copy the WebAppUrl value from the stack Outputs tab.

- Navigate to the web UI to test the solution in your browser.
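If you prefer to deploy from code, the following minimal Python sketch assumes the boto3 `cloudformation` client (`create_stack`, the `stack_create_complete` waiter, and `describe_stacks`). It also assumes that the template exposes `LexV2BotId` and `LexV2BotAliasId` as stack parameters; the repo instructions may instead have you edit these values in the template file. The template URL is a placeholder, and the list of acknowledged capabilities is a cautious guess for a template that creates IAM resources.

```python
from __future__ import annotations


def bot_parameters(bot_id: str, bot_alias_id: str) -> list[dict]:
    """Stack parameters that point the web UI at your bot (assumed parameter names)."""
    return [
        {"ParameterKey": "LexV2BotId", "ParameterValue": bot_id},
        {"ParameterKey": "LexV2BotAliasId", "ParameterValue": bot_alias_id},
    ]


def stack_output(cfn, stack_name: str, key: str) -> str:
    """Read one value from the stack's Outputs, such as WebAppUrl."""
    stack = cfn.describe_stacks(StackName=stack_name)["Stacks"][0]
    for output in stack.get("Outputs", []):
        if output["OutputKey"] == key:
            return output["OutputValue"]
    raise KeyError(f"{key} not found in outputs of {stack_name}")


def deploy_web_ui(cfn, stack_name: str, template_url: str,
                  bot_id: str, bot_alias_id: str) -> str:
    """Create the web UI stack, wait for completion, and return its WebAppUrl.

    `cfn` is expected to be boto3.client("cloudformation") (assumption).
    """
    cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,  # placeholder: the template URL from the repo
        Parameters=bot_parameters(bot_id, bot_alias_id),
        # Acknowledge IAM resources and macro expansion in case the template needs them.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM", "CAPABILITY_AUTO_EXPAND"],
    )
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return stack_output(cfn, stack_name, "WebAppUrl")
```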

Clean up

To avoid incurring unnecessary future charges, clean up the resources you created as part of this solution:

- Delete the Amazon Bedrock knowledge base, and delete the data in the S3 bucket if you created one specifically for this solution.

- Delete the Amazon Lex bot you created.

- Delete the CloudFormation stack.
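The cleanup steps can be scripted as well. The following minimal Python sketch assumes the boto3 operations `delete_knowledge_base` (`bedrock-agent`), `delete_bot` (`lexv2-models`), `delete_stack` (`cloudformation`), and the S3 `list_objects_v2` paginator with `delete_objects`. To our understanding, deleting the knowledge base does not remove the OpenSearch Serverless collection that Quick create provisioned, so check for that collection separately.

```python
from __future__ import annotations


def clean_up(*, bedrock_agent, lex_models, cfn, kb_id: str, bot_id: str,
             stack_name: str, s3=None, bucket: str | None = None) -> None:
    """Delete the resources created in this walkthrough.

    Expected clients (assumption): boto3.client("bedrock-agent"), boto3.client("lexv2-models"),
    boto3.client("cloudformation"), and optionally boto3.client("s3").
    """
    bedrock_agent.delete_knowledge_base(knowledgeBaseId=kb_id)
    if s3 is not None and bucket:
        # Empty the bucket only if it was created for this post. Each list_objects_v2
        # page holds at most 1,000 keys, which is also the delete_objects limit.
        for page in s3.get_paginator("list_objects_v2").paginate(Bucket=bucket):
            keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
            if keys:
                s3.delete_objects(Bucket=bucket, Delete={"Objects": keys})
    lex_models.delete_bot(botId=bot_id, skipResourceInUseCheck=True)
    cfn.delete_stack(StackName=stack_name)  # asynchronous; track progress in the console
```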

Conclusion

In this post, we discussed the significance of generative AI-powered chatbots in customer support systems. We then provided an overview of the new Amazon Lex feature, QnAIntent, designed to connect FMs to your company data. Finally, we demonstrated a practical use case of setting up a Q&A chatbot to analyze Amazon shareholder documents. This implementation not only provides prompt and consistent customer service, but also frees live agents to dedicate their expertise to resolving more complex issues.

Stay up to date with the latest advancements in generative AI and start building on AWS. If you're seeking assistance on how to begin, check out the Generative AI Innovation Center.

About the Authors

Supriya Puragundla is a Senior Solutions Architect at AWS. She has over 15 years of IT experience in software development, design, and architecture. She helps key customer accounts on their data, generative AI, and AI/ML journeys. She is passionate about data-driven AI and the area of depth in ML and generative AI.


Manjula Nagineni is a Senior Solutions Architect with AWS based in New York. She works with major financial service institutions, architecting and modernizing their large-scale applications while adopting AWS Cloud services. She is passionate about designing cloud-centered big data workloads. She has over 20 years of IT experience in software development, analytics, and architecture across multiple domains such as finance, retail, and telecom.


Mani Khanuja is a Tech Lead – Generative AI Specialists, author of the book Applied Machine Learning and High Performance Computing on AWS, and a member of the Board of Directors for the Women in Manufacturing Education Foundation. She leads machine learning projects in various domains such as computer vision, natural language processing, and generative AI. She speaks at internal and external conferences such as AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.
