eSentire delivers private and secure generative AI interactions to customers with Amazon SageMaker

eSentire is an industry-leading provider of Managed Detection & Response (MDR) services protecting users, data, and applications of over 2,000 organizations globally across more than 35 industries. These security services help their customers anticipate, withstand, and recover from sophisticated cyber threats, prevent disruption from malicious attacks, and improve their security posture.

In 2023, eSentire was looking for ways to deliver differentiated customer experiences by continuing to improve the quality of its security investigations and customer communications. To accomplish this, eSentire built AI Investigator, a natural language query tool that lets their customers access security platform data by using AWS generative artificial intelligence (AI) capabilities.

In this post, we share how eSentire built AI Investigator using Amazon SageMaker to provide private and secure generative AI interactions to their customers.

Benefits of AI Investigator

Before AI Investigator, customers would engage eSentire’s Security Operation Center (SOC) analysts to understand and further investigate their asset data and associated threat cases. This involved manual effort for customers and eSentire analysts, who had to form questions and search through data across multiple tools to formulate answers.

eSentire’s AI Investigator enables users to complete complex queries using natural language by joining multiple sources of data from each customer’s own security telemetry and eSentire’s asset, vulnerability, and threat data mesh. This helps customers quickly and seamlessly explore their security data and accelerate internal investigations.

Providing AI Investigator internally to the eSentire SOC workbench has also accelerated eSentire’s investigation process by improving the scale and efficacy of multi-telemetry investigations. The LLMs augment SOC investigations with knowledge from eSentire’s security experts and security data, enabling higher-quality investigation outcomes while also reducing time to investigate. Over 100 SOC analysts are now using AI Investigator models to analyze security data and provide rapid investigation conclusions.

Solution overview

eSentire customers expect rigorous security and privacy controls for their sensitive data, which requires an architecture that doesn’t share data with external large language model (LLM) providers. Therefore, eSentire decided to build their own LLM using Llama 1 and Llama 2 foundational models. A foundation model (FM) is an LLM that has undergone unsupervised pre-training on a corpus of text. eSentire tried multiple FMs available in AWS for their proof of concept; however, the straightforward access to Meta’s Llama 2 FM through Hugging Face in SageMaker for training and inference (and its licensing structure) made Llama 2 an obvious choice.

eSentire has over 2 TB of signal data stored in their Amazon Simple Storage Service (Amazon S3) data lake. eSentire used gigabytes of additional human investigation metadata to perform supervised fine-tuning on Llama 2. This further step updates the FM by training it with data labeled by security experts (such as Q&A pairs and investigation conclusions).
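To make the idea of expert-labeled training data concrete, the following minimal sketch turns Q&A pairs into prompt/completion records in JSON Lines form, a common input layout for supervised fine-tuning. The prompt template, the function names, and the example pair are assumptions made for illustration; the post does not describe the format eSentire actually used.

```python
import json

# Hypothetical prompt template; the post does not describe the real format.
PROMPT_TEMPLATE = "### Question:\n{question}\n\n### Answer:\n"


def to_training_record(question: str, answer: str) -> dict:
    """Turn one expert-labeled Q&A pair into a prompt/completion record."""
    return {
        "prompt": PROMPT_TEMPLATE.format(question=question.strip()),
        "completion": answer.strip(),
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize Q&A pairs as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(to_training_record(q, a)) for q, a in pairs)


# Invented example pair, for illustration only.
example_pairs = [
    ("Which hosts reported the alert?", "Two hosts reported it: web-01 and db-02."),
]
jsonl_text = to_jsonl(example_pairs)
print(jsonl_text)
```

Keeping one JSON object per line makes it easy to stream large labeled datasets from Amazon S3 without loading everything into memory.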

eSentire used SageMaker on multiple levels, ultimately facilitating their end-to-end process:

- They used SageMaker notebook instances extensively to spin up GPU instances, giving them the flexibility to swap high-power compute in and out when needed. eSentire used CPU instances for data preprocessing and post-inference analysis, and GPU instances for the actual model (LLM) training.

- An additional benefit of SageMaker notebook instances is their streamlined integration with eSentire’s AWS environment. Because eSentire has vast amounts of data (terabyte scale, over 1 billion total rows of relevant data in preprocessing input) stored across AWS, in Amazon S3 and Amazon Relational Database Service (Amazon RDS) for PostgreSQL clusters, SageMaker notebook instances allowed secure movement of this volume of data directly from the AWS source (Amazon S3 or Amazon RDS) to the SageMaker notebook. They needed no additional infrastructure for data integration.

- SageMaker real-time inference endpoints provide the infrastructure needed for hosting their custom self-trained LLMs. This was very useful in combination with SageMaker integration with Amazon Elastic Container Registry (Amazon ECR), SageMaker endpoint configuration, and SageMaker models to provide the entire configuration required to spin up their LLMs as needed. The fully featured end-to-end deployment capability provided by SageMaker allowed eSentire to effortlessly and consistently update their model registry as they iterate on and update their LLMs. All of this was fully automated within the software development lifecycle (SDLC) using Terraform and GitHub, which the SageMaker ecosystem makes possible.

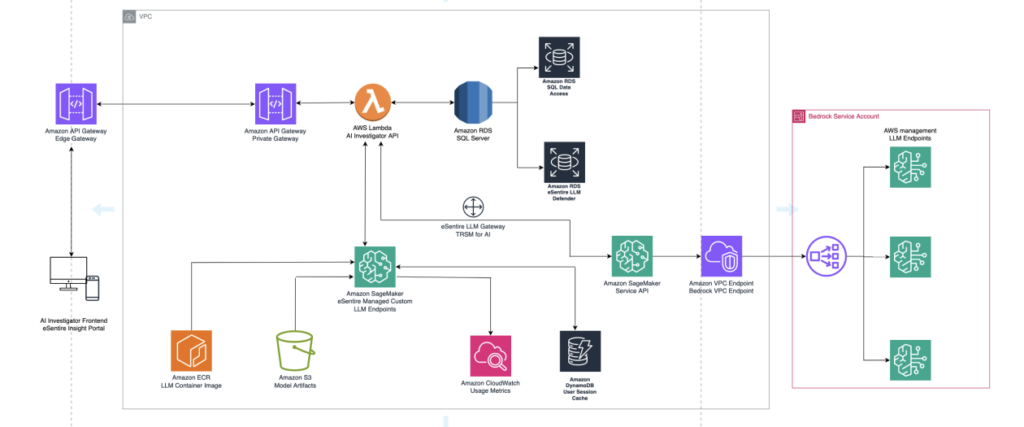

The next diagram visualizes the structure diagram and workflow.

The application’s frontend is accessible through Amazon API Gateway, using both edge and private gateways. To emulate intricate thought processes akin to those of a human investigator, eSentire engineered a system of chained agent actions. This system uses AWS Lambda and Amazon DynamoDB to orchestrate a series of LLM invocations. Each LLM call builds upon the previous one, creating a cascade of interactions that collectively produce high-quality responses. This setup makes sure that the application’s backend data sources are seamlessly integrated, thereby providing tailored responses to customer inquiries.
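The chaining pattern described here can be sketched in a few lines. In this hedged example, a plain dictionary stands in for the Amazon DynamoDB table, a stub function stands in for the model endpoint, and the step names are invented; `run_chain`, `fake_llm`, and `SessionStore` are hypothetical names, not part of eSentire’s implementation.

```python
from typing import Callable

# Stand-in for a DynamoDB table: session_id -> list of step records.
SessionStore = dict[str, list[dict]]


def run_chain(
    session_id: str,
    question: str,
    steps: list[str],
    invoke_llm: Callable[[str], str],
    store: SessionStore,
) -> str:
    """Run each step in order, feeding the previous output into the next prompt."""
    previous = question
    for step in steps:
        prompt = f"{step}\n\nInput:\n{previous}"
        output = invoke_llm(prompt)
        # Persist every intermediate result so later steps or audits can read it.
        store.setdefault(session_id, []).append({"step": step, "output": output})
        previous = output
    return previous


def fake_llm(prompt: str) -> str:
    """Deterministic stub used in place of a real model endpoint."""
    return f"[{len(prompt)} chars processed]"


store: SessionStore = {}
final_answer = run_chain(
    "session-1",
    "Which assets have critical vulnerabilities?",
    ["Plan the investigation", "Write a query", "Summarize the results"],
    fake_llm,
    store,
)
print(final_answer)
```

Storing each intermediate output keeps every handler stateless, which is one reason a key-value store pairs naturally with short-lived functions such as Lambda handlers.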

When a SageMaker endpoint is created, it is given an S3 URI that points to the model artifact and a Docker image that is stored in Amazon ECR.
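As a rough illustration of how these pieces fit together, the sketch below builds the request payloads for the three SageMaker API calls that typically stand up an endpoint: `create_model`, `create_endpoint_config`, and `create_endpoint`. The payload keys are the boto3 parameter names; every value (names, account ID, bucket, role) is a placeholder, and the helper `build_endpoint_requests` is hypothetical. The post says eSentire automated deployment with Terraform, so this shows the shape of the configuration rather than their tooling.

```python
def build_endpoint_requests(
    name: str,
    image_uri: str,
    model_data_url: str,
    role_arn: str,
    instance_type: str = "ml.g5.2xlarge",
) -> dict:
    """Build keyword arguments for the boto3 SageMaker calls
    create_model, create_endpoint_config, and create_endpoint."""
    return {
        "create_model": {
            "ModelName": name,
            "ExecutionRoleArn": role_arn,
            "PrimaryContainer": {
                "Image": image_uri,  # Docker image stored in Amazon ECR
                "ModelDataUrl": model_data_url,  # S3 URI of the model artifact
            },
        },
        "create_endpoint_config": {
            "EndpointConfigName": f"{name}-config",
            "ProductionVariants": [
                {
                    "VariantName": "AllTraffic",
                    "ModelName": name,
                    "InstanceType": instance_type,
                    "InitialInstanceCount": 1,
                }
            ],
        },
        "create_endpoint": {
            "EndpointName": f"{name}-endpoint",
            "EndpointConfigName": f"{name}-config",
        },
    }


# Placeholder values for illustration only.
endpoint_requests = build_endpoint_requests(
    name="example-llm",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-llm:latest",
    model_data_url="s3://example-bucket/models/example-llm/model.tar.gz",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
)

# With AWS credentials configured, the payloads could be applied like this:
#   import boto3
#   sm = boto3.client("sagemaker")
#   sm.create_model(**endpoint_requests["create_model"])
#   sm.create_endpoint_config(**endpoint_requests["create_endpoint_config"])
#   sm.create_endpoint(**endpoint_requests["create_endpoint"])
```

Building the payloads separately from the API calls keeps the configuration easy to review and test before anything is provisioned.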

For their proof of concept, eSentire selected the NVIDIA A10G Tensor Core GPU, housed in an ml.g5.2xlarge instance, for its balance of performance and cost. For LLMs with significantly larger numbers of parameters, which demand more computational power for both training and inference, eSentire used ml.g5.12xlarge instances equipped with four GPUs. This was necessary because the compute and memory that LLMs require grow steeply with the number of parameters. eSentire plans to use P4 and P5 instance types to scale their production workloads.
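A back-of-the-envelope calculation shows why larger models push past a single GPU. The sketch below estimates the memory needed for the model weights alone at 16-bit precision (2 bytes per parameter) and compares it with the 24 GB of an A10G. The parameter counts are the published Llama 2 sizes; the post does not say which sizes eSentire deployed. Activations, the KV cache, and optimizer state during training all add to this figure, and splitting a model across GPUs assumes some form of model sharding, so treat the results as lower bounds.

```python
A10G_MEMORY_GB = 24  # memory per NVIDIA A10G GPU


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for the model weights alone (2 bytes per parameter for fp16/bf16)."""
    return num_params * bytes_per_param / 1e9


def fits_in_gpus(num_params: float, num_gpus: int, bytes_per_param: int = 2) -> bool:
    """True if the weights fit in the combined memory of num_gpus A10G GPUs."""
    return weight_memory_gb(num_params, bytes_per_param) <= num_gpus * A10G_MEMORY_GB


for billions in (7, 13, 70):
    params = billions * 1e9
    print(
        f"{billions}B parameters: {weight_memory_gb(params):.0f} GB of fp16 weights, "
        f"fits on 1 GPU: {fits_in_gpus(params, 1)}, "
        f"fits on 4 GPUs: {fits_in_gpus(params, 4)}"
    )
```

Under these assumptions, a 7B-parameter model fits on one A10G, a 13B-parameter model needs the four-GPU instance, and a 70B-parameter model exceeds even that at 16-bit precision.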

Additionally, a monitoring framework that captures the inputs and outputs of AI Investigator was necessary to give threat hunters visibility into LLM interactions. To accomplish this, the application integrates with the open source eSentire LLM Gateway project to monitor customer queries, backend agent actions, and application responses. This framework builds confidence in complex LLM applications by providing a security monitoring layer that detects malicious poisoning and injection attacks, while also providing governance and support for compliance through logging of user activity. The LLM Gateway can also be integrated with other LLM services, such as Amazon Bedrock.
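The core idea of such a monitoring layer, recording every prompt and response alongside the user who issued it, can be sketched as a thin wrapper around the model call. This is a generic illustration and not the eSentire LLM Gateway API; `monitored`, `echo_llm`, and the log fields are hypothetical names chosen for the example.

```python
import json
import time
from typing import Callable


def monitored(
    invoke_llm: Callable[[str], str], audit_log: list[str], user_id: str
) -> Callable[[str], str]:
    """Wrap an LLM call so every prompt and response is recorded as a JSON log line."""

    def wrapper(prompt: str) -> str:
        started = time.time()
        response = invoke_llm(prompt)
        audit_log.append(
            json.dumps(
                {
                    "user_id": user_id,
                    "timestamp": started,
                    "latency_s": round(time.time() - started, 3),
                    "prompt": prompt,
                    "response": response,
                }
            )
        )
        return response

    return wrapper


def echo_llm(prompt: str) -> str:
    """Stub model used in place of a real endpoint."""
    return prompt.upper()


audit_log: list[str] = []
ask = monitored(echo_llm, audit_log, user_id="analyst-42")
answer = ask("list open threat cases")
print(audit_log[0])
```

Because the wrapper has the same signature as the function it wraps, it can sit in front of any model backend, and the structured log lines can be forwarded to whatever detection tooling inspects them for injection attempts.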

Amazon Bedrock lets you customize FMs privately and interactively, without the need for coding. Initially, eSentire’s focus was on training bespoke models using SageMaker. As their strategy evolved, they began to explore a broader array of FMs, evaluating their in-house trained models against those provided by Amazon Bedrock. Amazon Bedrock offers a practical environment for benchmarking and a cost-effective way to manage workloads because it is serverless. This serves eSentire well, especially when customer queries are sporadic, making serverless an economical alternative to persistently running SageMaker instances.
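The cost trade-off for sporadic traffic comes down to simple arithmetic: an always-on instance costs the same whether or not it serves requests, while pay-per-token pricing scales with usage. The sketch below computes the monthly request volume at which the two options cost the same. All prices and token counts are placeholders invented for the example, not AWS pricing, and the single per-token price is a simplification.

```python
HOURS_PER_MONTH = 730.0  # average number of hours in a month


def always_on_monthly_cost(hourly_rate: float) -> float:
    """Cost of keeping one endpoint instance running all month."""
    return hourly_rate * HOURS_PER_MONTH


def per_request_monthly_cost(
    requests: int, tokens_per_request: int, price_per_1k_tokens: float
) -> float:
    """Cost when you pay only for the tokens actually processed."""
    return requests * tokens_per_request / 1000 * price_per_1k_tokens


def break_even_requests(
    hourly_rate: float, tokens_per_request: int, price_per_1k_tokens: float
) -> float:
    """Monthly request volume at which both options cost the same."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return always_on_monthly_cost(hourly_rate) / cost_per_request


# Placeholder values, NOT real AWS pricing.
HOURLY_RATE = 1.50
TOKENS_PER_REQUEST = 2000
PRICE_PER_1K_TOKENS = 0.002

threshold = break_even_requests(HOURLY_RATE, TOKENS_PER_REQUEST, PRICE_PER_1K_TOKENS)
print(f"Pay-per-token is cheaper below about {threshold:,.0f} requests per month")
```

Substituting current prices for your Region and model gives a quick check on whether a workload is sporadic enough for the serverless option to win.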

From a security perspective as well, Amazon Bedrock doesn’t share users’ inputs and model outputs with any model providers. Additionally, eSentire has custom guardrails for NL2SQL applied to their models.
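The post does not describe how eSentire’s NL2SQL guardrails work. As one example of what such a guardrail might check, the sketch below accepts generated SQL only if it is a single read-only `SELECT` statement. The function name and keyword list are assumptions; the check is keyword-based rather than a real SQL parser, so it is deliberately conservative (for instance, it rejects a query whose string literal contains the word "update") and would normally be paired with a read-only database role.

```python
import re

# Keywords that indicate a statement could modify data or schema.
FORBIDDEN_KEYWORDS = {
    "insert", "update", "delete", "drop", "alter", "truncate",
    "grant", "create", "into", "merge", "call", "copy", "execute",
}


def is_read_only_select(sql: str) -> bool:
    """Accept only a single SELECT statement with no data-modifying keywords."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:  # more than one statement was supplied
        return False
    tokens = re.findall(r"[a-z_][a-z0-9_]*", statement.lower())
    if not tokens or tokens[0] != "select":
        return False
    return not FORBIDDEN_KEYWORDS.intersection(tokens)


candidates = [
    "SELECT name FROM assets WHERE severity = 'critical';",
    "DELETE FROM assets",
    "SELECT 1; DROP TABLE assets",
]
for sql in candidates:
    print(is_read_only_select(sql), "<-", sql)
```

Running the check on model output before it reaches the database means a manipulated prompt cannot turn a question into a destructive statement, even if the model is persuaded to generate one.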

Results

The following screenshot shows an example of eSentire’s AI Investigator output. As illustrated, a natural language query is posed to the application. The tool is able to correlate multiple datasets and present a response.

Dustin Hillard, CTO of eSentire, shares: “eSentire customers and analysts ask hundreds of security data exploration questions per month, which typically take hours to complete. AI Investigator is now with an initial rollout to over 100 customers and more than 100 SOC analysts, providing a self-serve immediate response to complex questions about their security data. eSentire LLM models are saving thousands of hours of customer and analyst time.”

Conclusion

In this post, we shared how eSentire built AI Investigator, a generative AI solution that provides private and secure self-serve customer interactions. Customers can get near real-time answers to complex questions about their data. AI Investigator has also saved eSentire significant analyst time.

The aforementioned LLM Gateway project is eSentire’s own product, and AWS bears no responsibility for it.

If you have any comments or questions, share them in the comments section.

About the Authors

Aishwarya Subramaniam is a Sr. Solutions Architect in AWS. She works with commercial customers and AWS partners to accelerate customers’ business outcomes by providing expertise in analytics and AWS services.

Ilia Zenkov is a Senior AI Developer specializing in generative AI at eSentire. He focuses on advancing cybersecurity with expertise in machine learning and data engineering. His background includes pivotal roles in developing ML-driven cybersecurity and drug discovery platforms.

Dustin Hillard is responsible for leading product development and technology innovation, systems teams, and corporate IT at eSentire. He has deep ML experience in speech recognition, translation, natural language processing, and advertising, and has published over 30 papers in these areas.