Efficient and cost-effective multi-tenant LoRA serving with Amazon SageMaker

In the rapidly evolving landscape of artificial intelligence (AI), the rise of generative AI models has ushered in a new era of personalized and intelligent experiences. Organizations are increasingly using the power of these language models to drive innovation and enhance their services, from natural language processing to content generation and beyond.

Using generative AI models in the enterprise environment, however, requires taming their intrinsic power and enhancing their skills to address specific customer needs. In cases where an out-of-the-box model is missing knowledge of domain- or organization-specific terminology, a custom fine-tuned model, also called a domain-specific large language model (LLM), might be an option for performing standard tasks in that domain or micro-domain. BloombergGPT is an example of an LLM that was trained from scratch to have a better understanding of highly specialized vocabulary found in the financial domain. In the same sense, domain specificity can be addressed through fine-tuning at a smaller scale. Customers are fine-tuning generative AI models for domains including finance, sales, marketing, travel, IT, HR, procurement, healthcare and life sciences, customer service, and many more. Additionally, independent software vendors (ISVs) are building secure, managed, multi-tenant, end-to-end generative AI platforms with models that are customized and personalized based on their customers’ datasets and domains. For example, Forethought introduced SupportGPT, a generative AI platform for customer support.

As the demand for personalized and specialized AI solutions grows, businesses often find themselves grappling with the challenge of efficiently managing and serving a multitude of fine-tuned models across diverse use cases and customer segments. With the need to serve a wide range of AI-powered use cases, from resume parsing and job skill matching to domain-specific email generation and natural language understanding, these businesses are often left with the daunting task of managing hundreds of fine-tuned models, each tailored to specific customer needs or use cases. The complexities of this challenge are compounded by the inherent scalability and cost-effectiveness concerns that come with deploying and maintaining such a diverse model ecosystem. Traditional approaches to model serving can quickly become unwieldy and resource intensive, leading to increased infrastructure costs, operational overhead, and potential performance bottlenecks.

Fine-tuning enormous language models is prohibitively expensive in terms of the hardware required and the storage and switching cost of hosting independent instances for different tasks. LoRA (Low-Rank Adaptation) is an efficient adaptation strategy that neither introduces inference latency nor reduces input sequence length while retaining high model quality. Importantly, it allows for quick task switching when deployed as a service by sharing the vast majority of the model parameters.

In this post, we explore a solution that addresses these challenges head-on using LoRA serving with Amazon SageMaker. By using the new performance optimizations of LoRA techniques in SageMaker large model inference (LMI) containers along with inference components, we demonstrate how organizations can efficiently manage and serve their growing portfolio of fine-tuned models, while optimizing costs and providing seamless performance for their customers.

The latest SageMaker LMI container offers unmerged-LoRA inference, sped up with our LMI-Dist inference engine and an OpenAI-style chat schema. To learn more about LMI, refer to LMI Starting Guide, LMI handlers Inference API Schema, and Chat Completions API Schema.

New LMI features for serving LoRA adapters at scale on SageMaker

There are two kinds of LoRA that can be put onto various engines:

- Merged LoRA – This applies the adapter by modifying the base model in place. It has zero added latency while running, but has a cost to apply or unapply the merge. It works best for cases with only a few adapters. It’s best for single-adapter batches, and doesn’t support multi-adapter batches.

- Unmerged LoRA – This alters the model operators to factor in the adapters without changing the base model. It has a higher inference latency because of the additional adapter operations. However, it does support multi-adapter batches. It works best for use cases with a large number of adapters.
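
The trade-off between the two modes can be illustrated on a toy linear layer. The following is a minimal sketch in plain Python, not the LMI implementation; the matrices, the `scale` factor, and the helper names are hypothetical. Merging folds the low-rank update into the base weights once, whereas the unmerged path leaves the base weights untouched and adds the adapter term per request, which is what allows requests for different adapters to share a batch.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b, scale=1.0):
    """Return a + scale * b, element-wise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Toy base weight W (2x3) and one rank-1 adapter, B (2x1) @ A (1x3).
W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
A = [[0.5, 0.5, 0.0]]
B = [[2.0], [4.0]]
scale = 0.5                   # stands in for lora_alpha / rank
x = [[1.0], [2.0], [3.0]]     # one input column vector

# Merged: pay once to build W' = W + scale * (B @ A), then y = W' @ x.
W_merged = add(W, matmul(B, A), scale)
y_merged = matmul(W_merged, x)

# Unmerged: base weights stay shared, adapter term is added per request.
y_unmerged = add(matmul(W, x), matmul(B, matmul(A, x)), scale)
```

Both paths produce the same output; they differ only in where the cost is paid (a one-time merge versus extra per-request operations).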

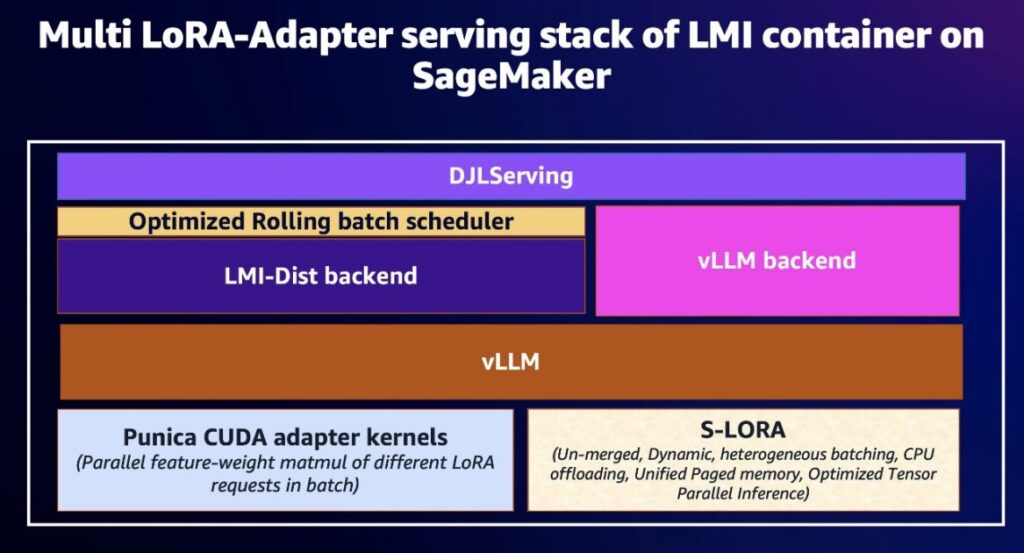

The new LMI container offers out-of-the-box integration and abstraction with SageMaker for hosting multiple unmerged LoRA adapters with higher performance (low latency and high throughput). It offers two backends for serving LoRA adapters: the LMI-Dist backend (recommended) and the vLLM backend. Both backends are based on the open source vLLM library, which in turn uses S-LoRA and Punica, but the LMI-Dist backend provides an additional optimized continuous (rolling) batching implementation, so we recommend starting with it. You aren’t required to configure these libraries separately; the LMI container provides the higher-level abstraction through the vLLM and LMI-Dist backends.

S-LoRA stores all adapters in main memory and fetches the adapters used by the currently running queries to GPU memory. To use GPU memory efficiently and reduce fragmentation, S-LoRA proposes unified paging. Unified paging uses a unified memory pool to manage dynamic adapter weights with different ranks and KV cache tensors with varying sequence lengths. Additionally, S-LoRA employs a novel tensor parallelism strategy and highly optimized custom CUDA kernels for heterogeneous batching of LoRA computation. Collectively, these features enable S-LoRA to serve thousands of LoRA adapters on a single GPU or across multiple GPUs with a small overhead.

Punica is designed to efficiently serve multiple LoRA models on a shared GPU cluster. It achieves this by following three design guidelines:

- Consolidating multi-tenant LoRA serving workloads to a small number of GPUs to increase overall GPU utilization

- Enabling batching for different LoRA models to improve performance and GPU utilization

- Focusing on the decode stage performance, which is the predominant factor in the cost of model serving

Punica uses a new CUDA kernel design called Segmented Gather Matrix-Vector Multiplication (SGMV) to batch GPU operations for concurrent runs of multiple LoRA models, significantly improving GPU efficiency in terms of memory and computation. Punica also implements a scheduler that routes requests to active GPUs and migrates requests for consolidation, optimizing GPU resource allocation. Overall, Punica achieves high throughput and low latency in serving multi-tenant LoRA models on a shared GPU cluster. For more information, read the Punica whitepaper.
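
The idea behind segmented batching can be illustrated on the CPU. The following is a conceptual sketch only, written in plain Python; it is not Punica's CUDA kernel, and the function names and toy adapters are hypothetical. A mixed batch is grouped into contiguous segments by adapter, each segment is processed with that adapter's low-rank matrices, and the results are scattered back to the original request order.

```python
from itertools import groupby


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_delta(adapter, x):
    """Low-rank update B @ (A @ x) for one input vector."""
    A, B = adapter
    return matvec(B, matvec(A, x))


def segmented_lora_deltas(xs, adapter_ids, adapters):
    """Group a mixed batch into per-adapter segments, then scatter results back."""
    order = sorted(range(len(xs)), key=lambda i: adapter_ids[i])
    out = [None] * len(xs)
    segments = []
    for name, idxs in groupby(order, key=lambda i: adapter_ids[i]):
        idxs = list(idxs)
        segments.append((name, idxs))
        for i in idxs:  # a GPU kernel would handle the whole segment at once
            out[i] = lora_delta(adapters[name], xs[i])
    return out, segments


# Two hypothetical rank-1 adapters, each stored as an (A, B) pair.
adapters = {
    "es": ([[1.0, 0.0]], [[1.0], [2.0]]),
    "fr": ([[0.0, 1.0]], [[3.0], [0.0]]),
}
xs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
adapter_ids = ["es", "fr", "es"]
deltas, segments = segmented_lora_deltas(xs, adapter_ids, adapters)
```

Here requests 0 and 2 form one segment for the `es` adapter and request 1 forms a segment for `fr`, so three requests for two adapters are served in a single pass over the batch.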

The following figure shows the multi-LoRA adapter serving stack of the LMI container on SageMaker.

As shown in the preceding figure, the LMI container provides the higher-level abstraction through the vLLM and LMI-Dist backends to serve LoRA adapters at scale on SageMaker. As a result, you’re not required to configure the underlying libraries (S-LoRA, Punica, or vLLM) separately. However, there might be cases where you want to control some of the performance-driving parameters depending on your use case and application performance requirements. The following are the common configuration options the LMI container provides to tune LoRA serving. For more details on configuration options specific to each backend, refer to vLLM Engine User Guide and LMI-Dist Engine User Guide.
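
As an illustration of what such tuning might look like, the following sketch renders a small set of LoRA-related options into the `key=value` text of a `serving.properties` file. The option names (`option.enable_lora`, `option.max_loras`, `option.max_lora_rank`, `option.max_cpu_loras`, `option.rolling_batch`) and all values are assumptions for illustration; verify them against the engine user guide for your container version before use. The helper function is hypothetical.

```python
def render_serving_properties(options):
    """Render a dict as the key=value lines of a serving.properties file."""
    lines = []
    for key, value in options.items():
        if isinstance(value, bool):
            value = str(value).lower()  # properties files use true/false
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


# Assumed option names and illustrative values; check the LMI guides.
lora_options = {
    "option.model_id": "TheBloke/Llama-2-7B-Chat-fp16",
    "option.rolling_batch": "lmi-dist",  # recommended backend per this post
    "option.enable_lora": True,          # turn on adapter support
    "option.max_loras": 4,               # adapters usable in one batch
    "option.max_lora_rank": 16,          # largest adapter rank to allow
    "option.max_cpu_loras": 8,           # adapters cached in CPU memory
}
properties_text = render_serving_properties(lora_options)
```

Keeping the options in one dictionary makes it straightforward to vary a single parameter, such as the number of adapters per batch, while benchmarking.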

Design patterns for serving fine-tuned LLMs at scale

Enterprises grappling with the complexities of managing generative AI models often encounter scenarios where a robust and flexible design pattern is crucial. One common use case involves a single base model with multiple LoRA adapters, each tailored to specific customer needs or use cases. This approach allows organizations to use a foundational language model while maintaining the agility to fine-tune and deploy customized versions for their diverse customer base.

Single-base model with multiple fine-tuned LoRA adapters

An enterprise offering a resume parsing and job skill matching service might use a single high-performance base model, such as Mistral 7B. The Mistral 7B base model is particularly well-suited for job-related content generation tasks, such as creating personalized job descriptions and tailored email communications. Mistral’s strong performance in natural language generation and its ability to capture industry-specific terminology and writing styles make it a valuable asset for such an enterprise’s customers in the HR and recruitment space. By fine-tuning Mistral 7B with LoRA adapters, enterprises can make sure that the generated content aligns with the unique branding, tone, and requirements of each customer, delivering a highly personalized experience.

Multi-base models with multiple fine-tuned LoRA adapters

On the other hand, the same enterprise might use the Llama 3 base model for more general natural language processing tasks, such as resume parsing, skills extraction, and candidate matching. Llama 3’s broad knowledge base and robust language understanding capabilities enable it to handle a wide range of documents and formats, making sure the enterprise’s services can effectively process and analyze candidate information, regardless of the source. By fine-tuning Llama 3 with LoRA adapters, such enterprises can tailor the model’s performance to specific customer requirements, such as regional dialects, industry-specific terminology, or unique data formats. By employing a multi-base model, multi-adapter design pattern, enterprises can take advantage of the unique strengths of each language model to deliver a comprehensive and highly personalized service for matching job profiles to candidate resumes. This approach allows enterprises to cater to the diverse needs of their customers, making sure each client receives tailored AI-powered solutions that enhance their recruitment and talent management processes.

Effectively implementing and managing these design patterns, where multiple base models are coupled with numerous LoRA adapters, is a key challenge that enterprises must address to unlock the full potential of their generative AI investments. A well-designed and scalable approach to model serving is crucial in delivering cost-effective, high-performance, and personalized experiences to customers.

Solution overview

The following sections outline the coding steps to deploy a base LLM, TheBloke/Llama-2-7B-Chat-fp16, with LoRA adapters on SageMaker. The steps involve preparing a compressed archive with the base model files and LoRA adapter files, uploading it to Amazon Simple Storage Service (Amazon S3), selecting and configuring the SageMaker LMI container to enable LoRA support, creating a SageMaker endpoint configuration and endpoint, defining an inference component for the model, and sending inference requests that specify different LoRA adapters, such as Spanish (“es”) and French (“fr”), in the request payload to use those fine-tuned language capabilities. For more information on deploying models using SageMaker inference components, see Amazon SageMaker adds new inference capabilities to help reduce foundation model deployment costs and latency.

To showcase multi-base models with their LoRA adapters, we add another base model, mistralai/Mistral-7B-v0.1, and its LoRA adapter to the same SageMaker endpoint, as shown in the following diagram.

Prerequisites

You need to complete some prerequisites before you can run the notebook:

Upload your LoRA adapters to Amazon S3

To prepare the LoRA adapters, create an adapters.tar.gz archive containing the LoRA adapters directory. The adapters directory should contain a subdirectory for each LoRA adapter, with each adapter subdirectory containing the adapter_model.bin file (the adapter weights) and the adapter_config.json file (the adapter configuration). We typically obtain these adapter files by using the PeftModel.save_pretrained() method from the Peft library. After you assemble the adapters directory with the adapter files, you compress it into an adapters.tar.gz archive and upload it to an S3 bucket for deployment or sharing. We include the LoRA adapters in the adapters directory as follows:
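
The layout and packaging step described above can be sketched with the Python standard library. This is a minimal illustration, assuming an `adapters/<name>/` layout with the two files named in the text; the `package_adapters` helper is hypothetical, and the demo writes placeholder files that stand in for real Peft output.

```python
import json
import tarfile
import tempfile
from pathlib import Path

REQUIRED_FILES = ("adapter_config.json", "adapter_model.bin")


def package_adapters(adapters_dir, archive_path):
    """Check every adapter subdirectory, then write the .tar.gz archive."""
    adapters_dir = Path(adapters_dir)
    names = sorted(p.name for p in adapters_dir.iterdir() if p.is_dir())
    for name in names:
        missing = [f for f in REQUIRED_FILES
                   if not (adapters_dir / name / f).is_file()]
        if missing:
            raise FileNotFoundError(f"adapter '{name}' is missing {missing}")
    with tarfile.open(archive_path, "w:gz") as tar:
        # Keep "adapters/" as the top-level directory inside the archive.
        tar.add(adapters_dir, arcname="adapters")
    return names


# Demo with placeholder files standing in for real adapter output.
work = Path(tempfile.mkdtemp())
for lang in ("es", "fr"):
    adapter_dir = work / "adapters" / lang
    adapter_dir.mkdir(parents=True)
    (adapter_dir / "adapter_config.json").write_text(json.dumps({"r": 8}))
    (adapter_dir / "adapter_model.bin").write_bytes(b"\x00")  # dummy weights
archive = work / "adapters.tar.gz"
packaged = package_adapters(work / "adapters", archive)
```

Validating the layout before compressing catches a missing weights or configuration file locally, rather than at container startup.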

Download LoRA adapters, compress them, and upload the compressed file to Amazon S3:

Select an LMI container and configure LMI to enable LoRA

SageMaker provides optimized containers for LMI that support different frameworks for model parallelism, allowing the deployment of LLMs across multiple GPUs. For this post, we employ the DeepSpeed container, which encompasses frameworks such as DeepSpeed and vLLM, among others. See the following code:

Create a SageMaker endpoint configuration

Create an endpoint configuration using the appropriate instance type. Set ContainerStartupHealthCheckTimeoutInSeconds to account for the time taken to download the LLM weights from Amazon S3 or the model hub, and the time taken to load the model on the GPUs:
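
A minimal sketch of this step follows. It only builds the request dictionary for the SageMaker `create_endpoint_config` API so that it can be inspected without AWS credentials. The builder function, the names, the role ARN, the instance type, and the timeout values are all hypothetical placeholders, not the values used in the original notebook.

```python
def build_endpoint_config_request(name, role_arn, instance_type,
                                  startup_timeout_s=900,
                                  download_timeout_s=900):
    """Build keyword arguments for sagemaker.create_endpoint_config."""
    return {
        "EndpointConfigName": name,
        "ExecutionRoleArn": role_arn,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "InstanceType": instance_type,
                "InitialInstanceCount": 1,
                # Time allowed to pull weights from Amazon S3 or the hub.
                "ModelDataDownloadTimeoutInSeconds": download_timeout_s,
                # Time allowed for the container to load the model on GPUs.
                "ContainerStartupHealthCheckTimeoutInSeconds": startup_timeout_s,
                "RoutingConfig": {
                    "RoutingStrategy": "LEAST_OUTSTANDING_REQUESTS"
                },
            }
        ],
    }


# Placeholder values for illustration only.
endpoint_config_request = build_endpoint_config_request(
    name="lora-demo-endpoint-config",
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    instance_type="ml.g5.12xlarge",
)

# With credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("sagemaker").create_endpoint_config(**endpoint_config_request)
```

No model is attached to the variant here because, with inference components, models are added to the endpoint in a later step.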

Create a SageMaker endpoint

Create a SageMaker endpoint based on the endpoint configuration defined in the previous step. You use this endpoint to host the inference component (model) and to make invocations.

Create a SageMaker inference component (model)

Now that you have created a SageMaker endpoint, let’s create our model as an inference component. The SageMaker inference component enables you to deploy one or more foundation models (FMs) on the same SageMaker endpoint and control how many accelerators and how much memory is reserved for each FM. See the following code:
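
The following sketch builds the request for the SageMaker `create_inference_component` API, showing where the accelerator and memory reservations go. The builder function, the resource names, and the numbers are hypothetical placeholders; the model named in `ModelName` is assumed to have been created already with the LMI container image.

```python
def build_inference_component_request(component_name, endpoint_name,
                                      model_name, accelerators=1,
                                      min_memory_mb=1024, copies=1,
                                      variant_name="AllTraffic"):
    """Build keyword arguments for sagemaker.create_inference_component."""
    return {
        "InferenceComponentName": component_name,
        "EndpointName": endpoint_name,
        "VariantName": variant_name,
        "Specification": {
            "ModelName": model_name,
            "ComputeResourceRequirements": {
                # Accelerators reserved for each copy of this model.
                "NumberOfAcceleratorDevicesRequired": accelerators,
                # Host memory reserved for each copy, in MB.
                "MinMemoryRequiredInMb": min_memory_mb,
            },
        },
        "RuntimeConfig": {"CopyCount": copies},
    }


# Placeholder names and sizes for illustration only.
component_request = build_inference_component_request(
    component_name="llama2-lora-component",
    endpoint_name="lora-demo-endpoint",
    model_name="llama2-lora-model",
    accelerators=2,
    min_memory_mb=4096,
)

# With credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("sagemaker").create_inference_component(**component_request)
```

Because the reservation is per component, a second base model can later be placed on the same endpoint with its own accelerator and memory budget.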

Make inference requests using different LoRA adapters

With the endpoint and inference model ready, you can now send requests to the endpoint using the LoRA adapters you fine-tuned for Spanish and French languages. The specific LoRA adapter is specified in the request payload under the "adapters" field. We use "es" for the Spanish language adapter and "fr" for the French language adapter, as shown in the following code:
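
A minimal sketch of such a request body follows. Only the "adapters" field and the "es" and "fr" names come from this post; the "inputs" and "parameters" fields, the one-adapter-per-prompt convention, the prompts, and the helper function are assumptions that should be checked against the LMI inference API schema.

```python
import json


def build_request_body(prompts, adapters, max_new_tokens=128):
    """Serialize a batch of prompts, each paired with a LoRA adapter name."""
    if len(prompts) != len(adapters):
        raise ValueError("provide exactly one adapter name per prompt")
    return json.dumps({
        "inputs": prompts,
        "adapters": adapters,  # adapter names, for example "es" or "fr"
        "parameters": {"max_new_tokens": max_new_tokens},
    })


body = build_request_body(
    prompts=["Escribe un saludo breve.", "Ecris une courte salutation."],
    adapters=["es", "fr"],
)

# With credentials configured, the body could be sent as follows, where
# the endpoint and component names are placeholders:
#   import boto3
#   boto3.client("sagemaker-runtime").invoke_endpoint(
#       EndpointName="lora-demo-endpoint",
#       InferenceComponentName="llama2-lora-component",
#       ContentType="application/json",
#       Body=body,
#   )
```

Selecting the adapter in the payload rather than in the endpoint name is what lets a single inference component serve many tenants.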

Add another base model and inference component and its LoRA adapter

Let’s add another base model and its LoRA adapter to the same SageMaker endpoint for multi-base models with multiple fine-tuned LoRA adapters. The code is very similar to the previous code for creating the Llama base model and its LoRA adapter.

Configure the SageMaker LMI container to host the base model (mistralai/Mistral-7B-v0.1) and its LoRA adapter (mistral-lora-multi-adapter/adapters/fr):

Create a new SageMaker model and inference component for the base model (mistralai/Mistral-7B-v0.1) and its LoRA adapter (mistral-lora-multi-adapter/adapters/fr):

Invoke the same SageMaker endpoint for the newly created inference component for the base model (mistralai/Mistral-7B-v0.1) and its LoRA adapter (mistral-lora-multi-adapter/adapters/fr):

Clean up

Delete the SageMaker inference components, models, endpoint configuration, and endpoint to avoid incurring unnecessary costs:
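
The deletion order above can be sketched as a small helper. The function and resource names are hypothetical; the four operations are the standard SageMaker delete calls. The demo uses a recording stand-in instead of a real client so that the sequence can be inspected without touching an AWS account.

```python
def clean_up(sm_client, components, models, endpoint_config_name, endpoint_name):
    """Delete resources in the order listed in this section."""
    for name in components:
        sm_client.delete_inference_component(InferenceComponentName=name)
    for name in models:
        sm_client.delete_model(ModelName=name)
    sm_client.delete_endpoint_config(EndpointConfigName=endpoint_config_name)
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    # Deletions are asynchronous; a real script may need to wait for each
    # inference component to finish deleting before removing the endpoint.


class RecordingClient:
    """Stand-in for boto3.client("sagemaker") that only records calls."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, operation):
        def call(**kwargs):
            self.calls.append((operation, kwargs))
        return call


# Placeholder resource names for illustration only.
fake_client = RecordingClient()
clean_up(
    fake_client,
    components=["llama2-lora-component", "mistral-lora-component"],
    models=["llama2-lora-model", "mistral-lora-model"],
    endpoint_config_name="lora-demo-endpoint-config",
    endpoint_name="lora-demo-endpoint",
)
deleted = [operation for operation, _ in fake_client.calls]
```

To run it for real, pass `boto3.client("sagemaker")` in place of the recording client.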

Conclusion

The ability to efficiently manage and serve a diverse portfolio of fine-tuned generative AI models is paramount if you want your organization to deliver personalized and intelligent experiences at scale in today’s rapidly evolving AI landscape. With the inference capabilities of SageMaker LMI coupled with the performance optimizations of LoRA techniques, you can overcome the challenges of multi-tenant fine-tuned LLM serving. This solution enables you to consolidate AI workloads, batch operations across multiple models, and optimize resource utilization for cost-effective, high-performance delivery of tailored AI solutions to your customers. As demand for specialized AI experiences continues to grow, we’ve shown how the scalable infrastructure and cutting-edge model serving techniques of SageMaker position AWS as a powerful platform for unlocking generative AI’s full potential. To start exploring the benefits of this solution for yourself, we encourage you to use the code example and resources we’ve provided in this post.

About the authors

Michael Nguyen is a Senior Startup Solutions Architect at AWS, specializing in leveraging AI/ML to drive innovation and develop business solutions on AWS. Michael holds 12 AWS certifications and has a BS/MS in Electrical/Computer Engineering and an MBA from Penn State University, Binghamton University, and the University of Delaware.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including the NLP and computer vision domains. He helps customers achieve high-performance model inference on SageMaker.

Vivek Gangasani is an AI/ML Startup Solutions Architect for Generative AI startups at AWS. He helps emerging GenAI startups build innovative solutions using AWS services and accelerated compute. Currently, he is focused on developing strategies for fine-tuning and optimizing the inference performance of large language models. In his free time, Vivek enjoys hiking, watching movies, and trying different cuisines.

Qing Lan is a Software Development Engineer at AWS. He has been working on several challenging products at Amazon, including high-performance ML inference solutions and a high-performance logging system. Qing’s team successfully launched the first billion-parameter model in Amazon Advertising with very low latency required. Qing has in-depth knowledge of infrastructure optimization and deep learning acceleration.