Building MLOps Capabilities at GitLab As a One-Person ML Platform Team

Eduardo Bonet is an incubation engineer at GitLab, building out their MLOps capabilities.

One of the first features Eduardo implemented in this role was a diff for Jupyter Notebooks, bringing code reviews into the data science process.

Eduardo believes in an iterative, feedback-driven product development process, although he emphasizes that “minimal viable change” doesn’t necessarily mean that there is an immediately visible value-add from the user’s perspective.

While LLMs are quickly gaining traction, Eduardo thinks they will not replace ML or traditional software engineering but add to the capabilities. Thus, he believes that GitLab’s current focus on MLOps, rather than LLMOps, is exactly right.

This article was originally an episode of the ML Platform Podcast. In this show, Piotr Niedźwiedź and Aurimas Griciūnas, together with ML platform professionals, discuss design choices, best practices, example tool stacks, and real-world learnings from some of the best ML platform professionals.

In this episode, Eduardo Bonet shares what he learned from building MLOps capabilities at GitLab as a one-person ML platform team.

In this episode, you’ll learn about:

- Code reviews in the data science job flow

- CI/CD pipelines vs. ML training pipelines

- The relationship between DevOps and MLOps

- Building a native experiment tracker at GitLab from scratch

- MLOps vs. LLMOps

Who is Eduardo?

Aurimas: Hello everyone, and welcome to the Machine Learning Platform Podcast. As always, together with me is my co-host, Piotr Niedźwiedź, the CEO and founder of neptune.ai.

Today on the show, we have our guest, Eduardo Bonet. Eduardo, a staff incubation engineer at GitLab, is responsible for bringing all the capabilities and goodies of MLOps to GitLab natively.

Hi, Eduardo. Please share more about yourself with our audience.

Eduardo Bonet: Hello everyone, thanks for having me.

I’m originally from Brazil, but I’ve lived in the Netherlands for six years. I have a very weird background. Well, it’s control automation engineering, but I’ve always worked in software development, just not always in the same role, so it’s different.

I’ve been a backend, frontend, Android developer, data scientist, machine learning engineer, and now I’m an incubation engineer.

I live in Amsterdam with my partner, my kid, and my dog. That’s the general gist.

What is an incubation engineer?

Piotr: Talking of your background, I’ve never heard of the term incubation engineer before. What is it about?

Eduardo Bonet: The incubation department at GitLab consists of a few incubation engineers. It’s a group of people who try to find new features or incubate new features or new markets in GitLab.

We’re all engineers, so we’re supposed to ship code to the code base. We’re supposed to find a group or a new persona that we want to bring into GitLab, talk to them, see what they want, introduce new features, and explore whether those features make sense in GitLab or not.

It’s very early development of new features, so we use the term incubation. Our engineers are more focused on moving from zero to eighty. At that point, we pass it on to a regular team, which will, if it makes sense, do the eighty to ninety-five or eighty to one hundred.

A day in the life of an incubation engineer

Aurimas: You’re a single-person team building out the MLOps capabilities, right?

Eduardo Bonet: Yes.

Aurimas: Can you give us a glimpse into your day-to-day? How do you manage to do all of that?

Eduardo Bonet: GitLab is great because I don’t have a lot of meetings, at least not internally. I spend most of my day coding and implementing features, and then I get in touch with customers either directly by scheduling calls with them or by reaching out to the community on Slack, LinkedIn, or physical meetups. I talk to them about what they want, what they need, and what the requirements are.

One of the challenges is that it’s not a customer; the people I have to think about are not the users of GitLab but the people who don’t use GitLab. Those are the ones I’m building for. Those are the ones I put in features for, because the ones who are already using GitLab already use GitLab.

Incubation is more about bringing new markets and people into GitLab’s ecosystem. Relying only on the customers we already have is not enough. I need to go out and look at users who want to use it or maybe have it available but don’t have reasons to use it.

Aurimas: But when it comes to, let’s say, new capabilities that you’re building, you mentioned that you’re talking with customers, right?

I would guess these are organizations that develop regular software but would also like to use GitLab for machine learning. Or are you directly targeting some customers who are not yet GitLab customers? Let’s call them “probable users.”

Eduardo Bonet: Yes, both of them.

The easiest ones are customers who are already on GitLab and have a data science group in their company, but that data science group doesn’t find good reasons to use GitLab. I can approach them directly, which is easier because they can start using it immediately.

But there are also brand new users who have never had GitLab. They have a more data science-heavy workflow, and I’m trying to find a way to set up their MLOps cycle and see how GitLab can be an option for them.

Aurimas: Was it easy to narrow down the capabilities that you’re going to build next? Let’s say you started at the very beginning.

Eduardo Bonet: Yeah.

In DevOps, you have the DevOps lifecycle, and I’m currently looking at the Dev part, which is up until a model is ready for deployment.

I started with code review. I implemented Jupyter Notebook diffs and code reviews for Jupyter Notebooks some time ago. Then, I implemented model experiments. This was released recently, and now I’m implementing the model registry. I started working on the model registry within GitLab.

Once you have the model registry, there are some things that you can add, but right now, that’s the main one. Observability can be added later once you have the registry, so that’s more part of the Ops, but on the Dev side of things, this is what I’ve been looking at:

- Code reviews

- Model experiments

- Model registry

- Pipelines

Aurimas: And these requests came directly from the users, I assume?

Eduardo Bonet: It depends. I was a machine learning engineer and a data scientist before, so a lot of what I do is solving personal pain points.

I bring a lot of my experience into looking at what could be, because I was a GitLab user before as a data scientist and as an engineer. So I could see what could be done with GitLab but also what I couldn’t do because the tooling was not there. So I bring that to the table, and I talk to a lot of customers.

In the past, customers have suggested features such as integrating MLflow or model experiments and the model registry.

There are a lot of things to be done, and it’s hard to choose what to look for. At that point, I usually go with what I’m most excited about because if I’m excited about something, I can build faster, and then I can build more.

Kickstarting a new initiative

Piotr: I have more questions on the organizational level.

It concerns something I’ve read in the GitLab Handbook. For those who don’t know what it is, it’s a kind of open-source, public wiki or a set of documents that describes how GitLab is organized.

It’s a great source of inspiration for how to structure different aspects of a software company, from HR to engineering products.

There was a paragraph about how GitLab starts new things, like MLOps support or the GitLab offering for the MLOps community. You’re an example of this policy.

On the one hand, they’re starting lean. You’re a one-man show, right? But they put a super senior guy in charge of it. For me, it sounds like a smart thing to do, but it’s surprising, and I think that I’ve made this mistake in the past when I wanted to start something new.

I wanted to start lean, so I put a more junior-level person in charge because it’s about being lean. However, it was not necessarily successful because the problem was not sufficiently well-defined.

Therefore, my question is, what are the hats you’re effectively wearing to run this? It sounds like an interdisciplinary project.

Eduardo Bonet: There are many ways of kickstarting a new initiative within a company. Starting lean, with incubation engineers, is more for the risky stuff, for example, things that we don’t really know make sense or not, or that are more likely to not make sense than to make sense.

In other cases, every team that is not part of incubation can also kickstart their own initiatives. They have their own process for how to approach it. They have more people. They have UX support. They have a lot of different ways.

Our way is to have an idea, build it, ship it, and test it with users. The hats I usually have to wear are mostly:

- Backend/frontend engineer – to deploy the features that I need

- Product manager – to talk to customers, learn the process of deploying things at GitLab, understand the release cycle, manage everything around it, and manage the process with other teams.

- UX – there’s a little bit of UX, but I prefer to delegate it to actual UX researchers and designers. But for the early version, I usually build something instead of asking a UX researcher or a designer to create a design. I build something and ask them to improve it.

Piotr: You also have this design system, Pajamas, right?

Eduardo Bonet: Yes, Pajamas helps a lot. At least you get the blocks going and moving, but you can still build something bad even if you have blocks. So I usually ask for UX support once there’s something aligned or something more tangible that they can look at. At this point, we can already ship to users as well, so the UX has feedback from users directly.

There’s also the data scientist hat, but it’s not really about delivering things. When I chat with customers, it’s really helpful that I was a data scientist and a machine learning engineer because then I can talk in more technical terms with them or more direct terms. Sometimes the users want to talk technical, sometimes on a higher level, and sometimes they want to get down to the details. So that’s very helpful.

On the day-to-day, the data science and machine learning hat is more for conversations and for figuring out what needs to be done than for my hands-on work right now.

Piotr: Who would be the next person you would invite to your team to support you? If you could choose, what would be the position?

Eduardo Bonet: Right now, it would be a UX designer and then more engineers. That’s how it would grow a bit more.

Piotr: I’m asking this question because what you do is a kind of extreme hardcore version of an ML platform team, where the ML platform team is supposed to serve data science and ML teams within the organization. However, you have a broader spectrum of teams to serve.

Eduardo Bonet: We have both data science and machine learning teams within GitLab. I separate the two because one helps the business make decisions, and the other uses machine learning and AI for product development. They are customers of what I build, so I have internal customers.

But I build everything so that we can use it internally, and external customers can, too. It’s great to have that direct dogfooding within the company. A lot of GitLab is built around dogfooding because we use our product for nearly everything.

Having them use the tooling as well, the model experiments, for example, was great. They were early users, and they gave me some feedback on what was working and what was not in Notebook diffs. So that’s great as well. It’s better to have them around.

Code reviews in the data science job flow

Aurimas: Are these machine learning teams using any other third-party tools, or are they fully relying on what you have built?

Eduardo Bonet: No, what I’ve built is insufficient for a full MLOps lifecycle. The teams are using other tools as well.

Aurimas: I assume what you’re building will replace what they’re currently using?

Eduardo Bonet: If what I build is better than the specific solution they use, then yes, hopefully they will replace it with what I built.

Aurimas: So you’ve been at it for around one and a half years, right?

Eduardo Bonet: Yes.

Aurimas: Could you describe the success of your projects? How do you measure them? What are the statistics?

Eduardo Bonet: I have internal metrics that I use, for example, for Jupyter Notebook diffs or code reviews. The initial hypothesis was that data scientists want to have code reviews, but they can’t because the tooling is not there, so we deployed code reviews. It was the first thing that I worked on. There was a huge spike in code reviews after the feature was deployed, even if I had to hack the implementation a bit.

I implemented my own version of diffs for Jupyter Notebooks, and we saw a huge, sustained spike. There was a jump and then a sustained number of reviews and comments on Jupyter Notebooks. That means the hypothesis was correct. They wanted to do code reviews, but they just didn’t have any way to do it.

But we also rely on a lot of qualitative feedback because I’m not looking at our current users; I’m looking at new users coming in. For that, I use a lot of social media to get an idea of what users want or whether they like the features, and I also chat with people.

It’s funny because I went to the pub with ex-colleagues and a data scientist, and there was a bug on Jupyter. They almost made me take out my laptop to fix the bug right there, and I fixed it the next week. But I now see more data scientists coming in and asking for data science stuff in GitLab than before.

Aurimas: You mentioned code reviews. Do I understand correctly that you mean being able to display Jupyter Notebook diffs? That would then result in code reviews because previously, you couldn’t do that.

Eduardo Bonet: Yes.

Piotr: Is it done in the way of pull requests? Or is it more about, “Okay, here is a Jupyter Notebook”? I ask because I see a few, let’s call them “jobs to be done,” around it.

For example, I’ve done something in a Jupyter Notebook, maybe some data exploration and model training within the notebook. I see results, and I want to get feedback, you know, on where to learn and where I should change something, like suggestions from colleagues. This is one use case that comes to my mind.

Second, and that’s something I’ve not seen, but maybe because this functionality was not available, is a pull request, a merge scenario.

Eduardo Bonet: The focus was exactly on the merge request flow. When you push a change to a Jupyter Notebook and you create a merge request, you will see the diff of the Jupyter Notebook with the images displayed over there, in a simplified version.

I convert both Jupyter Notebooks to their markdown forms, do some cleanup because there’s a lot of stuff in there that is not necessary, maximize information, reduce noise, and then diff those markdown versions. Then, you can comment on and discuss the notebooks’ markdown versions. It doesn’t matter for the user; nothing changes for the user. They push, and it’s over there.
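The convert, clean up, and diff idea Eduardo describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not GitLab's implementation: the function names `notebook_to_markdown` and `diff_notebooks` are hypothetical, the input is assumed to be nbformat v4 JSON, and image outputs are replaced by a placeholder here, whereas the real feature displays them.

```python
import difflib
import json

FENCE = "`" * 3  # built at runtime so this example can itself sit inside a fenced block


def _as_text(value):
    """nbformat stores text either as one string or as a list of lines."""
    return "".join(value) if isinstance(value, list) else value


def notebook_to_markdown(notebook: dict) -> str:
    """Reduce a parsed .ipynb document to a low-noise markdown rendering.

    Execution counts and cell metadata are dropped on purpose, because they
    change on every run and would clutter the diff.
    """
    parts = []
    for cell in notebook.get("cells", []):
        source = _as_text(cell.get("source", "")).rstrip()
        if cell.get("cell_type") == "markdown":
            parts.append(source)
        elif cell.get("cell_type") == "code":
            parts.append(f"{FENCE}python\n{source}\n{FENCE}")
            for output in cell.get("outputs", []):
                if output.get("output_type") == "stream":
                    parts.append(_as_text(output.get("text", "")).rstrip())
                elif "data" in output:
                    data = output["data"]
                    if "image/png" in data:
                        # Assumption: a placeholder stands in for the rendered image.
                        parts.append("[image output]")
                    elif "text/plain" in data:
                        parts.append(_as_text(data["text/plain"]).rstrip())
    return "\n\n".join(parts) + "\n"


def diff_notebooks(old_json: str, new_json: str) -> str:
    """Return a unified diff of the markdown renderings of two notebooks."""
    old_md = notebook_to_markdown(json.loads(old_json)).splitlines(keepends=True)
    new_md = notebook_to_markdown(json.loads(new_json)).splitlines(keepends=True)
    return "".join(difflib.unified_diff(old_md, new_md, "old.ipynb", "new.ipynb"))
```

With this approach, re-running a notebook without changing its code produces an empty diff, while an edited cell shows up as ordinary added and removed lines that reviewers can comment on.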

When I was in data science, it was not even about the ones who used notebooks for machine learning. Those are important, but it’s also the data scientists who are more focused on the business cases. The final artifact of their work is usually a report, and the notebook is usually the final part of their report, like the final technical part of their report.

When I was a data scientist, we would review each other’s documents, the final reports, and there would be graphs and stuff, but nobody would see how those graphs were generated. For example, what was the code? What was the equation? Was there a missing plus sign somewhere that could completely flip the decision being made in the end? Not knowing that is very dangerous.

I’d say that for this feature, the users who can get the most out of it are not the ones who only focus on machine learning but the ones who are more on the business side of data science.

Piotr: This makes sense. This concept of pull requests and code review in the context of reporting makes perfect sense for me. I was not sure about model building, for instance; I’ve not seen a lot of pull requests there. Maybe if you have a shared or feature engineering library, then yes, pipelining, yes, but you wouldn’t necessarily build pipelines in notebooks. At least it wouldn’t be my recommendation, but yeah, it makes sense.

Aurimas: Even in machine learning, the experimentation environments benefit a lot before you actually push your pipeline to production, right?

Eduardo Bonet: Yeah.

And there’s another idea about code review that was important to me: code review is where code culture grows. It’s a kickstarter to create a culture of development, a shared culture of development among your peers.

Data scientists don’t have that. It’s not that they don’t want to; it’s that if they don’t code review, they don’t talk about the code, they don’t share what is common, what is not, what are mistakes, and what are best practices or not.

For me, code review is less about correctness and more about mentoring and discussing what’s being pushed.

I hope that with Jupyter code reviews, along with the regular code reviews and all the things we have, we can bring this code review culture to data scientists, allowing them to develop this culture themselves by giving them the necessary tools.

Piotr: I really like what you said. I’ve been an engineer almost all my life, and code review is one of my favorite parts of it.

If you’re working as a team, again, it’s not about correctness but about finding how something can be done simpler or differently. It also makes sure that team members understand each other’s code and that you have it covered so you don’t depend on one person.

It’s not obvious how to make it part of the process when you’re working on models, for me at least, but I’m definitely seeing that we’re missing something here as MLOps practitioners.

Eduardo Bonet: The second part that comes to this, to the merge request, is the model experiments themselves. I’m building that second part independently of merge requests, but eventually, ideally, it will be part of the merge request flow.

So when you push a change to a model, it already runs hyperparameter tuning in your CI/CD pipelines. On the merge request, along with the changes, you already see the potential models and the potential performance of each model that you can select to deploy. These are your candidates; I call each one a candidate.

From the merge request, you can select which model will go into production or become a model version to be consumed later. That’s the second part of the merge requests that we’re looking at.
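To make the candidate idea more concrete, here is a minimal sketch of what a CI job could do: run a small grid search, rank the resulting candidates, and render a table that could be posted on a merge request. Everything here is an assumption for illustration: `Candidate`, `run_search`, and `render_report` are hypothetical names, the `evaluate` callback stands in for real training and validation, and none of this reflects GitLab's actual API.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Candidate:
    """One trained model variant: its hyperparameters and validation score."""
    params: Dict[str, float]
    score: float


def run_search(
    grid: Dict[str, List[float]],
    evaluate: Callable[[Dict[str, float]], float],
) -> List[Candidate]:
    """Evaluate every combination in the grid and return candidates, best first."""
    names = sorted(grid)
    candidates = []
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        candidates.append(Candidate(params, evaluate(params)))
    return sorted(candidates, key=lambda c: c.score, reverse=True)


def render_report(candidates: List[Candidate]) -> str:
    """Render a markdown table that a CI job could attach to a merge request."""
    lines = ["| candidate | params | score |", "| --- | --- | --- |"]
    for index, candidate in enumerate(candidates):
        params = ", ".join(f"{k}={v}" for k, v in sorted(candidate.params.items()))
        lines.append(f"| {index} | {params} | {candidate.score:.3f} |")
    return "\n".join(lines)
```

A reviewer would then see the code change and the ranked candidates side by side, and picking the candidate to promote becomes part of the same review.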

Piotr: So you’re saying this will also be part of the report after hyperparameter optimization once there is a change? You’ll conduct hyperparameter optimization to determine the model’s potential quality after those changes. So you see that at the level of the merge request.

We have something like that, right? When we are working on the code, you will get a report from the tests, at least the unit tests. Yeah, it passed. The security test passed, okay. It looks good…

Eduardo Bonet: In the same way that you have this for software development, where you have security scanning, dependency scanning, and everything else, you will have the report of the candidates being generated for that merge request.

Then, you have a view of what changed. You can track down where the change came from and how it affects the model or the experiment over time. Once the merge request is merged, you can deploy the model.

CI/CD pipelines vs. ML training pipelines

Aurimas: I have a question here. It’s about making your machine learning training pipeline part of your CI/CD pipeline. If I hear correctly, you’re treating them as the same thing, correct?

Eduardo Bonet: There are multiple pipelines that you can take a look at, and there are multiple tools that do pipelines. GitLab pipelines are more thought out for CI/CD, after the code is in the repository. Other tools, like Kubeflow or Airflow, are better at running any pipeline.

A lot of our users use GitLab for CI/CD once the code is there, and then they trigger the pipeline. They use GitLab to orchestrate triggering pipelines on Kubeflow or whatever tool they’re using, like Airflow or something; it’s usually one of the two.

Some people also only use GitLab pipelines, which I used to do as well when I was a machine learning engineer. I was using GitLab pipelines, and then I worked on migrating to Kubeflow, and then I regretted it because my models were not that big for my use case. It was fine to run on the CI/CD pipeline, and I didn’t need to deploy a whole other set of tooling to handle my use case. It was just better to leave it at GitLab.

We’re working on improving the CI, our pipeline runner. In version 16.1, which is out now, we have runners with GPU support, so if you need GPU support, you can use GitLab runners. We need to improve other aspects to make CI better at handling the data science use case of pipelines because they start sooner than usual compared with regular (well, not regular) software development in general.

Piotr: You said GitLab runners support GPU now, or that you can pick up one with GPU. We are, by the way, GitLab users as a company, but I was unaware of that, or maybe I misunderstood it. Do you also provide your customers with infrastructure, or are you a proxy over cloud providers? How does it work?

Eduardo Bonet: We provide these through a partnership. There are two types of GitLab users: self-managed, where you deploy your own GitLab, and users of the SaaS platform on gitlab.com. Self-managed users have been able to use their own GPU runners for a while.

What was launched in this new version is what we provide on gitlab.com. If you’re a user of the SaaS platform, you can now use GPU-enabled runners as well.

The relationship between DevOps and MLOps

Piotr: Thanks for explaining! I wanted to ask you about it because, maybe half a year or more ago, I shared a blog post on the MLOps community Slack about the relationship between MLOps and DevOps. I had a thesis that we should think of MLOps as an extension to the DevOps stack rather than an independent stack that is merely inspired by DevOps.

You’re in a DevOps company, or at least that’s how GitLab presents itself today. You have many DevOps customers, and you understand the processes there. At the same time, you have extensive experience in data science and ML and are running an MLOps initiative at GitLab.

What, in your opinion, are we missing in a traditional DevOps stack to support MLOps processes?

Eduardo Bonet: For me, there is no difference between MLOps and DevOps. They are the same thing. DevOps is the art of deploying useful software, and MLOps is the art of deploying useful software that includes machine learning features. That’s the difference between the two.

As a DevOps company, we cannot fall into the trap of saying, “Okay, you can just use DevOps.” There are some use cases, some specific features that are necessary for the MLOps workflow and that are not present in traditional software development. That stems from the non-determinism of machine learning.

When you write code, you have inputs and outputs. You know the logic because it was written down. You might not know the results, but the logic is there. In machine learning, you can define the logic for some models, but for most of them, you can only approximate the logic they learned from the data.

There’s the process of allowing the model to extract the patterns from the data, which is not present in traditional software development, so the models are like the developers. The models develop patterns from the input data to the output data.

The other part is, “How do you know if it is doing what it’s supposed to be doing?” But to be fair, that is also present in DevOps. That’s why you do A/B testing and things like that on regular software. Even if you know what the change is, it doesn’t mean users will see it in the same way.

You don’t know if it will be a better product if you deploy the change you have, so you do A/B testing, user testing, and tests, right? So that part is also present, but it’s even more important for machine learning because it’s the only way you know if it’s working.

With regular or traditional software, when you deploy the change, you at least know that you can test if the change is correct, even if you don’t know whether it moves the metrics or not. For machine learning, measuring the outcome is usually the only way you can implement tests, and those tests are non-deterministic.

The regular testing stack that you use for software development doesn’t really apply to machine learning because, by definition, machine learning involves a lot of flaky tests. So, your way of determining if something is correct will be in production. You can, at most, proxy whether it works the way you intended, but you can only know if it works the way intended at the production level.
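One common way to tame such flaky tests, shown here as a hedged sketch rather than anything Eduardo describes building, is to fix the random seeds and assert on an aggregate metric with a tolerance instead of an exact output. The toy threshold classifier, the synthetic data, the function names, and the 0.85 accuracy bar below are all assumptions made for illustration.

```python
import random
import statistics


def train_and_score(seed: int, n: int = 200) -> float:
    """Train a toy threshold classifier on synthetic data and return test accuracy."""
    rng = random.Random(seed)  # seeding makes a single run reproducible

    def sample(count):
        # Class 0 is centred at 0.0 and class 1 at 3.0, both with unit variance.
        return [(rng.gauss(3.0 * label, 1.0), label)
                for label in (0, 1) for _ in range(count)]

    train, test = sample(n), sample(n)
    mean0 = statistics.fmean([x for x, y in train if y == 0])
    mean1 = statistics.fmean([x for x, y in train if y == 1])
    threshold = (mean0 + mean1) / 2  # the "learned" decision boundary
    correct = sum((x > threshold) == bool(y) for x, y in test)
    return correct / len(test)


def check_model_quality(seeds=range(5), min_mean_accuracy=0.85):
    """Pass if the mean accuracy over several seeded runs clears the bar."""
    scores = [train_and_score(seed) for seed in seeds]
    return statistics.fmean(scores) >= min_mean_accuracy, scores
```

A check like this only acts as a proxy: it can catch a badly broken training change in CI, but, as noted above, whether the model really works as intended still has to be confirmed in production.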

Machine learning puts stress on different places than traditional software development. It includes everything that traditional software development has, but it puts new stresses on different areas. And to be fair, every single way of development puts stresses somewhere.

For example, Android development puts its own stresses on how to develop and deploy. For example, you cannot know the version the user is using. That problem is not specific to mobile development, but it is very apparent there. ML is another domain where this applies. It will have its own stresses that will require its own tooling.

Piotr: Let’s talk more about examples. Let’s say that we have a SaaS company that has not used machine learning, at least on the production level, so far, but they are very sophisticated or follow the best practices when it comes to software development.

So let’s say they have GitLab and dedicated SRE, engineering, and DevOps teams. They are monitoring their software in production using, let’s say, Splunk. (I’m building the tech stack on the fly here.)

They are about to release two models to production: one recommender system and, second, chatbots for their documentation and SDK. There are two data science teams, but the ML teams are built of data scientists, so they are not necessarily skilled in MLOps or DevOps.

You have a DevOps team and you’re a CTO. What would you do here? Should the DevOps team support them in moving into production? Should we start by thinking about setting up an MLOps team? What would be your practical recommendation here?

Eduardo Bonet: My recommendation doesn’t matter very much, but I would probably start with the DevOps team supporting and identifying the bottlenecks within that specific company that the existing DevOps path doesn’t support. For example, retraining. Either way, to implement retraining, the DevOps team is probably the best one to work on it. They might not know exactly what retraining is, but they know the infrastructure; they know how everything works over there.

If there is enough demand eventually, the DevOps team might be split, and part of it might become an ML platform team in itself. But if you don’t want to hire anyone, if you want to start lean, perhaps picking someone from the DevOps team to support your data scientists could be the best way of starting.

Piotr: The GitLab customer list is quite large. But let’s talk about those you met personally. Have you seen DevOps engineers or DevOps teams successfully supporting ML teams? Do you see any common patterns? How do DevOps engineers work? What is the path for a DevOps engineer to get familiar with MLOps processes and be ready to be called an MLOps engineer?

Eduardo Bonet: It normally fails when one does one thing and ships to the opposite to do their factor. Let’s say the info scientist spends a couple of months doing their trendy mannequin after which goes, “Oh, I’ve a mannequin, deploy it.” That doesn’t work, actually. They have to be concerned early, however that’s true for software program improvement as nicely.

In the event you say that you simply’re growing one thing, some new function, some new service, and then you definately deploy it, you make your complete service, and then you definately go to the DevOps staff and say, “Okay, deploy this factor.” That doesn’t work. There are gonna be loads of points deploying that software program.

There’s even more stress on this when you talk about machine learning because fetching data can be slower, or there’s more processing. The model can be heavy, so a pipeline can fail when it’s loaded into memory during a run. It’s better if they are in the process. Not really working on it, but in the meetings and discussions, following the issues, following the threads, giving insight beforehand, so that when the model is at a stage where it can be deployed, it’s easier.

But it’s also important that the model is not the first solution. So, deploy first, even if it’s a bad one. Ship a classical software solution that doesn’t perform as well, and then improve. I see machine learning much more as an optimization for most cases instead of the first solution that you’ll employ to solve a problem.

I’ve seen it being successful. I’ve also seen data science teams trying to support themselves, succeeding and failing, DevOps teams succeeding and failing at support, and ML platform teams succeeding and failing at support. It will depend on the company culture. It will depend on the people in the group, but communication usually at least makes these problems a little bit smaller. Involve the people early, not when you are at the moment of deploying the thing.

End-to-end ML teams

Aurimas: And what’s your opinion about these end-to-end machine learning teams? Like fully self-service machine learning teams, can they manage the entire development and monitoring flow encapsulated in a single team? Because that’s what DevOps is about, right? Containing the flow of development in a single team.

Eduardo Bonet: I might not be the best person to ask because I’m biased since I do end-to-end stuff. I like it. It reduces the number of hops you have to go through and the amount of communication lost from team to team.

I like multidisciplinary teams, even product ones. You have your backend, your frontend, your PM, and everybody together, and then you build and you deploy. It’s the “you build it, you ship it” kind of mentality, where you are responsible for your own DevOps, and then there’s a DevOps platform that you build on.

In my opinion, I prefer when they take ownership end-to-end, really going and saying, okay, we’re going to go from talking to the customer to understanding what they need. Even the engineers: I like to see engineers talking to customers or to support, all of them deploying the feature, all of them shipping it, measuring it, and iterating over it.

Aurimas: What would be the composition of this team, which would be able to deliver a machine learning product?

Eduardo Bonet: It will have its data scientist or a machine learning engineer. Nowadays, I prefer to start more on the software than on the data science part. A machine learning engineer would start with the software. Then, the data scientist eventually makes it even better. So start with the feature you’re building, frontend and backend, and then add your machine learning engineer and data scientist.

You can also do a lot more with the DevOps part. The important part is to ship fast, to ship something, even if it’s bad in the beginning, and iterate off that something bad rather than looking for something that’s good and only applying it six months later. By that point, you don’t even know if the users want that or not. You deploy that really good model that nobody cares about.

For us, smaller iterations are better. You tend to deploy better products by shipping small things faster rather than trying to get to the good product, because your definition of good is only in your head. Your users have another definition of “good,” and you only learn their definition of good by putting things out for them to use or test. And if you do it in small chunks, they can consume it better than if you just say, okay, there is this huge feature here for you. Please test it.

Building a native experiment tracker at GitLab from scratch

Aurimas: I have some questions related to your work at GitLab. One of them is that you’re now building native capabilities in GitLab, including experiment tracking. I know that it’s kind of implemented via the MLflow client, but you manage all the servers underneath yourself.

How did you decide not to bring in a third-party tool and rather build this natively?

Eduardo Bonet: I usually don’t do it because I don’t like re-implementing stuff myself, but GitLab is self-managed. We cater to our self-managed customers, and GitLab is mostly a Rails monolith. The codebase is Rails, and it doesn’t use microservices.

I could deploy MLflow behind a feature flag, so that installing GitLab also sets up MLflow at the same time. But then I would have to handle how to install it in all the different places where GitLab is installed, which are a lot. I’ve seen an installation on a mainframe or something, and I don’t want to handle all those installations.

Second, I want to integrate across the platform. I want model experiments to be something other than their own vertical feature. I want this to be integrated with the CI. I want this to be integrated with merge requests. I want it to be integrated with issues.

If the data is in the GitLab database, it’s much simpler to cross-reference all these things. For example, I deployed the integration with CI/CD last week. If you create your candidate or run through GitLab CI, you can pass a flag, and we’ll already connect the candidate to the CI job. You can see the merge request, the CI, the logs, and everything else.

We want to be able to manage that on our side since it’s better for the users if we own this on the GitLab side. It does mean I had to strip out many of the MLflow server’s features, so, for example, there are no visualizations in GitLab. I’ll be adding them over time. It will come. I had to be able to deploy something useful, and over time, we’ll be adding more. But that’s the reasoning behind re-implementing the backend while still using the MLflow client.
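
From the user’s side, this setup might look roughly like the following sketch: the standard MLflow client is pointed at a GitLab project instead of an MLflow server. `MLFLOW_TRACKING_URI` and `MLFLOW_TRACKING_TOKEN` are standard MLflow environment variables. The endpoint path and the `gitlab.CI_JOB_ID` tag are assumptions based on GitLab’s documentation and should be verified there, and the helper names `gitlab_mlflow_env` and `log_run` are hypothetical.

```python
import os


def gitlab_mlflow_env(gitlab_url: str, project_id: int, token: str) -> dict:
    """Build the environment variables the MLflow client reads.

    Assumption: GitLab serves its MLflow-compatible API under
    /api/v4/projects/<id>/ml/mlflow (check the GitLab docs).
    """
    base = gitlab_url.rstrip("/")
    return {
        "MLFLOW_TRACKING_URI": f"{base}/api/v4/projects/{project_id}/ml/mlflow",
        "MLFLOW_TRACKING_TOKEN": token,
    }


def log_run(params: dict, metrics: dict) -> None:
    """Log one candidate (an MLflow run) using the plain MLflow client."""
    import mlflow  # imported lazily so the helper above works without mlflow

    with mlflow.start_run():
        mlflow.log_params(params)
        mlflow.log_metrics(metrics)
        # Assumption: inside a GitLab CI job, this tag lets GitLab link the
        # candidate to the job. CI_JOB_ID is a predefined GitLab CI variable.
        job_id = os.getenv("CI_JOB_ID")
        if job_id:
            mlflow.set_tag("gitlab.CI_JOB_ID", job_id)


if __name__ == "__main__":
    # Hypothetical host, project ID, and token, for illustration only.
    env = gitlab_mlflow_env("https://gitlab.example.com", 42, "<access token>")
    print(env["MLFLOW_TRACKING_URI"])
```

The training code itself stays plain MLflow, which is the point of keeping the client while replacing the backend: only the tracking URI and token change.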

Piotr: As part of the iterative process, is this what you’re calling “minimal viable change”?

Eduardo Bonet: Yeah, it’s even a little bit below that, below the minimum, because now that it’s available, users can tell us what’s needed for it to become the minimum that is useful for them.

Piotr: As a product team, we’re inspired by GitLab. Recently, I was asked what to do when the “minimal viable change” that would bring value is too big to be done in one sprint. I think it was something around webhooks and setting some foundations for webhooks: we must have a system that retries the call if the system receiving the call is down.

The challenge was about providing something, some foundation, that would bring value to the end user. But how would you do it at GitLab?

For example, to bring value to the user, you need to set up a kind of backend and implement something in the backend that wouldn’t be exposed to the user. Would it fit into a sprint at GitLab?

Eduardo Bonet: It does. A lot of what I did was not visible or really useful. I spent five months working on these model experiments until I could say I could onboard the first user that was not dogfooding. So it was five months.

I still had to find ways of getting feedback, for example, with the videos I share every now and then for discussion. Even if it’s just discussing the direction you’re going or the vision, you can feel whether people want that vision or not, or whether there are better ways to achieve that vision. But it’s work that needs to be done, even if it’s not visible.

Not all work will be visible. Even if you go iterative, you can still do work that is not visible, but it needs to be done. So, I had to refactor how packages are handled in our model experiments and our experiment tracking. That’s more of a change that would make my life easier over time than the user’s life, but it was still necessary.

Piotr: So there is no silver bullet. We’re struggling with this kind of approach and are super curious about how you do it. At first glance, it sounds, for me at least, like every change has to bring some value to the user.

Eduardo Bonet: I don’t think every change has to bring value to the user because then you fall into traps. This puts some major stresses on your decision-making, such as biases toward short-term things that need to be delivered, and it pushes things like code quality, for example, away from that line of thinking.

But both ways of thinking are necessary. You cannot use only one. If you only use “minimal viable change” all the time, all you will have is minimal viable changes. That’s not what users really want. They want a product. They want something tangible. Otherwise, there’s no product. That’s why software engineering is hard.

MLOps vs. LLMOps

Piotr: We’re recording a podcast in 2023, so it would be strange not to ask about it. We’re asking because everybody is asking questions about large language models.

I’m not talking about the impact on humanity, although all of those are fair questions. Let’s talk more tactically, from your perspective and current understanding of how businesses can use large language models, foundational models, in production.

What similarities do you see to MLOps? What will stay the same? What is completely unnecessary? What types of “jobs to be done” are missing in the traditional, existing MLOps stack?

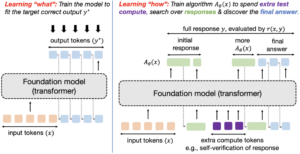

So let’s do kind of a diff. We have an MLOps stack. We had DevOps and added MLOps, right? There was a diff, and we discussed that. Now, we’re also adding large language models to the picture. What’s the diff?

Eduardo Bonet: There are two parts here. When you’re talking about LLMOps, you can think of the prompt plus the large model that you’re using as a model itself, the conjunction of them as a model. From there on, it’s going to behave very much the same as a regular machine learning model, so you’re going to need the same observability levels, the same things that you need to take care of when deploying it in production.

On the create side, though, only now are we seeing prompts being taken as their own artifacts that you need to version and discuss, and for which you need to provide the right ways of changing and measuring, keeping in mind that they will behave differently with different models and with any change.

I’ve seen some companies start to implement a prompt registry where the product manager can go and change the prompt without needing the backend or frontend engineers to go into the codebase. That’s one of them, and that’s an early one. Right now, at least, you only have a prompt that you probably populate with data, meta prompts, or second-layer prompts.

But the next level is prompt-generating prompts, and we haven’t explored that level yet. There’s another whole level of Ops that we don’t know about yet. So, how do you manage prompts that generate prompts or pass on flags? For example, I can have a prompt where I pass an option that appends something to the prompt, like “Be short, be small, be concise.”

Prompts will become their own programming language, with functions defined as prompts. You pass arguments that are prompts themselves to these functions.
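
A small sketch of what “functions defined as prompts” could look like, under the assumption that prompts are plain strings. The function names and the wording of the prompts are hypothetical; only the “Be short, be small, be concise” option comes from the conversation.

```python
def summarize_prompt(text: str, concise: bool = False) -> str:
    """A prompt 'function': the flag appends an instruction to the prompt."""
    prompt = f"Summarize the following text:\n{text}"
    if concise:
        prompt += "\nBe short, be small, be concise."
    return prompt


def answer_in_language(task_prompt: str, language: str) -> str:
    """A prompt that takes another prompt as its argument."""
    return f"{task_prompt}\nAnswer in {language}."


# Composition: the output of one prompt function is the input of another.
final_prompt = answer_in_language(
    summarize_prompt("The pipeline failed twice today.", concise=True),
    language="Portuguese",
)
print(final_prompt)
```

Each flag and each layer of composition multiplies the number of distinct prompts that can reach the model, which is part of why versioning and measuring them gets harder.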

How do you manage the agents in your stack? How do you manage versions of agents, what they’re doing right now, and their impact in the long run when you have five or six different agents interacting? There are a lot of challenges that we have yet to learn about because it’s so early in the process. It’s been two months since this became usable as a product, so it’s very, very early.

Piotr: I just wanted to add the comment that in most use cases, if it’s in production, there’s a human in the loop. Sometimes, the human in the loop is a customer, right? Especially if we’re talking about the chat type of experience.

But I’m curious to see use cases of foundational models in contexts where humans are not available, like predictive maintenance, demand prediction, and predictive scoring, things that you really want to automate without having humans in the loop. How will it behave? How would we be able to test and validate these? I’m not even sure whether we should call them models, prompts, or agent configurations.

Another question I’m curious to hear your thoughts on: Will we, and if yes, how will we connect foundational models with more classical deep learning and machine learning models? Will they be connected via agents or differently? Or not at all?

Eduardo Bonet: I think it will be through agents because agents are a very broad abstraction. You can include anything as an agent. So it’s very easy to say agents because, well, you can do that with agents, or policies, or whatever.

But that’s how you provide more context. For example, a search is very complicated when you have too many labels that you cannot encode in a prompt. You need an easy way of finding things, like a dumb way of running a query, that you give to an agent. Some of them also call this a “tool.” You give your agent tools.

This can be as simple as running a query or something more complicated, such as calling an API that makes predictions. For example, the agent will learn to pass the right parameters to this API. You’ll still use generative AI because you’re not coding the whole pipeline. But for some parts, it makes sense, even if you have something working.

Perhaps it’s better if you split off some deterministic chunks, where you know what the output is of that specific tool that you want to give your agent access to.
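
The idea of giving an agent deterministic tools can be sketched as follows. Everything here is illustrative: the JSON shape of the tool call, the `tool` decorator, the sample data, and `predict_demand` (a stand-in for a call to a classical ML model’s prediction API) are assumptions, and no actual LLM is involved.

```python
import json
from typing import Callable, Dict

TOOLS: Dict[str, Callable] = {}


def tool(fn: Callable) -> Callable:
    """Register a function so the agent can request it by name."""
    TOOLS[fn.__name__] = fn
    return fn


# Hypothetical in-memory data standing in for a real database.
ISSUES = [
    {"label": "bug", "open": True},
    {"label": "bug", "open": False},
    {"label": "bug", "open": True},
    {"label": "docs", "open": True},
]


@tool
def count_open_issues(label: str) -> int:
    """Deterministic query: same input, same output."""
    return sum(1 for issue in ISSUES if issue["label"] == label and issue["open"])


@tool
def predict_demand(units_last_week: int) -> int:
    """Placeholder for a prediction API backed by a classical ML model."""
    return units_last_week + units_last_week // 10


def run_tool_call(raw_call: str):
    """Execute a tool call that the language model emitted as JSON text."""
    call = json.loads(raw_call)
    name = call["tool"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# In a real agent, this string would come from the model's output.
print(run_tool_call('{"tool": "count_open_issues", "arguments": {"label": "bug"}}'))
```

The model only decides which tool to call and with which parameters; the tool itself stays ordinary, testable code with a known output.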

Piotr: So, my last question, and I’ll play devil’s advocate here: Maybe GitLab should skip the MLOps part and just focus on the LLMOps part. It’s going to be a bigger market. Will we need MLOps when we use large language models? Does it make sense to invest in it?

Eduardo Bonet: I think so. We’re still learning the boundaries of when to apply ML, classic ML. Every model has its own places where it’s better and where it’s not. LLMs are also part of this.

There will be cases where regular ML is better. For example, you might first deploy your feature with an LLM, then improve the software, and then improve with machine learning, so ML becomes the third level of optimization.

I don’t think LLMs will kill ML. Nothing kills anything. People have been saying that Ruby, COBOL, and Java will die. Decision trees were supposed to be dead because now we have neural networks. Even if it’s just to keep the simplicity, sometimes you don’t want those more complicated solutions. You want something that you can control, where you know what the input and the output were.

MLOps is a better focus for now, initially, until we start learning what LLMOps is, because we have a better understanding of how MLOps fits into GitLab itself. But LLMOps is something we’re thinking about, like how to use it, because we’re also using LLMs internally.

We’re dogfooding our own things on how to deploy AI-backed features. We’re learning from it, and yes, those could become a product eventually. Prompt management could become a product eventually, but at this point, even for us to handle our own models, the model registry is more of a concern than a prompt writer or whatever.

Aurimas: Eduardo, it was really nice talking with you. Do you have anything that you would like to share with our listeners?

Eduardo Bonet: The model experiments we’ve been discussing are available for our users on GitLab as of 16.0. I’ll leave a link to the documentation if you want to try it out.

If you want to follow what I do, I usually post a short YouTube video about my progress every two weeks or so. There’s also a playlist that you can follow.

If you’re in Amsterdam, drop by the MLOps community meetup we organize.

Aurimas: Thanks, Eduardo. I’m super glad to have had you here. And also thank you to everyone who was listening. See you in the next episode.